Caffe BVLC 的 BN 层是由 batchnormlayer + scalelayer 两层来实现的.

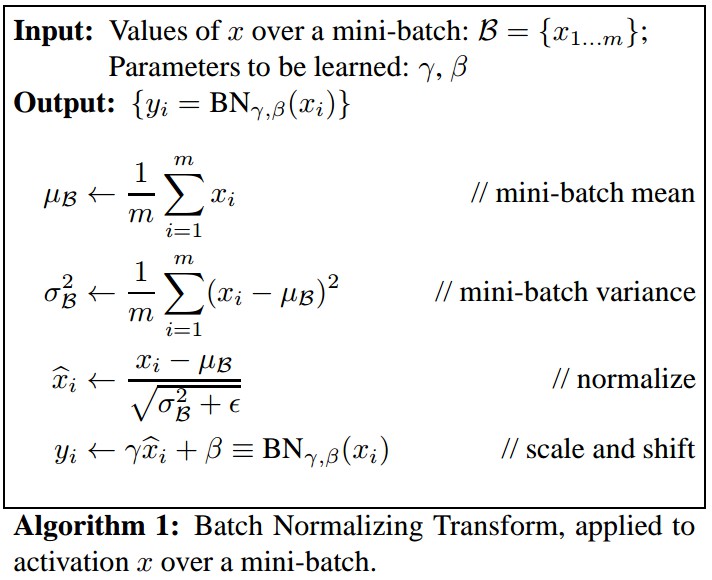

BatchNorm 主要有两部分计算:

[1] 对输入进行归一化,${ x_{norm} = \frac{x- \mu}{\sigma} }$,其中, $\mu$ 和 $\sigma$ 是计算的均值和方差;—— 对应 Caffe BatchNorm 层

[2] 归一化后进行缩放和平移,得到输出 ${ y = \gamma \cdot x_{norm} + \beta }$. —— 对应 Caffe Scale 层;Scale层设置 bias_term=True,即对应于 ${ \beta }$.

1. BatchNormalization 简述

Batch Normalization 论文给出的计算:

前向计算:

后向计算:

Caffe BatchNorm 层的训练,根据从总样本中的 mini-batch 个样本,进行多次前向训练,每次计算都会考虑已经计算得到的 mean 和 variance.

- 前向计算

Caffe 实现中,不是将每次计算的 mean 和 variance 的结果简单累加,而是通过一个因子(一般小于 1 的变量) 把前一次计算的 mean 和 variance 的作用逐渐较少,再加上本次计算的 mean 和 variance,作为最终的结果. 即滑动平均(Moving Average)的方式.

其过程如下:

${ S_{t-1} }$ - 前一次 mini-batch 计算的 mean;

${ Y_{t} }$ - 本次 mini-batch 计算的 mean;

${ \lambda }$ - 滑动平均因子, moving_average_fraction

Forward 计算中,

[F1] - 滑动系数, ${ s_{new} = \lambda s_{old} + 1 }$

[F2] - 均值,${ \mu _{new} = \lambda \mu _{old} + \mu }$

[F3] - 方差,${ \sigma _{new} = \lambda \sigma _{old} + m\sigma }$,其中,${ m > 1 } $ 时,${ m = \frac{m-1}{m} }$

Caffe 源码未加参数 ${ \gamma }$ 和 ${ \beta }$. - 反向计算

对输入的梯度进行计算,没有参数 $\gamma$ 和 $\beta$.

方差的梯度计算:

$\frac{\partial L}{\partial \sigma} = \sum _{i=0}^{n} \frac{\partial L}{\partial y_i} \cdot \frac{\partial y_i}{\partial \sigma} = \sum _{i=0}^{n} \frac{\partial L}{\partial y_i} \cdot (x_i- \mu)(-\frac{1}{2})(\sigma + eps)^{-\frac{3}{2}}$

均值的梯度计算:

$\frac{\partial L}{\partial \mu} = \sum _{i=0}^{n} \frac{\partial L}{\partial y_i} \cdot \frac{\partial y_i}{\partial \mu} = \sum _{i=0}^{n} \frac{\partial L}{\partial y_i} \cdot \frac{-1}{\sqrt {\sigma + eps}} $

输入 $x$ 的梯度计算:

$\frac{\partial L}{\partial x_i} = \frac{\partial L}{\partial y_i} \frac{1}{\sqrt{\sigma+eps}} + \frac{\partial L}{\partial \sigma} \frac{\partial \sigma}{\partial x_i} + \frac{\partial L}{\partial \mu} \frac{\partial \mu}{\partial x_i} $

$\ \ \ \ \ = \frac{\partial L}{\partial y_i} \frac{1}{\sqrt{\sigma + eps}} + \frac{\partial L}{\partial \sigma} \frac{2}{n} (x_i- \mu) + \frac{\partial L}{\partial \mu} \frac{1}{n} $

$\ \ \ \ \ = \frac{1}{\sqrt{\sigma + eps}} (\frac{\partial L}{\partial y_i}- \frac{1}{n}\sum_{i=0}^n \frac{\partial L}{\partial y_i}- (\frac{1}{n} \sum_{i=0}^n \frac{\partial L}{\partial y_i} y_i) y_i ) $

$\ \ \ \ \ = \frac{1}{\sqrt{\sigma + eps}} (\frac{\partial L}{\partial y_i}- \frac{1}{n}(\frac{\partial L}{\partial y_i})- \frac{1}{n}(\frac{\partial L}{\partial y_i} \cdot y_i) \cdot y_i)$

Caffe Scale 层是主要处理参数 $\gamma$ 和 $\beta$ (均为向量).

- 前向计算:

${ top = \gamma \cdot bottom + \beta }$

${ y = \gamma \cdot x + \beta }$ - 反向计算:

${ \frac{\partial y}{\partial x} = \gamma }$

${ \frac{\partial y}{\partial \gamma} = x }$

${ \frac{\partial y}{\partial \beta} = 1 }$

2. prototxt 中的定义

在Caffe 中,一般一个 BatchNorm 层后接 一个 Scale 层,例如:

layer {

bottom: "conv1"

top: "conv1"

name: "bn_conv1"

type: "BatchNorm"

batch_norm_param {

use_global_stats: true

}

param {

name: "bn_conv1_0"

lr_mult: 0

}

param {

name: "bn_conv1_1"

lr_mult: 0

}

param {

name: "bn_conv1_2"

lr_mult: 0

}

}

layer {

bottom: "conv1"

top: "conv1"

name: "scale_conv1"

type: "Scale"

scale_param {

bias_term: true

}

param {

name: "scale_conv1_0"

lr_mult: 0

}

param {

name: "scale_conv1_1"

lr_mult: 0

}

}

From train_voc_trainval_aug.prototxt

3. caffeproto 中 BatchNorm 的定义

message LayerParameter {

optional BatchNormParameter batch_norm_param = 139;

}

message BatchNormParameter {

// If false, normalization is performed over the current mini-batch

// and global statistics are accumulated (but not yet used) by a moving

// average.

// 如果 use_global_stats = 0,则对当前 mini-batch 内的数据归一化; 同时 global statistics 通过滑动平均逐渐累加.

// If true, those accumulated mean and variance values are used for the

// normalization.

// 如果 use_global_stats = 1,则采用累加的 均值和方差 对数据进行归一化.

// By default, it is set to false when the network is in the training

// phase and true when the network is in the testing phase.

// 默认情况下,网络训练时 use_global_stats = 0;网络测试时 use_global_stats = 1.

optional bool use_global_stats = 1;

// What fraction of the moving average remains each iteration?

// 滑动平均时每次迭代保留的百分比?

// Smaller values make the moving average decay faster, giving more

// weight to the recent values.

// 较小的值使得平均累加过程衰退较快,给予最近的值较大的权重

// Each iteration updates the moving average @f$S_{t- 1}@f$ with the

// current mean @f$ Y_t @f$ by

// @f$ S_t = (1-\beta)Y_t + \beta \cdot S_{t-1} @f$, where @f$ \beta @f$

// is the moving_average_fraction parameter.

optional float moving_average_fraction = 2 [default = .999];

// Small value to add to the variance estimate so that we don't divide by

// zero.

// 保持数值稳定性

optional float eps = 3 [default = 1e-5];

}

optional float moving_average_fraction = 2 [default = .999]:

每次迭代时,根据当前均值 ${ Y_t }$ 更新滑动平均值 ${ S_{t-1} }$:

${ S_t = (1-\beta)Y_t + \beta \cdot S_{t-1} }$

其中,${ \beta }$ 是 moving_average_fraction 参数.

4. batch_norm_layer.hpp

#ifndef CAFFE_BATCHNORM_LAYER_HPP_

#define CAFFE_BATCHNORM_LAYER_HPP_

#include <vector>

#include "caffe/blob.hpp"

#include "caffe/layer.hpp"

#include "caffe/proto/caffe.pb.h"

namespace caffe {

/**

* @brief Normalizes the input to have 0-mean and/or unit (1) variance across

* the batch.

* BatchNorm 功能:

* 将 mini-batch 的输入归一化为均值为0或方差为1.

*

* This layer computes Batch Normalization as described in [1]. For each channel

* in the data (i.e. axis 1), it subtracts the mean and divides by the variance,

* where both statistics are computed across both spatial dimensions and across

* the different examples in the batch.

* 对数据中的每一 channel,如 axis=1,BatchNorm 首先减均值,然后除以其方差.

* 其中,均值和方差是对 mini-batch 内的不同样本的所有 spatial 维度进行计算得到.

*

* By default, during training time, the network is computing global

* mean/variance statistics via a running average, which is then used at test

* time to allow deterministic outputs for each input. You can manually toggle

* whether the network is accumulating or using the statistics via the

* use_global_stats option. For reference, these statistics are kept in the

* layer's three blobs: (0) mean, (1) variance, and (2) moving average factor.

* 默认情况,训练时,网络通过平均累加,计算全局均值和方差值,然后用于测试来计算每一个输入的输出.

* 可以通过手工设置 use_global_stats 参数,来控制网络是采用累加还是统计值.

* 统计值被保存在网络层的三个 blobs:(0) mean, (1) variance, and (2) moving average factor

*

* Note that the original paper also included a per-channel learned bias and

* scaling factor. To implement this in Caffe, define a `ScaleLayer` configured

* with `bias_term: true` after each `BatchNormLayer` to handle both the bias

* and scaling factor.

* 原始论文中还包括一个 per-channel 的学习 bias 和一个 scaling 因子.

* 因此,Caffe 实现中,在每个 BatchNormLayer 后的 ScaleLayer 中配置 bias_term: true 来处理 bias 和 scaling 因子.

*

* [1] S. Ioffe and C. Szegedy, "Batch Normalization: Accelerating Deep Network

* Training by Reducing Internal Covariate Shift." arXiv preprint

* arXiv:1502.03167 (2015).

*

* TODO(dox): thorough documentation for Forward, Backward, and proto params.

*/

template <typename Dtype>

class BatchNormLayer : public Layer<Dtype> {

public:

explicit BatchNormLayer(const LayerParameter& param)

: Layer<Dtype>(param) {}

virtual void LayerSetUp(const vector<Blob<Dtype>*>& bottom,

const vector<Blob<Dtype>*>& top);

virtual void Reshape(const vector<Blob<Dtype>*>& bottom,

const vector<Blob<Dtype>*>& top);

virtual inline const char* type() const { return "BatchNorm"; }

virtual inline int ExactNumBottomBlobs() const { return 1; }

virtual inline int ExactNumTopBlobs() const { return 1; }

protected:

virtual void Forward_cpu(const vector<Blob<Dtype>*>& bottom,

const vector<Blob<Dtype>*>& top);

virtual void Forward_gpu(const vector<Blob<Dtype>*>& bottom,

const vector<Blob<Dtype>*>& top);

virtual void Backward_cpu(const vector<Blob<Dtype>*>& top,

const vector<bool>& propagate_down, const vector<Blob<Dtype>*>& bottom);

virtual void Backward_gpu(const vector<Blob<Dtype>*>& top,

const vector<bool>& propagate_down, const vector<Blob<Dtype>*>& bottom);

Blob<Dtype> mean_, variance_, temp_, x_norm_; // 均值,方差,

bool use_global_stats_;

Dtype moving_average_fraction_;

int channels_;

Dtype eps_;

// extra temporarary variables is used to carry out sums/broadcasting

// using BLAS

Blob<Dtype> batch_sum_multiplier_;

Blob<Dtype> num_by_chans_;

Blob<Dtype> spatial_sum_multiplier_;

};

} // namespace caffe

#endif // CAFFE_BATCHNORM_LAYER_HPP_

5. batch_norm_layer.cpp

#include <algorithm>

#include <vector>

#include "caffe/layers/batch_norm_layer.hpp"

#include "caffe/util/math_functions.hpp"

namespace caffe {

template <typename Dtype>

void BatchNormLayer<Dtype>::LayerSetUp(const vector<Blob<Dtype>*>& bottom,

const vector<Blob<Dtype>*>& top) {

BatchNormParameter param = this->layer_param_.batch_norm_param(); // batchnorm 参数

moving_average_fraction_ = param.moving_average_fraction(); // 滑动平均参数

// 训练时,mean 和 variance 基于 mini-batch 计算

// 测试时,mean 和 variance 基于整个 dataset.

use_global_stats_ = this->phase_ == TEST;

if (param.has_use_global_stats())

use_global_stats_ = param.use_global_stats();

if (bottom[0]->num_axes() == 1)

channels_ = 1;

else

channels_ = bottom[0]->shape(1);

eps_ = param.eps();

if (this->blobs_.size() > 0) {

LOG(INFO) << "Skipping parameter initialization";

} else {

this->blobs_.resize(3); // 存储的学习参数

vector<int> sz;

sz.push_back(channels_);

this->blobs_[0].reset(new Blob<Dtype>(sz)); // 均值滑动平均值,channels_ 大小的数组

this->blobs_[1].reset(new Blob<Dtype>(sz)); // 方差滑动平均值,channels_ 大小的数组

sz[0] = 1;

this->blobs_[2].reset(new Blob<Dtype>(sz)); // 滑动平均系数,大小为 1 的数组

for (int i = 0; i < 3; ++i) {

caffe_set(this->blobs_[i]->count(), Dtype(0),

this->blobs_[i]->mutable_cpu_data()); // 值初始化为 0

}

}

// Mask statistics from optimization by setting local learning rates

// for mean, variance, and the bias correction to zero.

for (int i = 0; i < this->blobs_.size(); ++i) {

if (this->layer_param_.param_size() == i) {

ParamSpec* fixed_param_spec = this->layer_param_.add_param();

fixed_param_spec->set_lr_mult(0.f);

} else {

CHECK_EQ(this->layer_param_.param(i).lr_mult(), 0.f)

<< "Cannot configure batch normalization statistics as layer "

<< "parameters.";

}

}

}

template <typename Dtype>

void BatchNormLayer<Dtype>::Reshape(const vector<Blob<Dtype>*>& bottom,

const vector<Blob<Dtype>*>& top) {

// 如果 bottom 是一维的,均值和方差的个数为1;否则,等于 channels

if (bottom[0]->num_axes() >= 1)

CHECK_EQ(bottom[0]->shape(1), channels_);

top[0]->ReshapeLike(*bottom[0]); // top[0] 与输入 bottom[0] 的形状一致

vector<int> sz;

sz.push_back(channels_);

mean_.Reshape(sz); // 存储均值

variance_.Reshape(sz); // 存储方差

temp_.ReshapeLike(*bottom[0]); // 存储减去均值 mean_ 后的每个数的方差

x_norm_.ReshapeLike(*bottom[0]);

sz[0] = bottom[0]->shape(0);

batch_sum_multiplier_.Reshape(sz); // batch size

// 空间维度, height*width

int spatial_dim = bottom[0]->count()/(channels_*bottom[0]->shape(0));

if (spatial_sum_multiplier_.num_axes() == 0 ||

spatial_sum_multiplier_.shape(0) != spatial_dim) {

sz[0] = spatial_dim;

spatial_sum_multiplier_.Reshape(sz);

Dtype* multiplier_data = spatial_sum_multiplier_.mutable_cpu_data();

// spatial_sum_multiplier_ 初始化值为1,其尺寸为 height*width

caffe_set(spatial_sum_multiplier_.count(), Dtype(1), multiplier_data);

}

int numbychans = channels_*bottom[0]->shape(0); // channels * batchsize

if (num_by_chans_.num_axes() == 0 ||

num_by_chans_.shape(0) != numbychans) {

sz[0] = numbychans;

num_by_chans_.Reshape(sz);

caffe_set(batch_sum_multiplier_.count(), Dtype(1),

batch_sum_multiplier_.mutable_cpu_data()); // 初始化值为 1

}

}

// Forward 函数,计算均值和方差,以矩阵-向量乘积的方式.

template <typename Dtype>

void BatchNormLayer<Dtype>::Forward_cpu(const vector<Blob<Dtype>*>& bottom,

const vector<Blob<Dtype>*>& top) {

const Dtype* bottom_data = bottom[0]->cpu_data();

Dtype* top_data = top[0]->mutable_cpu_data();

int num = bottom[0]->shape(0); //

int spatial_dim = bottom[0]->count()/(bottom[0]->shape(0)*channels_); // height*width

// 判断 BN 层的输入输出是否是同一 blob

if (bottom[0] != top[0]) {

caffe_copy(bottom[0]->count(), bottom_data, top_data);

}

if (use_global_stats_) {

// 如果 use_global_stats_ = 1,则使用预定义的均值和方差估计值.

// use the stored mean/variance estimates.

const Dtype scale_factor = this->blobs_[2]->cpu_data()[0] == 0 ?

0 : 1 / this->blobs_[2]->cpu_data()[0];

caffe_cpu_scale(variance_.count(), scale_factor,

this->blobs_[0]->cpu_data(), mean_.mutable_cpu_data()); // 乘以缩放因子

caffe_cpu_scale(variance_.count(), scale_factor,

this->blobs_[1]->cpu_data(), variance_.mutable_cpu_data());

} else {

// 如果 use_global_stats_ = 0

// compute mean

// 均值计算

// num_by_chans_ = (1. / (num * spatial_dim)) * bottom_data * spatial_sum_multiplier_

// channels*num 行; spatial_dim 列

// 共 channels * num 个值

caffe_cpu_gemv<Dtype>(CblasNoTrans, channels_ * num, spatial_dim,

1. / (num * spatial_dim), bottom_data,

spatial_sum_multiplier_.cpu_data(), 0.,

num_by_chans_.mutable_cpu_data());

// mean_ = 1 * num_by_chans_ * batch_sum_multiplier_

// num 行; channels 列

// 每个通道值相加,得到 channel 个值

caffe_cpu_gemv<Dtype>(CblasTrans, num, channels_, 1.,

num_by_chans_.cpu_data(), batch_sum_multiplier_.cpu_data(), 0.,

mean_.mutable_cpu_data());

}

// subtract mean

// 减均值

// num_by_chans_ = 1 * batch_sum_multiplier_ * mean_

caffe_cpu_gemm<Dtype>(CblasNoTrans, CblasNoTrans, num, channels_, 1, 1,

batch_sum_multiplier_.cpu_data(), mean_.cpu_data(), 0.,

num_by_chans_.mutable_cpu_data());

// top_data = -1 * num_by_chans_ * + spatial_sum_multiplier_ + 1.0 * top_data

// top_data 中的数据减去均值 mean_

caffe_cpu_gemm<Dtype>(CblasNoTrans, CblasNoTrans, channels_ * num,

spatial_dim, 1, -1, num_by_chans_.cpu_data(),

spatial_sum_multiplier_.cpu_data(), 1., top_data);

if (!use_global_stats_) {

// 如果 use_global_stats_ = 0,计算方差

// compute variance using var(X) = E((X-EX)^2)

// 对向量的每一个值求其方差,得到结果为 temp_

caffe_sqr<Dtype>(top[0]->count(), top_data, temp_.mutable_cpu_data()); // (X-EX)^2

// num_by_chans_ = (1. / (num * spatial_dim)) * temp_ * spatial_sum_multiplier_

caffe_cpu_gemv<Dtype>(CblasNoTrans, channels_ * num, spatial_dim,

1. / (num * spatial_dim), temp_.cpu_data(),

spatial_sum_multiplier_.cpu_data(), 0.,

num_by_chans_.mutable_cpu_data()); // 矩阵向量乘

// variance_ = 1.0 * num_by_chans_ * batch_sum_multiplier_

caffe_cpu_gemv<Dtype>(CblasTrans, num, channels_, 1.,

num_by_chans_.cpu_data(), batch_sum_multiplier_.cpu_data(), 0.,

variance_.mutable_cpu_data()); // E((X_EX)^2)

// compute and save moving average

// 计算并保存滑动平均值

// 简述部分的 [F1] 步

this->blobs_[2]->mutable_cpu_data()[0] *= moving_average_fraction_;

this->blobs_[2]->mutable_cpu_data()[0] += 1;

// this->blobs_[0] = 1 * mean_ + moving_average_fraction_ * this->blobs_[0]

// 简述部分的 [F2] 步

caffe_cpu_axpby(mean_.count(), Dtype(1), mean_.cpu_data(),

moving_average_fraction_, this->blobs_[0]->mutable_cpu_data());

// m = num * height * width

int m = bottom[0]->count()/channels_;

Dtype bias_correction_factor = m > 1 ? Dtype(m)/(m-1) : 1;

// this->blobs_[1] = bias_correction_factor * variance_ + moving_average_fraction_ * this->blobs_[1]

// 无偏估计方差 m/(m-1)

// 简述部分的 [F3] 步

caffe_cpu_axpby(variance_.count(), bias_correction_factor,

variance_.cpu_data(), moving_average_fraction_,

this->blobs_[1]->mutable_cpu_data());

}

// normalize variance

// 方差归一化

// variance_ = variance_ + eps_ 添加一个很小的值

caffe_add_scalar(variance_.count(), eps_, variance_.mutable_cpu_data());

// 对 variance_ 的每个值进行操作,求开方

caffe_sqrt(variance_.count(), variance_.cpu_data(),

variance_.mutable_cpu_data());

// replicate variance to input size

// 下面两个 gemm 函数将 channels_ 个值的方差 variance_ 扩展到 channels_ * num * height * width

// num_by_chans_ = 1 * batch_sum_multiplier_ * variance_

caffe_cpu_gemm<Dtype>(CblasNoTrans, CblasNoTrans, num, channels_, 1, 1,

batch_sum_multiplier_.cpu_data(), variance_.cpu_data(), 0.,

num_by_chans_.mutable_cpu_data());

// temp_ = 1.0 * num_by_chans_ * spatial_sum_multiplier_

caffe_cpu_gemm<Dtype>(CblasNoTrans, CblasNoTrans, channels_ * num,

spatial_dim, 1, 1., num_by_chans_.cpu_data(),

spatial_sum_multiplier_.cpu_data(), 0., temp_.mutable_cpu_data());

// 逐元素操作,top_data[i] = top_data[i] / temp_[i]

caffe_div(temp_.count(), top_data, temp_.cpu_data(), top_data);

// TODO(cdoersch): The caching is only needed because later in-place layers

// might clobber the data. Can we skip this if they won't?

// 将 top_data 的计算结果 copy 到 x_norm_.

caffe_copy(x_norm_.count(), top_data, x_norm_.mutable_cpu_data());

}

// 参考简述中的反向计算公式.

template <typename Dtype>

void BatchNormLayer<Dtype>::Backward_cpu(const vector<Blob<Dtype>*>& top,

const vector<bool>& propagate_down,

const vector<Blob<Dtype>*>& bottom) {

const Dtype* top_diff; // 梯度

if (bottom[0] != top[0]) {

top_diff = top[0]->cpu_diff();

} else {

caffe_copy(x_norm_.count(), top[0]->cpu_diff(), x_norm_.mutable_cpu_diff());

top_diff = x_norm_.cpu_diff();

}

Dtype* bottom_diff = bottom[0]->mutable_cpu_diff();

if (use_global_stats_) {

caffe_div(temp_.count(), top_diff, temp_.cpu_data(), bottom_diff);

return;

}

const Dtype* top_data = x_norm_.cpu_data();

int num = bottom[0]->shape()[0];

int spatial_dim = bottom[0]->count()/(bottom[0]->shape(0)*channels_);

// if Y = (X-mean(X))/(sqrt(var(X)+eps)), then

//

// dE(Y)/dX =

// (dE/dY - mean(dE/dY) - mean(dE/dY \cdot Y) \cdot Y)

// ./ sqrt(var(X) + eps)

//

// where \cdot and ./ are hadamard product and elementwise division,

// respectively, dE/dY is the top diff, and mean/var/sum are all computed

// along all dimensions except the channels dimension. In the above

// equation, the operations allow for expansion (i.e. broadcast) along all

// dimensions except the channels dimension where required.

// sum(dE/dY \cdot Y)

caffe_mul(temp_.count(), top_data, top_diff, bottom_diff);

caffe_cpu_gemv<Dtype>(CblasNoTrans, channels_ * num, spatial_dim, 1.,

bottom_diff, spatial_sum_multiplier_.cpu_data(), 0.,

num_by_chans_.mutable_cpu_data());

caffe_cpu_gemv<Dtype>(CblasTrans, num, channels_, 1.,

num_by_chans_.cpu_data(), batch_sum_multiplier_.cpu_data(), 0.,

mean_.mutable_cpu_data());

// reshape (broadcast) the above

caffe_cpu_gemm<Dtype>(CblasNoTrans, CblasNoTrans, num, channels_, 1, 1,

batch_sum_multiplier_.cpu_data(), mean_.cpu_data(), 0.,

num_by_chans_.mutable_cpu_data());

caffe_cpu_gemm<Dtype>(CblasNoTrans, CblasNoTrans, channels_ * num,

spatial_dim, 1, 1., num_by_chans_.cpu_data(),

spatial_sum_multiplier_.cpu_data(), 0., bottom_diff);

// sum(dE/dY \cdot Y) \cdot Y

caffe_mul(temp_.count(), top_data, bottom_diff, bottom_diff);

// sum(dE/dY)-sum(dE/dY \cdot Y) \cdot Y

caffe_cpu_gemv<Dtype>(CblasNoTrans, channels_ * num, spatial_dim, 1.,

top_diff, spatial_sum_multiplier_.cpu_data(), 0.,

num_by_chans_.mutable_cpu_data());

caffe_cpu_gemv<Dtype>(CblasTrans, num, channels_, 1.,

num_by_chans_.cpu_data(), batch_sum_multiplier_.cpu_data(), 0.,

mean_.mutable_cpu_data());

// reshape (broadcast) the above to make

// sum(dE/dY)-sum(dE/dY \cdot Y) \cdot Y

caffe_cpu_gemm<Dtype>(CblasNoTrans, CblasNoTrans, num, channels_, 1, 1,

batch_sum_multiplier_.cpu_data(), mean_.cpu_data(), 0.,

num_by_chans_.mutable_cpu_data());

caffe_cpu_gemm<Dtype>(CblasNoTrans, CblasNoTrans, num * channels_,

spatial_dim, 1, 1., num_by_chans_.cpu_data(),

spatial_sum_multiplier_.cpu_data(), 1., bottom_diff);

// dE/dY - mean(dE/dY)-mean(dE/dY \cdot Y) \cdot Y

caffe_cpu_axpby(temp_.count(), Dtype(1), top_diff,

Dtype(-1. / (num * spatial_dim)), bottom_diff);

// note: temp_ still contains sqrt(var(X)+eps), computed during the forward

// pass.

caffe_div(temp_.count(), bottom_diff, temp_.cpu_data(), bottom_diff);

}

#ifdef CPU_ONLY

STUB_GPU(BatchNormLayer);

#endif

INSTANTIATE_CLASS(BatchNormLayer);

REGISTER_LAYER_CLASS(BatchNorm);

} // namespace caffe

6. caffeproto 中 Scale 的定义

message LayerParameter {

optional ScaleParameter scale_param = 142;

}

message ScaleParameter {

// The first axis of bottom[0] (the first input Blob) along which to apply

// bottom[1] (the second input Blob). May be negative to index from the end

// (e.g., -1 for the last axis).

// 根据 bottom[0] 指定 bottom[1] 的形状

// For example, if bottom[0] is 4D with shape 100x3x40x60, the output

// top[0] will have the same shape, and bottom[1] may have any of the

// following shapes (for the given value of axis):

// (axis == 0 == -4) 100; 100x3; 100x3x40; 100x3x40x60

// (axis == 1 == -3) 3; 3x40; 3x40x60

// (axis == 2 == -2) 40; 40x60

// (axis == 3 == -1) 60

// Furthermore, bottom[1] may have the empty shape (regardless of the value of

// "axis") -- a scalar multiplier.

// 例如,如果 bottom[0] 的 shape 为 100x3x40x60,则 top[0] 输出相同的 shape;

// bottom[1] 可以包含上面 shapes 中的任一种(对于给定 axis 值).

// 而且,bottom[1] 可以是 empty shape 的,没有任何的 axis 值,只是一个标量的乘子.

optional int32 axis = 1 [default = 1];

// (num_axes is ignored unless just one bottom is given and the scale is

// a learned parameter of the layer. Otherwise, num_axes is determined by the

// number of axes by the second bottom.)

// (忽略 num_axes 参数,除非只给定一个 bottom 及 scale 是网络层的一个学习到的参数.

// 否则,num_axes 是由第二个 bottom 的数量来决定的.)

// The number of axes of the input (bottom[0]) covered by the scale

// parameter, or -1 to cover all axes of bottom[0] starting from `axis`.

// Set num_axes := 0, to multiply with a zero-axis Blob: a scalar.

// bottom[0] 的 num_axes 是由 scale 参数覆盖的;

optional int32 num_axes = 2 [default = 1];

// (filler is ignored unless just one bottom is given and the scale is

// a learned parameter of the layer.)

// (忽略 filler 参数,除非只给定一个 bottom 及 scale 是网络层的一个学习到的参数.

// The initialization for the learned scale parameter.

// scale 参数学习的初始化

// Default is the unit (1) initialization, resulting in the ScaleLayer

// initially performing the identity operation.

// 默认是单位初始化,使 Scale 层初始进行单位操作.

optional FillerParameter filler = 3;

// Whether to also learn a bias (equivalent to a ScaleLayer+BiasLayer, but

// may be more efficient). Initialized with bias_filler (defaults to 0).

// 是否学习 bias,等价于 ScaleLayer+BiasLayer,只不过效率更高

// 采用 bias_filler 进行初始化. 默认为 0.

optional bool bias_term = 4 [default = false];

optional FillerParameter bias_filler = 5;

}

即,按元素计算连个输入的乘积。该过程以广播第二个输入来匹配第一个输入矩阵的大小。

也就是通过平铺第二个输入矩阵来计算按元素乘积(点乘)。

简化:

optional int32 axis [default = 1] ; 默认的处理维度 optional int32 num_axes [default = 1] ; //在BN中可以忽略,主要决定第二个bottom optional FillerParameter filler ; //初始alpha和beta的填充方式。 optional FillerParameter bias_filler; optional bool bias_term = 4 [default = false]; //是否学习bias,若不学习,则简化为 y = alpha*x

7. scale_layer.hpp

#ifndef CAFFE_SCALE_LAYER_HPP_

#define CAFFE_SCALE_LAYER_HPP_

#include <vector>

#include "caffe/blob.hpp"

#include "caffe/layer.hpp"

#include "caffe/proto/caffe.pb.h"

#include "caffe/layers/bias_layer.hpp"

namespace caffe {

/**

* @brief Computes the elementwise product of two input Blobs, with the shape of

* the latter Blob "broadcast" to match the shape of the former.

* Equivalent to tiling the latter Blob, then computing the elementwise

* product. Note: for efficiency and convenience, this layer can

* additionally perform a "broadcast" sum too when `bias_term: true`

* is set.

*

* The latter, scale input may be omitted, in which case it's learned as

* parameter of the layer (as is the bias, if it is included).

*/

template <typename Dtype>

class ScaleLayer: public Layer<Dtype> {

public:

explicit ScaleLayer(const LayerParameter& param)

: Layer<Dtype>(param) {}

virtual void LayerSetUp(const vector<Blob<Dtype>*>& bottom,

const vector<Blob<Dtype>*>& top);

virtual void Reshape(const vector<Blob<Dtype>*>& bottom,

const vector<Blob<Dtype>*>& top);

virtual inline const char* type() const { return "Scale"; }

// Scale

virtual inline int MinBottomBlobs() const { return 1; }

virtual inline int MaxBottomBlobs() const { return 2; }

virtual inline int ExactNumTopBlobs() const { return 1; }

protected:

/**

* In the below shape specifications, @f$ i @f$ denotes the value of the

* `axis` field given by `this->layer_param_.scale_param().axis()`, after

* canonicalization (i.e., conversion from negative to positive index,

* if applicable).

*

* @param bottom input Blob vector (length 2)

* -# @f$ (d_0 \times ... \times

* d_i \times ... \times d_j \times ... \times d_n) @f$

* the first factor @f$ x @f$

* -# @f$ (d_i \times ... \times d_j) @f$

* the second factor @f$ y @f$

* @param top output Blob vector (length 1)

* -# @f$ (d_0 \times ... \times

* d_i \times ... \times d_j \times ... \times d_n) @f$

* the product @f$ z = x y @f$ computed after "broadcasting" y.

* Equivalent to tiling @f$ y @f$ to have the same shape as @f$ x @f$,

* then computing the elementwise product.

*/

virtual void Forward_cpu(const vector<Blob<Dtype>*>& bottom,

const vector<Blob<Dtype>*>& top);

virtual void Forward_gpu(const vector<Blob<Dtype>*>& bottom,

const vector<Blob<Dtype>*>& top);

virtual void Backward_cpu(const vector<Blob<Dtype>*>& top,

const vector<bool>& propagate_down, const vector<Blob<Dtype>*>& bottom);

virtual void Backward_gpu(const vector<Blob<Dtype>*>& top,

const vector<bool>& propagate_down, const vector<Blob<Dtype>*>& bottom);

shared_ptr<Layer<Dtype> > bias_layer_;

vector<Blob<Dtype>*> bias_bottom_vec_;

vector<bool> bias_propagate_down_;

int bias_param_id_;

Blob<Dtype> sum_multiplier_;

Blob<Dtype> sum_result_;

Blob<Dtype> temp_;

int axis_;

int outer_dim_, scale_dim_, inner_dim_;

};

} // namespace caffe

#endif // CAFFE_SCALE_LAYER_HPP_

8. scale_layer.cpp

#include <algorithm>

#include <vector>

#include "caffe/filler.hpp"

#include "caffe/layer_factory.hpp"

#include "caffe/layers/scale_layer.hpp"

#include "caffe/util/math_functions.hpp"

namespace caffe {

template <typename Dtype>

void ScaleLayer<Dtype>::LayerSetUp(const vector<Blob<Dtype>*>& bottom,

const vector<Blob<Dtype>*>& top) {

const ScaleParameter& param = this->layer_param_.scale_param(); // scale 参数

// 判断 bottom blobs 是否已经有值

if (bottom.size() == 1 && this->blobs_.size() > 0) {

LOG(INFO) << "Skipping parameter initialization";

} else if (bottom.size() == 1) {

// scale is a learned parameter; initialize it

// 待学习参数 scale,初始化

axis_ = bottom[0]->CanonicalAxisIndex(param.axis()); //

const int num_axes = param.num_axes();

CHECK_GE(num_axes, -1) << "num_axes must be non-negative, "

<< "or -1 to extend to the end of bottom[0]";

if (num_axes >= 0) {

CHECK_GE(bottom[0]->num_axes(), axis_ + num_axes)

<< "scale blob's shape extends past bottom[0]'s shape when applied "

<< "starting with bottom[0] axis = " << axis_;

}

this->blobs_.resize(1); // gamma

//

const vector<int>::const_iterator& shape_start =

bottom[0]->shape().begin() + axis_;

const vector<int>::const_iterator& shape_end =

(num_axes == -1) ? bottom[0]->shape().end() : (shape_start + num_axes);

vector<int> scale_shape(shape_start, shape_end);

this->blobs_[0].reset(new Blob<Dtype>(scale_shape));

FillerParameter filler_param(param.filler());

if (!param.has_filler()) {

// 未初始化时,初始化值为 1

// Default to unit (1) filler for identity operation.

filler_param.set_type("constant");

filler_param.set_value(1);

}

shared_ptr<Filler<Dtype> > filler(GetFiller<Dtype>(filler_param));

filler->Fill(this->blobs_[0].get());

}

if (param.bias_term()) { // 是否需要处理 bias 项

LayerParameter layer_param(this->layer_param_);

layer_param.set_type("Bias");

BiasParameter* bias_param = layer_param.mutable_bias_param();

bias_param->set_axis(param.axis());

if (bottom.size() > 1) {

bias_param->set_num_axes(bottom[1]->num_axes());

} else {

bias_param->set_num_axes(param.num_axes());

}

bias_param->mutable_filler()->CopyFrom(param.bias_filler());

bias_layer_ = LayerRegistry<Dtype>::CreateLayer(layer_param);

bias_bottom_vec_.resize(1);

bias_bottom_vec_[0] = bottom[0];

bias_layer_->SetUp(bias_bottom_vec_, top);

if (this->blobs_.size() + bottom.size() < 3) {

// case: blobs.size == 1 && bottom.size == 1

// or blobs.size == 0 && bottom.size == 2

bias_param_id_ = this->blobs_.size();

this->blobs_.resize(bias_param_id_ + 1);

this->blobs_[bias_param_id_] = bias_layer_->blobs()[0];

} else {

// bias param already initialized

bias_param_id_ = this->blobs_.size() - 1;

bias_layer_->blobs()[0] = this->blobs_[bias_param_id_];

}

bias_propagate_down_.resize(1, false);

}

this->param_propagate_down_.resize(this->blobs_.size(), true);

}

template <typename Dtype>

void ScaleLayer<Dtype>::Reshape(const vector<Blob<Dtype>*>& bottom,

const vector<Blob<Dtype>*>& top) {

const ScaleParameter& param = this->layer_param_.scale_param();

Blob<Dtype>* scale = (bottom.size() > 1) ? bottom[1] : this->blobs_[0].get();

// Always set axis_ == 0 in special case where scale is a scalar

// (num_axes == 0). Mathematically equivalent for any choice of axis_, so the

// actual setting can be safely ignored; and computation is most efficient

// with axis_ == 0 and (therefore) outer_dim_ == 1. (Setting axis_ to

// bottom[0]->num_axes() - 1, giving inner_dim_ == 1, would be equally

// performant.)

axis_ = (scale->num_axes() == 0) ?

0 : bottom[0]->CanonicalAxisIndex(param.axis());

CHECK_GE(bottom[0]->num_axes(), axis_ + scale->num_axes())

<< "scale blob's shape extends past bottom[0]'s shape when applied "

<< "starting with bottom[0] axis = " << axis_;

for (int i = 0; i < scale->num_axes(); ++i) {

CHECK_EQ(bottom[0]->shape(axis_ + i), scale->shape(i))

<< "dimension mismatch between bottom[0]->shape(" << axis_ + i

<< ") and scale->shape(" << i << ")";

}

outer_dim_ = bottom[0]->count(0, axis_);

scale_dim_ = scale->count();

inner_dim_ = bottom[0]->count(axis_ + scale->num_axes());

// 如果 top 层和 bottom 层同名,则进行 in-place 计算

if (bottom[0] == top[0]) { // in-place computation

temp_.ReshapeLike(*bottom[0]);

} else {

top[0]->ReshapeLike(*bottom[0]);

}

sum_result_.Reshape(vector<int>(1, outer_dim_ * scale_dim_));

const int sum_mult_size = std::max(outer_dim_, inner_dim_);

sum_multiplier_.Reshape(vector<int>(1, sum_mult_size));

if (sum_multiplier_.cpu_data()[sum_mult_size - 1] != Dtype(1)) {

caffe_set(sum_mult_size, Dtype(1), sum_multiplier_.mutable_cpu_data());

}

if (bias_layer_) {

bias_bottom_vec_[0] = top[0];

bias_layer_->Reshape(bias_bottom_vec_, top);

}

}

template <typename Dtype>

void ScaleLayer<Dtype>::Forward_cpu(

const vector<Blob<Dtype>*>& bottom, const vector<Blob<Dtype>*>& top) {

const Dtype* bottom_data = bottom[0]->cpu_data();

if (bottom[0] == top[0]) {

// In-place computation; need to store bottom data before overwriting it.

// Note that this is only necessary for Backward; we could skip this if not

// doing Backward, but Caffe currently provides no way of knowing whether

// we'll need to do Backward at the time of the Forward call.

// in-place 计算,需要先临时复制一份,再进行计算.

caffe_copy(bottom[0]->count(), bottom[0]->cpu_data(),

temp_.mutable_cpu_data());

}

const Dtype* scale_data =

((bottom.size() > 1) ? bottom[1] : this->blobs_[0].get())->cpu_data();

Dtype* top_data = top[0]->mutable_cpu_data();

for (int n = 0; n < outer_dim_; ++n) {

for (int d = 0; d < scale_dim_; ++d) {

const Dtype factor = scale_data[d];

caffe_cpu_scale(inner_dim_, factor, bottom_data, top_data);

bottom_data += inner_dim_;

top_data += inner_dim_;

}

}

if (bias_layer_) {

bias_layer_->Forward(bias_bottom_vec_, top);

}

}

template <typename Dtype>

void ScaleLayer<Dtype>::Backward_cpu(const vector<Blob<Dtype>*>& top,

const vector<bool>& propagate_down, const vector<Blob<Dtype>*>& bottom) {

if (bias_layer_ &&

this->param_propagate_down_[this->param_propagate_down_.size() - 1]) {

bias_layer_->Backward(top, bias_propagate_down_, bias_bottom_vec_);

}

const bool scale_param = (bottom.size() == 1);

Blob<Dtype>* scale = scale_param ? this->blobs_[0].get() : bottom[1];

if ((!scale_param && propagate_down[1]) ||

(scale_param && this->param_propagate_down_[0])) {

const Dtype* top_diff = top[0]->cpu_diff();

const bool in_place = (bottom[0] == top[0]);

const Dtype* bottom_data = (in_place ? &temp_ : bottom[0])->cpu_data();

// Hack: store big eltwise product in bottom[0] diff, except in the special

// case where this layer itself does the eltwise product, in which case we

// can store it directly in the scale diff, and we're done.

// If we're computing in-place (and not doing eltwise computation), this

// hack doesn't work and we store the product in temp_.

const bool is_eltwise = (bottom[0]->count() == scale->count());

Dtype* product = (is_eltwise ? scale->mutable_cpu_diff() :

(in_place ? temp_.mutable_cpu_data() : bottom[0]->mutable_cpu_diff()));

caffe_mul(top[0]->count(), top_diff, bottom_data, product);

if (!is_eltwise) {

Dtype* sum_result = NULL;

if (inner_dim_ == 1) {

sum_result = product;

} else if (sum_result_.count() == 1) {

const Dtype* sum_mult = sum_multiplier_.cpu_data();

Dtype* scale_diff = scale->mutable_cpu_diff();

if (scale_param) {

Dtype result = caffe_cpu_dot(inner_dim_, product, sum_mult);

*scale_diff += result;

} else {

*scale_diff = caffe_cpu_dot(inner_dim_, product, sum_mult);

}

} else {

const Dtype* sum_mult = sum_multiplier_.cpu_data();

sum_result = (outer_dim_ == 1) ?

scale->mutable_cpu_diff() : sum_result_.mutable_cpu_data();

caffe_cpu_gemv(CblasNoTrans, sum_result_.count(), inner_dim_,

Dtype(1), product, sum_mult, Dtype(0), sum_result);

}

if (outer_dim_ != 1) {

const Dtype* sum_mult = sum_multiplier_.cpu_data();

Dtype* scale_diff = scale->mutable_cpu_diff();

if (scale_dim_ == 1) {

if (scale_param) {

Dtype result = caffe_cpu_dot(outer_dim_, sum_mult, sum_result);

*scale_diff += result;

} else {

*scale_diff = caffe_cpu_dot(outer_dim_, sum_mult, sum_result);

}

} else {

caffe_cpu_gemv(CblasTrans, outer_dim_, scale_dim_,

Dtype(1), sum_result, sum_mult, Dtype(scale_param),

scale_diff);

}

}

}

}

if (propagate_down[0]) {

const Dtype* top_diff = top[0]->cpu_diff();

const Dtype* scale_data = scale->cpu_data();

Dtype* bottom_diff = bottom[0]->mutable_cpu_diff();

for (int n = 0; n < outer_dim_; ++n) {

for (int d = 0; d < scale_dim_; ++d) {

const Dtype factor = scale_data[d];

caffe_cpu_scale(inner_dim_, factor, top_diff, bottom_diff);

bottom_diff += inner_dim_;

top_diff += inner_dim_;

}

}

}

}

#ifdef CPU_ONLY

STUB_GPU(ScaleLayer);

#endif

INSTANTIATE_CLASS(ScaleLayer);

REGISTER_LAYER_CLASS(Scale);

} // namespace caffe

Reference

[1] - caffe中batch_norm层代码详细注解

[2] - CAFFE源码学习笔记之batch_norm_layer

[3] - Caffe BatchNormalization 推导

[4] - Caffe Scale层解析