Lifted Struct Similarity Softmax Layer: feature learning for clustering, image retrieval, and similar tasks

Paper: Deep Metric Learning via Lifted Structured Feature Embedding - CVPR2016

Project repository: Deep-Metric-Learning-CVPR16

1. Definition in the prototxt

```
layer {
  name: "fc_embedding"
  type: "InnerProduct"
  bottom: "pool_ave"
  top: "fc_embedding"
  param {
    lr_mult: 1.0
    decay_mult: 1.0
  }
  param {
    lr_mult: 2.0
    decay_mult: 0.0
  }
  inner_product_param {
    num_output: 64  # feature dimension
    weight_filler {
      type: "xavier"
    }
    bias_filler {
      type: "constant"
      value: 0.0
    }
  }
}

####### LiftedStructSimilaritySoftmaxLoss #####

layer {
  name: "loss"
  type: "LiftedStructSimilaritySoftmaxLoss"
  bottom: "fc_embedding"
  bottom: "label"
  top: "loss"
  lifted_struct_sim_softmax_loss_param {
    margin: 1  # margin parameter \alpha
  }
}
```

2. Definition in caffe.proto

```protobuf
message LayerParameter {
  // ... existing fields omitted ...
  // 148 must be a field ID that is not yet used in your LayerParameter.
  optional LiftedStructSimilaritySoftmaxLossParameter lifted_struct_sim_softmax_loss_param = 148;
}

message LiftedStructSimilaritySoftmaxLossParameter {
  optional float margin = 1 [default = 1.0];  // margin parameter \alpha
}
```

3. lifted_struct_similarity_softmax_layer.hpp

```cpp
#ifndef CAFFE_LIFTED_STRUCT_SIMILARITY_LOSS_LAYER_HPP_
#define CAFFE_LIFTED_STRUCT_SIMILARITY_LOSS_LAYER_HPP_

#include <vector>

#include "caffe/blob.hpp"
#include "caffe/layer.hpp"
#include "caffe/proto/caffe.pb.h"
#include "caffe/layers/loss_layer.hpp"

namespace caffe {

template <typename Dtype>
class LiftedStructSimilaritySoftmaxLossLayer : public LossLayer<Dtype> {
 public:
  explicit LiftedStructSimilaritySoftmaxLossLayer(const LayerParameter& param)
      : LossLayer<Dtype>(param) {}
  virtual void LayerSetUp(const vector<Blob<Dtype>*>& bottom,
      const vector<Blob<Dtype>*>& top);

  // bottom[0]: embeddings (N x C x 1 x 1); bottom[1]: labels (N x 1 x 1 x 1)
  virtual inline int ExactNumBottomBlobs() const { return 2; }
  virtual inline const char* type() const { return "LiftedStructSimilaritySoftmaxLoss"; }
  // No gradient can be propagated to the labels.
  virtual inline bool AllowForceBackward(const int bottom_index) const {
    return bottom_index != 1;
  }

 protected:
  virtual void Forward_cpu(const vector<Blob<Dtype>*>& bottom,
      const vector<Blob<Dtype>*>& top);
  virtual void Backward_cpu(const vector<Blob<Dtype>*>& top,
      const vector<bool>& propagate_down, const vector<Blob<Dtype>*>& bottom);

  Blob<Dtype> dist_sq_;             // squared norms ||x_i||^2
  Blob<Dtype> dot_;                 // pairwise squared distances D^2 (N x N)
  Blob<Dtype> ones_;                // N x 1 vector of ones
  Blob<Dtype> blob_pos_diff_;       // x_i - x_j for the current positive pair
  Blob<Dtype> blob_neg_diff_;       // x_i - x_k (or x_j - x_k) for the current negative
  Blob<Dtype> loss_aug_inference_;  // margin - D for every negative, then exp(. - max)
  Blob<Dtype> summer_vec_;          // vector of ones used to sum loss_aug_inference_
  Dtype num_constraints;            // number of positive pairs |P| in the batch
};

}  // namespace caffe

#endif  // CAFFE_LIFTED_STRUCT_SIMILARITY_LOSS_LAYER_HPP_
```

4. lifted_struct_similarity_softmax_layer.cpp

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

#include "caffe/layers/lifted_struct_similarity_softmax_layer.hpp"
#include "caffe/util/math_functions.hpp"

namespace caffe {

template <typename Dtype>
void LiftedStructSimilaritySoftmaxLossLayer<Dtype>::LayerSetUp(
    const vector<Blob<Dtype>*>& bottom, const vector<Blob<Dtype>*>& top) {
  LossLayer<Dtype>::LayerSetUp(bottom, top);
  CHECK_EQ(bottom[0]->height(), 1);
  CHECK_EQ(bottom[0]->width(), 1);
  CHECK_EQ(bottom[1]->channels(), 1);
  CHECK_EQ(bottom[1]->height(), 1);
  CHECK_EQ(bottom[1]->width(), 1);
  // Work buffers are sized once here from the batch size,
  // so the batch size must not change during training.
  dist_sq_.Reshape(bottom[0]->num(), 1, 1, 1);             // ||x_i||^2
  dot_.Reshape(bottom[0]->num(), bottom[0]->num(), 1, 1);  // pairwise D^2
  ones_.Reshape(bottom[0]->num(), 1, 1, 1);                // n by 1 vector of ones
  for (int i = 0; i < bottom[0]->num(); ++i) {
    ones_.mutable_cpu_data()[i] = Dtype(1);
  }
  blob_pos_diff_.Reshape(bottom[0]->channels(), 1, 1, 1);
  blob_neg_diff_.Reshape(bottom[0]->channels(), 1, 1, 1);
}

template <typename Dtype>
void LiftedStructSimilaritySoftmaxLossLayer<Dtype>::Forward_cpu(
    const vector<Blob<Dtype>*>& bottom, const vector<Blob<Dtype>*>& top) {
  const int channels = bottom[0]->channels();  // feature dims
  for (int i = 0; i < bottom[0]->num(); i++) {
    // \hat{x} in the paper
    dist_sq_.mutable_cpu_data()[i] = caffe_cpu_dot(channels,
        bottom[0]->cpu_data() + (i * channels), bottom[0]->cpu_data() + (i * channels));
  }

  int M_ = bottom[0]->num();       // number of samples in the mini-batch
  int N_ = bottom[0]->num();
  int K_ = bottom[0]->channels();  // feature dimension
  const Dtype* bottom_data1 = bottom[0]->cpu_data();  // feature matrix
  const Dtype* bottom_data2 = bottom[0]->cpu_data();
  Dtype dot_scaler(-2.0);
  caffe_cpu_gemm<Dtype>(CblasNoTrans, CblasTrans, M_, N_, K_, dot_scaler,
      bottom_data1, bottom_data2, (Dtype)0., dot_.mutable_cpu_data());

  // add ||x_i||^2 to all elements in row i
  for (int i = 0; i < N_; i++) {
    caffe_axpy(N_, dist_sq_.cpu_data()[i], ones_.cpu_data(), dot_.mutable_cpu_data() + i * N_);
  }
  // add the norm vector to row i; abs() removes small negatives caused by round-off
  for (int i = 0; i < N_; i++) {
    caffe_axpy(N_, Dtype(1.0), dist_sq_.cpu_data(), dot_.mutable_cpu_data() + i * N_);
    caffe_abs(N_, dot_.mutable_cpu_data() + i * N_, dot_.mutable_cpu_data() + i * N_);
  }

  // construct pairwise label matrix
  vector<vector<bool> > label_mat(N_, vector<bool>(N_, false));
  for (int i = 0; i < N_; i++) {
    for (int j = 0; j < N_; j++) {
      label_mat[i][j] = (bottom[1]->cpu_data()[i] == bottom[1]->cpu_data()[j]);
    }
  }

  Dtype margin = this->layer_param_.lifted_struct_sim_softmax_loss_param().margin();
  Dtype loss(0.0);
  num_constraints = Dtype(0.0);
  const Dtype* bin = bottom[0]->cpu_data();
  Dtype* bout = bottom[0]->mutable_cpu_diff();
  // zero-initialize bottom[0]'s diff; the gradients are accumulated into it below
  caffe_set(N_ * K_, Dtype(0.0), bout);

  // loop over the upper triangular matrix and look for positive anchors
  for (int i = 0; i < N_; i++) {
    for (int j = i + 1; j < N_; j++) {
      if (!label_mat[i][j]) continue;
      // found a positive pair @ anchor (i, j)
      Dtype dist_pos = sqrt(dot_.cpu_data()[i * N_ + j] + 2e-10);
      caffe_sub(K_, bin + i * K_, bin + j * K_, blob_pos_diff_.mutable_cpu_data());

      // 1. count the number of negatives for this positive pair
      int num_negatives = 0;
      for (int k = 0; k < N_; k++) {
        if (!label_mat[i][k]) num_negatives += 1;
      }
      for (int k = 0; k < N_; k++) {
        if (!label_mat[j][k]) num_negatives += 1;
      }
      // A single-class batch has no negatives; skip the pair instead of
      // dereferencing an empty range in std::max_element below.
      if (num_negatives == 0) continue;

      loss_aug_inference_.Reshape(num_negatives, 1, 1, 1);
      // vector of ones used to sum along channels
      summer_vec_.Reshape(num_negatives, 1, 1, 1);
      for (int ss = 0; ss < num_negatives; ++ss) {
        summer_vec_.mutable_cpu_data()[ss] = Dtype(1);
      }

      // 2. compute loss augmented inference: margin - D for every negative
      int neg_idx = 0;
      for (int k = 0; k < N_; k++) {  // mine negative (anchor i, neg k)
        if (!label_mat[i][k]) {
          loss_aug_inference_.mutable_cpu_data()[neg_idx++] = margin - sqrt(dot_.cpu_data()[i * N_ + k]);
        }
      }
      for (int k = 0; k < N_; k++) {  // mine negative (anchor j, neg k)
        if (!label_mat[j][k]) {
          loss_aug_inference_.mutable_cpu_data()[neg_idx++] = margin - sqrt(dot_.cpu_data()[j * N_ + k]);
        }
      }

      // softmax of the loss aug inference vector (log-sum-exp trick)
      Dtype max_elem = *std::max_element(loss_aug_inference_.cpu_data(),
          loss_aug_inference_.cpu_data() + num_negatives);
      caffe_add_scalar(loss_aug_inference_.count(), Dtype(-1.0) * max_elem,
          loss_aug_inference_.mutable_cpu_data());
      caffe_exp(loss_aug_inference_.count(), loss_aug_inference_.mutable_cpu_data(),
          loss_aug_inference_.mutable_cpu_data());
      Dtype sum_exp = caffe_cpu_dot(num_negatives, summer_vec_.cpu_data(),
          loss_aug_inference_.cpu_data());
      Dtype soft_maximum = log(sum_exp) + max_elem;

      Dtype this_loss = std::max(soft_maximum + dist_pos, Dtype(0.0));
      loss += this_loss * this_loss;  // squared hinge
      num_constraints += Dtype(1.0);

      // 3. compute gradients (unscaled; Backward_cpu applies top_diff / (2 * num_constraints))
      // positive distance dJ_dD_{ij}: update x_i, x_j
      Dtype scaler = Dtype(2.0) * this_loss / dist_pos;
      caffe_axpy(K_, scaler, blob_pos_diff_.cpu_data(), bout + i * K_);
      caffe_axpy(K_, -scaler, blob_pos_diff_.cpu_data(), bout + j * K_);

      // negative distance dJ_dD_{ik}: update x_i, x_k
      neg_idx = 0;
      for (int k = 0; k < N_; k++) {
        if (!label_mat[i][k]) {
          caffe_sub(K_, bin + i * K_, bin + k * K_, blob_neg_diff_.mutable_cpu_data());
          Dtype dJ_dDik = Dtype(-2.0) * this_loss * loss_aug_inference_.cpu_data()[neg_idx++] / sum_exp;
          scaler = dJ_dDik / sqrt(dot_.cpu_data()[i * N_ + k]);
          caffe_axpy(K_, scaler, blob_neg_diff_.cpu_data(), bout + i * K_);
          caffe_axpy(K_, -scaler, blob_neg_diff_.cpu_data(), bout + k * K_);
        }
      }
      // negative distance dJ_dD_{jk}: update x_j, x_k
      for (int k = 0; k < N_; k++) {
        if (!label_mat[j][k]) {
          caffe_sub(K_, bin + j * K_, bin + k * K_, blob_neg_diff_.mutable_cpu_data());
          Dtype dJ_dDjk = Dtype(-2.0) * this_loss * loss_aug_inference_.cpu_data()[neg_idx++] / sum_exp;
          scaler = dJ_dDjk / sqrt(dot_.cpu_data()[j * N_ + k]);
          caffe_axpy(K_, scaler, blob_neg_diff_.cpu_data(), bout + j * K_);
          caffe_axpy(K_, -scaler, blob_neg_diff_.cpu_data(), bout + k * K_);
        }
      }
    }  // close this positive pair
  }

  // A batch without positive pairs would otherwise give 0 / 0 = NaN.
  if (num_constraints > Dtype(0)) {
    loss = loss / num_constraints / Dtype(2.0);
  }
  top[0]->mutable_cpu_data()[0] = loss;
}

template <typename Dtype>
void LiftedStructSimilaritySoftmaxLossLayer<Dtype>::Backward_cpu(
    const vector<Blob<Dtype>*>& top, const vector<bool>& propagate_down,
    const vector<Blob<Dtype>*>& bottom) {
  // Forward_cpu already stored the unscaled gradients in bottom[0]'s diff;
  // here they are only scaled by top_diff / (2 * num_constraints).
  if (num_constraints == Dtype(0)) return;  // diff is already all zeros
  const Dtype alpha = top[0]->cpu_diff()[0] / num_constraints / Dtype(2.0);
  caffe_scal(bottom[0]->count(), alpha, bottom[0]->mutable_cpu_diff());
}

// No GPU implementation: Layer's default Forward_gpu/Backward_gpu fall back to
// the CPU versions. STUB_GPU is not used because it would define
// Forward_gpu/Backward_gpu members that this class never declares.
INSTANTIATE_CLASS(LiftedStructSimilaritySoftmaxLossLayer);
REGISTER_LAYER_CLASS(LiftedStructSimilaritySoftmaxLoss);

}  // namespace caffe
```

5. Implementation details of the lifted struct similarity softmax layer

This section maps the formulas in the paper onto the source code.

```cpp
const int channels = bottom[0]->channels();  // channels = 64 (feature dimension)
for (int i = 0; i < bottom[0]->num(); i++) {  // i = 0, ..., m-1
  // dot product computed on the CPU: ||f(x_i)||^2
  dist_sq_.mutable_cpu_data()[i] = caffe_cpu_dot(channels, bottom[0]->cpu_data() + (i*channels), bottom[0]->cpu_data() + (i*channels));
}
```

dist_sq_[i]: ${ ||f(\mathbf{x} _i)|| _2^2 }$

dist_sq_: ${ \hat{\mathbf{x}} = [||f(\mathbf{x} _1)|| _2^2, ..., ||f(\mathbf{x} _m)|| _2^2]^T }$

```cpp
int M_ = bottom[0]->num();       // M_ = m
int N_ = bottom[0]->num();       // N_ = m
int K_ = bottom[0]->channels();  // K_ = 64
const Dtype* bottom_data1 = bottom[0]->cpu_data();  // (m, 64, 1, 1)
const Dtype* bottom_data2 = bottom[0]->cpu_data();  // (m, 64, 1, 1)
Dtype dot_scaler(-2.0);
caffe_cpu_gemm<Dtype>(CblasNoTrans, CblasTrans, M_, N_, K_, dot_scaler, bottom_data1, bottom_data2, (Dtype)0., dot_.mutable_cpu_data());
```

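```cpp
template <typename Dtype>
void caffe_cpu_gemm(const CBLAS_TRANSPOSE TransA, const CBLAS_TRANSPOSE TransB,
    const int M, const int N, const int K, const Dtype alpha, const Dtype* A,
    const Dtype* B, const Dtype beta, Dtype* C);
```

Purpose: C = alpha * op(A) * op(B) + beta * C, where op(A) is A or A^T depending on TransA (likewise for B).

A, B, C: the matrices, each stored as a one-dimensional array.

CblasRowMajor: the data are row-major (2-D matrices are stored in 1-D arrays); caffe_cpu_gemm always passes this layout to BLAS.

TransA, TransB: whether A and B are transposed (CblasTrans or CblasNoTrans).

M: number of rows of op(A) and of C.

N: number of columns of op(B) and of C.

K: number of columns of op(A) and number of rows of op(B).

lda: leading dimension (row stride) of A as stored; caffe_cpu_gemm sets it to K when A is not transposed and to M when it is.

ldb: leading dimension of B as stored; N when B is not transposed and K when it is. In this call TransB = CblasTrans, so ldb = K_ = 64.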
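To make these argument conventions concrete, here is a naive, unoptimised C++ reference that follows the same argument order as caffe_cpu_gemm, assuming row-major storage. `Trans`, `naive_gemm` and `minus_two_gram` are hypothetical names used only for illustration (not Caffe functions); the lda/ldb rule mirrors the one described above.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

enum class Trans { No, Yes };  // hypothetical stand-in for CBLAS_TRANSPOSE

// Naive row-major reference for C = alpha * op(A) * op(B) + beta * C.
// op(A) is M x K, op(B) is K x N, C is M x N.
template <typename T>
void naive_gemm(Trans ta, Trans tb, int M, int N, int K, T alpha,
                const T* A, const T* B, T beta, T* C) {
  const int lda = (ta == Trans::No) ? K : M;  // A stored as M x K, or K x M if transposed
  const int ldb = (tb == Trans::No) ? N : K;  // B stored as K x N, or N x K if transposed
  for (int m = 0; m < M; ++m) {
    for (int n = 0; n < N; ++n) {
      T acc = 0;
      for (int k = 0; k < K; ++k) {
        const T a = (ta == Trans::No) ? A[m * lda + k] : A[k * lda + m];
        const T b = (tb == Trans::No) ? B[k * ldb + n] : B[n * ldb + k];
        acc += a * b;
      }
      // Like BLAS, do not read C when beta == 0.
      C[m * N + n] = (beta == T(0)) ? alpha * acc : alpha * acc + beta * C[m * N + n];
    }
  }
}

// The call made in Forward_cpu: dot = -2 * X * X^T for X of shape (m, d).
std::vector<double> minus_two_gram(const std::vector<double>& X, int m, int d) {
  std::vector<double> dot(m * m, 0.0);
  naive_gemm(Trans::No, Trans::Yes, m, m, d, -2.0, X.data(), X.data(), 0.0, dot.data());
  return dot;
}
```

With X = [[1, 2], [3, 4]], minus_two_gram returns -2XX^T = [[-10, -22], [-22, -50]], the same matrix dot_ holds right after the gemm call.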

Here this computes, with X the m × 64 feature matrix:

${ \mathrm{dot\_} = -2 X X^T }$

```cpp
// add ||x_i||^2 to all elements in row i
for (int i=0; i<N_; i++){  // N_ = m
  caffe_axpy(N_, dist_sq_.cpu_data()[i], ones_.cpu_data(), dot_.mutable_cpu_data() + i*N_);
}
// add the norm vector to row i
for (int i=0; i<N_; i++){
  caffe_axpy(N_, Dtype(1.0), dist_sq_.cpu_data(), dot_.mutable_cpu_data() + i*N_);  // may leave small negative values
  caffe_abs(N_, dot_.mutable_cpu_data() + i*N_, dot_.mutable_cpu_data() + i*N_);  // take the absolute value
}
```

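Here this computes:

${ D^2 = \hat{\mathbf{x}} \mathbf{1}^T + \mathbf{1} \hat{\mathbf{x}}^T - 2XX^T }$

${ D_{ij}^2 = ||f(\mathbf{x}_i) - f(\mathbf{x}_j)||_2^2 }$

Mathematically ${ D^2 }$ is non-negative, but in floating point the subtraction can leave small negative entries (for example on the diagonal, where ${ D_{ii}^2 }$ should be exactly 0). Because a negative entry would make the later sqrt return NaN, the source applies caffe_abs to every row.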
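A small C++ sketch of the same identity, using plain std::vector instead of Blob (the function names are hypothetical): `pairwise_sq_dist` builds ${ D^2 }$ from the norms and dot products, including the abs() step, and `direct_sq_dist` evaluates ${ ||x_i - x_j||^2 }$ directly for comparison.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Pairwise squared distances for m rows of dimension d, the way Forward_cpu
// builds them: D2_ij = xhat_i + xhat_j - 2 * <x_i, x_j>, then abs().
std::vector<double> pairwise_sq_dist(const std::vector<double>& X, int m, int d) {
  std::vector<double> xhat(m, 0.0);  // xhat_i = ||x_i||^2
  for (int i = 0; i < m; ++i)
    for (int c = 0; c < d; ++c) xhat[i] += X[i * d + c] * X[i * d + c];

  std::vector<double> D2(m * m, 0.0);
  for (int i = 0; i < m; ++i) {
    for (int j = 0; j < m; ++j) {
      double dot = 0.0;
      for (int c = 0; c < d; ++c) dot += X[i * d + c] * X[j * d + c];
      // abs() removes tiny negative values left by round-off
      D2[i * m + j] = std::fabs(xhat[i] + xhat[j] - 2.0 * dot);
    }
  }
  return D2;
}

// Direct definition ||x_i - x_j||^2, for comparison.
double direct_sq_dist(const std::vector<double>& X, int d, int i, int j) {
  double s = 0.0;
  for (int c = 0; c < d; ++c) {
    const double diff = X[i * d + c] - X[j * d + c];
    s += diff * diff;
  }
  return s;
}
```

For the points (0, 0), (3, 4), (1, 1), both routes give ${ D_{01}^2 = 25 }$ and ${ D_{12}^2 = 13 }$. The Gram-matrix form is what lets the layer compute all ${ m^2 }$ distances with a single gemm call.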

```cpp
// construct pairwise label matrix
vector<vector<bool> > label_mat(N_, vector<bool>(N_, false));
for (int i=0; i<N_; i++){
  for (int j=0; j<N_; j++){
    label_mat[i][j] = (bottom[1]->cpu_data()[i] == bottom[1]->cpu_data()[j]);
  }
}
```

This builds a positive/negative matrix for the samples in the mini-batch: label_mat[i][j] is true (1) when samples i and j share a label and false (0) otherwise.

Forward pass:

```cpp
// loop over the upper triangle of label_mat and look for positive anchors
for (int i=0; i<N_; i++){
  for (int j=i+1; j<N_; j++){
    // if label_mat[i][j] is true, a positive pair @ anchor (i, j) has been found
    if (label_mat[i][j]){
      Dtype dist_pos = sqrt(dot_.cpu_data()[i*N_ + j] + 2e-10);  // D_{ij}
      caffe_sub(K_, bin + i*K_, bin + j*K_, blob_pos_diff_.mutable_cpu_data());
      // blob_pos_diff_ = x_i - x_j, i.e. [bin + i*K_] - [bin + j*K_]
```

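dist_pos is ${ D_{ij} }$. If two samples are very similar, their feature vectors can coincide, giving ${ D_{ij} = 0 }$. Since dist_pos is later used as a divisor in the gradient, a small constant 2e-10 is added under the square root.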
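The effect of the 2e-10 term can be isolated. The hypothetical helper below reproduces one feature component of the positive-pair update, scaler * (x_i - x_j) with scaler = 2 * this_loss / dist_pos, assuming ordinary IEEE-754 arithmetic (no -ffast-math):

```cpp
#include <cassert>
#include <cmath>

// One component of the positive-pair gradient update in Forward_cpu.
// d2 is the squared distance D_ij^2, diff is (x_i - x_j) for this component,
// eps is the constant added under the square root (2e-10 in the source, 0 = unguarded).
double scaled_diff(double this_loss, double diff, double d2, double eps) {
  const double dist_pos = std::sqrt(d2 + eps);
  const double scaler = 2.0 * this_loss / dist_pos;  // inf when dist_pos == 0
  return scaler * diff;                              // inf * 0 is NaN
}
```

Without the constant, a coincident pair (d2 = 0, diff = 0) yields inf * 0 = NaN, which would then spread into the weights; with it, the update is simply 0, while for ordinary distances the constant has no visible effect.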

caffe_sub computes y[i] = a[i] - b[i]; its float specialization is:

```cpp
template <>
void caffe_sub<float>(const int n, const float* a, const float* b, float* y) { vsSub(n, a, b, y); }
```

```cpp
// 1. count the negatives of positive sample i
int num_negatives = 0;
for (int k=0; k<N_; k++){
  if (!label_mat[i][k]){
    num_negatives += 1;
  }
}
// count the negatives of positive sample j
for (int k=0; k<N_; k++){
  if (!label_mat[j][k]){
    num_negatives += 1;
  }
}
loss_aug_inference_.Reshape(num_negatives, 1, 1, 1);
// vector of ones used to sum along channels
summer_vec_.Reshape(num_negatives, 1, 1, 1);
for (int ss = 0; ss < num_negatives; ++ss){
  summer_vec_.mutable_cpu_data()[ss] = Dtype(1);
}
```

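This counts the total number of negatives for the positive pair (i, j): the negatives of i plus the negatives of j. Because i and j share a label, every negative sample k is counted twice, once paired with i and once paired with j.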
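The label-matrix construction, the upper-triangle scan and the negative count can be replayed on a plain label vector. This is a minimal sketch; `PairInfo` and `positive_pairs` are hypothetical names used only for illustration.

```cpp
#include <cassert>
#include <vector>

struct PairInfo {
  int i;
  int j;
  int num_negatives;  // negatives of i plus negatives of j
};

// For every positive pair (i, j) with i < j, count the negatives collected
// for it the same way Forward_cpu does.
std::vector<PairInfo> positive_pairs(const std::vector<int>& labels) {
  const int n = static_cast<int>(labels.size());
  std::vector<std::vector<bool>> label_mat(n, std::vector<bool>(n, false));
  for (int i = 0; i < n; ++i)
    for (int j = 0; j < n; ++j) label_mat[i][j] = (labels[i] == labels[j]);

  std::vector<PairInfo> pairs;
  for (int i = 0; i < n; ++i) {
    for (int j = i + 1; j < n; ++j) {  // upper triangle only
      if (!label_mat[i][j]) continue;  // not a positive pair
      int num_negatives = 0;
      for (int k = 0; k < n; ++k) if (!label_mat[i][k]) ++num_negatives;
      for (int k = 0; k < n; ++k) if (!label_mat[j][k]) ++num_negatives;
      pairs.push_back({i, j, num_negatives});
    }
  }
  return pairs;
}
```

For labels {0, 0, 1, 1, 1}, the pair (0, 1) gets 3 + 3 = 6 negatives and each of the pairs (2, 3), (2, 4), (3, 4) gets 2 + 2 = 4.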

```cpp
// 2. compute the loss
int neg_idx = 0;
// mine negative (anchor i, neg k)
for (int k=0; k<N_; k++){
  if (!label_mat[i][k]){
    loss_aug_inference_.mutable_cpu_data()[neg_idx] = margin - sqrt(dot_.cpu_data()[i*N_ + k]);  // margin - D_{i,k}
    neg_idx++;
  }
}
// mine negative (anchor j, neg k)
for (int k=0; k<N_; k++){
  if (!label_mat[j][k]){
    loss_aug_inference_.mutable_cpu_data()[neg_idx] = margin - sqrt(dot_.cpu_data()[j*N_ + k]);  // margin - D_{j,k}
    neg_idx++;
  }
}
// compute softmax of loss aug inference vector;
Dtype max_elem = *std::max_element(loss_aug_inference_.cpu_data(), loss_aug_inference_.cpu_data() + num_negatives);
caffe_add_scalar(loss_aug_inference_.count(), Dtype(-1.0)*max_elem, loss_aug_inference_.mutable_cpu_data());
caffe_exp(loss_aug_inference_.count(), loss_aug_inference_.mutable_cpu_data(), loss_aug_inference_.mutable_cpu_data());
Dtype soft_maximum = log(caffe_cpu_dot(num_negatives, summer_vec_.cpu_data(), loss_aug_inference_.mutable_cpu_data())) + max_elem;
// hinge on soft_maximum + D_ij (positive pair distance)
Dtype this_loss = std::max(soft_maximum + dist_pos, Dtype(0.0));
// squared hinge
loss += this_loss * this_loss;
num_constraints += Dtype(1.0);
```

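The main computations here are:

- ${ \alpha - D_{i,k} }$ between positive i and each negative k
- ${ \alpha - D_{j,k} }$ between positive j and each negative k
- the log-sum-exp trick: subtracting max_elem before exponentiating keeps exp() from overflowing, and adding it back after the log leaves the result unchanged

soft_maximum (sm):

${ sm = \log\left(\sum_{(i,k) \in \mathcal{N}} \exp(\alpha - D_{i,k}) + \sum_{(j,l) \in \mathcal{N}} \exp(\alpha - D_{j,l})\right) }$

this_loss:

${ \hat{J}_{i,j} = \max(0, sm + D_{i,j}) }$

loss accumulates the squared hinge:

${ \hat{J}_{i,j}^2 = \max(0, sm + D_{i,j})^2 }$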
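A minimal C++ sketch of the log-sum-exp step and the hinge on a plain std::vector; `log_sum_exp` and `pair_hinge` are hypothetical names, and the vector is assumed to already hold ${ \alpha - D }$ for all negatives of i followed by those of j, as loss_aug_inference_ does.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Numerically stable log(sum_k exp(v_k)): shift by the maximum before
// exponentiating, as Forward_cpu does with max_elem.
double log_sum_exp(const std::vector<double>& v) {
  assert(!v.empty());  // a pair without negatives has no soft maximum
  const double max_elem = *std::max_element(v.begin(), v.end());
  double sum = 0.0;
  for (double x : v) sum += std::exp(x - max_elem);
  return std::log(sum) + max_elem;
}

// this_loss = max(0, sm + D_ij) for one positive pair.
double pair_hinge(const std::vector<double>& v, double dist_pos) {
  return std::max(log_sum_exp(v) + dist_pos, 0.0);
}
```

Computing exp(1000.0) directly overflows to inf, whereas log_sum_exp({1000.0, 1000.0}) stays finite at 1000 + log 2.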

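The final loss is:

```cpp
loss = loss / num_constraints / Dtype(2.0);
```

${ J = \frac{1}{2|\mathcal{P}|} \sum_{(i,j) \in \mathcal{P}} \max(0, sm + D_{i,j})^2 }$

where num_constraints ${ = |\mathcal{P}| }$ is the number of positive pairs in the batch.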

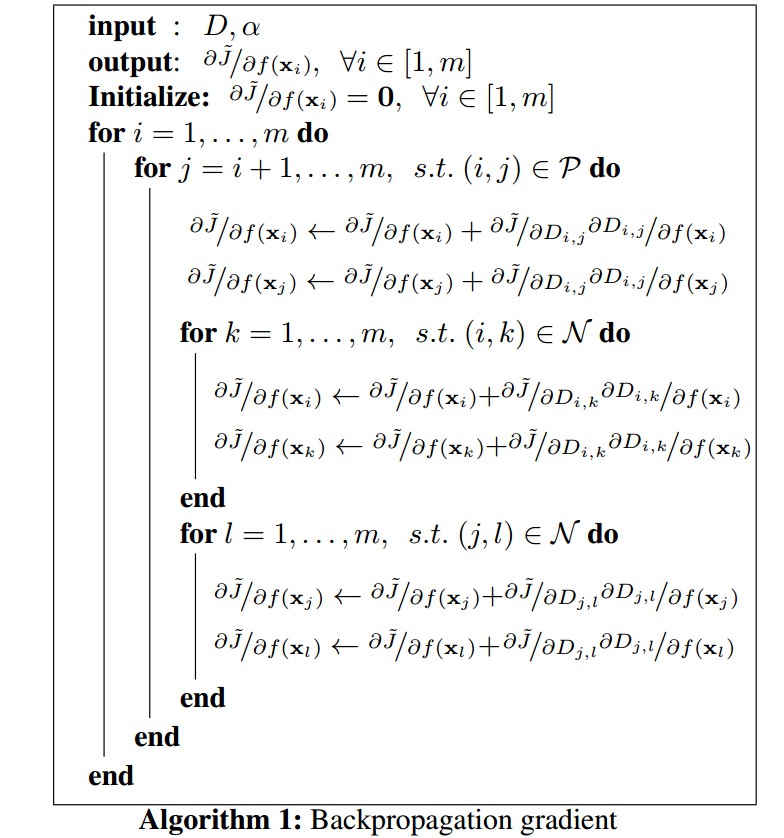
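When testing a modified layer, a straightforward reference of the whole loss can help. The sketch below is an illustrative re-derivation, not the project's code: the name `lifted_struct_loss` is hypothetical, it uses direct distances instead of the Gram-matrix trick, omits the 2e-10 term, skips pairs without negatives, and returns 0 when the batch has no positive pair.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// J = 1/(2|P|) * sum_{(i,j) in P} max(0, sm + D_ij)^2 for m rows of dimension d.
double lifted_struct_loss(const std::vector<double>& X, const std::vector<int>& labels,
                          int d, double alpha) {
  const int m = static_cast<int>(labels.size());
  auto dist = [&](int a, int b) {
    double s = 0.0;
    for (int c = 0; c < d; ++c) {
      const double diff = X[a * d + c] - X[b * d + c];
      s += diff * diff;
    }
    return std::sqrt(s);
  };
  double loss = 0.0;
  int num_pos = 0;  // |P|
  for (int i = 0; i < m; ++i) {
    for (int j = i + 1; j < m; ++j) {
      if (labels[i] != labels[j]) continue;
      std::vector<double> v;  // alpha - D for the negatives of i, then of j
      for (int k = 0; k < m; ++k) if (labels[k] != labels[i]) v.push_back(alpha - dist(i, k));
      for (int k = 0; k < m; ++k) if (labels[k] != labels[j]) v.push_back(alpha - dist(j, k));
      if (v.empty()) continue;  // single-class batch: no negatives
      const double mx = *std::max_element(v.begin(), v.end());
      double s = 0.0;
      for (double x : v) s += std::exp(x - mx);
      const double J_ij = std::log(s) + mx + dist(i, j);  // sm + D_ij
      const double h = std::max(J_ij, 0.0);
      loss += h * h;
      ++num_pos;
    }
  }
  return num_pos > 0 ? loss / num_pos / 2.0 : 0.0;
}
```

For the 1-D batch {0, 0, 0.5} with labels {0, 0, 1} and ${ \alpha = 1 }$, the single positive pair has ${ D_{0,1} = 0 }$ and two negatives at distance 0.5, so ${ sm = 0.5 + \log 2 }$ and ${ J = (0.5 + \log 2)^2 / 2 }$.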

Backward pass (gradient computation, which the source performs inside Forward_cpu):

```cpp
// 3. compute gradients
Dtype sum_exp = caffe_cpu_dot(num_negatives, summer_vec_.cpu_data(), loss_aug_inference_.mutable_cpu_data());
// update from positive distance dJ_dD_{ij}; update x_i, x_j
Dtype scaler(0.0);
scaler = Dtype(2.0)*this_loss / dist_pos;
// update x_i
caffe_axpy(K_, scaler * Dtype(1.0), blob_pos_diff_.cpu_data(), bout + i*K_);
// update x_j
caffe_axpy(K_, scaler * Dtype(-1.0), blob_pos_diff_.cpu_data(), bout + j*K_);
// update from negative distance dJ_dD_{ik}; update x_i, x_k
neg_idx = 0;
Dtype dJ_dDik(0.0);
for (int k=0; k<N_; k++){
  if (!label_mat[i][k]){
    caffe_sub(K_, bin + i*K_, bin + k*K_, blob_neg_diff_.mutable_cpu_data());
    dJ_dDik = Dtype(2.0)*this_loss * Dtype(-1.0)* loss_aug_inference_.cpu_data()[neg_idx] / sum_exp;
    neg_idx++;
    scaler = dJ_dDik / sqrt(dot_.cpu_data()[i*N_ + k]);
    // update x_i
    caffe_axpy(K_, scaler * Dtype(1.0), blob_neg_diff_.cpu_data(), bout + i*K_);
    // update x_k
    caffe_axpy(K_, scaler * Dtype(-1.0), blob_neg_diff_.cpu_data(), bout + k*K_);
  }
}
// update from negative distance dJ_dD_{jk}; update x_j, x_k
Dtype dJ_dDjk(0.0);
for (int k=0; k<N_; k++){
  if (!label_mat[j][k]){
    caffe_sub(K_, bin + j*K_, bin + k*K_, blob_neg_diff_.mutable_cpu_data());
    dJ_dDjk = Dtype(2.0)*this_loss * Dtype(-1.0)*loss_aug_inference_.cpu_data()[neg_idx] / sum_exp;
    neg_idx++;
    scaler = dJ_dDjk / sqrt(dot_.cpu_data()[j*N_ + k]);
    // update x_j
    caffe_axpy(K_, scaler * Dtype(1.0), blob_neg_diff_.cpu_data(), bout + j*K_);
    // update x_k
    caffe_axpy(K_, scaler * Dtype(-1.0), blob_neg_diff_.cpu_data(), bout + k*K_);
  }
}
} // close this positive pair
```

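dist_pos: ${ D_{i,j} }$

Write ${ J_{i,j} = sm + D_{i,j} }$, so this_loss is ${ \max(0, J_{i,j}) }$. The per-pair term ${ \max(0, J_{i,j})^2 }$ has derivative ${ 2\max(0, J_{i,j}) }$ with respect to ${ D_{i,j} }$, and Backward_cpu later multiplies by ${ \frac{1}{2|\mathcal{P}|} }$, so for the full loss

${ \frac{\partial J}{\partial D_{i,j}} = \frac{1}{|\mathcal{P}|} \max(0, J_{i,j}) }$

The scaler = Dtype(2.0)*this_loss / dist_pos is this derivative (before the ${ \frac{1}{2|\mathcal{P}|} }$ factor) divided by ${ D_{i,j} }$; the division supplies the ${ 1/D_{i,j} }$ of the chain rule below.

Gradient of the loss ${ J }$ with respect to ${ f(\mathbf{x}_i) }$:

${ \frac{\partial J}{\partial f(\mathbf{x}_i)} = \frac{\partial J}{\partial D_{i,j}} \cdot \frac{\partial D_{i,j}}{\partial f(\mathbf{x}_i)} = \frac{\partial J}{\partial D_{i,j}} \cdot \frac{f(\mathbf{x}_i) - f(\mathbf{x}_j)}{D_{i,j}} }$

Gradient of the loss ${ J }$ with respect to ${ f(\mathbf{x}_j) }$:

${ \frac{\partial J}{\partial f(\mathbf{x}_j)} = \frac{\partial J}{\partial D_{i,j}} \cdot \frac{\partial D_{i,j}}{\partial f(\mathbf{x}_j)} = -\frac{\partial J}{\partial D_{i,j}} \cdot \frac{f(\mathbf{x}_i) - f(\mathbf{x}_j)}{D_{i,j}} }$

Gradient of the loss ${ J }$ with respect to a negative distance ${ D_{i,k} }$:

${ \frac{\partial J}{\partial D_{i,k}} = \frac{1}{|\mathcal{P}|} \max(0, J_{i,j}) \cdot \frac{-\exp(\alpha - D_{i,k})}{\exp(J_{i,j} - D_{i,j})} }$

Since ${ \exp(J_{i,j} - D_{i,j}) = \exp(sm) }$ is the sum of all the exponentials, the fraction equals loss_aug_inference_.cpu_data()[neg_idx] / sum_exp in the code (the max_elem shift cancels). The gradients with respect to ${ f(\mathbf{x}_i) }$ and ${ f(\mathbf{x}_k) }$ then follow from the same chain rule with ${ (f(\mathbf{x}_i) - f(\mathbf{x}_k)) / D_{i,k} }$, and analogously for ${ D_{j,l} }$.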

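Backward-pass flow: Backward_cpu does not recompute the gradients. Forward_cpu has already accumulated the unscaled per-pair gradients into bottom[0]'s diff, and Backward_cpu only multiplies them by top_diff / (2 · num_constraints), which supplies the ${ \frac{1}{2|\mathcal{P}|} }$ factor of the loss.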
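The gradient formulas can be checked numerically. The sketch below (hypothetical function name, std::vector instead of Blob, top_diff assumed to be 1) accumulates the gradient the way Forward_cpu does, applies the ${ \frac{1}{2|\mathcal{P}|} }$ scaling of Backward_cpu, and returns the loss so that the result can be compared against central finite differences. It assumes distinct points, since the 2e-10 guard is omitted.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Returns the lifted structured loss and writes dJ/dX into *grad.
double loss_and_grad(const std::vector<double>& X, const std::vector<int>& y, int d,
                     double alpha, std::vector<double>* grad) {
  const int m = static_cast<int>(y.size());
  auto dist = [&](int a, int b) {
    double s = 0.0;
    for (int c = 0; c < d; ++c) {
      const double diff = X[a * d + c] - X[b * d + c];
      s += diff * diff;
    }
    return std::sqrt(s);
  };
  // Adds scale * (x_a - x_b) to row a and subtracts it from row b
  // (chain rule through dD_ab/dx_a = (x_a - x_b) / D_ab; scale carries 1/D_ab).
  auto push = [&](int a, int b, double scale) {
    for (int c = 0; c < d; ++c) {
      const double diff = X[a * d + c] - X[b * d + c];
      (*grad)[a * d + c] += scale * diff;
      (*grad)[b * d + c] -= scale * diff;
    }
  };
  grad->assign(X.size(), 0.0);
  double loss = 0.0;
  int num_pos = 0;
  for (int i = 0; i < m; ++i) {
    for (int j = i + 1; j < m; ++j) {
      if (y[i] != y[j]) continue;    // positive pairs, upper triangle only
      std::vector<int> anchor, neg;  // negatives of i, then negatives of j
      for (int k = 0; k < m; ++k) if (y[k] != y[i]) { anchor.push_back(i); neg.push_back(k); }
      for (int k = 0; k < m; ++k) if (y[k] != y[j]) { anchor.push_back(j); neg.push_back(k); }
      if (neg.empty()) continue;
      std::vector<double> e;  // alpha - D, then exp(alpha - D - max)
      for (std::size_t n = 0; n < neg.size(); ++n) e.push_back(alpha - dist(anchor[n], neg[n]));
      const double mx = *std::max_element(e.begin(), e.end());
      double sum_exp = 0.0;
      for (double& v : e) { v = std::exp(v - mx); sum_exp += v; }
      const double d_ij = dist(i, j);
      const double h = std::max(std::log(sum_exp) + mx + d_ij, 0.0);  // this_loss
      loss += h * h;
      ++num_pos;
      if (h == 0.0) continue;      // inactive hinge: zero gradient
      push(i, j, 2.0 * h / d_ij);  // positive term dJ/dD_ij
      for (std::size_t n = 0; n < neg.size(); ++n)  // negative terms dJ/dD_ik, dJ/dD_jl
        push(anchor[n], neg[n], -2.0 * h * e[n] / sum_exp / dist(anchor[n], neg[n]));
    }
  }
  if (num_pos == 0) return 0.0;
  for (double& g : *grad) g /= 2.0 * num_pos;  // the 1/(2|P|) factor from Backward_cpu
  return loss / num_pos / 2.0;
}
```

Perturbing each coordinate by ±1e-6 and comparing (J(X + h) - J(X - h)) / 2h with the returned gradient is a cheap way to validate a modified Forward_cpu before training with it.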
