Earlier posts tested YOLOv3 and SSD object detection with OpenCV DNN. This post gives a brief implementation of Faster RCNN with the same DNN module.

1. Faster RCNN model download

Download the model weights file and config file directly from the links provided by OpenCV DNN:

| Model | Version | Weights | Config |
|---|---|---|---|
| Faster-RCNN Inception v2 | 2018_01_28 | weights | config |
| Faster-RCNN ResNet-50 | 2018_01_28 | weights | config |

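Alternatively, convert the model yourself by following the instructions in "Converting TensorFlow Object Detection Models to an OpenCV DNN-Callable Format - AIUAI". Use this approach for models trained with TensorFlow on a custom dataset.

Here the faster_rcnn_resnet50_coco_2018_01_28 model is used as the example: its graph.pbtxt file is generated manually and then tested.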
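As a rough illustration of the manual conversion step, the sketch below assembles the command line for OpenCV's `tf_text_graph_faster_rcnn.py` script (from the `samples/dnn` directory of the OpenCV repository). It assumes the script has been copied next to your code and that the model directory contains `pipeline.config`; `build_pbtxt_command` is a hypothetical helper name, not part of OpenCV.

```python
import os
import sys


def build_pbtxt_command(model_dir, script="tf_text_graph_faster_rcnn.py"):
    """Return the argument list that generates graph.pbtxt for a frozen model.

    Assumption: `script` points to OpenCV's tf_text_graph_faster_rcnn.py and
    `model_dir` holds frozen_inference_graph.pb and pipeline.config.
    """
    return [
        sys.executable, script,
        "--input", os.path.join(model_dir, "frozen_inference_graph.pb"),
        "--config", os.path.join(model_dir, "pipeline.config"),
        "--output", os.path.join(model_dir, "graph.pbtxt"),
    ]


if __name__ == "__main__":
    cmd = build_pbtxt_command("/path/to/faster_rcnn_resnet50_coco_2018_01_28")
    # Print the command instead of running it; to execute it, pass `cmd`
    # to subprocess.run(cmd, check=True).
    print(" ".join(cmd))
```

Building the argument list separately makes it easy to inspect the paths before anything is executed.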

2. Faster RCNN DNN implementation 1

#!/usr/bin/python
# -*- coding: utf-8 -*-
import cv2
import matplotlib.pyplot as plt

pb_file = '/path/to/faster_rcnn_resnet50_coco_2018_01_28/frozen_inference_graph.pb'
pbtxt_file = '/path/to/faster_rcnn_resnet50_coco_2018_01_28/graph.pbtxt'
net = cv2.dnn.readNetFromTensorflow(pb_file, pbtxt_file)

score_threshold = 0.3

img_file = "test.jpg"
img_cv2 = cv2.imread(img_file)
height, width, _ = img_cv2.shape

# Build a 4D blob; swapRB converts OpenCV's BGR channel order to RGB.
net.setInput(cv2.dnn.blobFromImage(img_cv2,
                                   size=(300, 300),
                                   swapRB=True,
                                   crop=False))
out = net.forward()
print(out)

# Each detection row: [batch_id, class_id, score, left, top, right, bottom],
# with box coordinates normalized to [0, 1].
for detection in out[0, 0, :, :]:
    score = float(detection[2])
    if score > score_threshold:
        left = detection[3] * width
        top = detection[4] * height
        right = detection[5] * width
        bottom = detection[6] * height
        cv2.rectangle(img_cv2,
                      (int(left), int(top)),
                      (int(right), int(bottom)),
                      (23, 230, 210),
                      thickness=2)

# Overlay the inference time reported by the network.
t, _ = net.getPerfProfile()
label = 'Inference time: %.2f ms' % \
        (t * 1000.0 / cv2.getTickFrequency())
cv2.putText(img_cv2, label, (0, 15),
            cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 0, 255))

plt.figure(figsize=(10, 8))
plt.imshow(img_cv2[:, :, ::-1])  # BGR -> RGB for matplotlib
plt.title("OpenCV DNN Faster RCNN-ResNet50")
plt.axis("off")
plt.show()
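The script above only draws boxes and does not keep the class id of each detection. As a minimal sketch, the decoding of the output blob can be pulled into a function that also returns the class id, so a label can be looked up afterwards. The function name `decode_detections` and the synthetic `fake_out` array are illustrative assumptions; the row layout [batch_id, class_id, score, left, top, right, bottom] is the one used by the code in this post.

```python
import numpy as np


def decode_detections(out, img_width, img_height, score_threshold=0.3):
    """Convert a (1, 1, N, 7) detection blob into pixel-space results."""
    results = []
    for det in out[0, 0]:
        score = float(det[2])
        if score <= score_threshold:
            continue  # skip low-confidence detections
        results.append({
            "class_id": int(det[1]),
            "score": score,
            # Normalized [0, 1] coordinates scaled to the original image size.
            "box": (int(det[3] * img_width), int(det[4] * img_height),
                    int(det[5] * img_width), int(det[6] * img_height)),
        })
    return results


# Synthetic blob standing in for net.forward(): one confident detection
# and one below the threshold.
fake_out = np.array([[[[0, 0, 0.9, 0.25, 0.5, 0.75, 1.0],
                       [0, 16, 0.1, 0.0, 0.0, 1.0, 1.0]]]], dtype=np.float32)
for res in decode_detections(fake_out, img_width=200, img_height=100):
    print(res["class_id"], res["box"])
```

With a real network, `fake_out` would be replaced by the result of `net.forward()`, and `class_id` could index a list of class names to produce a text label.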

3. Faster RCNN DNN implementation 2

#!/usr/bin/python3

# -*- coding: utf-8 -*-

import cv2

import os

import matplotlib.pyplot as plt

import time

class general_faster_rcnn(object):

def __init__(self, modelpath):

self.conf_threshold = 0.3 # Confidence threshold

self.nms_threshold = 0.4 # Non-maximum suppression threshold

self.net_width = 416 # 300 # Width of network's input image

self.net_height = 416 # 300 # Height of network's input image

self.classes = self.get_coco_names()

self.faster_rcnn_model = self.get_faster_rcnn_model(modelpath)

self.outputs_names = self.get_outputs_names()

def get_coco_names(self):

classes = ["person", "bicycle", "car", "motorcycle", "airplane",

"bus", "train", "truck", "boat", "traffic light",

"fire hydrant", "background", "stop sign", "parking meter",

"bench", "bird", "cat", "dog", "horse", "sheep", "cow",

"elephant", "bear", "zebra", "giraffe", "background",

"backpack", "umbrella", "background", "background",

"handbag", "tie", "suitcase", "frisbee", "skis",

"snowboard", "sports ball", "kite", "baseball bat",

"baseball glove", "skateboard", "surfboard", "tennis racket",

"bottle", "background", "wine glass", "cup", "fork", "knife",

"spoon", "bowl", "banana", "apple", "sandwich", "orange",

"broccoli", "carrot", "hot dog", "pizza", "donut", "cake",

"chair", "couch", "potted plant", "bed", "background",

"dining table", "background", "background", "toilet",

"background", "tv", "laptop", "mouse", "remote", "keyboard",

"cell phone", "microwave", "oven", "toaster", "sink",

"refrigerator", "background", "book", "clock", "vase",

"scissors", "teddy bear", "hair drier", "toothbrush",

"background" ]

return classes

def get_faster_rcnn_model(self, modelpath):

pb_file = os.path.join(modelpath, "frozen_inference_graph.pb")

pbtxt_file = os.path.join(modelpath, "graph.pbtxt")

net = cv2.dnn.readNetFromTensorflow(pb_file, pbtxt_file)

net.setPreferableBackend(cv2.dnn.DNN_BACKEND_OPENCV)

net.setPreferableTarget(cv2.dnn.DNN_TARGET_CPU)

return net

def get_outputs_names(self):

# 网络中所有网络层的名字

layersNames = self.faster_rcnn_model.getLayerNames()

# 网络输出层的名字,如,没有链接输出的网络层.

return [layersNames[i[0] - 1] for i in \

self.faster_rcnn_model.getUnconnectedOutLayers()]

# NMS 处理掉低 confidence 的边界框.

def postprocess(self, img_cv2, outputs):

img_height, img_width, _ = img_cv2.shape

class_ids = []

confidences = []

boxes = []

for output in outputs:

for detection in output[0, 0]:

# [batch_id, class_id, confidence, left, top, right, bottom]

confidence = detection[2]

if confidence > self.conf_threshold:

left = int(detection[3]*img_width)

top = int(detection[4]*img_height)

right = int(detection[5]*img_width)

bottom = int(detection[6]*img_height)

width = right - left + 1

height = bottom - top + 1

class_ids.append(int(detection[1]))

confidences.append(float(confidence))

boxes.append([left, top, width, height])

# NMS 处理

indices = cv2.dnn.NMSBoxes(boxes,

confidences,

self.conf_threshold,

self.nms_threshold)

results = []

for ind in indices:

res_box = {}

res_box["class_id"] = class_ids[ind[0]]

res_box["score"] = confidences[ind[0]]

box = boxes[ind[0]]

res_box["box"] = (box[0], box[1], box[0]+box[2], box[1]+box[3])

results.append(res_box)

return results

def predict(self, img_file):

img_cv2 = cv2.imread(img_file)

# 创建 4D blob.

blob = cv2.dnn.blobFromImage(

img_cv2,

size=(self.net_width, self.net_height),

swapRB=True, crop=False)

# 设置网络的输入 blob

self.faster_rcnn_model.setInput(blob)

# 打印网络的输出层名

print("[INFO]Net output layers: {}".format(self.outputs_names))

# Runs forward

outputs = self.faster_rcnn_model.forward(self.outputs_names)

# NMS

results = self.postprocess(img_cv2, outputs)

return results

def vis_res(self, img_file, results):

img_cv2 = cv2.imread(img_file)

for result in results:

left, top, right, bottom = result["box"]

cv2.rectangle(img_cv2,

(left, top),

(right, bottom),

(255, 178, 50), 3)

# Get the label for the class name and its confidence

label = '%.2f' % result["score"]

if self.classes:

assert (result["class_id"] < len(self.classes))

label = '%s:%s' % (self.classes[result["class_id"]], label)

label_size, baseline = cv2.getTextSize(

label, cv2.FONT_HERSHEY_SIMPLEX, 0.5, 1)

top = max(top, label_size[1])

cv2.rectangle(

img_cv2,

(left, top - round(1.5 * label_size[1])),

(left + round(1.5 * label_size[0]), top + baseline),

(255, 0, 0),

cv2.FILLED)

cv2.putText(img_cv2,

label,

(left, top),

cv2.FONT_HERSHEY_SIMPLEX,

0.75, (0, 0, 0), 1)

t, _ = self.faster_rcnn_model.getPerfProfile()

label = 'Inference time: %.2f ms' % \

(t * 1000.0 / cv2.getTickFrequency())

cv2.putText(img_cv2, label, (0, 15),

cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 0, 255))

plt.figure(figsize=(10, 8))

plt.imshow(img_cv2[:,:,::-1])

plt.title("OpenCV DNN Faster RCNN-ResNet50")

plt.axis("off")

plt.show()

if __name__ == '__main__':

print("[INFO]Faster RCNN object detection in OpenCV.")

img_file = "test.jpg"

start = time.time()

modelpath = "/path/to/faster_rcnn_resnet50_coco_2018_01_28/"

faster_rcnn_model = general_faster_rcnn(modelpath)

print("[INFO]Model loads time: ", time.time() - start)

start = time.time()

results = faster_rcnn_model.predict(img_file)

print("[INFO]Model predicts time: ", time.time() - start)

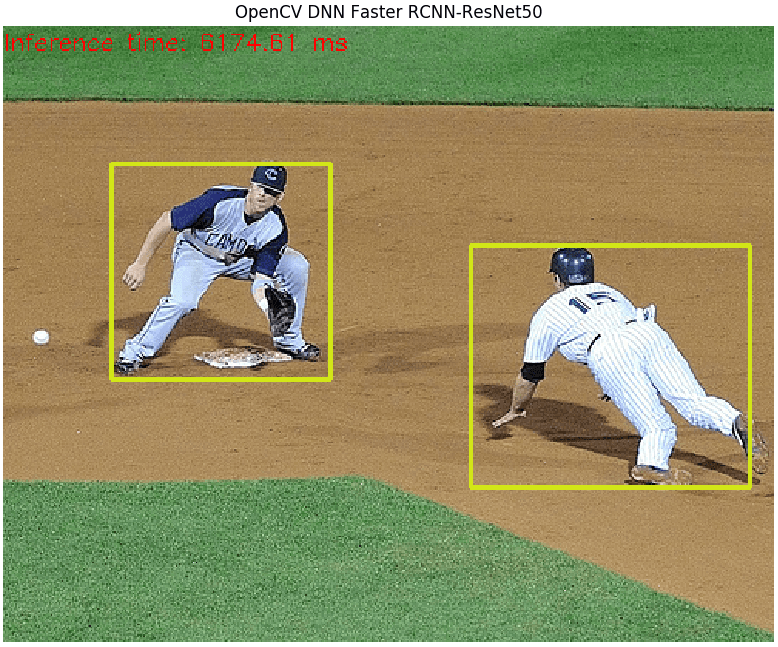

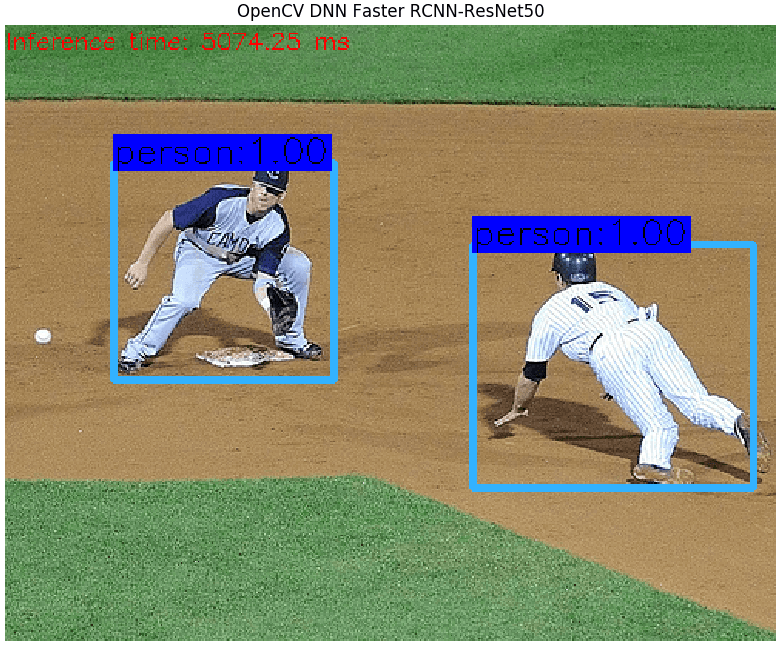

faster_rcnn_model.vis_res(img_file, results)网络输入为 (300, 300) 时,目标检测结果为(与 实现之一 中的结果一致.):

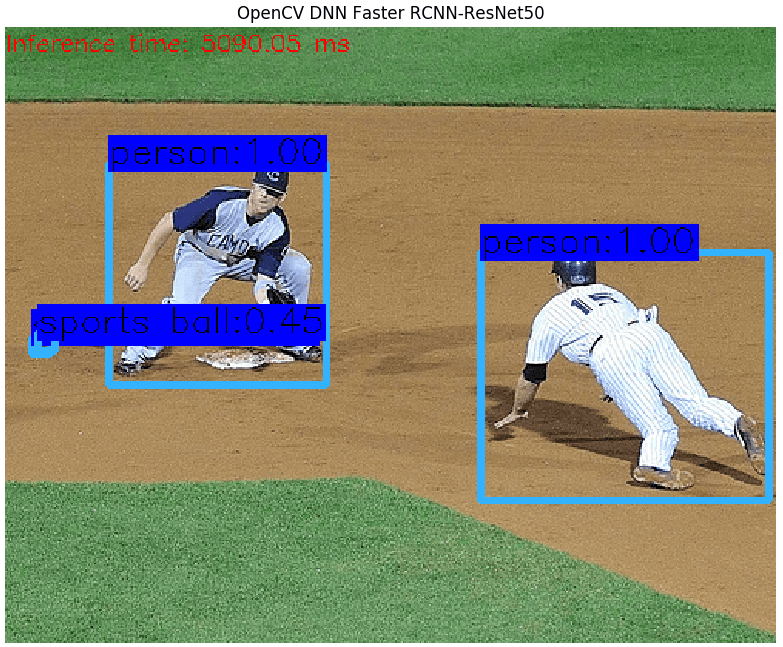

网络输入为 (416, 416) 时,目标检测结果为(提高输入图片分辨率有助于提升检测结果):
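To make the NMS step in `postprocess` more concrete, here is a minimal pure-Python/NumPy sketch of greedy non-maximum suppression over boxes given as [left, top, width, height], the same box layout passed to `cv2.dnn.NMSBoxes` above. It illustrates the general technique only and is not OpenCV's actual implementation; `iou_xywh` and `greedy_nms` are hypothetical names.

```python
import numpy as np


def iou_xywh(a, b):
    """Intersection over union of two [left, top, width, height] boxes."""
    ax2, ay2 = a[0] + a[2], a[1] + a[3]
    bx2, by2 = b[0] + b[2], b[1] + b[3]
    inter_w = max(0, min(ax2, bx2) - max(a[0], b[0]))
    inter_h = max(0, min(ay2, by2) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes, scores, score_threshold, nms_threshold):
    """Return indices of kept boxes, highest score first."""
    # Visit candidates in descending score order, dropping low scores.
    order = [int(i) for i in np.argsort(scores)[::-1]
             if scores[i] > score_threshold]
    keep = []
    for i in order:
        # Keep a box only if it does not overlap a kept box too much.
        if all(iou_xywh(boxes[i], boxes[k]) <= nms_threshold for k in keep):
            keep.append(i)
    return keep


boxes = [[10, 10, 100, 100], [12, 12, 100, 100], [200, 200, 50, 50]]
scores = [0.9, 0.8, 0.7]
print(greedy_nms(boxes, scores, score_threshold=0.3, nms_threshold=0.4))
# prints [0, 2]: box 1 overlaps box 0 heavily and is suppressed
```

Lowering `nms_threshold` suppresses more overlapping boxes; raising it keeps more of them.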

4. Faster RCNN TensorFlow implementation

Test the model with the TensorFlow Object Detection API:

#!/usr/bin/python3

#!--*-- coding:utf-8 --*--

import os

import numpy as np

import cv2

import matplotlib.pyplot as plt

import tensorflow as tf

model_path = "/path/to/faster_rcnn_resnet50_coco_2018_01_28"

frozen_pb_file = os.path.join(model_path, 'frozen_inference_graph.pb')

score_threshold = 0.3

img_file = "test.jpg"

# Read the graph.

with tf.gfile.FastGFile(frozen_pb_file, 'rb') as f:

graph_def = tf.GraphDef()

graph_def.ParseFromString(f.read())

with tf.Session() as sess:

# Restore session

sess.graph.as_default()

tf.import_graph_def(graph_def, name='')

# Read and preprocess an image.

img_cv2 = cv2.imread(img_file)

img_height, img_width, _ = img_cv2.shape

img_in = cv2.resize(img_cv2, (416, 416))

img_in = img_in[:, :, [2, 1, 0]] # BGR2RGB

# Run the model

outputs = sess.run(

[sess.graph.get_tensor_by_name('num_detections:0'),

sess.graph.get_tensor_by_name('detection_scores:0'),

sess.graph.get_tensor_by_name('detection_boxes:0'),

sess.graph.get_tensor_by_name('detection_classes:0')],

feed_dict={'image_tensor:0': img_in.reshape(

1, img_in.shape[0], img_in.shape[1], 3)})

# Visualize detected bounding boxes.

num_detections = int(outputs[0][0])

for i in range(num_detections):

classId = int(outputs[3][0][i])

score = float(outputs[1][0][i])

bbox = [float(v) for v in outputs[2][0][i]]

if score > score_threshold:

x = bbox[1] * img_width

y = bbox[0] * img_height

right = bbox[3] * img_width

bottom = bbox[2] * img_height

cv2.rectangle(img_cv2,

(int(x), int(y)),

(int(right), int(bottom)),

(125, 255, 51),

thickness=2)

plt.figure(figsize=(10, 8))

plt.imshow(img_cv2[:, :, ::-1])

plt.title("TensorFlow Faster RCNN-ResNet50")

plt.axis("off")

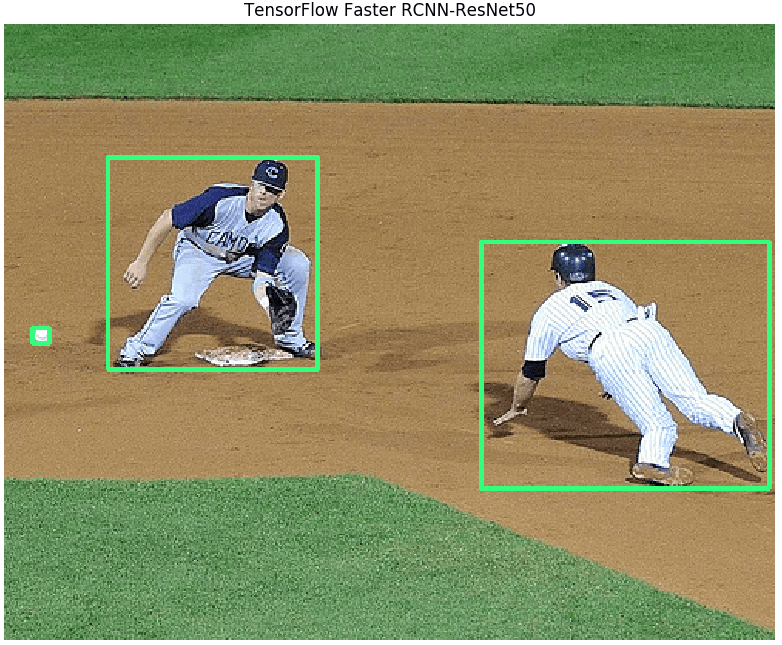

plt.show()目标检测结果如:

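For the same (300, 300) network input, the result from the TensorFlow Object Detection API appears to be somewhat better than the result from OpenCV DNN. The reason is not yet known.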
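One detail worth keeping in mind when comparing the two outputs: the TensorFlow script reads each box as normalized [ymin, xmin, ymax, xmax], while the OpenCV DNN scripts read (left, top, right, bottom). A minimal sketch of the conversion, with the hypothetical helper name `tf_box_to_pixels`, assuming the same box order as the TensorFlow code above:

```python
def tf_box_to_pixels(bbox, img_width, img_height):
    """Convert a normalized [ymin, xmin, ymax, xmax] box to pixel
    (left, top, right, bottom) coordinates."""
    ymin, xmin, ymax, xmax = bbox
    return (int(xmin * img_width), int(ymin * img_height),
            int(xmax * img_width), int(ymax * img_height))


print(tf_box_to_pixels([0.25, 0.5, 0.75, 1.0], img_width=200, img_height=100))
# prints (100, 25, 200, 75)
```

Converting both outputs to the same pixel-space layout makes it easier to compare the boxes from the two implementations side by side.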

2 comments

The objects detected in implementation 1 have no label names.

The labels in implementation 1 and implementation 2 are the same.