Github - Human Image Gender Classifier

论文:Expressive Body Capture: 3D Hands, Face, and Body from a Single Image - CVPR2019

基于OpenPose姿态估计结果,裁剪人体并识别人体性别.

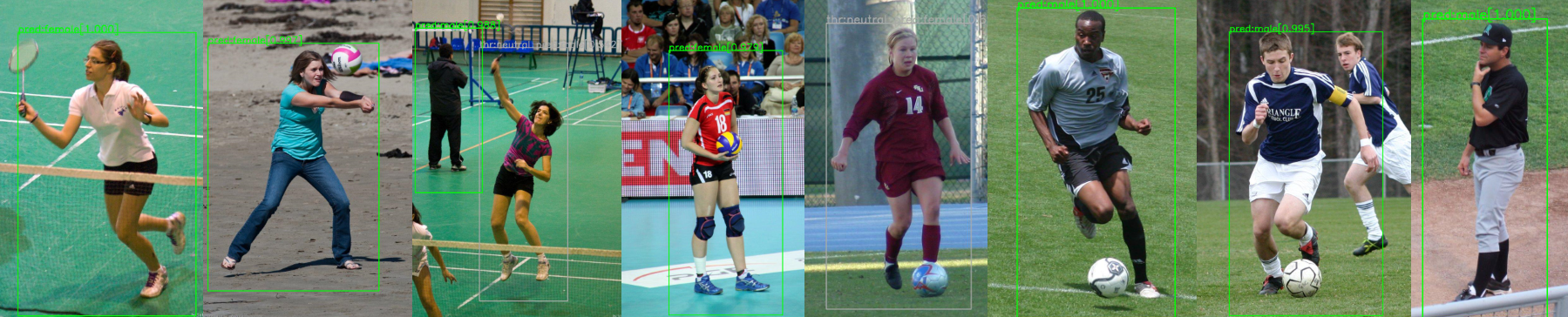

示例,如图:

1. 项目环境

- Python 3.7

- TensorFlow 1.13.1

- Configer

- CUDA-10.0, cuDNN-7.5, Ubuntu 18.04

requirements.txt:

imageio==2.5.0

pillow

numpy==1.16.3

scikit-image==0.15.0

scikit-learn==0.20.3

scipy==1.2.1

tensorflow==1.15.2安装:

pip install git+https://github.com/nghorbani/homogenus模型下载,假设权重文件存放路径homogenus/trained_models/tf:

2. 项目源码

项目结构:

├── homogenus

│ ├── tf

│ │ ├── homogenus_infer.py

│ │ └── __init__.py

│ ├── tools

│ │ ├── body_cropper.py

│ │ ├── image_tools.py

│ │ ├── __init__.py

│ │ └── omni_tools.py

│ └── trained_models

│ └── tf

│ ├── checkpoint

│ ├── TR02_E02_It_002010.ckpt.data-00000-of-00001

│ ├── TR02_E02_It_002010.ckpt.index

│ ├── TR02_E02_It_002010.ckpt.meta

│ └── version.txt

├── requirements.txt

└── setup.py2.1. 模型预测 homogenus_infer.py

#!/usr/bin/python3

#!--*-- coding: utf-8 --*--

import tensorflow as tf

import numpy as np

import os, sys

import json

import cv2

import glob

from homogenus.tools.image_tools import put_text_in_image, fontColors, read_prep_image, save_images

from homogenus.tools.body_cropper import cropout_openpose, should_accept_pose

from homogenus.tools.omni_tools import makepath

class Homogenus_infer(object):

def __init__(self, trained_model_dir, sess=None):

'''

:param trained_model_dir: the directory where you have put the homogenus TF trained models

:param sess:

'''

best_model_fname = sorted(glob.glob(os.path.join(trained_model_dir , '*.ckpt.index')), key=os.path.getmtime)

if len(best_model_fname):

self.best_model_fname = best_model_fname[-1].replace('.index', '')

else:

raise ValueError('Couldnt find TF trained model in the provided directory --trained_model_dir=%s. Make sure you have downloaded them there.' % trained_model_dir)

if sess is None:

self.sess = tf.Session()

else:

self.sess = sess

# Load graph.

self.saver = tf.train.import_meta_graph(self.best_model_fname+'.meta')

self.graph = tf.get_default_graph()

self.prepare()

def prepare(self):

print('Restoring checkpoint %s..' % self.best_model_fname)

self.saver.restore(self.sess, self.best_model_fname)

def predict_genders(self, images_indir, openpose_indir, images_outdir=None, openpose_outdir=None):

'''

Given a directory with images and another directory with corresponding openpose genereated jsons will

augment openpose jsons with gender labels.

:param images_indir: Input directory of images with common extensions

:param openpose_indir: Input directory of openpose jsons

:param images_outdir: If given will overlay the detected gender on detected humans that pass the criteria

:param openpose_outdir: If given will dump the gendered openpose files in this directory. if not will augment the origianls

:return:

'''

sys.stdout.write('\nRunning homogenus on --images_indir=%s --openpose_indir=%s\n'%(images_indir, openpose_indir))

im_fnames = []

for img_ext in ['png', 'jpg', 'jpeg', 'bmp']:

im_fnames.extend(glob.glob(os.path.join(images_indir, '*.%s'%img_ext)))

if len(im_fnames):

sys.stdout.write('Found %d images\n' % len(im_fnames))

else:

raise ValueError('No images could be found in %s'%images_indir)

accept_threshold = 0.9

crop_margin = 0.08

if images_outdir is not None:

makepath(images_outdir)

if openpose_outdir is None:

openpose_outdir = openpose_indir

else:

makepath(openpose_outdir)

Iph = self.graph.get_tensor_by_name(u'input_images:0')

probs_op = self.graph.get_tensor_by_name(u'probs_op:0')

for im_fname in im_fnames:

im_basename = os.path.basename(im_fname)

img_ext = im_basename.split('.')[-1]

openpose_in_fname = os.path.join(openpose_indir, im_basename.replace('.%s'%img_ext, '_keypoints.json'))

with open(openpose_in_fname, 'r') as f:

pose_data = json.load(f)

im_orig = cv2.imread(im_fname, 3)[:,:,::-1].copy()

for opnpose_pIdx in range(len(pose_data['people'])):

pose_data['people'][opnpose_pIdx]['gender_pd'] = 'neutral'

pose = np.asarray(pose_data['people'][opnpose_pIdx]['pose_keypoints_2d']).reshape(-1, 3)

if not should_accept_pose(pose, human_prob_thr=0.5):

continue

crop_info = cropout_openpose(im_fname, pose, want_image=True, crop_margin=crop_margin)

cropped_image = crop_info['cropped_image']

if cropped_image.shape[0] < 200 or cropped_image.shape[1] < 200:

continue

img = read_prep_image(cropped_image)[np.newaxis]

probs_ob = self.sess.run(probs_op, feed_dict={Iph: img})[0]

gender_id = np.argmax(probs_ob, axis=0)

gender_prob = probs_ob[gender_id]

gender_pd = 'male' if gender_id == 0 else 'female'

if gender_prob>accept_threshold:

color = 'green'

text = 'pred:%s[%.3f]' % (gender_pd, gender_prob)

else:

text = 'thr:%s_pred:%s[%.3f]' % ('neutral', gender_pd, gender_prob)

gender_pd = 'neutral'

color = 'grey'

x1 = crop_info['crop_boundary']['offset_width']

y1 = crop_info['crop_boundary']['offset_height']

x2 = crop_info['crop_boundary']['target_width'] + x1

y2 = crop_info['crop_boundary']['target_height'] + y1

im_orig = cv2.rectangle(im_orig, (x1, y1), (x2, y2), fontColors[color], 2)

im_orig = put_text_in_image(im_orig, [text], color, (x1, y1))[0]

pose_data['people'][opnpose_pIdx]['gender_pd'] = gender_pd

sys.stdout.write('%s -- peron_id %d --> %s\n'%(im_fname, opnpose_pIdx, gender_pd))

if images_outdir != None:

save_images(im_orig, images_outdir, [os.path.basename(im_fname)])

openpose_out_fname = os.path.join(openpose_outdir, im_basename.replace('.%s'%img_ext, '_keypoints.json'))

with open(openpose_out_fname, 'w') as f:

json.dump(pose_data, f)

if images_outdir is not None:

sys.stdout.write('Dumped overlayed images at %s'%images_outdir)

sys.stdout.write('Dumped gendered openpose keypoints at %s'%openpose_outdir)

if __name__ == '__main__':

import argparse

parser = argparse.ArgumentParser()

parser.add_argument("-tm", "--trained_model_dir", default="./homogenus/trained_models/tf/",

help="The path to the directory holding homogenus trained models in TF.")

parser.add_argument("-ii", "--images_indir", required= True,

help="Directory of the input images.")

parser.add_argument("-oi", "--openpose_indir", required=True,

help="Directory of openpose keypoints, e.g. json files.")

parser.add_argument("-io", "--images_outdir", default=None,

help="Directory to put predicted gender overlays. If not given, wont produce any overlays.")

parser.add_argument("-oo", "--openpose_outdir", default=None,

help="Directory to put the openpose gendered keypoints. If not given, it will augment the original openpose json files.")

ps = parser.parse_args()

hg = Homogenus_infer(trained_model_dir=ps.trained_model_dir)

hg.predict_genders(images_indir=ps.images_indir,

openpose_indir=ps.openpose_indir,

images_outdir=ps.images_outdir,

openpose_outdir=ps.openpose_outdir)2.2. body_cropper.py

#!/usr/bin/python3

#!--*-- coding: utf-8 --*--

from PIL import Image

import numpy as np

import os

import json

from homogenus.tools.image_tools import cropout_openpose

def should_accept_pose(pose, human_prob_thr=.5):

'''

:param pose:

:param human_prob_thr:

:return:

'''

rleg_ids = [12,13]

lleg_ids = [9,10]

rarm_ids = [5,6,7]

larm_ids = [2,3,4]

head_ids = [16,15,14,17,0,1]

human_prob = pose[:, 2].mean()

rleg = sum(pose[rleg_ids][:, 2] > 0.0)

lleg = sum(pose[lleg_ids][:, 2] > 0.0)

rarm = sum(pose[rarm_ids][:, 2] > 0.0)

larm = sum(pose[larm_ids][:, 2] > 0.0)

head = sum(pose[head_ids][:, 2] > 0.0)

if rleg<1 and lleg<1:

return False

if rarm<1 and larm<1:

return False

if head<2:

return False

if human_prob < human_prob_thr:

return False

return True

def crop_humans(im_fname, pose_fname, want_image=True, human_prob_thr=0.5):

'''

:param im_fname: the input image path

:param pose_fname: the corresponding openpose json file

:param want_image: if False will only return the crop boundaries, otherwise will also return the cropped image

:param human_prob_thr: the probability to threshold the detected humans

:return:

'''

crop_infos = {}

with open(pose_fname) as f:

pose_data = json.load(f)

if not len(pose_data['people']):

return crop_infos

for pIdx in range(len(pose_data['people'])):

pose = np.asarray(pose_data['people'][pIdx]['pose_keypoints_2d']).reshape(-1, 3)

if not should_accept_pose(pose, human_prob_thr=human_prob_thr):

continue

crop_info = cropout_openpose(im_fname, pose, want_image=want_image)

crop_infos['%02d'%pIdx] = {

'crop_info':crop_info['crop_boundary'],

#'pose':pose,

'pose_hash':hash(pose.tostring())}

if want_image:

crop_infos['%02d' % pIdx]['cropped_image'] = crop_info['cropped_image'].astype(np.uint8)

return crop_infos

def crop_dataset(base_dir, dataset_name, human_prob_thr=.5):

import glob

import random

results_dir = os.path.join(base_dir, dataset_name, 'cropped_body_tight')

images_dir = os.path.join(base_dir, dataset_name, 'images')

pose_jsonpath = os.path.join(base_dir, dataset_name, 'openpose_json')

if not os.path.exists(results_dir):

os.makedirs(results_dir)

crop_infos_jsonpath = os.path.join(results_dir, 'crop_infos.json')

fnames = glob.glob(os.path.join(images_dir, '*.jpg'))

random.shuffle(fnames)

crop_infos = {}

for fname in fnames:

pose_fname = os.path.join(pose_jsonpath, os.path.basename(fname).replace('.jpg', '_keypoints.json'))

if not os.path.exists(pose_fname):

continue

cur_crop_info = crop_humans(fname, pose_fname, human_prob_thr=human_prob_thr, want_image=True)

for pname in cur_crop_info.keys():

crop_id = '%s_%s' % (os.path.basename(fname).split('.')[0], pname)

crop_outpath = os.path.join(results_dir, '%s.jpg' % crop_id)

cropped_image = cur_crop_info[pname]['cropped_image']

if cropped_image.shape[0] < 200 or cropped_image.shape[1] < 200:

continue

cur_crop_info.pop('cropped_image')

# print(crop_outpath)

result = Image.fromarray(cropped_image[:,:,::-1])

result.save(crop_outpath)

crop_infos[crop_id] = cur_crop_info

with open(crop_infos_jsonpath, 'w') as f:

json.dump(crop_infos, f)2.3. image_tools.py

#!/usr/bin/python3

#!--*-- coding: utf-8 --*--

import cv2

import numpy as np

import os

fontColors = {'red': (255, 0, 0),

'green': (0, 255, 0),

'yellow': (255, 255, 0),

'blue': (0, 255, 255),

'orange': (255, 165, 0),

'black': (0, 0, 0),

'grey': (169, 169, 169),

'white': (255, 255, 255),

}

def crop_to_bounding_box(image, offset_height, offset_width, target_height, target_width):

cropped = image[offset_height:offset_height + target_height, offset_width:offset_width + target_width, :]

return cropped

def pad_to_bounding_box(image, offset_height, offset_width, target_height, target_width):

height, width, depth = image.shape

after_padding_width = target_width - offset_width - width

after_padding_height = target_height - offset_height - height

# Do not pad on the depth dimensions.

paddings = ((offset_height, after_padding_height), (offset_width, after_padding_width), (0, 0))

padded = np.pad(image, paddings, 'constant')

return padded

def resize_image_with_crop_or_pad(image, target_height, target_width):

# crop to ratio, center

height, width, c = image.shape

width_diff = target_width - width

offset_crop_width = max(-width_diff // 2, 0)

offset_pad_width = max(width_diff // 2, 0)

height_diff = target_height - height

offset_crop_height = max(-height_diff // 2, 0)

offset_pad_height = max(height_diff // 2, 0)

# Maybe crop if needed.

# print('image shape', image.shape)

cropped = crop_to_bounding_box(image, offset_crop_height, offset_crop_width,

min(target_height, height),

min(target_width, width))

# print('after cropp', cropped.shape)

# Maybe pad if needed.

resized = pad_to_bounding_box(cropped, offset_pad_height, offset_pad_width,

target_height, target_width)

# print('after pad', resized.shape)

return resized[:target_height, :target_width, :]

def cropout_openpose(im_fname, pose, want_image=True, crop_margin=0.08):

im_orig = cv2.imread(im_fname, 3)

im_height, im_width = im_orig.shape[0], im_orig.shape[1]

pose = pose[pose[:, 2] > 0.0]

x_min, x_max = pose[:, 0].min(), pose[:, 0].max()

y_min, y_max = pose[:, 1].min(), pose[:, 1].max()

margin_h = crop_margin * im_height

margin_w = crop_margin * im_width

offset_height = int(max((y_min - margin_h), 0))

target_height = int(min((y_max + margin_h), im_height)) - offset_height

offset_width = int(max((x_min - margin_w), 0))

target_width = int(min((x_max + margin_w), im_width)) - offset_width

crop_info = {'crop_boundary':

{'offset_height':offset_height,

'target_height':target_height,

'offset_width':offset_width,

'target_width':target_width}}

if want_image:

crop_info['cropped_image'] = crop_to_bounding_box(im_orig, offset_height, offset_width, target_height, target_width)

return crop_info

def put_text_in_image(images, text, color ='white', position=None):

'''

:param images: 4D array of images

:param text: list of text to be printed in each image

:param color: the color or colors of each text

:return:

'''

import cv2

if not isinstance(text, list):

text = [text]

if not isinstance(color, list):

color = [color for _ in range(images.shape[0])]

if images.ndim == 3:

images = images.reshape(1,images.shape[0],images.shape[1],3)

images_out = []

for imIdx in range(images.shape[0]):

img = images[imIdx].astype(np.uint8)

font = cv2.FONT_HERSHEY_SIMPLEX

if position is None:position = (10, img.shape[1])

fontScale = 1.

lineType = 2

fontColor = fontColors[color[imIdx]]

cv2.putText(img, text[imIdx],

position,

font,

fontScale,

fontColor,

lineType)

images_out.append(img)

return np.array(images_out)

def read_prep_image(im_fname, avoid_distortion=True):

'''

if min(height, width) is larger than 224 subsample to 224. this will also affect the larger dimension.

in the end crop and pad the whole image to get to 224x224

:param im_fname:

:return:

'''

if isinstance(im_fname, np.ndarray):

image_data = im_fname

else:

image_data = cv2.imread(im_fname, 3)

# height, width = image_reader.read_image_dims(sess, image_data)

# image_data = image_reader.decode_jpeg(sess, image_data)

# print(image_data.min(), image_data.max(), image_data.shape)

# import matplotlib.pyplot as plt

# plt.imshow(image_data[:,:,::-1].astype(np.uint8))

# plt.show()

# height, width = image_data.shape[0], image_data.shape[1]

# if min(height, width) > 224:

# print(image_data.shape)

# rt = 224. / min(height, width)

# image_data = cv2.resize(image_data, (int(rt * width), int(rt * height)), interpolation=cv2.INTER_AREA)

# print('>>resized to>>',image_data.shape)

height, width = image_data.shape[0], image_data.shape[1]

if avoid_distortion:

if max(height, width) > 224:

# print(image_data.shape)

rt = 224. / max(height, width)

image_data = cv2.resize(image_data, (int(rt * width), int(rt * height)), interpolation=cv2.INTER_AREA)

# print('>>resized to>>',image_data.shape)

else:

from skimage.transform import resize

image_data = resize(image_data, (224, 224), mode='constant', anti_aliasing=False, preserve_range=True)

# print(image_data.min(), image_data.max(), image_data.shape)

# import matplotlib.pyplot as plt

# plt.imshow(image_data[:,:,::-1].astype(np.uint8))

# plt.show()

image_data = resize_image_with_crop_or_pad(image_data, 224, 224)

# print(image_data.min(), image_data.max(), image_data.shape)

# import matplotlib.pyplot as plt

# plt.imshow(image_data[:, :, ::-1].astype(np.uint8))

# plt.show()

#return image_data.astype(np.float32)

return image_data.astype(np.uint8)

def save_images(images, out_dir, im_names = None):

from homogenus.tools.omni_tools import id_generator

if images.ndim == 3: images = images.reshape(1,images.shape[0],images.shape[1],3)

from PIL import Image

if im_names is None:

im_names = ['%s.jpg'%id_generator(4) for i in range(images.shape[0])]

for imIdx in range(images.shape[0]):

result = Image.fromarray(images[imIdx].astype(np.uint8))

result.save(os.path.join(out_dir, im_names[imIdx]))

return True2.4. omni_tools.py

#!/usr/bin/python3

#!--*-- coding: utf-8 --*--

import numpy as np

copy2cpu = lambda tensor: tensor.detach().cpu().numpy()

colors = {

'pink': [.7, .7, .9],

'purple': [.9, .7, .7],

'cyan': [.7, .75, .5],

'red': [1.0,0.0,0.0],

'green': [.0, 1., .0],

'yellow': [1., 1., 0],

'brown': [.5, .7, .7],

'blue': [.0, .0, 1.],

'offwhite': [.8, .9, .9],

'white': [1., 1., 1.],

'orange': [.5, .65, .9],

'grey': [.7, .7, .7],

'black': np.zeros(3),

'white': np.ones(3),

'yellowg': [0.83,1,0],

}

def id_generator(size=13):

import string

import random

chars = string.ascii_uppercase + string.digits

return ''.join(random.choice(chars) for _ in range(size))

def log2file(logpath=None, auto_newline = True):

import sys

if logpath is not None:

makepath(logpath, isfile=True)

fhandle = open(logpath,'a+')

else:

fhandle = None

def _(text):

if auto_newline:

if not text.endswith('\n'):

text = text + '\n'

sys.stderr.write(text)

if fhandle is not None:

fhandle.write(text)

fhandle.flush()

return lambda text: _(text)

def makepath(desired_path, isfile = False):

'''

if the path does not exist make it

:param desired_path: can be path to a file or a folder name

:return:

'''

import os

if isfile:

if not os.path.exists(os.path.dirname(desired_path)):

os.makedirs(os.path.dirname(desired_path))

else:

if not os.path.exists(desired_path): os.makedirs(desired_path)

return desired_path