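This post walks through a simple implementation of DeepDream; for background, see Alexander Mordvintsev's blog post, Inceptionism: Going Deeper into Neural Networks.

DeepDream is an experiment that visualizes the patterns learned by a neural network. Much like a child who watches clouds and tries to interpret their random shapes, DeepDream over-interprets and amplifies the patterns it sees in an image.

DeepDream first runs the image forward through the network, then computes the gradient of a chosen layer's activations with respect to the image. The image is then modified to increase those activations, which strengthens the patterns the network sees and produces a dream-like result. This process was dubbed "Inceptionism".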
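To make the idea concrete, here is a minimal NumPy sketch of gradient ascent on an input rather than on weights. It is a toy and not the DeepDream model: the "layer" is a single hypothetical linear filter `w`, so the activation is `w @ x` and its gradient with respect to the input is simply `w`.

```python
import numpy as np

# Toy stand-in for a network layer: one fixed linear filter (hypothetical).
rng = np.random.default_rng(0)
w = rng.normal(size=16)          # filter weights, never updated
x = rng.normal(size=16) * 0.1    # the "image" that gets modified

def activation(x):
    # Activation of the toy layer for input x.
    return float(w @ x)

before = activation(x)
step_size = 0.01
for _ in range(100):
    grad = w                     # d(w @ x)/dx = w
    x = x + step_size * grad     # ascent: move the input, not the weights
after = activation(x)
```

Each step moves the input in the direction that raises the activation, so `after` ends up larger than `before`. The real implementation does the same thing with a deep network and automatic differentiation.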

The sections below show how to make a neural network "dream" and enhance the surreal patterns it sees in an image.

1. Setup

import numpy as np
import matplotlib.pyplot as plt
import matplotlib as mpl
from PIL import Image
import tensorflow as tf
from tensorflow.keras.preprocessing import image

2. Choose an image to dream-ify

url = 'https://storage.googleapis.com/download.tensorflow.org/example_images/YellowLabradorLooking_new.jpg'

# Download an image and read it into a NumPy array.
def download(url, max_dim=None):
    name = url.split('/')[-1]
    image_path = tf.keras.utils.get_file(name, origin=url)
    img = Image.open(image_path)
    if max_dim:
        # Shrink in place so that neither side exceeds max_dim.
        img.thumbnail((max_dim, max_dim))
    return np.array(img)

# Map an image from the model's [-1, 1] range back to uint8 [0, 255].
def deprocess(img):
    img = 255*(img + 1.0)/2.0
    return tf.cast(img, tf.uint8)

# Display an image
def show(img):
    img = Image.fromarray(np.array(img))
    plt.imshow(img)
    plt.show()

# Download the image, limiting its longest side to 500 pixels.
original_img = download(url, max_dim=500)
show(original_img)
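As a sanity check on the pixel ranges, the sketch below pairs a NumPy mirror of `deprocess` with the scaling that `inception_v3.preprocess_input` applies later in this post (`x / 127.5 - 1`). The helper names `to_model_range` and `deprocess_np` are hypothetical and exist only for this illustration.

```python
import numpy as np

def to_model_range(img_uint8):
    # Same scaling as inception_v3.preprocess_input: [0, 255] -> [-1, 1].
    return img_uint8.astype(np.float32) / 127.5 - 1.0

def deprocess_np(img):
    # NumPy mirror of deprocess: [-1, 1] -> [0, 255], truncated to uint8.
    return (255 * (img + 1.0) / 2.0).astype(np.uint8)

pixels = np.array([0, 64, 128, 255], dtype=np.uint8)
scaled = to_model_range(pixels)      # values in [-1, 1]
restored = deprocess_np(scaled)      # back to uint8
```

Because the cast to `uint8` truncates, a round trip can differ from the input by one intensity level.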

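3. Feature extraction model

Download a pretrained image classification model. Any pretrained model can be used; only the layer names need to be adjusted.

This post uses InceptionV3, which is similar to the model originally used in DeepDream.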

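base_model = tf.keras.applications.InceptionV3(include_top=False, weights='imagenet')

The idea in DeepDream is to choose one or more layers and maximize the loss in a way that makes the image increasingly "excite" those layers. The complexity of the features that get incorporated depends on the layers chosen: lower layers produce simple patterns, while deeper layers produce more complex features.

The InceptionV3 architecture is fairly large. For DeepDream, the layers of interest are those where the convolutions are concatenated. InceptionV3 has 11 such layers, named "mixed0" through "mixed10". Different layers yield different dream-like images. Deeper layers respond to higher-level features (such as eyes and faces), while earlier layers respond to simpler features (such as edges, shapes, and textures).

Deeper layers also take longer to optimize, because the gradient has to be computed through more of the network.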
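Because the concatenation layers follow a regular naming scheme, the candidate names can be generated instead of typed out. This small sketch only builds the strings; it assumes the "mixed0" to "mixed10" naming stated above and does not load the model.

```python
# Names of the 11 concatenation layers, 'mixed0' through 'mixed10'.
mixed_layer_names = [f"mixed{i}" for i in range(11)]

# One possible split into shallower and deeper candidates (an arbitrary
# cut chosen for illustration, not a property of the network).
shallow, deep = mixed_layer_names[:4], mixed_layer_names[7:]
```

Any subset of these names can be passed to `base_model.get_layer(name)` when experimenting with different layers.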

# Maximize the activations of these layers
names = ['mixed3', 'mixed5']
layers = [base_model.get_layer(name).output for name in names]

# Create the feature extraction model
dream_model = tf.keras.Model(inputs=base_model.input, outputs=layers)

4. Calculate the loss

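The loss is the sum of the activations in the chosen layers.

The loss is normalized at each layer, so that the contribution from larger layers does not outweigh that of smaller layers.

Normally, a loss is a quantity to be minimized by gradient descent. In DeepDream, the loss is instead maximized by gradient ascent.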
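The effect of the per-layer normalization can be seen in a small NumPy sketch. The two activation maps below are hypothetical random arrays, not outputs of InceptionV3; they only differ in size.

```python
import numpy as np

rng = np.random.default_rng(0)
# Two hypothetical activation maps of very different sizes
# (height, width, channels), filled with comparable values.
small_layer = rng.uniform(0.0, 1.0, size=(4, 4, 8))
large_layer = rng.uniform(0.0, 1.0, size=(32, 32, 64))

# Summing raw activations lets the larger layer dominate.
sum_small, sum_large = small_layer.sum(), large_layer.sum()

# Averaging per layer puts both on the same scale before adding them.
mean_small, mean_large = small_layer.mean(), large_layer.mean()
loss = mean_small + mean_large
```

With raw sums the large layer contributes hundreds of times more than the small one, whereas the per-layer means are both close to 0.5.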

def calc_loss(img, model):
    # Pass forward the image through the model to retrieve the activations.
    # Converts the image into a batch of size 1.
    img_batch = tf.expand_dims(img, axis=0)
    layer_activations = model(img_batch)
    if len(layer_activations) == 1:
        layer_activations = [layer_activations]

    losses = []
    for act in layer_activations:
        # Mean activation of one layer (the per-layer normalization).
        loss = tf.math.reduce_mean(act)
        losses.append(loss)

    return tf.reduce_sum(losses)

5. Gradient ascent

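Once the loss for the chosen layers has been calculated, all that is left is to compute its gradient with respect to the image and add it to the original image.

Adding the gradients to the image enhances the patterns seen by the network. Each step produces a new image that increasingly excites the activations of the chosen layers.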
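A single update step can be sketched in NumPy as follows. The function name `ascent_step` and the random arrays are assumptions made for illustration; the normalization by standard deviation and the clipping to [-1, 1] follow the implementation in this post.

```python
import numpy as np

def ascent_step(img, gradients, step_size=0.01):
    # Rescale gradients to unit standard deviation so the update size
    # depends on step_size rather than on the raw gradient magnitude.
    gradients = gradients / (gradients.std() + 1e-8)
    # Add (not subtract) the gradients, then keep pixels in [-1, 1].
    return np.clip(img + gradients * step_size, -1.0, 1.0)

rng = np.random.default_rng(0)
img = rng.uniform(-1.0, 1.0, size=(8, 8, 3))
grads = rng.normal(size=(8, 8, 3))

# The same gradients at two very different scales give
# essentially the same update.
out_small = ascent_step(img, grads * 1e-3)
out_large = ascent_step(img, grads * 1e+3)
```

Normalizing the gradients this way means `step_size` has a comparable effect regardless of which layers are chosen.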

class DeepDream(tf.Module):
    def __init__(self, model):
        self.model = model

    # Wrapped in tf.function for performance.
    @tf.function(
        input_signature=(
            tf.TensorSpec(shape=[None,None,3], dtype=tf.float32),
            tf.TensorSpec(shape=[], dtype=tf.int32),
            tf.TensorSpec(shape=[], dtype=tf.float32),)
    )
    def __call__(self, img, steps, step_size):
        print("Tracing")
        loss = tf.constant(0.0)
        for n in tf.range(steps):
            with tf.GradientTape() as tape:
                # This needs gradients relative to `img`
                # `GradientTape` only watches `tf.Variable`s by default
                tape.watch(img)
                loss = calc_loss(img, self.model)

            # Calculate the gradient of the loss with respect to the pixels of the input image.
            gradients = tape.gradient(loss, img)

            # Normalize the gradients.
            gradients /= tf.math.reduce_std(gradients) + 1e-8

            # In gradient ascent, the "loss" is maximized so that the input image increasingly "excites" the layers.
            # You can update the image by directly adding the gradients (because they're the same shape!)
            img = img + gradients*step_size
            img = tf.clip_by_value(img, -1, 1)

        return loss, img

deepdream = DeepDream(dream_model)

6. Main loop

def run_deep_dream_simple(img, steps=100, step_size=0.01):
    # Convert from uint8 to the range expected by the model.
    img = tf.keras.applications.inception_v3.preprocess_input(img)
    img = tf.convert_to_tensor(img)
    step_size = tf.convert_to_tensor(step_size)
    steps_remaining = steps
    step = 0
    while steps_remaining:
        if steps_remaining>100:
            run_steps = tf.constant(100)
        else:
            run_steps = tf.constant(steps_remaining)
        steps_remaining -= run_steps
        step += run_steps

        loss, img = deepdream(img, run_steps, tf.constant(step_size))

        show(deprocess(img))
        print ("Step {}, loss {}".format(step, loss))

    result = deprocess(img)
    show(result)

    return result

dream_img = run_deep_dream_simple(img=original_img, steps=100, step_size=0.01)

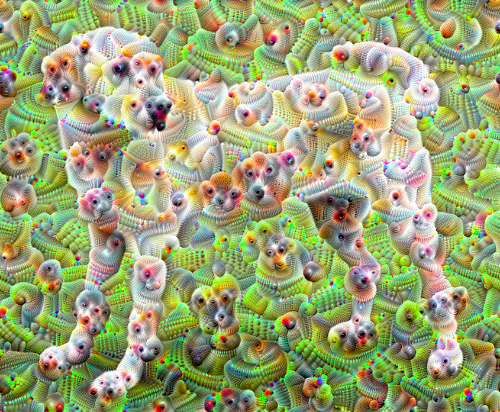
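The `while` loop in `run_deep_dream_simple` hands the compiled function at most 100 steps per call, showing the intermediate image in between. The same bookkeeping in plain Python, with `chunk_steps` as a hypothetical helper name, looks like this:

```python
def chunk_steps(total_steps, max_chunk=100):
    # Split total_steps into runs of at most max_chunk steps each.
    chunks = []
    remaining = total_steps
    while remaining > 0:
        run = min(remaining, max_chunk)
        chunks.append(run)
        remaining -= run
    return chunks

plan = chunk_steps(250)   # three calls: 100, 100 and 50 steps
```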

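7. An improved version

The implementation above has a few problems:

[1] - The output is noisy (this could be addressed with a tf.image.total_variation loss).

[2] - The image is low resolution.

[3] - The patterns all appear at the same granularity.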
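For the first issue, the quantity that `tf.image.total_variation` measures is the summed absolute difference between neighbouring pixels. The NumPy function below is a sketch of that idea for a single image; the name `total_variation_np` is hypothetical, and how strongly to weight such a term against the activation loss is left open here.

```python
import numpy as np

def total_variation_np(img):
    # Sum of absolute differences between vertically and horizontally
    # adjacent pixels of an image shaped (height, width, channels).
    dh = np.abs(img[1:, :, :] - img[:-1, :, :]).sum()
    dw = np.abs(img[:, 1:, :] - img[:, :-1, :]).sum()
    return dh + dw

rng = np.random.default_rng(0)
flat = np.full((16, 16, 3), 0.5)                 # constant image
noisy = flat + rng.normal(0.0, 0.1, flat.shape)  # same image plus noise

tv_flat = total_variation_np(flat)
tv_noisy = total_variation_np(noisy)
```

A constant image has zero total variation and a noisy one has a large value, which is why penalizing this quantity tends to suppress high-frequency noise.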

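One way to address these problems is to apply gradient ascent at several scales, so that patterns generated at smaller scales are incorporated into patterns at larger scales and filled in with additional detail.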
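The image sizes visited by such a multi-scale schedule can be computed ahead of time. The sketch below assumes a scale factor of 1.3 and octaves from -2 to 2, matching the values used in this post; `octave_shapes` is a hypothetical helper and the base size is made up.

```python
OCTAVE_SCALE = 1.30

def octave_shapes(base_shape, octaves=range(-2, 3), scale=OCTAVE_SCALE):
    # Image size (height, width) used at each octave, smallest first.
    return [tuple(int(dim * scale**n) for dim in base_shape) for n in octaves]

# Hypothetical base size; the real one depends on the downloaded image.
shapes = octave_shapes((300, 400))
```

Octave 0 corresponds to the original size, negative octaves to downscaled copies, and positive octaves to upscaled ones.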

import time
start = time.time()

OCTAVE_SCALE = 1.30

img = tf.constant(np.array(original_img))
base_shape = tf.shape(img)[:-1]
float_base_shape = tf.cast(base_shape, tf.float32)

for n in range(-2, 3):
    new_shape = tf.cast(float_base_shape*(OCTAVE_SCALE**n), tf.int32)

    img = tf.image.resize(img, new_shape).numpy()

    img = run_deep_dream_simple(img=img, steps=50, step_size=0.01)

img = tf.image.resize(img, base_shape)
img = tf.image.convert_image_dtype(img/255.0, dtype=tf.uint8)
show(img)

end = time.time()
end-start

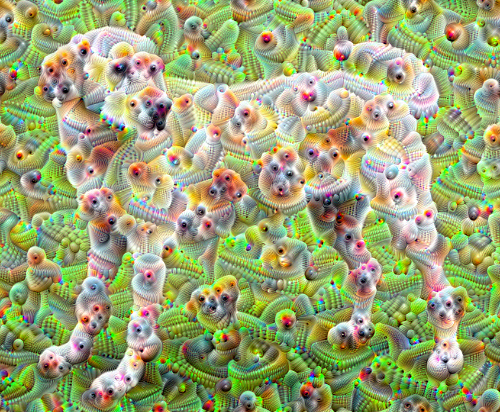

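8. Going further: tiling the image

One thing to consider is that as the image grows, so do the time and memory needed to compute the gradients. The implementation above may then no longer be workable.

To handle this, split the image into tiles and compute the gradient for each tile separately.

To prevent visible seams where tiles meet, apply a random shift to the image before each tiled computation.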

def random_roll(img, maxroll):
    # Randomly shift the image to avoid tiled boundaries.
    shift = tf.random.uniform(shape=[2], minval=-maxroll, maxval=maxroll, dtype=tf.int32)
    img_rolled = tf.roll(img, shift=shift, axis=[0,1])
    return shift, img_rolled

shift, img_rolled = random_roll(np.array(original_img), 512)
show(img_rolled)
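Rolling is a circular shift, so no pixels are lost and the operation can be undone exactly by rolling with the negated shift. A small NumPy sketch of that property, using `np.roll` in place of `tf.roll` and a made-up random image:

```python
import numpy as np

rng = np.random.default_rng(0)
img = rng.integers(0, 256, size=(6, 8, 3), dtype=np.uint8)

# Random shift along height and width, as in random_roll.
shift = [int(s) for s in rng.integers(-4, 4, size=2)]
rolled = np.roll(img, shift=shift, axis=(0, 1))

# Rolling by the negated shift restores the original layout.
restored = np.roll(rolled, shift=[-s for s in shift], axis=(0, 1))
```

This is the reason the tiled gradient function can shift the image, compute gradients on the shifted copy, and then shift the gradients back before applying them.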

The tiled version of the deepdream function:

class TiledGradients(tf.Module):
    def __init__(self, model):
        self.model = model

    @tf.function(
        input_signature=(
            tf.TensorSpec(shape=[None,None,3], dtype=tf.float32),
            tf.TensorSpec(shape=[], dtype=tf.int32),)
    )
    def __call__(self, img, tile_size=512):
        shift, img_rolled = random_roll(img, tile_size)

        # Initialize the image gradients to zero.
        gradients = tf.zeros_like(img_rolled)

        # Skip the last tile, unless there's only one tile.
        xs = tf.range(0, img_rolled.shape[0], tile_size)[:-1]
        if not tf.cast(len(xs), bool):
            xs = tf.constant([0])
        ys = tf.range(0, img_rolled.shape[1], tile_size)[:-1]
        if not tf.cast(len(ys), bool):
            ys = tf.constant([0])

        for x in xs:
            for y in ys:
                # Calculate the gradients for this tile.
                with tf.GradientTape() as tape:
                    # This needs gradients relative to `img_rolled`.
                    # `GradientTape` only watches `tf.Variable`s by default.
                    tape.watch(img_rolled)

                    # Extract a tile out of the image.
                    img_tile = img_rolled[x:x+tile_size, y:y+tile_size]
                    loss = calc_loss(img_tile, self.model)

                # Update the image gradients for this tile.
                gradients = gradients + tape.gradient(loss, img_rolled)

        # Undo the random shift applied to the image and its gradients.
        gradients = tf.roll(gradients, shift=-shift, axis=[0,1])

        # Normalize the gradients.
        gradients /= tf.math.reduce_std(gradients) + 1e-8

        return gradients

get_tiled_gradients = TiledGradients(dream_model)

Main loop:

# display.clear_output is provided by IPython (notebook environments).
from IPython import display

def run_deep_dream_with_octaves(img, steps_per_octave=100, step_size=0.01,
                                octaves=range(-2,3), octave_scale=1.3):
    base_shape = tf.shape(img)
    img = tf.keras.preprocessing.image.img_to_array(img)
    img = tf.keras.applications.inception_v3.preprocess_input(img)

    initial_shape = img.shape[:-1]
    img = tf.image.resize(img, initial_shape)
    for octave in octaves:
        # Scale the image based on the octave
        new_size = tf.cast(tf.convert_to_tensor(base_shape[:-1]), tf.float32)*(octave_scale**octave)
        img = tf.image.resize(img, tf.cast(new_size, tf.int32))

        for step in range(steps_per_octave):
            gradients = get_tiled_gradients(img)
            img = img + gradients*step_size
            img = tf.clip_by_value(img, -1, 1)

            if step % 10 == 0:
                display.clear_output(wait=True)
                show(deprocess(img))
                print ("Octave {}, Step {}".format(octave, step))

    result = deprocess(img)
    return result

img = run_deep_dream_with_octaves(img=original_img, step_size=0.01)

display.clear_output(wait=True)
# base_shape here is the (height, width) computed in section 7.
img = tf.image.resize(img, base_shape)
img = tf.image.convert_image_dtype(img/255.0, dtype=tf.uint8)
show(img)
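The rule stated in the `TiledGradients` comment, "skip the last tile, unless there's only one tile", can be written out in plain Python for a single axis. `tile_starts` is a hypothetical helper and the lengths are made up; it describes the intended rule rather than the exact tensor operations above.

```python
def tile_starts(length, tile_size):
    # Tile origins along one axis: skip the last start, unless that
    # would leave no tile at all.
    starts = list(range(0, length, tile_size))[:-1]
    return starts if starts else [0]

starts_large = tile_starts(1300, 512)  # [0, 512]: the start at 1024 is skipped
starts_small = tile_starts(300, 512)   # [0]: a single tile covers the axis
```

Since the image is rolled by a fresh random shift on every call, the region left out by the skipped tile differs from step to step.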

9. Resources

[1] - TensorFlow Lucid