AI 技术中,CV 是被广泛应用的. 其中一个很好的应用场景就是时尚行业. 图片资源的可用性也提升了 CV 在在很多有意思场景中的应用.

Github - Zalando 提供了很多 AI 解决方案,以及其研究成果.

Zalando 是总部位于德国柏林的大型网络电子商城, 其主要产品是服装和鞋类。

AI 社区中,Zalando 团队因开源的 Fashion-MNIST 数据集而出名,该数据集旨在替换机器学习研究中的传统 MNIST 数据集.

近期,Zalando 团队又开源了一个新的数据集: Feidegger. 该数据集由连衣裙图片和对应的文本描述组成. 其主要用于很多文本图像任务(text-image tasks)场景中,比如 captioning 和 image retrieval.

这里,基于该数据集,主要实现了:

[1] - 基于图片相似性,实现连衣裙推荐系统(Dress Recomendation System);

[2] - 只基于文本描述,实现连衣裙标注系统(Dress Tagging System).

1. Feidegger 数据集

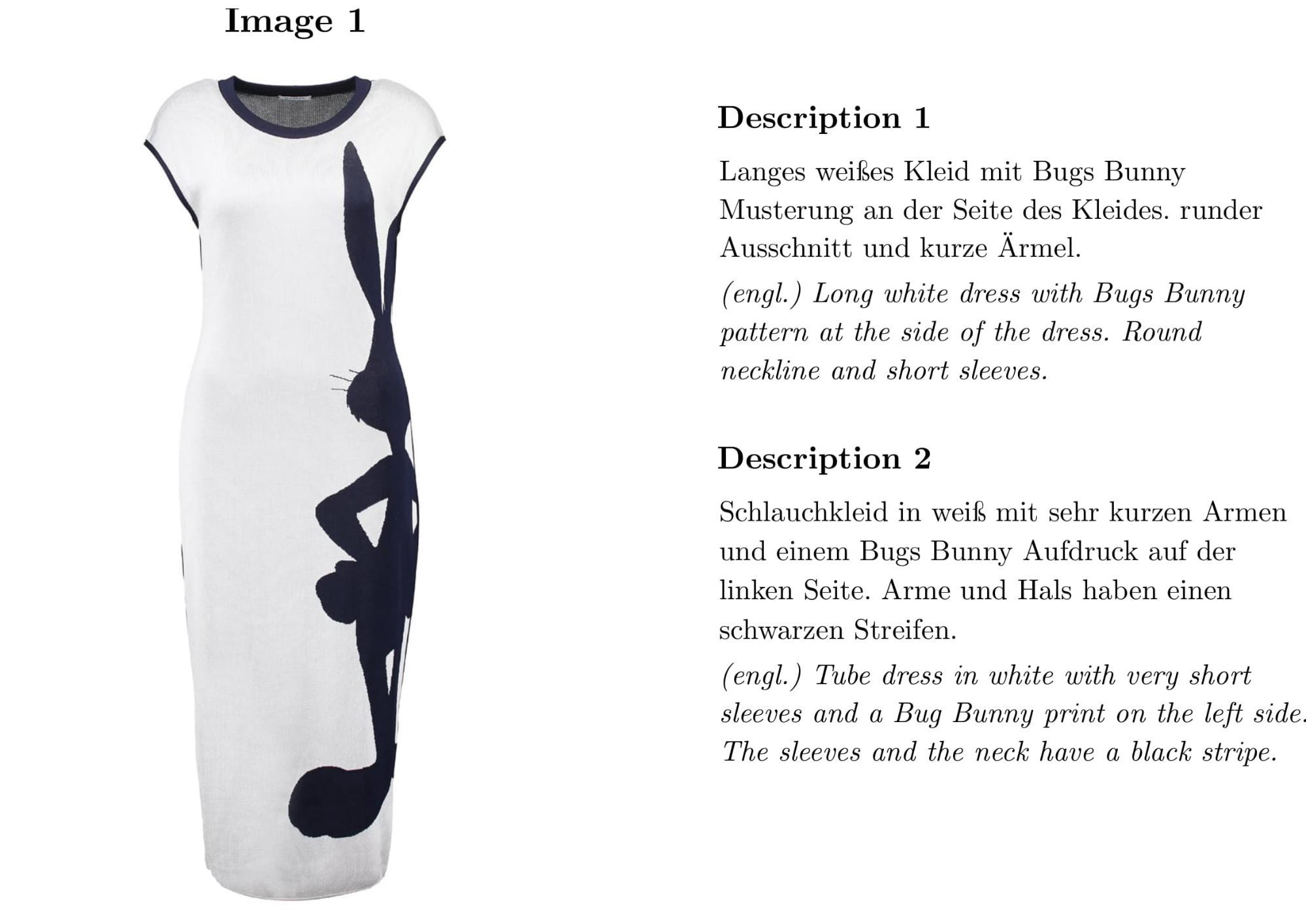

数据集共有 8732 张高分辨率图片,每张图片都是从 Zalando 电商网站获取的连衣裙白色背景图片(white-background). 对于每张图片,提供了 5 条德语文本描述(textual annotations),每句文本描述分别是由不同的用户给定的. 示例如下,是一张连衣裙图片的 5 条描述中的 2 条.(翻译后的英文仅供示例,并不是数据集中的一部分.)

原始数据集中,为每条单一描述存储了相关图像(以 url 格式):有一个单一的连衣裙加上类目. 因此,需要将相同连衣裙的描述进行合并,以便于以图片为对象进行操作和去重.

data = pd.read_csv('./FEIDEGGER.csv').fillna(' ')

newdata = data.groupby('Image URL')['Description'].apply(lambda x: x.str.cat(sep=' ')).reset_index()2. 连衣裙推荐系统

为了构建连衣裙推荐系统,需要用到迁移学习(transfer learning).

具体的,采用预训练的 VGG16 模型来从连衣裙图片中提取相关特征,并构建特征的相似性分数(similarity score).

vgg_model = vgg16.VGG16(weights='imagenet')

feat_extractor = Model(inputs=vgg_model.input, outputs=vgg_model.get_layer("fc2").output)移除 VGG 模型中的最后两层,因此,对于每张图片,可以得到一个 1x4096 维的向量. 并可以在 2D 空间中画出所有的特征. 如图:

图: VGG 特征的 TSNE 可视化.

为了测试构建的推荐系统的性能,留出一部分连衣裙数据(大约 10% )用于测试,其它的用于构建相似性分数矩阵(similarity score matrix). 相似性度量方式采用余弦相似性(cosine similarity). 每次送入一张连衣裙图片到推荐系统,并计算其与训练数据集中所有连衣裙的相似性,然后选择最相似的(最高相似性分数的结果).

sim = cosine_similarity(train, test[test_id].reshape(1,-1))下面给出一些示例. 其中,原始图片是从测试数据集中选取的. 右边 5 张是与其最相似的图片.

从结果来看,VGG 模型很不错,取得了很不错的效果.

3. 连衣裙标注系统

连衣裙标注系统的方法,是与连衣裙相似性方法不同的.

连衣裙标注场景与传统的标注问题也是不同的,传统的标注问题中,数据是包含图片及对应的几个单词的标签. 而这里,仅有连衣裙图片的文本描述,必须从中提取有用信息. 这是有点棘手的,因为首先需要分析用户所给出的文本. 因此,这里采用的思想是,从文本描述中提取最核心的单词标签,以用作图片的标签.

工作流如下:

由于数据集中给出的文本描述是德文,因此,这里也采用了德文描述,以免还要借助于谷歌翻译.

这里计划开发两种不同的模型:一种采用名词(nouns),另一种采用形容词(adjectives, adj.).

为了进行分词,首先对原始数据集中图片描述,生成 POS 标签.

tokenizer = nltk.tokenize.RegexpTokenizer(r'[a-zA-ZäöüßÄÖÜ]+')

nlp = spacy.load('de_core_news_sm')def clean(txt):

text = tokenizer.tokenize(txt)

text = nlp(" ".join(text))

adj, noun = [], []

for token in text:

if token.pos_ == 'ADJ' and len(token)>2:

adj.append(token.lemma_)

elif token.pos_ in ['NOUN','PROPN'] and len(token)>2:

noun.append(token.lemma_)

return " ".join(adj).lower(), " ".join(noun).lower()adj, noun = zip(*map(clean,tqdm(data['Description'])))然后,对于相同图片,组合所有的形容词(adj);类似的,对于相同图片,组合所有的名词(noun).

newdata = data.groupby(‘Image URL’)[‘adj_Description’].apply(lambda x: x.str.cat(sep=’ XXX ‘)).reset_index()接着,为了提取每张图片的重要性标签,采用 TFIDF 处理,并得到最终的 ADJs 和 NOUNs(这里选择最好的 3 个 ADJs 和 NOUNs. 如果没有找到 ADJs 和 NOUNs 单词,则返回一系列的 “xxx”.) 此外,还计算了一系列不明确的 ADJs/NOUNs 并进行排除.

def tagging(comments, remove=None, n_word=3):

comments = comments.split('XXX')

try:

counter = TfidfVectorizer(min_df=2, analyzer='word', stop_words=remove)

counter.fit(comments)

score = counter.transform(comments).toarray().sum(axis=0)

word = counter.get_feature_names()

vocab = pd.DataFrame({'w':word,'s':score}).sort_values('s').tail(n_word)['w'].values

return " ".join(list(vocab)+['xxx']*(n_word-len(vocab)))

except:

return " ".join(['xxx']*n_word)至此,对于每张连衣裙图片,可以得到最多 3 个 ADJs 和 NOUNs. 后面开始构建标注模型.

模型创建时,还利用了 VGG 模型提取的特征. 此外,每张连衣裙图片出现最多三次,因为最多 3 个不同的标签(相对于 3 种不同的 ADJs/NOUNs). 模型结构非常简单,如:

inp = Input(shape=(4096, ))

dense1 = Dense(256, activation='relu')(inp)

dense2 = Dense(128, activation='relu')(dense1)

drop = Dropout(0.5)(dense2)

dense3 = Dense(64, activation='relu')(drop)

out = Dense(y.shape[1], activation='softmax')(dense3)

model = Model(inputs=inp, outputs=out)

model.compile(optimizer='adam', loss='categorical_crossentropy')结果如下:

4. Python 实现

from keras.applications import vgg16, resnet50

from keras.preprocessing.image import load_img,img_to_array

from keras.models import Model, Sequential

from keras.applications.imagenet_utils import preprocess_input

from keras.layers import Dense, Activation, Dropout, Reshape, Flatten, Input, concatenate

from keras.utils import np_utils

from PIL import Image

import cv2 as cv

import requests

from io import BytesIO

import os

import matplotlib.pyplot as plt

from matplotlib import offsetbox

import numpy as np

import pandas as pd

from tqdm import tqdm

tqdm.pandas()

import pickle

import string

import re

import spacy

import nltk

from nltk.stem.snowball import SnowballStemmer

from sklearn.manifold import TSNE

from sklearn.decomposition import PCA

from sklearn.feature_extraction.text import TfidfVectorizer, CountVectorizer

from sklearn.metrics.pairwise import cosine_similarity

#加载数据

data = pd.read_csv('./FEIDEGGER.csv').fillna(' ')

print(data.shape)

#(43944, 2)

data.head(3)

### MERGE DESCRIPTION 4 IMAGE URL ###

newdata = data.groupby('Image URL')['Description'].apply(lambda x: x.str.cat(sep=' ')).reset_index()

print(newdata.shape)

newdata.head(3)

#加载VGG模型

vgg_model = vgg16.VGG16(weights='imagenet')

feat_extractor = Model(inputs=vgg_model.input, outputs=vgg_model.get_layer("fc2").output)

feat_extractor.summary()

#为VGG准备数据

#A)读取图片,并EXPAND DIM

importedImages = []

for url in tqdm(newdata['Image URL'][0:5]):

response = requests.get(url)

img = Image.open(BytesIO(response.content))

img = img.resize((224, 224))

numpy_img = img_to_array(img)

img_batch = np.expand_dims(numpy_img, axis=0)

importedImages.append(img_batch.astype('float16'))

images = np.vstack(importedImages)

processed_imgs = preprocess_input(images.copy())

#提取特征

##B)模型预测

imgs_features = feat_extractor.predict(processed_imgs)

imgs_features.shape

### A + B (DONE AND STORED IN A PICKLE) ###

with open("./img2feat.pkl", 'rb') as pickle_file:

imgs_features = pickle.load(pickle_file)

print(imgs_features.shape)

#(8792, 4096)

### SPLIT TRAIN TEST ###

train = imgs_features[:8000]

print(train.shape)

test = imgs_features[8000:]

print(test.shape)

#特征可视化

#特征降维

pca = PCA(n_components=50)

pca_score = pca.fit_transform(imgs_features)

tsne = TSNE(n_components=2, random_state=42, n_iter=300, perplexity=5)

T = tsne.fit_transform(pca_score)

#

fig, ax = plt.subplots(figsize=(16,9))

ax.scatter(T.T[0], T.T[1])

plt.grid(False)

shown_images = np.array([[1., 1.]])

for i in tqdm(np.random.randint(1,T.shape[0],200)):

response = requests.get(newdata['Image URL'][i])

img = Image.open(BytesIO(response.content))

img = img.resize((16, 16))

shown_images = np.r_[shown_images, [T[i]]]

imagebox = offsetbox.AnnotationBbox(offsetbox.OffsetImage(img, cmap=plt.cm.gray_r), T[i])

ax.add_artist(imagebox)

plt.show()

#图片相似性

### PASS THE TEST ID TO GET THE MOST SIMILAR PRODUCTS ###

def most_similar_products(test_id, n_sim = 3):

#open-plot image

plt.subplot(1, n_sim+1, 1)

org_response = requests.get(newdata['Image URL'][train.shape[0]+test_id])

original = Image.open(BytesIO(org_response.content))

original = original.resize((224, 224))

plt.imshow(original)

plt.title('ORIGINAL')

#compute similarity matrix

cosSimilarities_serie = cosine_similarity(train, test[test_id].reshape(1,-1)).ravel()

cos_similarities = pd.DataFrame({

'sim':cosSimilarities_serie,

'id':newdata[:train.shape[0]].index},

index=newdata['Image URL'][:train.shape[0]]).sort_values('sim',ascending=False)[0:n_sim+1]

#plot n most similar

for i in range(0,n_sim):

plt.subplot(1, n_sim+1, i+1+1)

org_response = requests.get(cos_similarities.index[i])

original = Image.open(BytesIO(org_response.content))

original = original.resize((224, 224))

plt.imshow(original)

plt.title('Similar'+str(i+1)+': '+str(cos_similarities.sim[i].round(3)))

#

plt.figure(figsize=(16,8))

most_similar_products(7,5)

plt.show()

#

plt.figure(figsize=(16,8))

most_similar_products(28,5)

plt.show()

#

plt.figure(figsize=(16,8))

most_similar_products(9,5)

plt.show()

#

plt.figure(figsize=(16,8))

most_similar_products(10,5)

plt.show()

#

plt.figure(figsize=(16,8))

most_similar_products(347,5)

plt.show()

#文本相似性

del newdata

### CLEAN DESCRIPTION ###

tokenizer = nltk.tokenize.RegexpTokenizer(r'[a-zA-ZäöüßÄÖÜ]+')

nlp = spacy.load('de_core_news_sm')

# keep only noun and adj: return lemma

def clean(txt):

text = tokenizer.tokenize(txt)

text = nlp(" ".join(text))

adj, noun = [], []

for token in text:

if token.pos_ == 'ADJ' and len(token)>2:

adj.append(token.lemma_)

elif token.pos_ in ['NOUN','PROPN'] and len(token)>2:

noun.append(token.lemma_)

return " ".join(adj).lower(), " ".join(noun).lower()

adj, noun = zip(*map(clean,tqdm(data['Description'])))

data['adj_Description'] = list(adj)

data['noun_Description'] = list(noun)

### MERGE CLEAN DESCRIPTION 4 IMAGE URL ###

newdata = data.groupby('Image URL')['adj_Description'].apply(lambda x: x.str.cat(sep=' XXX ')).reset_index()

newdata['noun_Description'] = data.groupby('Image URL')['noun_Description'].apply(lambda x: x.str.cat(sep=' XXX ')).values

print(newdata.shape)

#(8792, 3)

newdata.head(3)

### DEFINE UTILITY FUNCTION TO OPERATE WITH TEXT ###

remove_adj = ['rund','lang','kurz','kurze','klein','knien','langen','weit']

remove_noun = ['kleid','ärmel','ausschnitt','rock','knie','knielanges','halsen','seite',

'träge','träger','arm','brust','fuß','schulter','taille','oberteil']

# extract most important words with tfidf

# complete empty space with 'xxx'

def tagging(comments, remove=None, n_word=3):

comments = comments.split('XXX')

try:

counter = TfidfVectorizer(min_df=2, analyzer='word', stop_words=remove)

counter.fit(comments)

score = counter.transform(comments).toarray().sum(axis=0)

word = counter.get_feature_names()

vocab = pd.DataFrame({'w':word,'s':score}).sort_values('s').tail(n_word)['w'].values

return " ".join(list(vocab)+['xxx']*(n_word-len(vocab)))

except:

return " ".join(['xxx']*n_word)

### GENERATE LABELS: EXTRACT MOST IMPORTANT WORD ###

tag_noun = newdata['noun_Description'][:train.shape[0]].progress_apply(lambda x: tagging(x,remove_noun))

tag_adj = newdata['adj_Description'][:train.shape[0]].progress_apply(lambda x: tagging(x,remove_adj))

label_noun = np.asarray(tag_noun.str.cat(sep=' ').split(' '))

label_adj = np.asarray(tag_adj.str.cat(sep=' ').split(' '))

### GENERATE TRAIN FEATURES: REPLICATE FEATURES WITH THE SAME ORDER OF LABELS ###

features = np.repeat(train, 3, axis=0)

print(features.shape)

#(24000, 4096)

### ENCODE LABELS FOR NN ###

y_noun = np_utils.to_categorical(pd.Series(label_noun[label_noun!='xxx']).factorize()[0])

y_adj = np_utils.to_categorical(pd.Series(label_adj[label_adj!='xxx']).factorize()[0])

print(y_noun.shape)

print(y_adj.shape)

#(7161, 443)

#(15394, 330)

##

inp_adj = Input(shape=(4096, ))

dense_adj1 = Dense(256, activation='relu')(inp_adj)

dense_adj2 = Dense(128, activation='relu')(dense_adj1)

drop_adj = Dropout(0.5)(dense_adj2)

dense_adj3 = Dense(64, activation='relu')(drop_adj)

out_adj = Dense(y_adj.shape[1], activation='softmax')(dense_adj3)

model_adj = Model(inputs=inp_adj, outputs=out_adj)

model_adj.compile(optimizer='adam', loss='categorical_crossentropy')

##

inp_noun = Input(shape=(4096, ))

dense_noun1 = Dense(256, activation='relu')(inp_noun)

dense_noun2 = Dense(128, activation='relu')(dense_noun1)

drop_noun = Dropout(0.5)(dense_noun2)

dense_noun3 = Dense(64, activation='relu')(drop_noun)

out_noun = Dense(y_noun.shape[1], activation='softmax')(dense_noun3)

model_noun = Model(inputs=inp_noun, outputs=out_noun)

model_noun.compile(optimizer='adam', loss='categorical_crossentropy')

#

model_adj.fit(features[label_adj!='xxx'],y_adj,epochs=10,batch_size=512,verbose=2)

model_noun.fit(features[label_noun!='xxx'],y_noun,epochs=10,batch_size=512,verbose=2)

#

def tag_products(test_id):

# open-plot image

org_response = requests.get(newdata['Image URL'][train.shape[0]+test_id])

original = Image.open(BytesIO(org_response.content))

original = original.resize((224, 224))

plt.imshow(original)

plt.title('ORIGINAL')

plt.show()

#pred noun

pred_noun = model_noun.predict(test[test_id].reshape(1,-1))

pred_noun_class = np.argsort(-pred_noun)[0][0:2]

return_noun_class = pd.Series(label_noun[label_noun!='xxx']).factorize()[1][pred_noun_class].tolist()

#pred adj

pred_adj = model_adj.predict(test[test_id].reshape(1,-1))

pred_adj_class = np.argsort(-pred_adj)[0][0:2]

return_adj_class = pd.Series(label_adj[label_adj!='xxx']).factorize()[1][pred_adj_class].tolist()

print('NOUN:', return_noun_class,'\n','ADJ:',return_adj_class)

#

tag_products(7)

tag_products(8)

tag_products(9)

tag_products(10)

tag_products(11)

tag_products(278)

tag_products(1)