作者: Qidian213

QQ group: 姿态检测&跟踪 781184396

<Github 项目 - deep_sort_yolov3>

https://github.com/nwojke/deep_sort

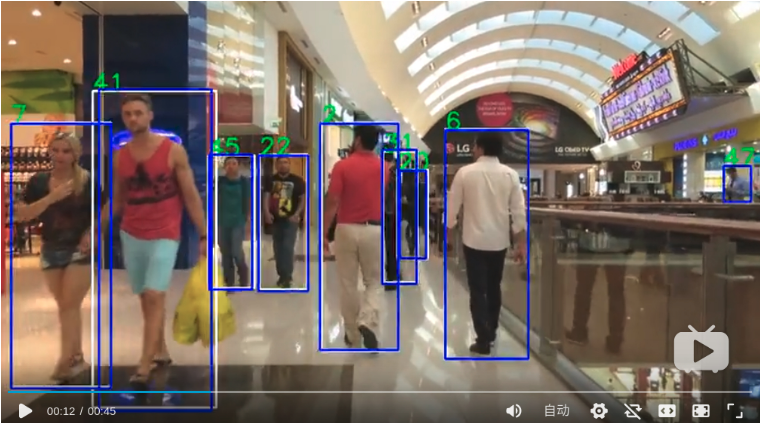

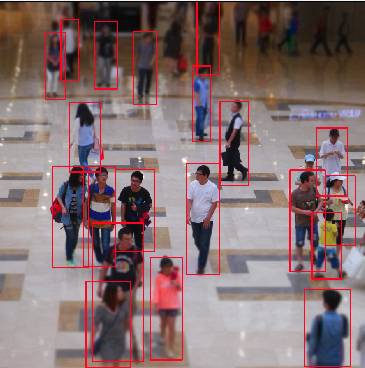

采用 TensorFlow Backend 的 Keras 框架,基于 YOLOV3 和 Deep_Sort 实现的实时多人追踪. 还可以用于人流统计.

该项目现支持 tiny_yolo v3, 但仅用于测试. 如果需要进行模型训练, 可以采用在 darknet 中进行, 或者等待该项目的后续支持.

该项目可以追踪多个目标, 目标类为 COCO 类别. 如果是其它类别,需要修改 yolo.py 中的类别.

该项目也可以在测试时调用相机.

1. 依赖项

项目代码兼容 Python2.7 和 Python3.

追踪器(tracker) 代码依赖项:

NumPy

sklean

OpenCV特征生成(feature generation) 需要基于 TensorFlow1.4.0.

注:

多目标跟踪算法 DeepSort 的模型文件 model_data/mars-small128.pb 需要转换为 TensorFlow1.4.0.

2. 测试结果

速度: 只运行 yolo 检测, 速率大概为 11-13 fps, 添加 deep_sort 多目标追踪后, 速率大概为 11.5 fps (显卡 GTX1060.)

测试结果: https://www.bilibili.com/video/av23500163/

3. 多目标追踪示例

[1] - 从 YOLO website 下载 YOLOV3 或 tiny_yolov3 权重.

[2] - 将下载的 Darknet YOLO 模型转换为 Keras 模型, 并放到 model_data/ 路径.

项目里提供了转换后的 yolo.h5模型:

https://drive.google.com/file/d/1uvXFacPnrSMw6ldWTyLLjGLETlEsUvcE/view?usp=sharing

(基于 TF-1.4.0)

3.1. 模型转换

用法:

# 首先下载 darknet yolov3 权重

python convert.py yolov3.cfg yolov3.weights model_data/yolo.h5convert.py:

#! /usr/bin/env python

"""

Reads Darknet config and weights

and

creates Keras model with TF backend.

"""

import argparse

import configparser

import io

import os

from collections import defaultdict

import numpy as np

from keras import backend as K

from keras.layers import (Conv2D, Input, ZeroPadding2D, Add,

UpSampling2D, Concatenate)

from keras.layers.advanced_activations import LeakyReLU

from keras.layers.normalization import BatchNormalization

from keras.models import Model

from keras.regularizers import l2

from keras.utils.vis_utils import plot_model as plot

parser = argparse.ArgumentParser(description='Darknet To Keras Converter.')

parser.add_argument('config_path', help='Path to Darknet cfg file.')

parser.add_argument('weights_path', help='Path to Darknet weights file.')

parser.add_argument('output_path', help='Path to output Keras model file.')

parser.add_argument(

'-p',

'--plot_model',

help='Plot generated Keras model and save as image.',

action='store_true')

def unique_config_sections(config_file):

"""

Convert all config sections to have unique names.

Adds unique suffixes to config sections for compability with configparser.

"""

section_counters = defaultdict(int)

output_stream = io.BytesIO() #io.StringIO()

with open(config_file) as fin:

for line in fin:

if line.startswith('['):

section = line.strip().strip('[]')

_section = section + '_' + str(section_counters[section])

section_counters[section] += 1

line = line.replace(section, _section)

output_stream.write(line)

output_stream.seek(0)

return output_stream

# %%

def _main(args):

config_path = os.path.expanduser(args.config_path)

weights_path = os.path.expanduser(args.weights_path)

assert config_path.endswith('.cfg'), '{} is not a .cfg file'.format(

config_path)

assert weights_path.endswith(

'.weights'), '{} is not a .weights file'.format(weights_path)

output_path = os.path.expanduser(args.output_path)

assert output_path.endswith(

'.h5'), 'output path {} is not a .h5 file'.format(output_path)

output_root = os.path.splitext(output_path)[0]

# Load weights and config.

print('Loading weights.')

weights_file = open(weights_path, 'rb')

major, minor, revision = np.ndarray(shape=(3, ),

dtype='int32',

buffer=weights_file.read(12))

if (major*10+minor)>=2 and major<1000 and minor<1000:

seen = np.ndarray(shape=(1,),

dtype='int64',

buffer=weights_file.read(8))

else:

seen = np.ndarray(shape=(1,),

dtype='int32',

buffer=weights_file.read(4))

print('Weights Header: ', major, minor, revision, seen)

print('Parsing Darknet config.')

unique_config_file = unique_config_sections(config_path)

cfg_parser = configparser.ConfigParser()

cfg_parser.read_file(unique_config_file)

print('Creating Keras model.')

input_layer = Input(shape=(None, None, 3))

prev_layer = input_layer

all_layers = []

weight_decay = float(cfg_parser['net_0']['decay']

) if 'net_0' in cfg_parser.sections() else 5e-4

count = 0

out_index = []

for section in cfg_parser.sections():

print('Parsing section {}'.format(section))

if section.startswith('convolutional'):

filters = int(cfg_parser[section]['filters'])

size = int(cfg_parser[section]['size'])

stride = int(cfg_parser[section]['stride'])

pad = int(cfg_parser[section]['pad'])

activation = cfg_parser[section]['activation']

batch_normalize = 'batch_normalize' in cfg_parser[section]

padding = 'same' if pad == 1 and stride == 1 else 'valid'

# Setting weights.

# Darknet serializes convolutional weights as:

# [bias/beta, [gamma, mean, variance], conv_weights]

prev_layer_shape = K.int_shape(prev_layer)

weights_shape = (size, size, prev_layer_shape[-1], filters)

darknet_w_shape = (filters, weights_shape[2], size, size)

weights_size = np.product(weights_shape)

print('conv2d',

'bn' if batch_normalize else ' ',

activation,

weights_shape)

conv_bias = np.ndarray(

shape=(filters, ),

dtype='float32',

buffer=weights_file.read(filters * 4))

count += filters

if batch_normalize:

bn_weights = np.ndarray(

shape=(3, filters),

dtype='float32',

buffer=weights_file.read(filters * 12))

count += 3 * filters

bn_weight_list = [

bn_weights[0], # scale gamma

conv_bias, # shift beta

bn_weights[1], # running mean

bn_weights[2] # running var

]

conv_weights = np.ndarray(

shape=darknet_w_shape,

dtype='float32',

buffer=weights_file.read(weights_size * 4))

count += weights_size

# DarkNet conv_weights are serialized Caffe-style:

# (out_dim, in_dim, height, width)

# We would like to set these to Tensorflow order:

# (height, width, in_dim, out_dim)

conv_weights = np.transpose(conv_weights, [2, 3, 1, 0])

conv_weights = [conv_weights] if batch_normalize else [

conv_weights, conv_bias

]

# Handle activation.

act_fn = None

if activation == 'leaky':

pass # Add advanced activation later.

elif activation != 'linear':

raise ValueError(

'Unknown activation function `{}` in section {}'.format(

activation, section))

# Create Conv2D layer

if stride>1:

# Darknet uses left and top padding instead of 'same' mode

prev_layer = ZeroPadding2D(((1,0),(1,0)))(prev_layer)

conv_layer = (Conv2D(

filters, (size, size),

strides=(stride, stride),

kernel_regularizer=l2(weight_decay),

use_bias=not batch_normalize,

weights=conv_weights,

activation=act_fn,

padding=padding))(prev_layer)

if batch_normalize:

conv_layer = (BatchNormalization(

weights=bn_weight_list))(conv_layer)

prev_layer = conv_layer

if activation == 'linear':

all_layers.append(prev_layer)

elif activation == 'leaky':

act_layer = LeakyReLU(alpha=0.1)(prev_layer)

prev_layer = act_layer

all_layers.append(act_layer)

elif section.startswith('route'):

ids = [int(i) for i in cfg_parser[section]['layers'].split(',')]

layers = [all_layers[i] for i in ids]

if len(layers) > 1:

print('Concatenating route layers:', layers)

concatenate_layer = Concatenate()(layers)

all_layers.append(concatenate_layer)

prev_layer = concatenate_layer

else:

skip_layer = layers[0] # only one layer to route

all_layers.append(skip_layer)

prev_layer = skip_layer

elif section.startswith('shortcut'):

index = int(cfg_parser[section]['from'])

activation = cfg_parser[section]['activation']

assert activation == 'linear', 'Only linear activation supported.'

all_layers.append(Add()([all_layers[index], prev_layer]))

prev_layer = all_layers[-1]

elif section.startswith('upsample'):

stride = int(cfg_parser[section]['stride'])

assert stride == 2, 'Only stride=2 supported.'

all_layers.append(UpSampling2D(stride)(prev_layer))

prev_layer = all_layers[-1]

elif section.startswith('yolo'):

out_index.append(len(all_layers)-1)

all_layers.append(None)

prev_layer = all_layers[-1]

elif section.startswith('net'):

pass

else:

raise ValueError(

'Unsupported section header type: {}'.format(section))

# Create and save model.

model = Model(inputs=input_layer, outputs=[all_layers[i] for i in out_index])

print(model.summary())

model.save('{}'.format(output_path))

print('Saved Keras model to {}'.format(output_path))

# Check to see if all weights have been read.

remaining_weights = len(weights_file.read()) / 4

weights_file.close()

print('Read {} of {} from Darknet weights.'.format(count, count +

remaining_weights))

if remaining_weights > 0:

print('Warning: {} unused weights'.format(remaining_weights))

if args.plot_model:

plot(model, to_file='{}.png'.format(output_root), show_shapes=True)

print('Saved model plot to {}.png'.format(output_root))

if __name__ == '__main__':

_main(parser.parse_args())3.2. 多人目标追踪Demo

#! /usr/bin/env python

# -*- coding: utf-8 -*-

from __future__ import division, print_function, absolute_import

from timeit import time

import warnings

import cv2

import numpy as np

from PIL import Image

from yolo import YOLO

from deep_sort import preprocessing

from deep_sort import nn_matching

from deep_sort.detection import Detection

from deep_sort.tracker import Tracker

from tools import generate_detections as gdet

from deep_sort.detection import Detection as ddet

warnings.filterwarnings('ignore')

def main(yolo):

# 参数定义

max_cosine_distance = 0.3

nn_budget = None

nms_max_overlap = 1.0

# deep_sort 目标追踪算法

model_filename = 'model_data/mars-small128.pb'

encoder = gdet.create_box_encoder(model_filename,batch_size=1)

metric = nn_matching.NearestNeighborDistanceMetric(

"cosine", max_cosine_distance, nn_budget)

tracker = Tracker(metric)

writeVideo_flag = True

video_capture = cv2.VideoCapture(0)

if writeVideo_flag:

# Define the codec and create VideoWriter object

w = int(video_capture.get(3))

h = int(video_capture.get(4))

fourcc = cv2.VideoWriter_fourcc(*'MJPG')

out = cv2.VideoWriter('output.avi', fourcc, 15, (w, h))

list_file = open('detection.txt', 'w')

frame_index = -1

fps = 0.0

while True:

ret, frame = video_capture.read() # frame shape 640*480*3

if ret != True:

break;

t1 = time.time()

image = Image.fromarray(frame)

boxs = yolo.detect_image(image)

# print("box_num",len(boxs))

features = encoder(frame,boxs)

# score to 1.0 here).

detections = [Detection(bbox, 1.0, feature) for

bbox, feature in zip(boxs, features)]

# Run non-maxima suppression.

boxes = np.array([d.tlwh for d in detections])

scores = np.array([d.confidence for d in detections])

indices = preprocessing.non_max_suppression(boxes, nms_max_overlap, scores)

detections = [detections[i] for i in indices]

# Call the tracker

tracker.predict()

tracker.update(detections)

for track in tracker.tracks:

if not track.is_confirmed() or track.time_since_update > 1:

continue

bbox = track.to_tlbr()

cv2.rectangle(frame,

(int(bbox[0]), int(bbox[1])),

(int(bbox[2]), int(bbox[3])),

(255,255,255),

2)

cv2.putText(frame,

str(track.track_id),

(int(bbox[0]), int(bbox[1])),

0, 5e-3 * 200, (0,255,0),2)

for det in detections:

bbox = det.to_tlbr()

cv2.rectangle(frame,

(int(bbox[0]), int(bbox[1])),

(int(bbox[2]), int(bbox[3])),

(255,0,0),

2)

cv2.imshow('', frame)

if writeVideo_flag:

# save a frame

out.write(frame)

frame_index = frame_index + 1

list_file.write(str(frame_index)+' ')

if len(boxs) != 0:

for i in range(0,len(boxs)):

list_file.write(str(boxs[i][0]) + ' '+

str(boxs[i][1]) + ' '+

str(boxs[i][2]) + ' '+

str(boxs[i][3]) + ' ')

list_file.write('\n')

fps = ( fps + (1./(time.time()-t1)) ) / 2

print("fps= %f"%(fps))

# Press Q to stop!

if cv2.waitKey(1) & 0xFF == ord('q'):

break

video_capture.release()

if writeVideo_flag:

out.release()

list_file.close()

cv2.destroyAllWindows()

if __name__ == '__main__':

main(YOLO())3.3. 多人目标检测Demo

#! /usr/bin/env python

# -*- coding: utf-8 -*-

from __future__ import division, print_function, absolute_import

import os

from timeit import time

import warnings

import sys

import cv2

import numpy as np

from PIL import Image

import matplotlib.pyplot as plt

from yolo import YOLO

from deep_sort import preprocessing

from deep_sort.detection import Detection

from tools import generate_detections as gdet

warnings.filterwarnings('ignore')

def main(yolo):

nms_max_overlap = 1.0

# deep_sort

model_filename = 'model_data/mars-small128.pb'

encoder = gdet.create_box_encoder(model_filename, batch_size=1)

imgs_dir = "/path/to/test/"

imgs_list = os.listdir(imgs_dir)[20:]

imgs_file = [os.path.join(imgs_dir, tmp) for tmp in imgs_list]

for idx in range(len(imgs_file)):

frame = np.array(Image.open(imgs_file[idx]))

image = Image.fromarray(frame)

boxs = yolo.detect_image(image)

# print("box_num",len(boxs))

features = encoder(frame, boxs)

# score to 1.0 here).

detections = [Detection(bbox, 1.0, feature)

for bbox, feature in zip(boxs, features)]

# Run non-maxima suppression.

boxes = np.array([d.tlwh for d in detections])

scores = np.array([d.confidence for d in detections])

indices = preprocessing.non_max_suppression(

boxes, nms_max_overlap, scores)

detections = [detections[i] for i in indices]

for det in detections:

bbox = det.to_tlbr()

cv2.rectangle(frame,

(int(bbox[0]), int(bbox[1])),

(int(bbox[2]), int(bbox[3])),

(255, 0, 0), 2)

plt.imshow(frame)

plt.show()

if __name__ == '__main__':

main(YOLO())如:

4. YOLOV3 检测模型

4.1. yolo3/model.py

"""YOLO_v3 Model Defined in Keras."""

from functools import wraps

import numpy as np

import tensorflow as tf

from keras import backend as K

from keras.layers import Conv2D, Add, ZeroPadding2D, UpSampling2D, Concatenate

from keras.layers.advanced_activations import LeakyReLU

from keras.layers.normalization import BatchNormalization

from keras.models import Model

from keras.regularizers import l2

from yolo3.utils import compose

@wraps(Conv2D)

def DarknetConv2D(*args, **kwargs):

"""

Wrapper to set Darknet parameters for Convolution2D.

"""

darknet_conv_kwargs = {'kernel_regularizer': l2(5e-4)}

darknet_conv_kwargs['padding'] = 'valid' if kwargs.get('strides')==(2,2) else 'same'

darknet_conv_kwargs.update(kwargs)

return Conv2D(*args, **darknet_conv_kwargs)

def DarknetConv2D_BN_Leaky(*args, **kwargs):

"""

Darknet Convolution2D followed by BatchNormalization and LeakyReLU.

"""

no_bias_kwargs = {'use_bias': False}

no_bias_kwargs.update(kwargs)

return compose(

DarknetConv2D(*args, **no_bias_kwargs),

BatchNormalization(),

LeakyReLU(alpha=0.1))

def resblock_body(x, num_filters, num_blocks):

'''

A series of resblocks starting with a downsampling Convolution2D

'''

# Darknet uses left and top padding instead of 'same' mode

x = ZeroPadding2D(((1,0),(1,0)))(x)

x = DarknetConv2D_BN_Leaky(num_filters, (3,3), strides=(2,2))(x)

for i in range(num_blocks):

y = compose(

DarknetConv2D_BN_Leaky(num_filters//2, (1,1)),

DarknetConv2D_BN_Leaky(num_filters, (3,3)))(x)

x = Add()([x,y])

return x

def darknet_body(x):

'''

Darknent body having 52 Convolution2D layers

'''

x = DarknetConv2D_BN_Leaky(32, (3,3))(x)

x = resblock_body(x, 64, 1)

x = resblock_body(x, 128, 2)

x = resblock_body(x, 256, 8)

x = resblock_body(x, 512, 8)

x = resblock_body(x, 1024, 4)

return x

def make_last_layers(x, num_filters, out_filters):

'''

6 Conv2D_BN_Leaky layers followed by a Conv2D_linear layer

'''

x = compose(

DarknetConv2D_BN_Leaky(num_filters, (1,1)),

DarknetConv2D_BN_Leaky(num_filters*2, (3,3)),

DarknetConv2D_BN_Leaky(num_filters, (1,1)),

DarknetConv2D_BN_Leaky(num_filters*2, (3,3)),

DarknetConv2D_BN_Leaky(num_filters, (1,1)))(x)

y = compose(

DarknetConv2D_BN_Leaky(num_filters*2, (3,3)),

DarknetConv2D(out_filters, (1,1)))(x)

return x, y

def yolo_body(inputs, num_anchors, num_classes):

"""

Create YOLO_V3 model CNN body in Keras.

"""

darknet = Model(inputs, darknet_body(inputs))

x, y1 = make_last_layers(darknet.output,

512,

num_anchors*(num_classes+5))

x = compose(DarknetConv2D_BN_Leaky(256, (1,1)),

UpSampling2D(2))(x)

x = Concatenate()([x,darknet.layers[152].output])

x, y2 = make_last_layers(x, 256, num_anchors*(num_classes+5))

x = compose(DarknetConv2D_BN_Leaky(128, (1,1)),

UpSampling2D(2))(x)

x = Concatenate()([x,darknet.layers[92].output])

x, y3 = make_last_layers(x, 128, num_anchors*(num_classes+5))

return Model(inputs, [y1,y2,y3])

def yolo_head(feats, anchors, num_classes, input_shape):

"""

Convert final layer features to bounding box parameters.

"""

num_anchors = len(anchors)

# Reshape to batch, height, width, num_anchors, box_params.

anchors_tensor = K.reshape(K.constant(anchors), [1, 1, 1, num_anchors, 2])

grid_shape = K.shape(feats)[1:3] # height, width

grid_y = K.tile(

K.reshape(K.arange(0, stop=grid_shape[0]), [-1, 1, 1, 1]),

[1, grid_shape[1], 1, 1])

grid_x = K.tile(

K.reshape(K.arange(0, stop=grid_shape[1]), [1, -1, 1, 1]),

[grid_shape[0], 1, 1, 1])

grid = K.concatenate([grid_x, grid_y])

grid = K.cast(grid, K.dtype(feats))

feats = K.reshape(feats,

[-1,

grid_shape[0],

grid_shape[1],

num_anchors,

num_classes + 5])

box_xy = K.sigmoid(feats[..., :2])

box_wh = K.exp(feats[..., 2:4])

box_confidence = K.sigmoid(feats[..., 4:5])

box_class_probs = K.sigmoid(feats[..., 5:])

# Adjust preditions to each spatial grid point and anchor size.

box_xy = (box_xy + grid) / K.cast(grid_shape[::-1], K.dtype(feats))

box_wh = box_wh * anchors_tensor / K.cast(input_shape[::-1], K.dtype(feats))

return box_xy, box_wh, box_confidence, box_class_probs

def yolo_correct_boxes(box_xy, box_wh, input_shape, image_shape):

'''

Get corrected boxes

'''

box_yx = box_xy[..., ::-1]

box_hw = box_wh[..., ::-1]

input_shape = K.cast(input_shape, K.dtype(box_yx))

image_shape = K.cast(image_shape, K.dtype(box_yx))

new_shape = K.round(image_shape * K.min(input_shape/image_shape))

offset = (input_shape-new_shape)/2./input_shape

scale = input_shape/new_shape

box_yx = (box_yx - offset) * scale

box_hw *= scale

box_mins = box_yx - (box_hw / 2.)

box_maxes = box_yx + (box_hw / 2.)

boxes = K.concatenate([

box_mins[..., 0:1], # y_min

box_mins[..., 1:2], # x_min

box_maxes[..., 0:1], # y_max

box_maxes[..., 1:2] # x_max

])

# Scale boxes back to original image shape.

boxes *= K.concatenate([image_shape, image_shape])

return boxes

def yolo_boxes_and_scores(feats, anchors, num_classes, input_shape, image_shape):

'''

Process Conv layer output

'''

box_xy, box_wh, box_confidence, box_class_probs = yolo_head(feats,

anchors, num_classes, input_shape)

boxes = yolo_correct_boxes(box_xy, box_wh, input_shape, image_shape)

boxes = K.reshape(boxes, [-1, 4])

box_scores = box_confidence * box_class_probs

box_scores = K.reshape(box_scores, [-1, num_classes])

return boxes, box_scores

def yolo_eval(yolo_outputs,

anchors,

num_classes,

image_shape,

max_boxes=20,

score_threshold=.6,

iou_threshold=.5):

"""

Evaluate YOLO model on given input and return filtered boxes.

"""

anchor_mask = [[6,7,8], [3,4,5], [0,1,2]]

input_shape = K.shape(yolo_outputs[0])[1:3] * 32

boxes = []

box_scores = []

for l in range(3):

_boxes, _box_scores = yolo_boxes_and_scores(yolo_outputs[l],

anchors[anchor_mask[l]], num_classes, input_shape, image_shape)

boxes.append(_boxes)

box_scores.append(_box_scores)

boxes = K.concatenate(boxes, axis=0)

box_scores = K.concatenate(box_scores, axis=0)

mask = box_scores >= score_threshold

max_boxes_tensor = K.constant(max_boxes, dtype='int32')

boxes_ = []

scores_ = []

classes_ = []

for c in range(num_classes):

# TODO: use keras backend instead of tf.

class_boxes = tf.boolean_mask(boxes, mask[:, c])

class_box_scores = tf.boolean_mask(box_scores[:, c], mask[:, c])

nms_index = tf.image.non_max_suppression(

class_boxes,

class_box_scores,

max_boxes_tensor,

iou_threshold=iou_threshold)

class_boxes = K.gather(class_boxes, nms_index)

class_box_scores = K.gather(class_box_scores, nms_index)

classes = K.ones_like(class_box_scores, 'int32') * c

boxes_.append(class_boxes)

scores_.append(class_box_scores)

classes_.append(classes)

boxes_ = K.concatenate(boxes_, axis=0)

scores_ = K.concatenate(scores_, axis=0)

classes_ = K.concatenate(classes_, axis=0)

return boxes_, scores_, classes_

def preprocess_true_boxes(true_boxes, input_shape, anchors, num_classes):

'''

Preprocess true boxes to training input format

Parameters

----------

true_boxes: array, shape=(m, T, 5)

Absolute x_min, y_min, x_max, y_max, class_code reletive to input_shape.

input_shape: array-like, hw, multiples of 32

anchors: array, shape=(N, 2), wh

num_classes: integer

Returns

-------

y_true: list of array, shape like yolo_outputs, xywh are reletive value

'''

anchor_mask = [[6,7,8], [3,4,5], [0,1,2]]

true_boxes = np.array(true_boxes, dtype='float32')

input_shape = np.array(input_shape, dtype='int32')

boxes_xy = (true_boxes[..., 0:2] + true_boxes[..., 2:4]) // 2

boxes_wh = true_boxes[..., 2:4] - true_boxes[..., 0:2]

true_boxes[..., 0:2] = boxes_xy/input_shape[::-1]

true_boxes[..., 2:4] = boxes_wh/input_shape[::-1]

m = true_boxes.shape[0]

grid_shapes = [input_shape//{0:32, 1:16, 2:8}[l] for l in range(3)]

y_true = [

np.zeros((m,

grid_shapes[l][0],

grid_shapes[l][1],

len(anchor_mask[l]),

5+num_classes),

dtype='float32') for l in range(3)]

# Expand dim to apply broadcasting.

anchors = np.expand_dims(anchors, 0)

anchor_maxes = anchors / 2.

anchor_mins = -anchor_maxes

valid_mask = boxes_wh[..., 0]>0

for b in range(m):

# Discard zero rows.

wh = boxes_wh[b, valid_mask[b]]

# Expand dim to apply broadcasting.

wh = np.expand_dims(wh, -2)

box_maxes = wh / 2.

box_mins = -box_maxes

intersect_mins = np.maximum(box_mins, anchor_mins)

intersect_maxes = np.minimum(box_maxes, anchor_maxes)

intersect_wh = np.maximum(intersect_maxes - intersect_mins, 0.)

intersect_area = intersect_wh[..., 0] * intersect_wh[..., 1]

box_area = wh[..., 0] * wh[..., 1]

anchor_area = anchors[..., 0] * anchors[..., 1]

iou = intersect_area / (box_area + anchor_area - intersect_area)

# Find best anchor for each true box

best_anchor = np.argmax(iou, axis=-1)

for t, n in enumerate(best_anchor):

for l in range(3):

if n in anchor_mask[l]:

i = np.floor(true_boxes[b,t,0]*grid_shapes[l][1]).astype('int32')

j = np.floor(true_boxes[b,t,1]*grid_shapes[l][0]).astype('int32')

n = anchor_mask[l].index(n)

c = true_boxes[b,t, 4].astype('int32')

y_true[l][b, j, i, n, 0:4] = true_boxes[b,t, 0:4]

y_true[l][b, j, i, n, 4] = 1

y_true[l][b, j, i, n, 5+c] = 1

break

return y_true

def box_iou(b1, b2):

'''

Return iou tensor

Parameters

----------

b1: tensor, shape=(i1,...,iN, 4), xywh

b2: tensor, shape=(j, 4), xywh

Returns

-------

iou: tensor, shape=(i1,...,iN, j)

'''

# Expand dim to apply broadcasting.

b1 = K.expand_dims(b1, -2)

b1_xy = b1[..., :2]

b1_wh = b1[..., 2:4]

b1_wh_half = b1_wh/2.

b1_mins = b1_xy - b1_wh_half

b1_maxes = b1_xy + b1_wh_half

# Expand dim to apply broadcasting.

b2 = K.expand_dims(b2, 0)

b2_xy = b2[..., :2]

b2_wh = b2[..., 2:4]

b2_wh_half = b2_wh/2.

b2_mins = b2_xy - b2_wh_half

b2_maxes = b2_xy + b2_wh_half

intersect_mins = K.maximum(b1_mins, b2_mins)

intersect_maxes = K.minimum(b1_maxes, b2_maxes)

intersect_wh = K.maximum(intersect_maxes - intersect_mins, 0.)

intersect_area = intersect_wh[..., 0] * intersect_wh[..., 1]

b1_area = b1_wh[..., 0] * b1_wh[..., 1]

b2_area = b2_wh[..., 0] * b2_wh[..., 1]

iou = intersect_area / (b1_area + b2_area - intersect_area)

return iou

def yolo_loss(args, anchors, num_classes, ignore_thresh=.5):

'''

Return yolo_loss tensor

Parameters

----------

yolo_outputs: list of tensor, the output of yolo_body

y_true: list of array, the output of preprocess_true_boxes

anchors: array, shape=(T, 2), wh

num_classes: integer

ignore_thresh: float, the iou threshold whether to ignore object confidence loss

Returns

-------

loss: tensor, shape=(1,)

'''

yolo_outputs = args[:3]

y_true = args[3:]

anchor_mask = [[6,7,8], [3,4,5], [0,1,2]]

input_shape = K.cast(K.shape(yolo_outputs[0])[1:3] * 32, K.dtype(y_true[0]))

grid_shapes = [K.cast(K.shape(yolo_outputs[l])[1:3], K.dtype(y_true[0])) for l in range(3)]

loss = 0

m = K.shape(yolo_outputs[0])[0]

for l in range(3):

object_mask = y_true[l][..., 4:5]

true_class_probs = y_true[l][..., 5:]

pred_xy, pred_wh, pred_confidence, pred_class_probs = yolo_head(

yolo_outputs[l],

anchors[anchor_mask[l]],

num_classes,

input_shape)

pred_box = K.concatenate([pred_xy, pred_wh])

# Darknet box loss.

xy_delta = (y_true[l][..., :2]-pred_xy)*grid_shapes[l][::-1]

wh_delta = K.log(y_true[l][..., 2:4]) - K.log(pred_wh)

# Avoid log(0)=-inf.

wh_delta = K.switch(object_mask, wh_delta, K.zeros_like(wh_delta))

box_delta = K.concatenate([xy_delta, wh_delta], axis=-1)

box_delta_scale = 2 - y_true[l][...,2:3]*y_true[l][...,3:4]

# Find ignore mask, iterate over each of batch.

ignore_mask = tf.TensorArray(K.dtype(y_true[0]), size=1, dynamic_size=True)

object_mask_bool = K.cast(object_mask, 'bool')

def loop_body(b, ignore_mask):

true_box = tf.boolean_mask(y_true[l][b,...,0:4], object_mask_bool[b,...,0])

iou = box_iou(pred_box[b], true_box)

best_iou = K.max(iou, axis=-1)

ignore_mask = ignore_mask.write(b, K.cast(best_iou<ignore_thresh, K.dtype(true_box)))

return b+1, ignore_mask

_, ignore_mask = K.control_flow_ops.while_loop(lambda b,*args: b<m, loop_body, [0, ignore_mask])

ignore_mask = ignore_mask.stack()

ignore_mask = K.expand_dims(ignore_mask, -1)

box_loss = object_mask * K.square(box_delta*box_delta_scale)

confidence_loss = object_mask * K.square(1-pred_confidence) + \

(1-object_mask) * K.square(0-pred_confidence) * ignore_mask

class_loss = object_mask * K.square(true_class_probs-pred_class_probs)

loss += K.sum(box_loss) + K.sum(confidence_loss) + K.sum(class_loss)

return loss / K.cast(m, K.dtype(loss))

4.2. yolov3/utils.py

"""Miscellaneous utility functions."""

from functools import reduce

from PIL import Image

def compose(*funcs):

"""

Compose arbitrarily many functions, evaluated left to right.

Reference: https://mathieularose.com/function-composition-in-python/

"""

# return lambda x: reduce(lambda v, f: f(v), funcs, x)

if funcs:

return reduce(lambda f, g: lambda *a, **kw: g(f(*a, **kw)), funcs)

else:

raise ValueError('Composition of empty sequence not supported.')

def letterbox_image(image, size):

'''

resize image with unchanged aspect ratio using padding

'''

image_w, image_h = image.size

w, h = size

new_w = int(image_w * min(w*1.0/image_w, h*1.0/image_h))

new_h = int(image_h * min(w*1.0/image_w, h*1.0/image_h))

resized_image = image.resize((new_w,new_h), Image.BICUBIC)

boxed_image = Image.new('RGB', size, (128,128,128))

boxed_image.paste(resized_image, ((w-new_w)//2,(h-new_h)//2))

return boxed_image

4.3. yolo.py

#! /usr/bin/env python

# -*- coding: utf-8 -*-

"""

Run a YOLO_v3 style detection model on test images.

"""

import colorsys

import os

import random

import numpy as np

from keras import backend as K

from keras.models import load_model

from yolo3.model import yolo_eval

from yolo3.utils import letterbox_image

class YOLO(object):

def __init__(self):

self.model_path = 'model_data/yolo.h5'

self.anchors_path = 'model_data/yolo_anchors.txt'

self.classes_path = 'model_data/coco_classes.txt'

self.score = 0.5

self.iou = 0.5

self.class_names = self._get_class()

self.anchors = self._get_anchors()

self.sess = K.get_session()

self.model_image_size = (416, 416) # fixed size or (None, None)

self.is_fixed_size = self.model_image_size != (None, None)

self.boxes, self.scores, self.classes = self.generate()

def _get_class(self):

classes_path = os.path.expanduser(self.classes_path)

with open(classes_path) as f:

class_names = f.readlines()

class_names = [c.strip() for c in class_names]

return class_names

def _get_anchors(self):

anchors_path = os.path.expanduser(self.anchors_path)

with open(anchors_path) as f:

anchors = f.readline()

anchors = [float(x) for x in anchors.split(',')]

anchors = np.array(anchors).reshape(-1, 2)

return anchors

def generate(self):

model_path = os.path.expanduser(self.model_path)

assert model_path.endswith('.h5'), 'Keras model must be a .h5 file.'

self.yolo_model = load_model(model_path, compile=False)

print('{} model, anchors, and classes loaded.'.format(model_path))

# Generate colors for drawing bounding boxes.

hsv_tuples = [(x / len(self.class_names), 1., 1.)

for x in range(len(self.class_names))]

self.colors = list(map(lambda x: colorsys.hsv_to_rgb(*x), hsv_tuples))

self.colors = list(

map(lambda x: (int(x[0] * 255), int(x[1] * 255), int(x[2] * 255)),

self.colors))

random.seed(10101) # Fixed seed for consistent colors across runs.

random.shuffle(self.colors) # Shuffle colors to decorrelate adjacent classes.

random.seed(None) # Reset seed to default.

# Generate output tensor targets for filtered bounding boxes.

self.input_image_shape = K.placeholder(shape=(2, ))

boxes, scores, classes = yolo_eval(self.yolo_model.output, self.anchors,

len(self.class_names), self.input_image_shape,

score_threshold=self.score, iou_threshold=self.iou)

return boxes, scores, classes

def detect_image(self, image):

if self.is_fixed_size:

assert self.model_image_size[0]%32 == 0, 'Multiples of 32 required'

assert self.model_image_size[1]%32 == 0, 'Multiples of 32 required'

boxed_image = letterbox_image(

image,

tuple(reversed(self.model_image_size)))

else:

new_image_size = (image.width - (image.width % 32),

image.height - (image.height % 32))

boxed_image = letterbox_image(image, new_image_size)

image_data = np.array(boxed_image, dtype='float32')

#print(image_data.shape)

image_data /= 255.

image_data = np.expand_dims(image_data, 0) # Add batch dimension.

out_boxes, out_scores, out_classes = self.sess.run(

[self.boxes, self.scores, self.classes],

feed_dict={

self.yolo_model.input: image_data,

self.input_image_shape: [image.size[1], image.size[0]],

K.learning_phase(): 0

})

return_boxs = []

for i, c in reversed(list(enumerate(out_classes))):

predicted_class = self.class_names[c]

if predicted_class != 'person' :

continue

box = out_boxes[i]

# score = out_scores[i]

x = int(box[1])

y = int(box[0])

w = int(box[3]-box[1])

h = int(box[2]-box[0])

if x < 0 :

w = w + x

x = 0

if y < 0 :

h = h + y

y = 0

return_boxs.append([x,y,w,h])

return return_boxs

def close_session(self):

self.sess.close()

48 comments

你好 deepsort 用的mars-small128.pb,我看到这个pb是基于Market1501训练出来的,只能追踪人。要是追踪其他物体,比如汽车,该怎么处理呢?

做一个追踪模型呢

嗯 谢谢了 做自己追踪模型从哪方面入手了?目前还没做过yolo3的自己的数据集,做了sort的追踪,deepsort的追踪模型不知从哪入手合适。

Appreciate it, Ample info.

您好!想问下yolo.py中self.score=0.5和self.iou=0.5的值有什么影响,还有demo.py中max_cosine_distance=0.3这个值的大小与结果有什么关系呢?感谢!

self.score 和 self.iou 是用于检测的类目和重叠的阈值;max_cosine_distance 是最近邻匹配的阈值.

博主你好,请问如果想跟踪行人以外的目标,需要单独训练特征生成做重识别吗?

不一定需要,只要有相应的目标的检测器,可以的

mars-small128.pb这个文件适用于多种物体的跟踪吗,我运行成功了,但是没有弹出摄像头窗口就自动关闭了

多目标追踪的模型

请问博主,,您是采用的GPU运行的程序嘛,看到您的代码上说能达到11FPS,但是我运行只有1FPS

我测试用的是 GPU

哦哦,谢谢博主

博主 我问一下detection.txt中的信息是什么意思

检测的输出结果.

您好,我想问一下这个代码是针对gpu运行的吗,我运行程序,fps很低才2左右,yolo部分是用cpu运行的,这是为什么呢?

换成 yolov3-tiny 试试.

好的,我试一下,谢谢

您好,请问一下该工程能够实现基于摄像头的实时监测追踪吗?如何实现呢?

摄像头只是图像采集设备,实时处理的话,需要根据硬件环境综合考虑.

好的,谢谢!

你好,我激活函数用的relu,为什么运行convert.py时显示,ValueError: Unknown activation function

reluin section convolutional_1。tf 版本?哪个模型?

for i, c in reversed(list(enumerate(out_classes))): 博主您好,请问这里的reversed是什么作用呢

reversed 函数简单介绍 https://blog.csdn.net/gymaisyl/article/details/83785853

博主,我用训练过的yolo的h5文件去运行demo报错了,请问能指教一下吗?input to reshape is a tensor with 3042 values,but the requested shape requires a multiple of 3549

您好,请问您问题解决了吗?我也遇到了这个问题

输入的 shape 不一致

博主您好,我想请问一下,这个模型转换挑不挑模型呢?就是想问,自己基于darknet训练的yolov3模型都能转完进一步应用吗?预设anchor的个数与分配以及网络的输入尺寸对其有影响吗?您有没有转过自己训练的模型呢?

自定义训练的模型是可以进行应用的,这是包含两部分,检测和追踪,yolov3 完成的是检测部分的工作. 预设anchor的个数与分配以及网络的输入尺寸对yolov3有影响.

像您说的shape不一致导致的问题,但是没搞清楚需要调整哪里,所以想先跟您确定一下,这个模型转换是不是对模型的训练配置有要求,如果没有的话我就再进一步找一下报错的原因,或者您帮忙具体指出一下应该调整哪里,谢谢您啦!

模型转换是需要训练的 cfg 文件和对应得到的训练模型的,可以看下 convert.py 的参数项.

嗯嗯,谢谢您的回答~ 就是我现在将自己训练的yolov3(我调整了anchor数以及每个YOLO层的anchor设置,网络输入的尺寸)转成.h5,运行demo时出现了Input to reshape is a tensor with 4056 values, but the requested shape requires a multiple of 43095的错误

请问下您有没有试着复现商汤最近的多目标追踪算法

最近比较忙,算法论文还没顾得上

博主,你的这套算法能用motchallenge的训练集或测试集吗?路径怎么改呢

你好,DeepSort 的模型文件 model_data/mars-small128.pb 需要转换为 TensorFlow1.4.0. 能说详细点吗?必须用TensorFlow1.4.0.吗?

基于其它TF版本没有运行成功.

您好,博主,有个问题想请教一下,该项目可以直接使用tiny_yolov3的权重来测试吗?我用tiny_yolov3权重测试,报错Cannot create group in read only mode,有什么问题吗?万分感谢!

是不是因为文件的权限是只读而导致的

权限是读写的,我想确认下是不是只需更改cfg文件和weights文件,其他不用改,看别的地方说是h5文件只保存了权重,并没有模型也就是计算图,所以应该重建模型,是这样吗?

不需要其它文件了,这个是测试过可以运行的.

好的,谢谢!那我再试一下

不好意思,博主,还有个问题想请教你一下,就是yolov3-tiny.weights在转换成h5文件时,不识别maxpool层,convert.py代码里面没有针对maxpool层的转换,这个要怎么改一下呢?

博主,我在转换格式时候出现了问题,能指教一下吗

错误内容是?

NearestNeighborDistanceMetric这个类的第三个参数budget请问是做什么用的?

budget : Optional[int]. If not None, fix samples per class to at most this number. Removes the oldest samples when the budget is reached. 应该是类似于清除缓存的作用.