Project - PointRend - detectron2

Tutorial - PointRend in Detectron2

This post has two main parts:

[1] - Testing the PointRend pretrained model.

[2] - Visualizing PointRend's intermediate representations.

The environment setup in Colab, based on CUDA 10.1, is as follows:

# 1. Install torch and torchvision
pip install -U torch==1.5 torchvision==0.6 -f https://download.pytorch.org/whl/cu101/torch_stable.html
pip install cython pyyaml==5.1
pip install -U 'git+https://github.com/cocodataset/cocoapi.git#subdirectory=PythonAPI'
# import torch, torchvision
# torch.__version__

# 2. Install detectron2
pip install detectron2==0.1.3 -f https://dl.fbaipublicfiles.com/detectron2/wheels/cu101/torch1.5/index.html
# Clone the repo to access the PointRend code. Use the same version as the installed detectron2.
git clone --branch v0.1.3 https://github.com/facebookresearch/detectron2 detectron2_repo

1. Testing the PointRend pretrained model

#!/usr/bin/python3
# -*- coding: utf-8 -*-
import sys

import numpy as np
import cv2
import matplotlib.pyplot as plt
import torch
# cv2_imshow is a Colab-only helper; outside Colab, display images with matplotlib instead.
from google.colab.patches import cv2_imshow

# import some common detectron2 utilities
import detectron2
from detectron2.utils.logger import setup_logger
setup_logger()

from detectron2 import model_zoo
from detectron2.engine import DefaultPredictor
from detectron2.config import get_cfg
from detectron2.utils.visualizer import Visualizer, ColorMode
from detectron2.data import MetadataCatalog
coco_metadata = MetadataCatalog.get("coco_2017_val")

# import the PointRend project from the cloned repo
sys.path.insert(1, "detectron2_repo/projects/PointRend")
import point_rend

# Test image:
# http://images.cocodataset.org/val2017/000000005477.jpg
im = cv2.imread("/path/to/input.jpg")
cv2_imshow(im)

# Standard Mask R-CNN model
cfg = get_cfg()
cfg.merge_from_file(model_zoo.get_config_file("COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x.yaml"))
cfg.MODEL.ROI_HEADS.SCORE_THRESH_TEST = 0.5  # set threshold for this model
cfg.MODEL.WEIGHTS = model_zoo.get_checkpoint_url("COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x.yaml")
mask_rcnn_predictor = DefaultPredictor(cfg)
mask_rcnn_outputs = mask_rcnn_predictor(im)

# PointRend model
cfg = get_cfg()
# Add PointRend-specific config
point_rend.add_pointrend_config(cfg)
# Load a config from file
cfg.merge_from_file("detectron2_repo/projects/PointRend/configs/InstanceSegmentation/pointrend_rcnn_R_50_FPN_3x_coco.yaml")
cfg.MODEL.ROI_HEADS.SCORE_THRESH_TEST = 0.5  # set threshold for this model
# Use a model from PointRend model zoo: https://github.com/facebookresearch/detectron2/tree/master/projects/PointRend#pretrained-models
cfg.MODEL.WEIGHTS = "detectron2://PointRend/InstanceSegmentation/pointrend_rcnn_R_50_FPN_3x_coco/164955410/model_final_3c3198.pkl"
predictor = DefaultPredictor(cfg)
outputs = predictor(im)

# Visualization: compare the two outputs.
v = Visualizer(im[:, :, ::-1], coco_metadata, scale=1.2, instance_mode=ColorMode.IMAGE_BW)
mask_rcnn_result = v.draw_instance_predictions(mask_rcnn_outputs["instances"].to("cpu")).get_image()
v = Visualizer(im[:, :, ::-1], coco_metadata, scale=1.2, instance_mode=ColorMode.IMAGE_BW)
point_rend_result = v.draw_instance_predictions(outputs["instances"].to("cpu")).get_image()
print("Mask R-CNN with PointRend (left) vs. Default Mask R-CNN (right)")

# Visualizer returns RGB images, which is what matplotlib expects, so no channel flip is needed.
plt.figure(figsize=(10, 8))
plt.subplot(121)
plt.imshow(point_rend_result)
plt.axis('off')
plt.subplot(122)
plt.imshow(mask_rcnn_result)
plt.axis('off')
plt.show()

Example output:

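2. Visualizing PointRend point sampling

This part shows how point sampling works in PointRend, using the intermediate representations computed inside the model's forward pass.

First, define a function that plots an intermediate mask prediction: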

import matplotlib.pyplot as plt

def plot_mask(mask, title="", point_coords=None, figsize=10, point_marker_size=5):
    '''
    Simple plotting tool to show intermediate mask predictions and points
    where PointRend is applied.

    Args:
        mask (Tensor): mask prediction of shape HxW
        title (str): title for the plot
        point_coords ((Tensor, Tensor)): x and y point coordinates
        figsize (int): size of the figure to plot
        point_marker_size (int): marker size for points
    '''
    H, W = mask.shape
    plt.figure(figsize=(figsize, figsize))
    if title:
        title += ", "
    plt.title("{}resolution {}x{}".format(title, H, W), fontsize=30)
    plt.ylabel(H, fontsize=30)
    plt.xlabel(W, fontsize=30)
    plt.xticks([], [])
    plt.yticks([], [])
    plt.imshow(mask, interpolation="nearest", cmap=plt.get_cmap('gray'))
    if point_coords is not None:
        plt.scatter(x=point_coords[0], y=point_coords[1], color="red", s=point_marker_size, clip_on=True)
    plt.xlim(-0.5, W - 0.5)
    plt.ylim(H - 0.5, - 0.5)
    plt.show()

Forward pass through the network, using the predictor:

model = predictor.model
# In this image we detect several objects but show only the first one.
instance_idx = 0
# Mask predictions are class-specific, "plane" class has id 4.
category_idx = 4

with torch.no_grad():
    # Prepare input image.
    height, width = im.shape[:2]
    im_transformed = predictor.transform_gen.get_transform(im).apply_image(im)
    batched_inputs = [{"image": torch.as_tensor(im_transformed).permute(2, 0, 1)}]

    # Get bounding box predictions first to simplify the code.
    detected_instances = [x["instances"] for x in model.inference(batched_inputs)]
    for r in detected_instances:
        r.remove("pred_masks")  # remove existing mask predictions
    pred_boxes = [x.pred_boxes for x in detected_instances]

    # Run backbone.
    images = model.preprocess_image(batched_inputs)
    features = model.backbone(images.tensor)

    # Given the bounding boxes, run coarse mask prediction head.
    mask_coarse_logits = model.roi_heads._forward_mask_coarse(features, pred_boxes)

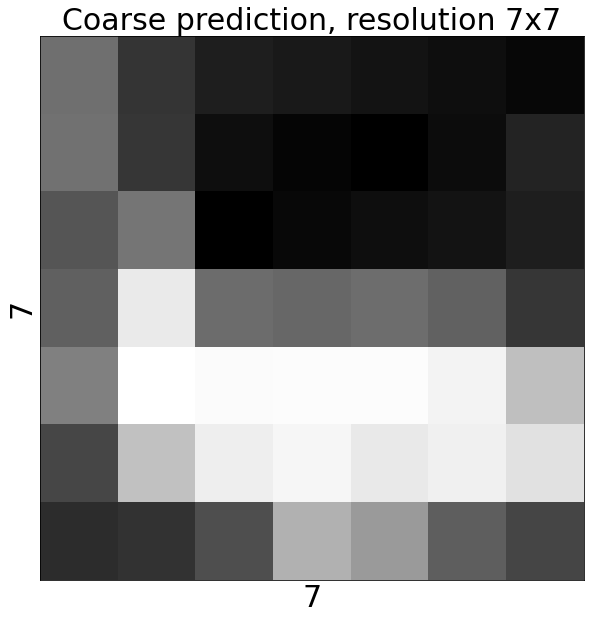

plot_mask(mask_coarse_logits[instance_idx, category_idx].to("cpu"),
          title="Coarse prediction")

# Prepare feature maps to use later
mask_features_list = [features[k] for k in model.roi_heads.mask_point_in_features]
features_scales = [model.roi_heads._feature_scales[k]
                   for k in model.roi_heads.mask_point_in_features]

2.1. Training stage - Point Sampling

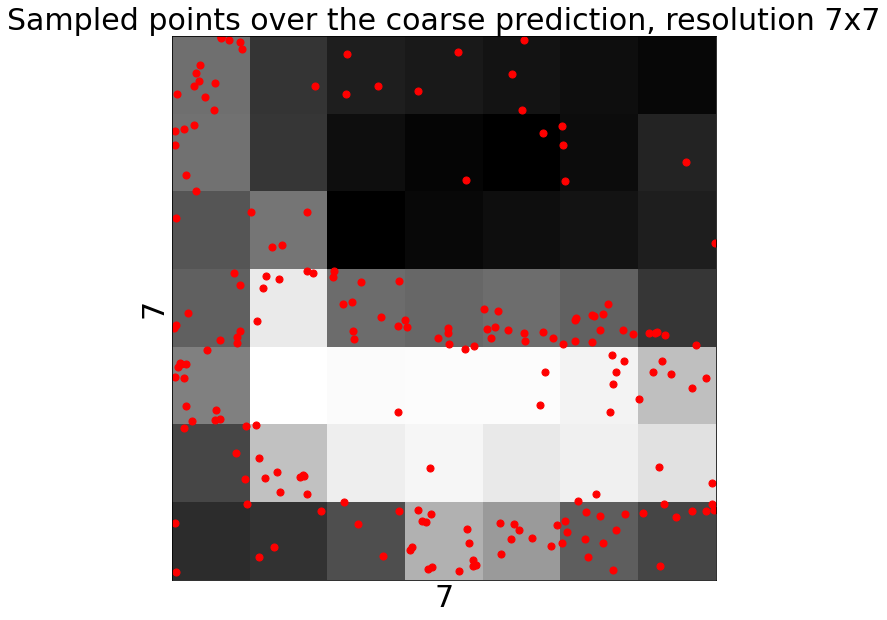

During training, uncertain points in the coarse prediction are selected and used to train the PointRend head.
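In the `calculate_uncertainty` function listed later in this post, a point's uncertainty is the negative absolute value of its foreground logit, so logits near 0 (probability near 0.5) rank highest. The following is a minimal NumPy sketch of that idea only; the helper name `uncertainty_from_logits` and the logit values are made up for illustration.

```python
import numpy as np

def uncertainty_from_logits(logits):
    """Return -|logit|: the closer a logit is to 0, the higher the score."""
    return -np.abs(logits)

# Hypothetical foreground logits for five points (illustrative values only).
logits = np.array([4.0, -0.2, 1.5, -3.0, 0.1])
scores = uncertainty_from_logits(logits)

# Indices of the two most uncertain points, most uncertain first.
most_uncertain = np.argsort(-scores)[:2]
print(most_uncertain)  # [4 1]
```

Points 4 and 1 win because their logits (0.1 and -0.2) are the closest to the decision boundary.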

To see how different sampling strategies affect the result, change the oversample_ratio and importance_sample_ratio parameters:
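As a rough illustration of what these two parameters control, here is a NumPy sketch of the three-step sampling scheme: over-generate k * N random candidates, keep the beta * N most uncertain ones, then top up with fresh uniform points. It is a simplified single-box version, not the detectron2 implementation; `sample_points_with_randomness` and `toy_uncertainty` are hypothetical names, and the toy score stands in for the real logit-based uncertainty.

```python
import numpy as np

def sample_points_with_randomness(uncertainty_fn, num_points, oversample_ratio,
                                  importance_sample_ratio, rng):
    """Pick num_points locations in [0, 1] x [0, 1], biased toward uncertain ones."""
    assert oversample_ratio >= 1
    assert 0.0 <= importance_sample_ratio <= 1.0
    # 1) Over-generate k * N uniformly random candidates.
    num_sampled = int(num_points * oversample_ratio)
    candidates = rng.random((num_sampled, 2))
    # 2) Keep the beta * N candidates with the highest uncertainty.
    num_uncertain = int(importance_sample_ratio * num_points)
    scores = uncertainty_fn(candidates)
    top = np.argsort(-scores)[:num_uncertain]
    chosen = candidates[top]
    # 3) Fill the remaining (1 - beta) * N slots with fresh uniform points.
    num_random = num_points - num_uncertain
    if num_random > 0:
        chosen = np.concatenate([chosen, rng.random((num_random, 2))], axis=0)
    return chosen

def toy_uncertainty(xy):
    """Hypothetical score: highest on a circle of radius 0.3 around the centre."""
    radius = np.linalg.norm(xy - 0.5, axis=1)
    return -np.abs(radius - 0.3)

rng = np.random.default_rng(0)
points = sample_points_with_randomness(toy_uncertainty, 14 * 14, 3, 0.75, rng)
print(points.shape)  # (196, 2)
```

With num_points = 196, k = 3 and beta = 0.75, the sketch draws 588 candidates, keeps the 147 most uncertain, and adds 49 purely random points. A larger k or beta concentrates the samples more tightly around the uncertain region.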

from point_rend.roi_heads import calculate_uncertainty
from point_rend.point_features import get_uncertain_point_coords_with_randomness

# Change number of points to select
num_points = 14 * 14
# Change randomness parameters
oversample_ratio = 3  # `k` in the paper
importance_sample_ratio = 0.75  # `\beta` in the paper

with torch.no_grad():
    # We take predicted classes, whereas during real training ground truth classes are used.
    pred_classes = torch.cat([x.pred_classes for x in detected_instances])

    # Select points given a coarse prediction mask
    point_coords = get_uncertain_point_coords_with_randomness(
        mask_coarse_logits,
        lambda logits: calculate_uncertainty(logits, pred_classes),
        num_points=num_points,
        oversample_ratio=oversample_ratio,
        importance_sample_ratio=importance_sample_ratio)

    H, W = mask_coarse_logits.shape[-2:]
    plot_mask(
        mask_coarse_logits[instance_idx, category_idx].to("cpu"),
        title="Sampled points over the coarse prediction",
        point_coords=(
            W * point_coords[instance_idx, :, 0].to("cpu") - 0.5,
            H * point_coords[instance_idx, :, 1].to("cpu") - 0.5),
        point_marker_size=50
    )

Example output:

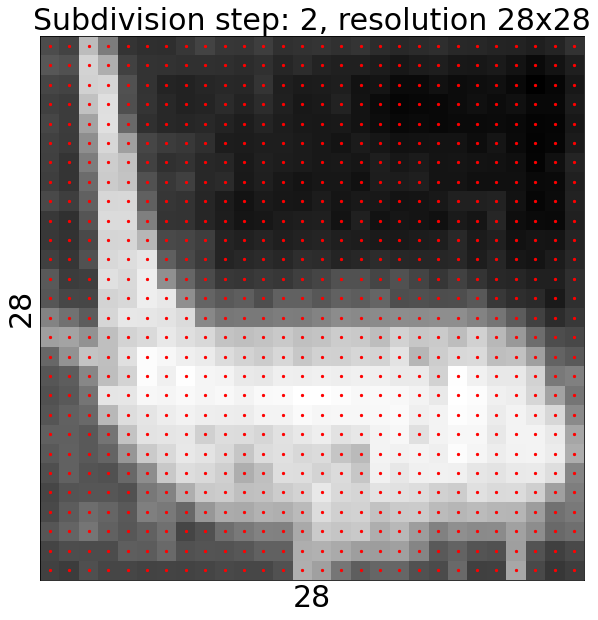

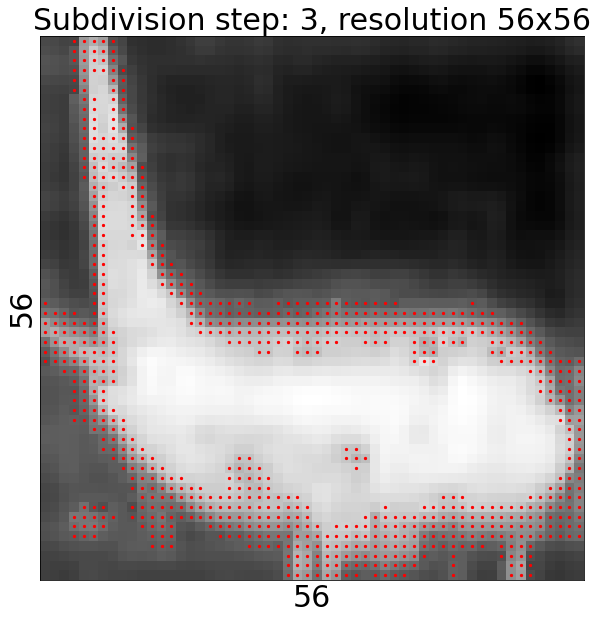

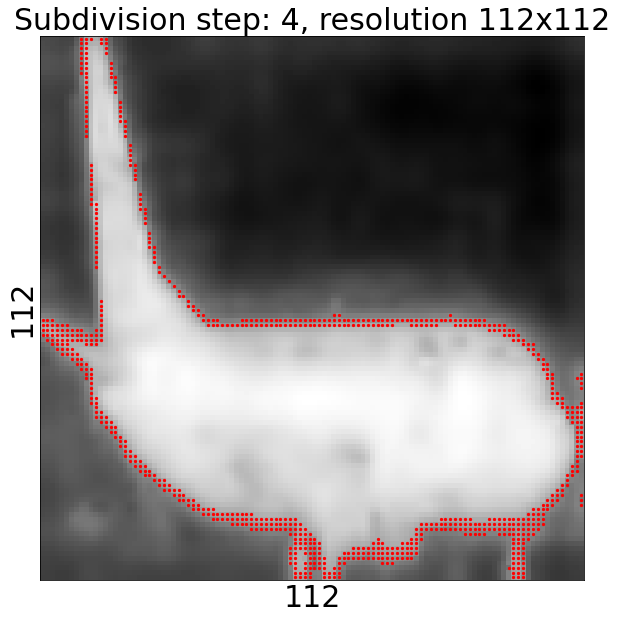
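The plotting call above converts normalized point coordinates to pixel units with `W * x - 0.5` and `H * y - 0.5`. A small pure-Python sketch of why that works, assuming the convention used by `get_uncertain_point_coords_on_grid` later in this post, where the centre of pixel i on an axis of size W sits at (i + 0.5) / W. The two helper names are hypothetical.

```python
def pixel_center_to_normalized(index, size):
    """Normalized [0, 1] coordinate of the centre of pixel `index`."""
    return (index + 0.5) / size

def normalized_to_pixel(coord, size):
    """Inverse mapping, as used when plotting points over the mask."""
    return coord * size - 0.5

W = 7
for i in range(W):
    x = pixel_center_to_normalized(i, W)
    # Round trip: every pixel centre maps back to its own index.
    assert abs(normalized_to_pixel(x, W) - i) < 1e-9

print(normalized_to_pixel(0.5, W))  # 3.0, the middle pixel of a 7-wide mask
```

The same half-pixel offset explains the axis limits `-0.5` and `W - 0.5` in `plot_mask`: they are the outer edges of the first and last pixels.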

2.2. Inference stage - Point Sampling

Starting from the 7x7 coarse prediction, the mask is bilinearly upsampled num_subdivision_steps times.

At each upsampling step, the num_subdivision_points most uncertain points are found, and the PointRend head network makes predictions for them.
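The selection of the most uncertain grid points can be sketched in NumPy as follows. This is a simplified single-mask version modelled on `get_uncertain_point_coords_on_grid` from the listing later in this post; the function name `uncertain_points_on_grid` and the 2x4 uncertainty map are made up for illustration.

```python
import numpy as np

def uncertain_points_on_grid(uncertainty_map, num_points):
    """Return flat indices and normalized (x, y) coords of the most uncertain cells."""
    H, W = uncertainty_map.shape
    num_points = min(H * W, num_points)
    flat = uncertainty_map.reshape(-1)
    # Highest uncertainty first.
    indices = np.argsort(-flat, kind="stable")[:num_points]
    # Cell centres in [0, 1] x [0, 1]: column gives x, row gives y.
    xs = (indices % W + 0.5) / W
    ys = (indices // W + 0.5) / H
    return indices, np.stack([xs, ys], axis=1)

# Hypothetical 2x4 uncertainty map (higher means more uncertain).
umap = np.array([[-3.0, -0.1, -2.0, -5.0],
                 [-0.5, -4.0, -0.2, -1.0]])
idx, coords = uncertain_points_on_grid(umap, 3)
print(idx)     # [1 6 4]
print(coords)  # rows: (0.375, 0.25), (0.625, 0.75), (0.125, 0.75)
```

The flat indices say where to write the refined predictions back into the upsampled mask, while the normalized coordinates say where to sample features for the point head.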

Change the num_subdivision_steps and num_subdivision_points parameters to see how the inference results below change.
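To get a feel for these two parameters before running the model, the resolution schedule can be worked out with plain arithmetic. The sketch below mirrors the skip condition used in the inference loop of this post (a step is skipped when the point budget already covers the whole grid of the following step, unless it is the last step); the function name `subdivision_schedule` is hypothetical.

```python
def subdivision_schedule(coarse_side, num_steps, num_points):
    """List (step, side, points_refined) per upsampling step; None means skipped."""
    schedule = []
    side = coarse_side
    for step in range(num_steps):
        side *= 2  # each step doubles the mask resolution
        is_last = step == num_steps - 1
        if num_points >= 4 * side * side and not is_last:
            schedule.append((step + 1, side, None))
            continue
        schedule.append((step + 1, side, min(side * side, num_points)))
    return schedule

for row in subdivision_schedule(coarse_side=7, num_steps=5, num_points=28 * 28):
    print(row)
# (1, 14, None)
# (2, 28, 784)
# (3, 56, 784)
# (4, 112, 784)
# (5, 224, 784)
```

With the values used here, the 14x14 step is skipped, every point of the 28x28 grid is refined, and from 56x56 onward only 784 of the grid points are re-predicted, ending at a 224x224 mask.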

from detectron2.layers import interpolate
from point_rend.roi_heads import calculate_uncertainty
from point_rend.point_features import (
    get_uncertain_point_coords_on_grid,
    point_sample,
    point_sample_fine_grained_features,
)

num_subdivision_steps = 5
num_subdivision_points = 28 * 28

with torch.no_grad():
    plot_mask(
        mask_coarse_logits[instance_idx, category_idx].to("cpu").numpy(),
        title="Coarse prediction"
    )

    mask_logits = mask_coarse_logits
    for subdivions_step in range(num_subdivision_steps):
        # Upsample mask prediction
        mask_logits = interpolate(
            mask_logits, scale_factor=2, mode="bilinear", align_corners=False
        )
        # If `num_subdivision_points` is larger than or equal to the
        # resolution of the next step, then we can skip this step
        H, W = mask_logits.shape[-2:]
        if (num_subdivision_points >= 4 * H * W
                and subdivions_step < num_subdivision_steps - 1):
            continue
        # Calculate uncertainty for all points on the upsampled regular grid
        uncertainty_map = calculate_uncertainty(mask_logits, pred_classes)
        # Select the `num_subdivision_points` most uncertain points
        point_indices, point_coords = get_uncertain_point_coords_on_grid(
            uncertainty_map,
            num_subdivision_points)

        # Extract fine-grained and coarse features for the points
        fine_grained_features, _ = point_sample_fine_grained_features(
            mask_features_list, features_scales, pred_boxes, point_coords
        )
        coarse_features = point_sample(mask_coarse_logits, point_coords, align_corners=False)

        # Run PointRend head for these points
        point_logits = model.roi_heads.mask_point_head(fine_grained_features, coarse_features)

        # Put mask point predictions in the right places on the upsampled grid.
        R, C, H, W = mask_logits.shape
        x = (point_indices[instance_idx] % W).to("cpu")
        y = (point_indices[instance_idx] // W).to("cpu")
        point_indices = point_indices.unsqueeze(1).expand(-1, C, -1)
        mask_logits = (mask_logits.reshape(R, C, H * W)
                       .scatter_(2, point_indices, point_logits)
                       .view(R, C, H, W))
        plot_mask(
            mask_logits[instance_idx, category_idx].to("cpu"),
            title="Subdivision step: {}".format(subdivions_step + 1),
            point_coords=(x, y)
        )

Visualize the masks predicted above.

from detectron2.modeling import GeneralizedRCNN
from detectron2.modeling.roi_heads.mask_head import mask_rcnn_inference

results = detected_instances
mask_rcnn_inference(mask_logits, results)
results = GeneralizedRCNN._postprocess(results, batched_inputs, images.image_sizes)[0]

# We can use `Visualizer` to draw the predictions on the image.
v = Visualizer(im_transformed[:, :, ::-1], coco_metadata)
v = v.draw_instance_predictions(results["instances"].to("cpu"))
cv2_imshow(v.get_image()[:, :, ::-1])

3. PointRend code

point_features.py:

import torch
from torch.nn import functional as F

from detectron2.layers import cat
from detectron2.structures import Boxes

"""
Shape shorthand in this module:

    N: minibatch dimension size, i.e. the number of RoIs for instance segmentation or the
        number of images for semantic segmentation.
    R: number of ROIs, combined over all images, in the minibatch
    P: number of points
"""

def point_sample(input, point_coords, **kwargs):
    """
    A wrapper around :function:`torch.nn.functional.grid_sample` to support 3D point_coords tensors.
    Unlike :function:`torch.nn.functional.grid_sample` it assumes `point_coords` to lie inside
    [0, 1] x [0, 1] square.

    Args:
        input (Tensor): A tensor of shape (N, C, H, W) that contains features map on a H x W grid.
        point_coords (Tensor): A tensor of shape (N, P, 2) or (N, Hgrid, Wgrid, 2) that contains
            [0, 1] x [0, 1] normalized point coordinates.

    Returns:
        output (Tensor): A tensor of shape (N, C, P) or (N, C, Hgrid, Wgrid) that contains
            features for points in `point_coords`. The features are obtained via bilinear
            interpolation from `input` the same way as :function:`torch.nn.functional.grid_sample`.
    """
    add_dim = False
    if point_coords.dim() == 3:
        add_dim = True
        point_coords = point_coords.unsqueeze(2)
    output = F.grid_sample(input, 2.0 * point_coords - 1.0, **kwargs)
    if add_dim:
        output = output.squeeze(3)
    return output

def generate_regular_grid_point_coords(R, side_size, device):
    """
    Generate regular square grid of points in [0, 1] x [0, 1] coordinate space.

    Args:
        R (int): The number of grids to sample, one for each region.
        side_size (int): The side size of the regular grid.
        device (torch.device): Desired device of returned tensor.

    Returns:
        (Tensor): A tensor of shape (R, side_size^2, 2) that contains coordinates
            for the regular grids.
    """
    aff = torch.tensor([[[0.5, 0, 0.5], [0, 0.5, 0.5]]], device=device)
    r = F.affine_grid(aff, torch.Size((1, 1, side_size, side_size)), align_corners=False)
    return r.view(1, -1, 2).expand(R, -1, -1)

def get_uncertain_point_coords_with_randomness(
    coarse_logits, uncertainty_func, num_points, oversample_ratio, importance_sample_ratio
):
    """
    Sample points in [0, 1] x [0, 1] coordinate space based on their uncertainty. The uncertainties
        are calculated for each point using 'uncertainty_func' function that takes point's logit
        prediction as input.
    See PointRend paper for details.

    Args:
        coarse_logits (Tensor): A tensor of shape (N, C, Hmask, Wmask) or (N, 1, Hmask, Wmask) for
            class-specific or class-agnostic prediction.
        uncertainty_func: A function that takes a Tensor of shape (N, C, P) or (N, 1, P) that
            contains logit predictions for P points and returns their uncertainties as a Tensor of
            shape (N, 1, P).
        num_points (int): The number of points P to sample.
        oversample_ratio (int): Oversampling parameter.
        importance_sample_ratio (float): Ratio of points that are sampled via importance sampling.

    Returns:
        point_coords (Tensor): A tensor of shape (N, P, 2) that contains the coordinates of P
            sampled points.
    """
    assert oversample_ratio >= 1
    assert importance_sample_ratio <= 1 and importance_sample_ratio >= 0
    num_boxes = coarse_logits.shape[0]
    num_sampled = int(num_points * oversample_ratio)
    point_coords = torch.rand(num_boxes, num_sampled, 2, device=coarse_logits.device)
    point_logits = point_sample(coarse_logits, point_coords, align_corners=False)
    # It is crucial to calculate uncertainty based on the sampled prediction value for the points.
    # Calculating uncertainties of the coarse predictions first and sampling them for points leads
    # to incorrect results.
    # To illustrate this: assume uncertainty_func(logits)=-abs(logits), a sampled point between
    # two coarse predictions with -1 and 1 logits has 0 logits, and therefore 0 uncertainty value.
    # However, if we calculate uncertainties for the coarse predictions first,
    # both will have -1 uncertainty, and the sampled point will get -1 uncertainty.
    point_uncertainties = uncertainty_func(point_logits)
    num_uncertain_points = int(importance_sample_ratio * num_points)
    num_random_points = num_points - num_uncertain_points
    idx = torch.topk(point_uncertainties[:, 0, :], k=num_uncertain_points, dim=1)[1]
    shift = num_sampled * torch.arange(num_boxes, dtype=torch.long, device=coarse_logits.device)
    idx += shift[:, None]
    point_coords = point_coords.view(-1, 2)[idx.view(-1), :].view(
        num_boxes, num_uncertain_points, 2
    )
    if num_random_points > 0:
        point_coords = cat(
            [
                point_coords,
                torch.rand(num_boxes, num_random_points, 2, device=coarse_logits.device),
            ],
            dim=1,
        )
    return point_coords

def get_uncertain_point_coords_on_grid(uncertainty_map, num_points):
    """
    Find `num_points` most uncertain points from `uncertainty_map` grid.

    Args:
        uncertainty_map (Tensor): A tensor of shape (N, 1, H, W) that contains uncertainty
            values for a set of points on a regular H x W grid.
        num_points (int): The number of points P to select.

    Returns:
        point_indices (Tensor): A tensor of shape (N, P) that contains indices from
            [0, H x W) of the most uncertain points.
        point_coords (Tensor): A tensor of shape (N, P, 2) that contains [0, 1] x [0, 1] normalized
            coordinates of the most uncertain points from the H x W grid.
    """
    R, _, H, W = uncertainty_map.shape
    h_step = 1.0 / float(H)
    w_step = 1.0 / float(W)

    num_points = min(H * W, num_points)
    point_indices = torch.topk(uncertainty_map.view(R, H * W), k=num_points, dim=1)[1]
    point_coords = torch.zeros(R, num_points, 2, dtype=torch.float, device=uncertainty_map.device)
    point_coords[:, :, 0] = w_step / 2.0 + (point_indices % W).to(torch.float) * w_step
    point_coords[:, :, 1] = h_step / 2.0 + (point_indices // W).to(torch.float) * h_step
    return point_indices, point_coords

def point_sample_fine_grained_features(features_list, feature_scales, boxes, point_coords):
    """
    Get features from feature maps in `features_list` that correspond to specific point coordinates
        inside each bounding box from `boxes`.

    Args:
        features_list (list[Tensor]): A list of feature map tensors to get features from.
        feature_scales (list[float]): A list of scales for tensors in `features_list`.
        boxes (list[Boxes]): A list of I Boxes objects that contain R_1 + ... + R_I = R boxes all
            together.
        point_coords (Tensor): A tensor of shape (R, P, 2) that contains
            [0, 1] x [0, 1] box-normalized coordinates of the P sampled points.

    Returns:
        point_features (Tensor): A tensor of shape (R, C, P) that contains features sampled
            from all features maps in feature_list for P sampled points for all R boxes in `boxes`.
        point_coords_wrt_image (Tensor): A tensor of shape (R, P, 2) that contains image-level
            coordinates of P points.
    """
    cat_boxes = Boxes.cat(boxes)
    num_boxes = [len(b) for b in boxes]

    point_coords_wrt_image = get_point_coords_wrt_image(cat_boxes.tensor, point_coords)
    split_point_coords_wrt_image = torch.split(point_coords_wrt_image, num_boxes)

    point_features = []
    for idx_img, point_coords_wrt_image_per_image in enumerate(split_point_coords_wrt_image):
        point_features_per_image = []
        for idx_feature, feature_map in enumerate(features_list):
            h, w = feature_map.shape[-2:]
            scale = torch.tensor([w, h], device=feature_map.device) / feature_scales[idx_feature]
            point_coords_scaled = point_coords_wrt_image_per_image / scale
            point_features_per_image.append(
                point_sample(
                    feature_map[idx_img].unsqueeze(0),
                    point_coords_scaled.unsqueeze(0),
                    align_corners=False,
                )
                .squeeze(0)
                .transpose(1, 0)
            )
        point_features.append(cat(point_features_per_image, dim=1))

    return cat(point_features, dim=0), point_coords_wrt_image

def get_point_coords_wrt_image(boxes_coords, point_coords):
    """
    Convert box-normalized [0, 1] x [0, 1] point coordinates to image-level coordinates.

    Args:
        boxes_coords (Tensor): A tensor of shape (R, 4) that contains bounding boxes
            coordinates.
        point_coords (Tensor): A tensor of shape (R, P, 2) that contains
            [0, 1] x [0, 1] box-normalized coordinates of the P sampled points.

    Returns:
        point_coords_wrt_image (Tensor): A tensor of shape (R, P, 2) that contains
            image-normalized coordinates of P sampled points.
    """
    with torch.no_grad():
        point_coords_wrt_image = point_coords.clone()
        point_coords_wrt_image[:, :, 0] = point_coords_wrt_image[:, :, 0] * (
            boxes_coords[:, None, 2] - boxes_coords[:, None, 0]
        )
        point_coords_wrt_image[:, :, 1] = point_coords_wrt_image[:, :, 1] * (
            boxes_coords[:, None, 3] - boxes_coords[:, None, 1]
        )
        point_coords_wrt_image[:, :, 0] += boxes_coords[:, None, 0]
        point_coords_wrt_image[:, :, 1] += boxes_coords[:, None, 1]
    return point_coords_wrt_image

roi_heads.py:

import numpy as np
import torch

from detectron2.layers import ShapeSpec, cat, interpolate
from detectron2.modeling import ROI_HEADS_REGISTRY, StandardROIHeads
from detectron2.modeling.roi_heads.mask_head import (
    build_mask_head,
    mask_rcnn_inference,
    mask_rcnn_loss,
)
from detectron2.modeling.roi_heads.roi_heads import select_foreground_proposals

from .point_features import (
    generate_regular_grid_point_coords,
    get_uncertain_point_coords_on_grid,
    get_uncertain_point_coords_with_randomness,
    point_sample,
    point_sample_fine_grained_features,
)
from .point_head import build_point_head, roi_mask_point_loss

def calculate_uncertainty(logits, classes):
    """
    We estimate uncertainty as L1 distance between 0.0 and the logit prediction in 'logits' for the
        foreground class in `classes`.

    Args:
        logits (Tensor): A tensor of shape (R, C, ...) or (R, 1, ...) for class-specific or
            class-agnostic, where R is the total number of predicted masks in all images and C is
            the number of foreground classes. The values are logits.
        classes (list): A list of length R that contains either predicted or ground truth class
            for each predicted mask.

    Returns:
        scores (Tensor): A tensor of shape (R, 1, ...) that contains uncertainty scores with
            the most uncertain locations having the highest uncertainty score.
    """
    if logits.shape[1] == 1:
        gt_class_logits = logits.clone()
    else:
        gt_class_logits = logits[
            torch.arange(logits.shape[0], device=logits.device), classes
        ].unsqueeze(1)
    return -(torch.abs(gt_class_logits))

@ROI_HEADS_REGISTRY.register()
class PointRendROIHeads(StandardROIHeads):
    """
    The RoI heads class for PointRend instance segmentation models.

    In this class we redefine the mask head of `StandardROIHeads` leaving all other heads intact.
    To avoid namespace conflict with other heads we use names starting from `mask_` for all
    variables that correspond to the mask head in the class's namespace.
    """

    def __init__(self, cfg, input_shape):
        # TODO use explicit args style
        super().__init__(cfg, input_shape)
        self._init_mask_head(cfg, input_shape)

    def _init_mask_head(self, cfg, input_shape):
        # fmt: off
        self.mask_on = cfg.MODEL.MASK_ON
        if not self.mask_on:
            return
        self.mask_coarse_in_features = cfg.MODEL.ROI_MASK_HEAD.IN_FEATURES
        self.mask_coarse_side_size = cfg.MODEL.ROI_MASK_HEAD.POOLER_RESOLUTION
        self._feature_scales = {k: 1.0 / v.stride for k, v in input_shape.items()}
        # fmt: on

        in_channels = np.sum([input_shape[f].channels for f in self.mask_coarse_in_features])
        self.mask_coarse_head = build_mask_head(
            cfg,
            ShapeSpec(
                channels=in_channels,
                width=self.mask_coarse_side_size,
                height=self.mask_coarse_side_size,
            ),
        )
        self._init_point_head(cfg, input_shape)

    def _init_point_head(self, cfg, input_shape):
        # fmt: off
        self.mask_point_on = cfg.MODEL.ROI_MASK_HEAD.POINT_HEAD_ON
        if not self.mask_point_on:
            return
        assert cfg.MODEL.ROI_HEADS.NUM_CLASSES == cfg.MODEL.POINT_HEAD.NUM_CLASSES
        self.mask_point_in_features = cfg.MODEL.POINT_HEAD.IN_FEATURES
        self.mask_point_train_num_points = cfg.MODEL.POINT_HEAD.TRAIN_NUM_POINTS
        self.mask_point_oversample_ratio = cfg.MODEL.POINT_HEAD.OVERSAMPLE_RATIO
        self.mask_point_importance_sample_ratio = cfg.MODEL.POINT_HEAD.IMPORTANCE_SAMPLE_RATIO
        # next two parameters are used in the adaptive subdivision inference procedure
        self.mask_point_subdivision_steps = cfg.MODEL.POINT_HEAD.SUBDIVISION_STEPS
        self.mask_point_subdivision_num_points = cfg.MODEL.POINT_HEAD.SUBDIVISION_NUM_POINTS
        # fmt: on

        in_channels = np.sum([input_shape[f].channels for f in self.mask_point_in_features])
        self.mask_point_head = build_point_head(
            cfg, ShapeSpec(channels=in_channels, width=1, height=1)
        )

    def _forward_mask(self, features, instances):
        """
        Forward logic of the mask prediction branch.

        Args:
            features (dict[str, Tensor]): #level input features for mask prediction
            instances (list[Instances]): the per-image instances to train/predict masks.
                In training, they can be the proposals.
                In inference, they can be the predicted boxes.

        Returns:
            In training, a dict of losses.
            In inference, update `instances` with new fields "pred_masks" and return it.
        """
        if not self.mask_on:
            return {} if self.training else instances

        if self.training:
            proposals, _ = select_foreground_proposals(instances, self.num_classes)
            proposal_boxes = [x.proposal_boxes for x in proposals]
            mask_coarse_logits = self._forward_mask_coarse(features, proposal_boxes)

            losses = {"loss_mask": mask_rcnn_loss(mask_coarse_logits, proposals)}
            losses.update(self._forward_mask_point(features, mask_coarse_logits, proposals))
            return losses
        else:
            pred_boxes = [x.pred_boxes for x in instances]
            mask_coarse_logits = self._forward_mask_coarse(features, pred_boxes)

            mask_logits = self._forward_mask_point(features, mask_coarse_logits, instances)
            mask_rcnn_inference(mask_logits, instances)
            return instances

    def _forward_mask_coarse(self, features, boxes):
        """
        Forward logic of the coarse mask head.
        """
        point_coords = generate_regular_grid_point_coords(
            np.sum(len(x) for x in boxes), self.mask_coarse_side_size, boxes[0].device
        )
        mask_coarse_features_list = [features[k] for k in self.mask_coarse_in_features]
        features_scales = [self._feature_scales[k] for k in self.mask_coarse_in_features]
        # For regular grids of points, this function is equivalent to `len(features_list)' calls
        # of `ROIAlign` (with `SAMPLING_RATIO=2`), and concat the results.
        mask_features, _ = point_sample_fine_grained_features(
            mask_coarse_features_list, features_scales, boxes, point_coords
        )
        return self.mask_coarse_head(mask_features)

    def _forward_mask_point(self, features, mask_coarse_logits, instances):
        """
        Forward logic of the mask point head.
        """
        if not self.mask_point_on:
            return {} if self.training else mask_coarse_logits

        mask_features_list = [features[k] for k in self.mask_point_in_features]
        features_scales = [self._feature_scales[k] for k in self.mask_point_in_features]

        if self.training:
            proposal_boxes = [x.proposal_boxes for x in instances]
            gt_classes = cat([x.gt_classes for x in instances])
            with torch.no_grad():
                point_coords = get_uncertain_point_coords_with_randomness(
                    mask_coarse_logits,
                    lambda logits: calculate_uncertainty(logits, gt_classes),
                    self.mask_point_train_num_points,
                    self.mask_point_oversample_ratio,
                    self.mask_point_importance_sample_ratio,
                )

            fine_grained_features, point_coords_wrt_image = point_sample_fine_grained_features(
                mask_features_list, features_scales, proposal_boxes, point_coords
            )
            coarse_features = point_sample(mask_coarse_logits, point_coords, align_corners=False)
            point_logits = self.mask_point_head(fine_grained_features, coarse_features)
            return {
                "loss_mask_point": roi_mask_point_loss(
                    point_logits, instances, point_coords_wrt_image
                )
            }
        else:
            pred_boxes = [x.pred_boxes for x in instances]
            pred_classes = cat([x.pred_classes for x in instances])
            # The subdivision code will fail with the empty list of boxes
            if len(pred_classes) == 0:
                return mask_coarse_logits

            mask_logits = mask_coarse_logits.clone()
            for subdivions_step in range(self.mask_point_subdivision_steps):
                mask_logits = interpolate(
                    mask_logits, scale_factor=2, mode="bilinear", align_corners=False
                )
                # If `mask_point_subdivision_num_points` is larger than or equal to the
                # resolution of the next step, then we can skip this step
                H, W = mask_logits.shape[-2:]
                if (
                    self.mask_point_subdivision_num_points >= 4 * H * W
                    and subdivions_step < self.mask_point_subdivision_steps - 1
                ):
                    continue
                uncertainty_map = calculate_uncertainty(mask_logits, pred_classes)
                point_indices, point_coords = get_uncertain_point_coords_on_grid(
                    uncertainty_map, self.mask_point_subdivision_num_points
                )
                fine_grained_features, _ = point_sample_fine_grained_features(
                    mask_features_list, features_scales, pred_boxes, point_coords
                )
                coarse_features = point_sample(
                    mask_coarse_logits, point_coords, align_corners=False
                )
                point_logits = self.mask_point_head(fine_grained_features, coarse_features)

                # Put mask point predictions in the right places on the upsampled grid.
                R, C, H, W = mask_logits.shape
                point_indices = point_indices.unsqueeze(1).expand(-1, C, -1)
                mask_logits = (
                    mask_logits.reshape(R, C, H * W)
                    .scatter_(2, point_indices, point_logits)
                    .view(R, C, H, W)
                )
            return mask_logits

4. PointRend config

For example, rcnn_R_50_FPN.yaml:

MODEL:
  META_ARCHITECTURE: "GeneralizedRCNN"
  BACKBONE:
    NAME: "build_resnet_fpn_backbone"
  RESNETS:
    OUT_FEATURES: ["res2", "res3", "res4", "res5"]
  FPN:
    IN_FEATURES: ["res2", "res3", "res4", "res5"]
  ANCHOR_GENERATOR:
    SIZES: [[32], [64], [128], [256], [512]]  # One size for each in feature map
    ASPECT_RATIOS: [[0.5, 1.0, 2.0]]  # Three aspect ratios (same for all in feature maps)
  RPN:
    IN_FEATURES: ["p2", "p3", "p4", "p5", "p6"]
    PRE_NMS_TOPK_TRAIN: 2000  # Per FPN level
    PRE_NMS_TOPK_TEST: 1000  # Per FPN level
    # Detectron1 uses 2000 proposals per-batch,
    # (See "modeling/rpn/rpn_outputs.py" for details of this legacy issue)
    # which is approximately 1000 proposals per-image since the default batch size for FPN is 2.
    POST_NMS_TOPK_TRAIN: 1000
    POST_NMS_TOPK_TEST: 1000
  ROI_HEADS:
    NAME: "PointRendROIHeads"
    IN_FEATURES: ["p2", "p3", "p4", "p5"]
  ROI_BOX_HEAD:
    NAME: "FastRCNNConvFCHead"
    NUM_FC: 2
    POOLER_RESOLUTION: 7
    TRAIN_ON_PRED_BOXES: True
  ROI_MASK_HEAD:
    NAME: "CoarseMaskHead"
    FC_DIM: 1024
    NUM_FC: 2
    OUTPUT_SIDE_RESOLUTION: 7
    IN_FEATURES: ["p2"]
    POINT_HEAD_ON: True
  POINT_HEAD:
    FC_DIM: 256
    NUM_FC: 3
    IN_FEATURES: ["p2"]
DATASETS:
  TRAIN: ("coco_2017_train",)
  TEST: ("coco_2017_val",)
SOLVER:
  IMS_PER_BATCH: 16
  BASE_LR: 0.02
  STEPS: (60000, 80000)
  MAX_ITER: 90000
INPUT:
  # PointRend for instance segmentation does not work with "polygon" mask_format.
  MASK_FORMAT: "bitmask"
  MIN_SIZE_TRAIN: (640, 672, 704, 736, 768, 800)
VERSION: 2

12 comments

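Can detectron2's PointRend be trained on the COCO dataset?

Yes. PointRend only changes the mask processing on the head side.

May I ask which version of detectron2 the author downloaded?

Tag v0.3

Author, I found that the PointRend pretrained model for the official tag v0.3 can no longer be downloaded ⌇●﹏●⌇ Do you have a saved copy? I am willing to pay for it. Please help.

I don't think I have one. Is the latest one no longer available?

I'm not sure whether it is a version difference, but with the latest model there is no error and also no visible effect.

Is the loss behaving normally? What about the training accuracy?

I didn't understand how the visualization works; I'm new to this.

Which part specifically?

I can't understand feature_map[idx_img].unsqueeze(0).

Try tracing the code before and after it, for example the input that the point_sample function expects.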