detectron2 自定义数据集的训练.

以气球分割数据集(ballon segmentation dataset) 为例,介绍 detectron2 模型在定制数据集上的训练. 该数据集仅有一个类别:气球.

1. 数据准备

[1] - 下载数据集:

wget https://github.com/matterport/Mask_RCNN/releases/download/v2.1/balloon_dataset.zip unzip balloon_dataset.zip

2. 数据集注册

根据 detectron2 custom dataset tutorial 中的说明,需要先注册 ballon 数据集到 detectron2.

主要包括两步:

[1] - 注册数据集(Register datasets),告诉 detectron2 如何获得数据集.

[2] - 注册数据集的元数据(Register metadata,可选).

2.1. COCO 格式数据

detectron2.data.datasets.load_coco_json(json_file, image_root, dataset_name=None, extra_annotation_keys=None)

from detectron2.data.datasets import register_coco_instances

register_coco_instances("my_dataset_train", {}, "json_annotation_train.json", "path/to/image/dir")

register_coco_instances("my_dataset_val", {}, "json_annotation_val.json", "path/to/image/dir")2.2. 非 COCO 格式数据

2.2.1. Register datasets

注册数据集,需要定义一个将数据集加载进 detectron2 标准格式的函数,并将该函数注册到 detectron2 DatasetCatalog. 即:

def my_dataset_function():

'''

something

'''

return list[dict]

from detectron2.data import DatasetCatalog

DatasetCatalog.register("my_dataset", my_dataset_function)其中,dict 即为一张图像及对应的标注,根据 Standard Dataset Dicts 中的说明,主要包含如下字段:

图片信息字段:

file_name:图片所在的绝对路径height,width:图片的长和宽image_id(str or int):图片唯一标识idannotations(list[dict]):每个dict就是一个instance的标注信息,如box和mask

instance 信息字段:

bbox(list[float]):物体标注框,4个浮点数,xyxy 或 xywh次序bbox_mode(int):bbox的格式,BoxMode.XYXY_ABS或者BoxMode.XYWH_ABScategory_id(int): 类别标签,[0, num_categories-1]范围内的整数,detectron2会把num_categories设置为背景类(background).segmentation(list[list[float]] or dict): instance 分割的标注信息.

[1] - 如果为 list[list[float]],则表示 polygons 列表,其中每个多边形表示一个目标物体的连通区域(connected commonent). 每个 list[float] 是一个 [x1, y1, ..., xn, yn] 格式的多边形. xs 和 ys 表示以像素为单位的绝对坐标.

[2] - 如果为 dict,则表示以 COCO compressed RLE 格式的朱像素的分割mask(per-pixles segmentation mask). 该 dict 需要有两个字段 size 和 counts. 采用 pycocotools.mask.encode(np.asarray(mask, order="F")) 可以将值为 0 和1 的 uint8 分割 mask 转换为该 dict 格式. 此时,如果采用默认的 dataloader,cfg.INPUT.MASK_FORMAT 必须设为 bitmask.

iscrowd0 (default) or 1. instance 标注是否为 COCO 的crowd region. 如果不知道其含义,不建议使用该字段.

2.2.2. Register metadata

每个数据集都有对应的 metadata,通过 MetadataCatalog.get(dataset_name).some_metadata 关联起来.

Metadata 是 key-value 映射,其包含了整个数据集的共享信息,往往用于解释数据集,比如,类目数量、类别颜色、文件根目录 等等. 其有助于数据增强、评估、可视化、日志等.

如果通过 DatasetCatalog.register 注册的数据集,则也可以通过 MetadataCatalog.get(dataset_name).some_key = some_value 来新增对应的 metadata.

例如:

from detectron2.data import MetadataCatalog

MetadataCatalog.get("my_dataset").thing_classes = ["person", "dog"]detectron2 的 builtin 中包含的 metadata 字段有:

thing_classes(list[str]): 用于 instance detection 和 instance segmentation 任务. 每个 instance/thing 类目的类别名列表.thing_colors(list[tuple(r, g, b)]): 预定义的每个 thing 类别的颜色,用于可视化. 如果未指定,则采用随机颜色.stuff_classes(list[str]): 用于 semantic segmentation 和 panoptic segmentation 任务. 每个 stuff 类目的类别名列表.stuff_colors(list[tuple(r, g, b)]): 预定义的每个 stuff 类别的颜色,用于可视化. 如果未指定,则采用随机颜色.

更多可参考 “Metadata” for Datasets.

2.2.3. 实例 - Ballon 数据集

比如 Ballon 数据集的原格式,

[1] - 首先,需要定义一个将数据集加载进 detectron2 标准格式的函数.

import os

import numpy as np

import json

from detectron2.structures import BoxMode

def get_balloon_dicts(img_dir):

json_file = os.path.join(img_dir, "via_region_data.json")

with open(json_file) as f:

imgs_anns = json.load(f)

dataset_dicts = []

for _, v in imgs_anns.items():

record = {}

filename = os.path.join(img_dir, v["filename"])

height, width = cv2.imread(filename).shape[:2]

record["file_name"] = filename

record["height"] = height

record["width"] = width

annos = v["regions"]

objs = []

for _, anno in annos.items():

assert not anno["region_attributes"]

anno = anno["shape_attributes"]

px = anno["all_points_x"]

py = anno["all_points_y"]

poly = [(x + 0.5, y + 0.5) for x, y in zip(px, py)]

poly = [p for x in poly for p in x]

obj = {

"bbox": [np.min(px), np.min(py), np.max(px), np.max(py)],

"bbox_mode": BoxMode.XYXY_ABS,

"segmentation": [poly],

"category_id": 0,

"iscrowd": 0

}

objs.append(obj)

record["annotations"] = objs

dataset_dicts.append(record)

return dataset_dicts[2] - 注册数据集元数据

from detectron2.data import DatasetCatalog, MetadataCatalog

for d in ["train", "val"]:

DatasetCatalog.register("balloon/" + d, lambda d=d: get_balloon_dicts("balloon/" + d))

MetadataCatalog.get("balloon/" + d).set(thing_classes=["balloon"])

#

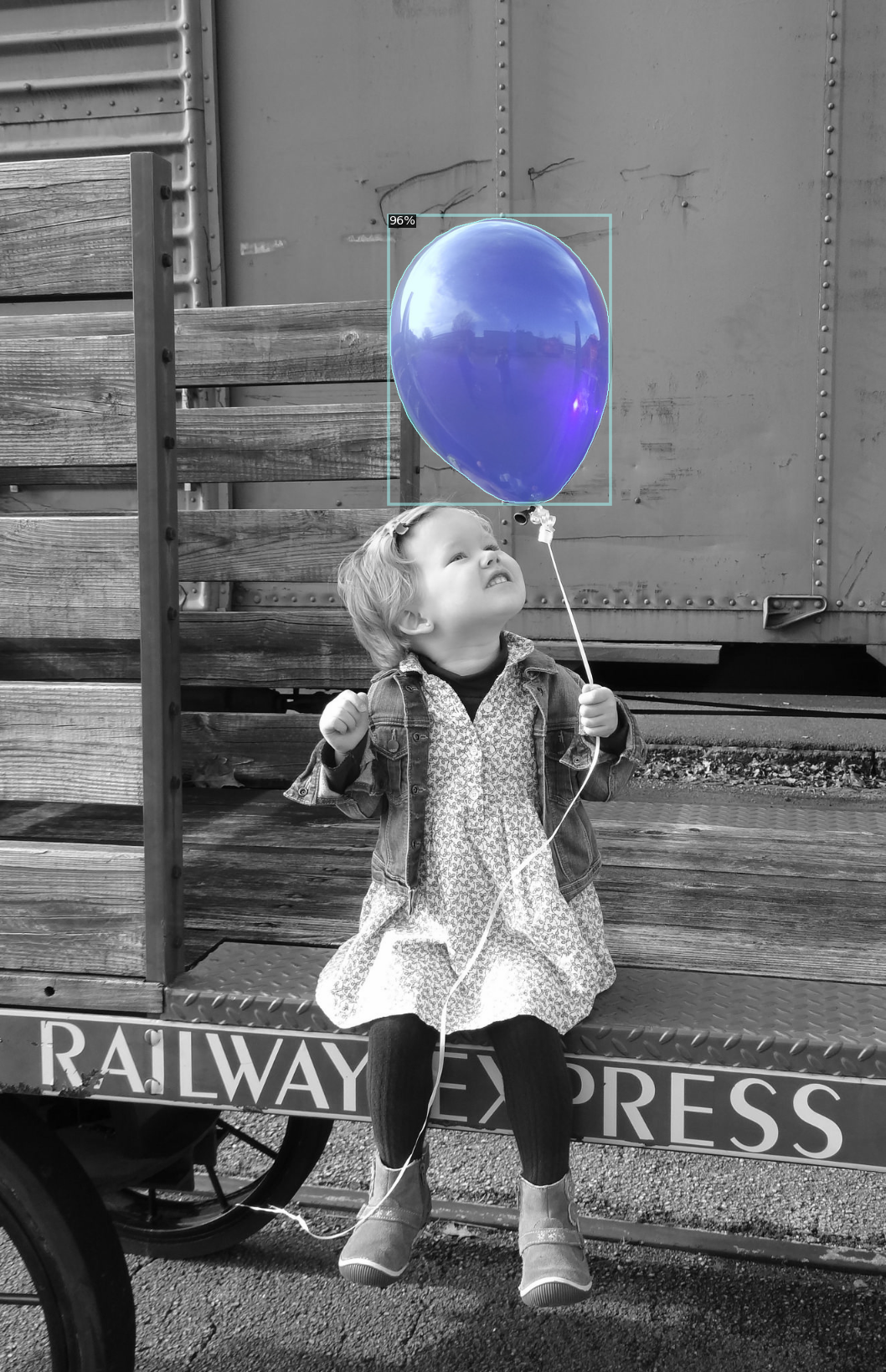

balloon_metadata = MetadataCatalog.get("balloon/train")[3] - 验证数据加载是否正确 - 随机可视化训练数据集的图片标注:

import random

dataset_dicts = get_balloon_dicts("balloon/train")

for d in random.sample(dataset_dicts, 3):

img = cv2.imread(d["file_name"])

visualizer = Visualizer(img[:, :, ::-1], metadata=balloon_metadata, scale=0.5)

vis = visualizer.draw_dataset_dict(d)

plt.imshow(vis.get_image()[:, :, ::-1])

plt.show()如图:

3. 数据集使用

当数据集注册后,即可使用 cfg.DATASETS.{TRAIN,TEST} 中的数据集名,比如 my_dataset.

此外,可能还需要修改的地方有:

MODEL.ROI_HEADS.NUM_CLASSES和MODEL.RETINANET.NUM_CLASSES, 分别为 R-CNN 和 RetinaNet 模型的 thing 类目的数量.MODEL.ROI_KEYPOINT_HEAD.NUM_KEYPOINTS为 Keypoint R-CNN 的 keypoints 数量.MODEL.SEM_SEG_HEAD.NUM_CLASSES为 Semantic FPN 和 Panoptic FPN 的 stuff 类目的数量.

更多可参考 Update the Config for New Datasets.

采用 detectron2 的默认 dataloader 来进行数据加载.

detectron2.data 模块中包含了build_detection_train_loader和build_detection_test_loader两个方法来分别加载训练和测试数据集.

如,训练数据的 dataloader:

from detectron2.engine import DefaultTrainer

from detectron2.config import get_cfg

from detectron2.data import build_detection_train_loader

cfg = get_cfg()

# 这里用COCO数据集的mask rcnn R50_FPN模型参数

cfg.merge_from_file(model_zoo.get_config_file("COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x.yaml"))

cfg.DATASETS.TRAIN = ("balloon_train",)

train_loader = build_detection_train_loader(cfg, mapper=None)4. 模型训练

采用 COCO 预训练的 R50-FPN Mask R-CNN 模型进行微调. 在 Colab K80 GPU 上大约 6 分钟训练 300 次迭代.

from detectron2.engine import DefaultTrainer

from detectron2.config import get_cfg

cfg = get_cfg()

cfg.merge_from_file("./detectron2_repo/configs/COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x.yaml")

cfg.DATASETS.TRAIN = ("balloon/train",)

cfg.DATASETS.TEST = () # 没有测试集

cfg.DATALOADER.NUM_WORKERS = 2

cfg.MODEL.WEIGHTS = "detectron2://COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x/137849600/model_final_f10217.pkl" # 模型微调

cfg.SOLVER.IMS_PER_BATCH = 2

cfg.SOLVER.BASE_LR = 0.00025

cfg.SOLVER.MAX_ITER = 300

cfg.MODEL.ROI_HEADS.BATCH_SIZE_PER_IMAGE = 128 # faster, and good enough for this toy dataset

cfg.MODEL.ROI_HEADS.NUM_CLASSES = 1 #仅有一类(ballon)

os.makedirs(cfg.OUTPUT_DIR, exist_ok=True)

trainer = DefaultTrainer(cfg)

trainer.resume_or_load(resume=False)

trainer.train()输出信息如:

WARNING [10/15 06:15:59 d2.config.compat]: Config './detectron2_repo/configs/COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x.yaml' has no VERSION. Assuming it to be compatible with latest v2. [10/15 06:16:02 d2.engine.defaults]: Model: GeneralizedRCNN( (backbone): FPN( (fpn_lateral2): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1)) (fpn_output2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)) (fpn_lateral3): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1)) (fpn_output3): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)) (fpn_lateral4): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1)) (fpn_output4): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)) (fpn_lateral5): Conv2d(2048, 256, kernel_size=(1, 1), stride=(1, 1)) (fpn_output5): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)) (top_block): LastLevelMaxPool() (bottom_up): ResNet( (stem): BasicStem( (conv1): Conv2d( 3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False (norm): FrozenBatchNorm2d(num_features=64, eps=1e-05) ) ) (res2): Sequential( (0): BottleneckBlock( (shortcut): Conv2d( 64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=256, eps=1e-05) ) (conv1): Conv2d( 64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=64, eps=1e-05) ) (conv2): Conv2d( 64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=64, eps=1e-05) ) (conv3): Conv2d( 64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=256, eps=1e-05) ) ) (1): BottleneckBlock( (conv1): Conv2d( 256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=64, eps=1e-05) ) (conv2): Conv2d( 64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=64, eps=1e-05) ) (conv3): Conv2d( 64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=256, eps=1e-05) ) ) (2): BottleneckBlock( (conv1): Conv2d( 256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=64, eps=1e-05) ) (conv2): Conv2d( 64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=64, eps=1e-05) ) (conv3): Conv2d( 64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=256, eps=1e-05) ) ) ) (res3): Sequential( (0): BottleneckBlock( (shortcut): Conv2d( 256, 512, kernel_size=(1, 1), stride=(2, 2), bias=False (norm): FrozenBatchNorm2d(num_features=512, eps=1e-05) ) (conv1): Conv2d( 256, 128, kernel_size=(1, 1), stride=(2, 2), bias=False (norm): FrozenBatchNorm2d(num_features=128, eps=1e-05) ) (conv2): Conv2d( 128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=128, eps=1e-05) ) (conv3): Conv2d( 128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=512, eps=1e-05) ) ) (1): BottleneckBlock( (conv1): Conv2d( 512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=128, eps=1e-05) ) (conv2): Conv2d( 128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=128, eps=1e-05) ) (conv3): Conv2d( 128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=512, eps=1e-05) ) ) (2): BottleneckBlock( (conv1): Conv2d( 512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=128, eps=1e-05) ) (conv2): Conv2d( 128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=128, eps=1e-05) ) (conv3): Conv2d( 128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=512, eps=1e-05) ) ) (3): BottleneckBlock( (conv1): Conv2d( 512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=128, eps=1e-05) ) (conv2): Conv2d( 128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=128, eps=1e-05) ) (conv3): Conv2d( 128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=512, eps=1e-05) ) ) ) (res4): Sequential( (0): BottleneckBlock( (shortcut): Conv2d( 512, 1024, kernel_size=(1, 1), stride=(2, 2), bias=False (norm): FrozenBatchNorm2d(num_features=1024, eps=1e-05) ) (conv1): Conv2d( 512, 256, kernel_size=(1, 1), stride=(2, 2), bias=False (norm): FrozenBatchNorm2d(num_features=256, eps=1e-05) ) (conv2): Conv2d( 256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=256, eps=1e-05) ) (conv3): Conv2d( 256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=1024, eps=1e-05) ) ) (1): BottleneckBlock( (conv1): Conv2d( 1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=256, eps=1e-05) ) (conv2): Conv2d( 256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=256, eps=1e-05) ) (conv3): Conv2d( 256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=1024, eps=1e-05) ) ) (2): BottleneckBlock( (conv1): Conv2d( 1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=256, eps=1e-05) ) (conv2): Conv2d( 256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=256, eps=1e-05) ) (conv3): Conv2d( 256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=1024, eps=1e-05) ) ) (3): BottleneckBlock( (conv1): Conv2d( 1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=256, eps=1e-05) ) (conv2): Conv2d( 256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=256, eps=1e-05) ) (conv3): Conv2d( 256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=1024, eps=1e-05) ) ) (4): BottleneckBlock( (conv1): Conv2d( 1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=256, eps=1e-05) ) (conv2): Conv2d( 256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=256, eps=1e-05) ) (conv3): Conv2d( 256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=1024, eps=1e-05) ) ) (5): BottleneckBlock( (conv1): Conv2d( 1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=256, eps=1e-05) ) (conv2): Conv2d( 256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=256, eps=1e-05) ) (conv3): Conv2d( 256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=1024, eps=1e-05) ) ) ) (res5): Sequential( (0): BottleneckBlock( (shortcut): Conv2d( 1024, 2048, kernel_size=(1, 1), stride=(2, 2), bias=False (norm): FrozenBatchNorm2d(num_features=2048, eps=1e-05) ) (conv1): Conv2d( 1024, 512, kernel_size=(1, 1), stride=(2, 2), bias=False (norm): FrozenBatchNorm2d(num_features=512, eps=1e-05) ) (conv2): Conv2d( 512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=512, eps=1e-05) ) (conv3): Conv2d( 512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=2048, eps=1e-05) ) ) (1): BottleneckBlock( (conv1): Conv2d( 2048, 512, kernel_size=(1, 1), stride=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=512, eps=1e-05) ) (conv2): Conv2d( 512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=512, eps=1e-05) ) (conv3): Conv2d( 512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=2048, eps=1e-05) ) ) (2): BottleneckBlock( (conv1): Conv2d( 2048, 512, kernel_size=(1, 1), stride=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=512, eps=1e-05) ) (conv2): Conv2d( 512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=512, eps=1e-05) ) (conv3): Conv2d( 512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False (norm): FrozenBatchNorm2d(num_features=2048, eps=1e-05) ) ) ) ) ) (proposal_generator): RPN( (anchor_generator): DefaultAnchorGenerator( (cell_anchors): BufferList() ) (rpn_head): StandardRPNHead( (conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)) (objectness_logits): Conv2d(256, 3, kernel_size=(1, 1), stride=(1, 1)) (anchor_deltas): Conv2d(256, 12, kernel_size=(1, 1), stride=(1, 1)) ) ) (roi_heads): StandardROIHeads( (box_pooler): ROIPooler( (level_poolers): ModuleList( (0): ROIAlign(output_size=(7, 7), spatial_scale=0.25, sampling_ratio=0, aligned=True) (1): ROIAlign(output_size=(7, 7), spatial_scale=0.125, sampling_ratio=0, aligned=True) (2): ROIAlign(output_size=(7, 7), spatial_scale=0.0625, sampling_ratio=0, aligned=True) (3): ROIAlign(output_size=(7, 7), spatial_scale=0.03125, sampling_ratio=0, aligned=True) ) ) (box_head): FastRCNNConvFCHead( (fc1): Linear(in_features=12544, out_features=1024, bias=True) (fc2): Linear(in_features=1024, out_features=1024, bias=True) ) (box_predictor): FastRCNNOutputLayers( (cls_score): Linear(in_features=1024, out_features=2, bias=True) (bbox_pred): Linear(in_features=1024, out_features=4, bias=True) ) (mask_pooler): ROIPooler( (level_poolers): ModuleList( (0): ROIAlign(output_size=(14, 14), spatial_scale=0.25, sampling_ratio=0, aligned=True) (1): ROIAlign(output_size=(14, 14), spatial_scale=0.125, sampling_ratio=0, aligned=True) (2): ROIAlign(output_size=(14, 14), spatial_scale=0.0625, sampling_ratio=0, aligned=True) (3): ROIAlign(output_size=(14, 14), spatial_scale=0.03125, sampling_ratio=0, aligned=True) ) ) (mask_head): MaskRCNNConvUpsampleHead( (mask_fcn1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)) (mask_fcn2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)) (mask_fcn3): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)) (mask_fcn4): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)) (deconv): ConvTranspose2d(256, 256, kernel_size=(2, 2), stride=(2, 2)) (predictor): Conv2d(256, 1, kernel_size=(1, 1), stride=(1, 1)) ) ) ) [10/15 06:16:04 d2.data.build]: Removed 0 images with no usable annotations. 61 images left. [10/15 06:16:04 d2.data.build]: Distribution of training instances among all 1 categories: | category | #instances | |:----------:|:-------------| | balloon | 255 | | | | [10/15 06:16:04 d2.data.detection_utils]: TransformGens used in training: [ResizeShortestEdge(short_edge_length=(640, 672, 704, 736, 768, 800), max_size=1333, sample_style='choice'), RandomFlip()] [10/15 06:16:04 d2.data.build]: Using training sampler TrainingSampler model_final_f10217.pkl: 178MB [00:05, 32.0MB/s] 'roi_heads.box_predictor.cls_score.weight' has shape (81, 1024) in the checkpoint but (2, 1024) in the model! Skipped. 'roi_heads.box_predictor.cls_score.bias' has shape (81,) in the checkpoint but (2,) in the model! Skipped. 'roi_heads.box_predictor.bbox_pred.weight' has shape (320, 1024) in the checkpoint but (4, 1024) in the model! Skipped. 'roi_heads.box_predictor.bbox_pred.bias' has shape (320,) in the checkpoint but (4,) in the model! Skipped. 'roi_heads.mask_head.predictor.weight' has shape (80, 256, 1, 1) in the checkpoint but (1, 256, 1, 1) in the model! Skipped. 'roi_heads.mask_head.predictor.bias' has shape (80,) in the checkpoint but (1,) in the model! Skipped. [10/15 06:16:14 d2.engine.train_loop]: Starting training from iteration 0 [10/15 06:16:40 d2.utils.events]: eta: 0:06:11 iter: 19 total_loss: 2.034 loss_cls: 0.675 loss_box_reg: 0.601 loss_mask: 0.694 loss_rpn_cls: 0.040 loss_rpn_loc: 0.008 time: 1.3195 data_time: 0.0048 lr: 0.000005 max_mem: 2548M [10/15 06:17:09 d2.utils.events]: eta: 0:06:03 iter: 39 total_loss: 1.980 loss_cls: 0.645 loss_box_reg: 0.624 loss_mask: 0.668 loss_rpn_cls: 0.020 loss_rpn_loc: 0.005 time: 1.3679 data_time: 0.0049 lr: 0.000010 max_mem: 2548M [10/15 06:17:35 d2.utils.events]: eta: 0:05:25 iter: 59 total_loss: 1.890 loss_cls: 0.570 loss_box_reg: 0.664 loss_mask: 0.612 loss_rpn_cls: 0.020 loss_rpn_loc: 0.006 time: 1.3569 data_time: 0.0042 lr: 0.000015 max_mem: 2548M [10/15 06:18:02 d2.utils.events]: eta: 0:04:58 iter: 79 total_loss: 1.692 loss_cls: 0.497 loss_box_reg: 0.642 loss_mask: 0.545 loss_rpn_cls: 0.051 loss_rpn_loc: 0.007 time: 1.3498 data_time: 0.0041 lr: 0.000020 max_mem: 2548M [10/15 06:18:30 d2.utils.events]: eta: 0:04:34 iter: 99 total_loss: 1.665 loss_cls: 0.446 loss_box_reg: 0.680 loss_mask: 0.475 loss_rpn_cls: 0.019 loss_rpn_loc: 0.007 time: 1.3566 data_time: 0.0040 lr: 0.000025 max_mem: 2548M [10/15 06:18:56 d2.utils.events]: eta: 0:04:05 iter: 119 total_loss: 1.454 loss_cls: 0.414 loss_box_reg: 0.634 loss_mask: 0.417 loss_rpn_cls: 0.037 loss_rpn_loc: 0.010 time: 1.3515 data_time: 0.0040 lr: 0.000030 max_mem: 2548M [10/15 06:19:23 d2.utils.events]: eta: 0:03:38 iter: 139 total_loss: 1.428 loss_cls: 0.369 loss_box_reg: 0.641 loss_mask: 0.382 loss_rpn_cls: 0.028 loss_rpn_loc: 0.009 time: 1.3515 data_time: 0.0044 lr: 0.000035 max_mem: 2669M [10/15 06:19:50 d2.utils.events]: eta: 0:03:10 iter: 159 total_loss: 1.295 loss_cls: 0.314 loss_box_reg: 0.606 loss_mask: 0.327 loss_rpn_cls: 0.022 loss_rpn_loc: 0.006 time: 1.3487 data_time: 0.0042 lr: 0.000040 max_mem: 2669M [10/15 06:20:18 d2.utils.events]: eta: 0:02:44 iter: 179 total_loss: 1.173 loss_cls: 0.284 loss_box_reg: 0.638 loss_mask: 0.276 loss_rpn_cls: 0.012 loss_rpn_loc: 0.005 time: 1.3549 data_time: 0.0042 lr: 0.000045 max_mem: 2669M [10/15 06:20:43 d2.utils.events]: eta: 0:02:15 iter: 199 total_loss: 1.243 loss_cls: 0.280 loss_box_reg: 0.643 loss_mask: 0.243 loss_rpn_cls: 0.017 loss_rpn_loc: 0.007 time: 1.3461 data_time: 0.0042 lr: 0.000050 max_mem: 2669M [10/15 06:21:09 d2.utils.events]: eta: 0:01:48 iter: 219 total_loss: 1.111 loss_cls: 0.226 loss_box_reg: 0.542 loss_mask: 0.223 loss_rpn_cls: 0.022 loss_rpn_loc: 0.005 time: 1.3423 data_time: 0.0044 lr: 0.000055 max_mem: 2669M [10/15 06:21:37 d2.utils.events]: eta: 0:01:21 iter: 239 total_loss: 1.112 loss_cls: 0.220 loss_box_reg: 0.623 loss_mask: 0.210 loss_rpn_cls: 0.028 loss_rpn_loc: 0.006 time: 1.3454 data_time: 0.0042 lr: 0.000060 max_mem: 2669M [10/15 06:22:04 d2.utils.events]: eta: 0:00:54 iter: 259 total_loss: 0.995 loss_cls: 0.176 loss_box_reg: 0.653 loss_mask: 0.164 loss_rpn_cls: 0.011 loss_rpn_loc: 0.008 time: 1.3461 data_time: 0.0047 lr: 0.000065 max_mem: 2669M [10/15 06:22:31 d2.utils.events]: eta: 0:00:28 iter: 279 total_loss: 0.865 loss_cls: 0.156 loss_box_reg: 0.494 loss_mask: 0.137 loss_rpn_cls: 0.014 loss_rpn_loc: 0.006 time: 1.3460 data_time: 0.0045 lr: 0.000070 max_mem: 2758M [10/15 06:23:00 d2.utils.events]: eta: 0:00:01 iter: 299 total_loss: 0.869 loss_cls: 0.159 loss_box_reg: 0.534 loss_mask: 0.150 loss_rpn_cls: 0.030 loss_rpn_loc: 0.012 time: 1.3476 data_time: 0.0047 lr: 0.000075 max_mem: 2758M [10/15 06:23:01 d2.engine.hooks]: Overall training speed: 297 iterations in 0:06:41 (1.3521 s / it) [10/15 06:23:01 d2.engine.hooks]: Total training time: 0:06:44 (0:00:02 on hooks) OrderedDict()

在 tensorboard 可视化训练过程:

load_ext tensorboard tensorboard --logdir output

5. 模型测试

模型训练完成后,在 ballon 验证数据集上进行模型推断.

#

cfg.MODEL.WEIGHTS = os.path.join(cfg.OUTPUT_DIR, "model_final.pth")

cfg.MODEL.ROI_HEADS.SCORE_THRESH_TEST = 0.7 #测试的阈值

cfg.DATASETS.TEST = ("balloon/val", )

predictor = DefaultPredictor(cfg)

#随机选部分图片,可视化

from detectron2.utils.visualizer import ColorMode

dataset_dicts = get_balloon_dicts("balloon/val")

for d in random.sample(dataset_dicts, 3):

im = cv2.imread(d["file_name"])

outputs = predictor(im)

v = Visualizer(im[:, :, ::-1],

metadata=balloon_metadata,

scale=0.8,

instance_mode=ColorMode.IMAGE_BW # 去除非气球区域的像素颜色.

)

v = v.draw_instance_predictions(outputs["instances"].to("cpu"))

plt.show(v.get_image()[:, :, ::-1])

plt.show()输出如:

6. 模型评估

计算 COCO API 中的 AP metric.

from detectron2.evaluation import COCOEvaluator, inference_on_dataset

from detectron2.data import build_detection_test_loader

evaluator = COCOEvaluator("balloon_val", cfg, False, output_dir="./output/")

val_loader = build_detection_test_loader(cfg, "balloon_val")

inference_on_dataset(trainer.model, val_loader, evaluator)输出如:

[11/15 04:10:32 d2.evaluation.evaluator]: Start inference on 13 images [11/15 04:10:46 d2.evaluation.evaluator]: Total inference time: 0:00:05 (0.625000 s / img per device, on 1 devices) [11/15 04:10:46 d2.evaluation.evaluator]: Total inference pure compute time: 0:00:03 (0.437190 s / img per device, on 1 devices) [11/15 04:10:46 d2.evaluation.coco_evaluation]: Preparing results for COCO format ... [11/15 04:10:46 d2.evaluation.coco_evaluation]: Saving results to ./output/coco_instances_results.json [11/15 04:10:46 d2.evaluation.coco_evaluation]: Evaluating predictions ... Loading and preparing results... DONE (t=0.00s) creating index... index created! Running per image evaluation... Evaluate annotation type *bbox* DONE (t=0.13s). Accumulating evaluation results... DONE (t=0.02s). Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.681 Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.849 Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.828 Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.011 Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.572 Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.816 Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.228 Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.714 Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.750 Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.133 Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.671 Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.857 [11/15 04:10:46 d2.evaluation.coco_evaluation]: Evaluation results for bbox: | AP | AP50 | AP75 | APs | APm | APl | |:------:|:------:|:------:|:-----:|:------:|:------:| | 68.133 | 84.928 | 82.807 | 1.106 | 57.231 | 81.589 | Loading and preparing results... DONE (t=0.02s) creating index... index created! Running per image evaluation... Evaluate annotation type *segm* DONE (t=0.15s). Accumulating evaluation results... DONE (t=0.02s). Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.770 Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.846 Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.840 Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.008 Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.589 Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.936 Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.248 Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.780 Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.820 Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.233 Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.682 Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.957 [11/15 04:10:46 d2.evaluation.coco_evaluation]: Evaluation results for segm: | AP | AP50 | AP75 | APs | APm | APl | |:------:|:------:|:------:|:-----:|:------:|:------:| | 76.976 | 84.647 | 84.017 | 0.763 | 58.887 | 93.632 | OrderedDict([('bbox', {'AP': 68.13251528146942, 'AP50': 84.9277873853965, 'AP75': 82.8068039080166, 'APl': 81.58885128967246, 'APm': 57.23126698500541, 'APs': 1.1056105610561056}), ('segm', {'AP': 76.97562111397131, 'AP50': 84.64734364022362, 'AP75': 84.01711775272273, 'APl': 93.63197968598362, 'APm': 58.887208805837645, 'APs': 0.763201320132013})])

7. 参考

[1] - Colab - Detectron2 Tutorial.ipynb

One comment

你好,我注意到您采用 的是COCO 预训练的 R50-FPN Mask R-CNN 模型,对其进行微调,但是测试结果中识别不到人了,但是这个原始模型是能识别coco数据集的80类物体,想知道,微调之后是只能输出气球这一类吗?