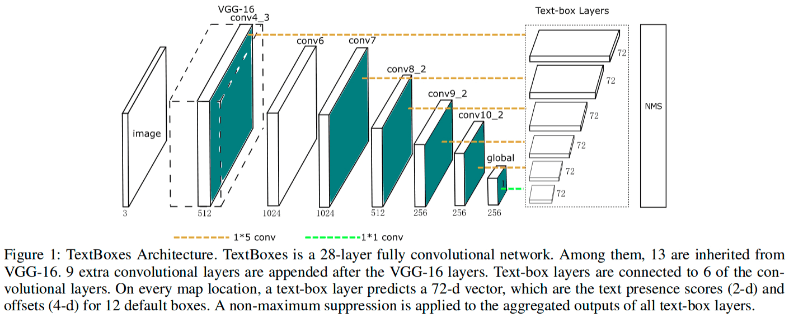

Paper - TextBoxes: A Fast Text Detector with a Single Deep Neural Network - AAAI 2017

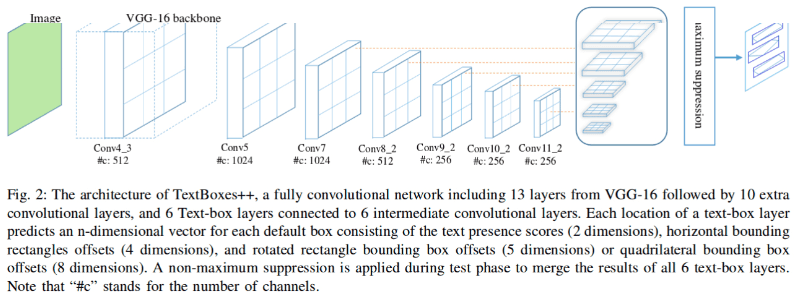

Paper - TextBoxes++: A Single-Shot Oriented Scene Text Detector-2018

TextBoxes and TextBoxes++ are both improvements built on top of SSD. This post only runs a quick test of their text detection part.

1. Network Architecture

1.1. TextBoxes

1.2. TextBoxes++

2. Test Demo

Model downloads:

TextBoxes:

TextBoxes++:

- deploy.prototxt

- model trained on ICDAR 2015 Incidental Text - Baidu Netdisk

- model trained on ICDAR 2015 Incidental Text - Dropbox

2.1. TextBoxes

```python
#!/usr/bin/python3
import numpy as np
import matplotlib.pyplot as plt
import sys
from nms import nms
import cv2

caffe_root = '/path/to/TextBoxes/'
sys.path.insert(0, caffe_root + 'python')
import caffe

caffe.set_device(0)
caffe.set_mode_gpu()


class general_textboxes_detector(object):
    def __init__(self, use_multi_scale=False):
        self.net_height = 768
        self.net_width = 768
        prototxt = './icdar13/deploy.prototxt'
        caffemodel = './icdar13/TextBoxes_icdar13.caffemodel'
        self.net = caffe.Net(prototxt, caffemodel, caffe.TEST)
        self.det_score_threshold = 0.2
        self.overlap_threshold = 0.2
        # Each scale is the (height, width) the image is resized to.
        if use_multi_scale:
            # self.scales = ((300, 300), (700, 700),
            #                (700, 500), (700, 300),
            #                (1600, 1600))
            self.scales = ((300, 300), (700, 700),
                           (700, 500), (700, 300))
        else:
            self.scales = ((700, 700),)

    def get_transformer(self, net_height, net_width):
        transformer = caffe.io.Transformer(
            {'data': (1, 3, net_height, net_width)})
        transformer.set_transpose('data', (2, 0, 1))        # HWC -> CHW
        transformer.set_mean('data', np.array([104, 117, 123]))  # BGR mean
        transformer.set_raw_scale('data', 255)              # [0, 1] -> [0, 255]
        transformer.set_channel_swap('data', (2, 1, 0))     # RGB -> BGR
        return transformer

    def extract_detections(self, detections, img_height, img_width):
        dt_results = []
        # Parse the outputs. Each detection_out row is
        # [image_id, label, confidence, xmin, ymin, xmax, ymax],
        # with coordinates normalized to [0, 1].
        det_label = detections[0, 0, :, 1]
        det_conf = detections[0, 0, :, 2]
        det_xmin = detections[0, 0, :, 3]
        det_ymin = detections[0, 0, :, 4]
        det_xmax = detections[0, 0, :, 5]
        det_ymax = detections[0, 0, :, 6]

        # Keep detections whose confidence >= self.det_score_threshold.
        top_indices = [i for i, conf in enumerate(det_conf)
                       if conf >= self.det_score_threshold]
        top_conf = det_conf[top_indices]
        top_xmin = det_xmin[top_indices]
        top_ymin = det_ymin[top_indices]
        top_xmax = det_xmax[top_indices]
        top_ymax = det_ymax[top_indices]

        for i in range(top_conf.shape[0]):
            # Scale to pixel coordinates of the original image.
            xmin = int(round(top_xmin[i] * img_width))
            ymin = int(round(top_ymin[i] * img_height))
            xmax = int(round(top_xmax[i] * img_width))
            ymax = int(round(top_ymax[i] * img_height))
            # Clip to the image border.
            xmin = max(1, xmin)
            ymin = max(1, ymin)
            xmax = min(img_width - 1, xmax)
            ymax = min(img_height - 1, ymax)
            score = top_conf[i]
            # Store the box as a quad (top-left, top-right, bottom-right,
            # bottom-left) plus score, so it can go through the quad NMS.
            dt_result = [xmin, ymin, xmax, ymin, xmax, ymax, xmin, ymax, score]
            dt_results.append(dt_result)
        return dt_results

    def apply_quad_nms(self, bboxes):
        dt_results = sorted(bboxes, key=lambda x: -float(x[8]))
        nms_flag = nms(dt_results, self.overlap_threshold)
        results = []
        for k, dt in enumerate(dt_results):
            if nms_flag[k]:
                if dt not in results:
                    results.append(dt)
        return results

    def predict(self, img_file):
        img = caffe.io.load_image(img_file)
        img_height, img_width, channels = img.shape
        scales_results = []
        for scale in self.scales:
            img_resize_height, img_resize_width = scale[0], scale[1]
            print("[INFO]Img resize height: {}, Img resize width: {}"
                  .format(img_resize_height, img_resize_width))
            self.net.blobs['data'].reshape(1, 3,
                                           img_resize_height,
                                           img_resize_width)
            transformer = self.get_transformer(
                img_resize_height, img_resize_width)
            transformed_image = transformer.preprocess('data', img)
            self.net.blobs['data'].data[...] = transformed_image
            # Forward pass.
            detections = self.net.forward()['detection_out']
            # Coordinates are normalized, so every scale maps back
            # to the original image size.
            scale_results = self.extract_detections(
                detections, img_height, img_width)
            scales_results.extend(scale_results)
        # apply non-maximum suppression over all scales
        results = self.apply_quad_nms(scales_results)
        return results

    def vis_res(self, img_file, results):
        img_cv2 = cv2.imread(img_file)
        plt.figure(figsize=(10, 8))
        plt.imshow(img_cv2[:, :, ::-1])   # BGR -> RGB for matplotlib
        currentAxis = plt.gca()
        for result in results:
            # result = [x1, y1, x2, y2, x3, y3, x4, y4, score]
            quad = np.array(result[:8]).reshape(4, 2)
            currentAxis.add_patch(
                plt.Polygon(quad, fill=False, edgecolor='r', linewidth=2))
        plt.axis('off')
        plt.show()


def analyze():
    general_textboxes_detector_model = \
        general_textboxes_detector(use_multi_scale=True)
    img_file = 'test.jpg'
    results = general_textboxes_detector_model.predict(img_file)
    general_textboxes_detector_model.vis_res(img_file, results)
    print('[INFO]Detection Done.')


if __name__ == '__main__':
    analyze()
```

Sample detection result:
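Both demos configure `caffe.io.Transformer` the same way. To make those settings concrete, here is a numpy-only sketch of what they do to an image returned by `caffe.io.load_image` (H×W×3, RGB, floats in [0, 1]). The function name `preprocess_like_caffe` is hypothetical, and the sketch assumes the image already matches the network input size: the Transformer also resizes when the shapes differ, and that step is left out here.

```python
import numpy as np

# Per-channel mean in BGR order, as passed to set_mean() in the demos.
BGR_MEAN = np.array([104.0, 117.0, 123.0], dtype=np.float32)


def preprocess_like_caffe(img_rgb01):
    """Hypothetical numpy equivalent of the demos' Transformer settings.

    img_rgb01: H x W x 3 float array, RGB, values in [0, 1], assumed to be
    already resized to the network input size (no resizing is done here).
    Returns a 3 x H x W float32 array suitable for net.blobs['data'].
    """
    x = img_rgb01.astype(np.float32)
    x = x.transpose(2, 0, 1)          # set_transpose((2, 0, 1)): HWC -> CHW
    x = x[[2, 1, 0], :, :]            # set_channel_swap((2, 1, 0)): RGB -> BGR
    x = x * 255.0                     # set_raw_scale(255): [0, 1] -> [0, 255]
    x = x - BGR_MEAN[:, None, None]   # set_mean([104, 117, 123]), per channel
    return x


if __name__ == '__main__':
    img = np.zeros((2, 3, 3), dtype=np.float32)
    img[..., 0] = 1.0   # red channel
    img[..., 1] = 0.5   # green channel
    out = preprocess_like_caffe(img)
    print(out.shape)      # (3, 2, 3)
    print(out[:, 0, 0])   # B, G, R values: -104.0, 10.5, 132.0
```

The mean is subtracted after the channel swap, which is why `[104, 117, 123]` is read as B, G, R.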

2.2. TextBoxes++

```python
#!/usr/bin/python3
import cv2
import numpy as np
import matplotlib.pyplot as plt
from nms import nms
import sys

caffe_root = '/path/to/TextBoxes_plusplus/'
sys.path.insert(0, caffe_root + 'python')
import caffe

caffe.set_device(0)
caffe.set_mode_gpu()


class general_textboxes_detector(object):
    def __init__(self):
        self.net_height = 768
        self.net_width = 768
        prototxt = './icdar15/deploy.prototxt'
        caffemodel = './icdar15/model_icdar15.caffemodel'
        self.net = caffe.Net(prototxt, caffemodel, caffe.TEST)
        # Single-scale inference: the input blob is fixed to 768 x 768.
        self.net.blobs['data'].reshape(1, 3,
                                       self.net_height,
                                       self.net_width)
        self.transformer = caffe.io.Transformer(
            {'data': (1, 3, self.net_height, self.net_width)})
        self.transformer.set_transpose('data', (2, 0, 1))
        self.transformer.set_mean('data', np.array([104, 117, 123]))
        self.transformer.set_raw_scale('data', 255)
        self.transformer.set_channel_swap('data', (2, 1, 0))
        self.det_score_threshold = 0.2
        self.overlap_threshold = 0.2

    def extract_detections(self, detections, img_height, img_width):
        det_conf = detections[0, 0, :, 2]
        # Columns 7-14 hold the quadrilateral corners x1, y1, ..., x4, y4,
        # normalized to [0, 1].
        det_x1 = detections[0, 0, :, 7]
        det_y1 = detections[0, 0, :, 8]
        det_x2 = detections[0, 0, :, 9]
        det_y2 = detections[0, 0, :, 10]
        det_x3 = detections[0, 0, :, 11]
        det_y3 = detections[0, 0, :, 12]
        det_x4 = detections[0, 0, :, 13]
        det_y4 = detections[0, 0, :, 14]

        # Keep detections whose confidence >= self.det_score_threshold.
        top_indices = [i for i, conf in enumerate(det_conf)
                       if conf >= self.det_score_threshold]
        top_conf = det_conf[top_indices]
        top_x1 = det_x1[top_indices]
        top_y1 = det_y1[top_indices]
        top_x2 = det_x2[top_indices]
        top_y2 = det_y2[top_indices]
        top_x3 = det_x3[top_indices]
        top_y3 = det_y3[top_indices]
        top_x4 = det_x4[top_indices]
        top_y4 = det_y4[top_indices]

        bboxes = []
        for i in range(top_conf.shape[0]):
            # Scale to pixel coordinates of the original image.
            x1 = int(round(top_x1[i] * img_width))
            y1 = int(round(top_y1[i] * img_height))
            x2 = int(round(top_x2[i] * img_width))
            y2 = int(round(top_y2[i] * img_height))
            x3 = int(round(top_x3[i] * img_width))
            y3 = int(round(top_y3[i] * img_height))
            x4 = int(round(top_x4[i] * img_width))
            y4 = int(round(top_y4[i] * img_height))
            # Clip every corner into [1, size - 1].
            x1 = max(1, min(x1, img_width - 1))
            x2 = max(1, min(x2, img_width - 1))
            x3 = max(1, min(x3, img_width - 1))
            x4 = max(1, min(x4, img_width - 1))
            y1 = max(1, min(y1, img_height - 1))
            y2 = max(1, min(y2, img_height - 1))
            y3 = max(1, min(y3, img_height - 1))
            y4 = max(1, min(y4, img_height - 1))
            score = top_conf[i]
            bbox = [x1, y1, x2, y2, x3, y3, x4, y4, score]
            bboxes.append(bbox)
        return bboxes

    def apply_quad_nms(self, bboxes):
        dt_lines = sorted(bboxes, key=lambda x: -float(x[8]))
        nms_flag = nms(dt_lines, self.overlap_threshold)
        results = []
        for k, dt in enumerate(dt_lines):
            if nms_flag[k]:
                if dt not in results:
                    results.append(dt)
        return results

    def predict(self, img_file):
        img = caffe.io.load_image(img_file)
        transformed_img = self.transformer.preprocess('data', img)
        self.net.blobs['data'].data[...] = transformed_img
        img_height, img_width, channels = img.shape
        detections = self.net.forward()['detection_out']
        bboxes = self.extract_detections(detections, img_height, img_width)
        # apply non-maximum suppression
        results = self.apply_quad_nms(bboxes)
        return results

    def vis_res(self, img_file, results):
        img_cv2 = cv2.imread(img_file)
        plt.figure(figsize=(10, 8))
        plt.imshow(img_cv2[:, :, ::-1])   # BGR -> RGB for matplotlib
        currentAxis = plt.gca()
        for result in results:
            # result = [x1, y1, x2, y2, x3, y3, x4, y4, score]
            quad = np.array(result[:8]).reshape(4, 2)
            currentAxis.add_patch(
                plt.Polygon(quad, fill=False, edgecolor='r', linewidth=2))
        plt.axis('off')
        plt.show()


def analyze():
    general_textboxes_detector_model = general_textboxes_detector()
    img_file = "test.jpg"
    results = general_textboxes_detector_model.predict(img_file)
    general_textboxes_detector_model.vis_res(img_file, results)
    print('[INFO]Detection Done.')


if __name__ == '__main__':
    analyze()
```

Sample detection result:

Compared with TextBoxes, TextBoxes++ gives a better result on this image.
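The TextBoxes++ `extract_detections` method reads the confidence from column 2 and the quadrilateral corners from columns 7 to 14 of each `detection_out` row, then scales and clips them one value at a time. The same steps can be vectorized with numpy. This is a sketch: `decode_quads` is a hypothetical helper, and the column layout is taken from the demo code rather than checked against the TextBoxes++ sources.

```python
import numpy as np


def decode_quads(detections, img_height, img_width, score_threshold=0.2):
    """Vectorized sketch of the TextBoxes++ extract_detections() loop.

    detections: array shaped (1, 1, N, C) with C >= 15, where column 2 is
    the confidence and columns 7..14 are x1, y1, ..., x4, y4 in [0, 1]
    (layout assumed from the demo code).
    Returns (quads, scores): an (M, 8) int array in pixels and (M,) scores.
    """
    rows = detections[0, 0]
    rows = rows[rows[:, 2] >= score_threshold]     # confidence filter
    quads = rows[:, 7:15].astype(np.float64)       # copy of the 8 coordinates
    quads[:, 0::2] *= img_width                    # x1, x2, x3, x4
    quads[:, 1::2] *= img_height                   # y1, y2, y3, y4
    quads = np.rint(quads).astype(int)             # round half to even, like round()
    quads[:, 0::2] = np.clip(quads[:, 0::2], 1, img_width - 1)
    quads[:, 1::2] = np.clip(quads[:, 1::2], 1, img_height - 1)
    return quads, rows[:, 2]


if __name__ == '__main__':
    dets = np.zeros((1, 1, 3, 15))
    dets[0, 0, 0, 2] = 0.8
    dets[0, 0, 0, 7:15] = [0.1, 0.1, 0.9, 0.1, 0.9, 0.5, 0.1, 0.5]
    dets[0, 0, 1, 2] = 0.05                        # below threshold, dropped
    dets[0, 0, 2, 2] = 0.3
    dets[0, 0, 2, 7:15] = [-0.1, 0.0, 1.2, 0.0, 1.2, 1.0, -0.1, 1.0]  # gets clipped
    quads, scores = decode_quads(dets, img_height=50, img_width=100)
    print(quads.tolist())  # [[10, 5, 90, 5, 90, 25, 10, 25], [1, 1, 99, 1, 99, 49, 1, 49]]
```

Filtering first and then operating on whole columns replaces the sixteen per-coordinate variables with two slices (`0::2` for x, `1::2` for y).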

2.3. TextBoxes NMS Function
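In this section's `nms`, overlap comes from `polygon_iou`, which divides the intersection area by the area of the convex hull of all eight corner points, not by the true union. That hull contains both quadrilaterals, so this IoU is never larger than the exact one. The dependency-free sketch below computes both variants for two quads so the difference is visible. It swaps Shapely for a monotone-chain convex hull and Sutherland-Hodgman clipping, and all helper names (`polygon_area`, `convex_hull`, `clip`, `quad_iou`) are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def polygon_area(poly: List[Point]) -> float:
    """Shoelace formula; poly is an ordered list of vertices."""
    s = 0.0
    for (x1, y1), (x2, y2) in zip(poly, poly[1:] + poly[:1]):
        s += x1 * y2 - x2 * y1
    return abs(s) / 2.0


def cross(o: Point, a: Point, b: Point) -> float:
    """z-component of (a - o) x (b - o); > 0 means b is left of o->a."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points: List[Point]) -> List[Point]:
    """Andrew's monotone chain; hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower: List[Point] = []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    upper: List[Point] = []
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def clip(subject: List[Point], clipper: List[Point]) -> List[Point]:
    """Sutherland-Hodgman: part of `subject` inside convex CCW `clipper`."""
    out = subject
    for a, b in zip(clipper, clipper[1:] + clipper[:1]):
        inp, out = out, []
        for prev, cur in zip(inp[-1:] + inp[:-1], inp):
            d_prev, d_cur = cross(a, b, prev), cross(a, b, cur)
            if (d_cur >= 0) != (d_prev >= 0):
                # Edge prev->cur crosses the line a->b: add the crossing point.
                t = d_prev / (d_prev - d_cur)
                out.append((prev[0] + t * (cur[0] - prev[0]),
                            prev[1] + t * (cur[1] - prev[1])))
            if d_cur >= 0:
                out.append(cur)
    return out


def quad_iou(q1: Sequence[float], q2: Sequence[float],
             hull_union: bool = True) -> float:
    """IoU of quads [x1, y1, ..., x4, y4], each replaced by its convex hull.

    hull_union=True mimics polygon_iou(): union = hull of all 8 points.
    hull_union=False uses the exact union: area1 + area2 - intersection.
    """
    p1 = convex_hull(list(zip(q1[0:8:2], q1[1:8:2])))
    p2 = convex_hull(list(zip(q2[0:8:2], q2[1:8:2])))
    inter = polygon_area(clip(p1, p2)) if len(p2) >= 3 else 0.0
    if hull_union:
        union = polygon_area(convex_hull(p1 + p2))
    else:
        union = polygon_area(p1) + polygon_area(p2) - inter
    return inter / union if union > 0 else 0.0


if __name__ == '__main__':
    a = [0, 0, 2, 0, 2, 2, 0, 2]
    b = [1, 1, 3, 1, 3, 3, 1, 3]
    print(quad_iou(a, b))                    # 0.125 (hull "union" = 8)
    print(quad_iou(a, b, hull_union=False))  # 1/7, about 0.143 (exact union = 7)
```

With a threshold of 0.2, as in the demos, the gap rarely flips a decision for near-duplicate boxes, but diagonally offset boxes are suppressed slightly less often than an exact IoU would suppress them.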

import shapely

from shapely.geometry import Polygon,MultiPoint

def polygon_from_list(line):

"""

Create a shapely polygon object from gt or dt line.

"""

#polygon_points = [float(o) for o in line.split(',')[:8]]

polygon_points = np.array(line).reshape(4, 2)

polygon = Polygon(polygon_points).convex_hull

return polygon

def polygon_iou(list1, list2):

"""

两个 shapely 多边形间的 IoU.

"""

polygon_points1 = np.array(list1).reshape(4, 2)

poly1 = Polygon(polygon_points1).convex_hull

polygon_points2 = np.array(list2).reshape(4, 2)

poly2 = Polygon(polygon_points2).convex_hull

union_poly = np.concatenate((polygon_points1,polygon_points2))

if not poly1.intersects(poly2):

# this test is fast and can accelerate calculation

iou = 0

else:

try:

inter_area = poly1.intersection(poly2).area

#union_area = poly1.area + poly2.area - inter_area

union_area = MultiPoint(union_poly).convex_hull.area

if union_area == 0:

return 0

iou = float(inter_area) / union_area

except shapely.geos.TopologicalError:

print('shapely.geos.TopologicalError occured, iou set to 0')

iou = 0

return iou

def nms(boxes,overlap):

rec_scores = [b[-1] for b in boxes]

indices = sorted(range(len(rec_scores)),

key=lambda k: -rec_scores[k])

box_num = len(boxes)

nms_flag = [True]*box_num

for i in range(box_num):

ii = indices[i]

if not nms_flag[ii]:

continue

for j in range(box_num):

jj = indices[j]

if j == i:

continue

if not nms_flag[jj]:

continue

box1 = boxes[ii]

box2 = boxes[jj]

box1_score = rec_scores[ii]

box2_score = rec_scores[jj]

# str1 = box1[9]

# str2 = box2[9]

box_i = [box1[0],box1[1],box1[4],box1[5]]

box_j = [box2[0],box2[1],box2[4],box2[5]]

poly1 = polygon_from_list(box1[0:8])

poly2 = polygon_from_list(box2[0:8])

iou = polygon_iou(box1[0:8],box2[0:8])

thresh = overlap

if iou > thresh:

if box1_score > box2_score:

nms_flag[jj] = False

if box1_score == box2_score and \

poly1.area > poly2.area:

nms_flag[jj] = False

if box1_score == box2_score and \

poly1.area<=poly2.area:

nms_flag[ii] = False

break

'''

if abs((box_i[3]-box_i[1])-(box_j[3]-box_j[1])) <

((box_i[3]-box_i[1])+(box_j[3]-box_j[1]))/2:

if abs(box_i[3]-box_j[3])+abs(box_i[1]-box_j[1])<

(max(box_i[3],box_j[3])-min(box_i[1],box_j[1]))/3:

if box_i[0]<=box_j[0] and \

(box_i[2]+min(box_i[3]-box_i[1],box_j[3]-box_j[1])>=box_j[2]):

nms_flag[jj] = False

'''

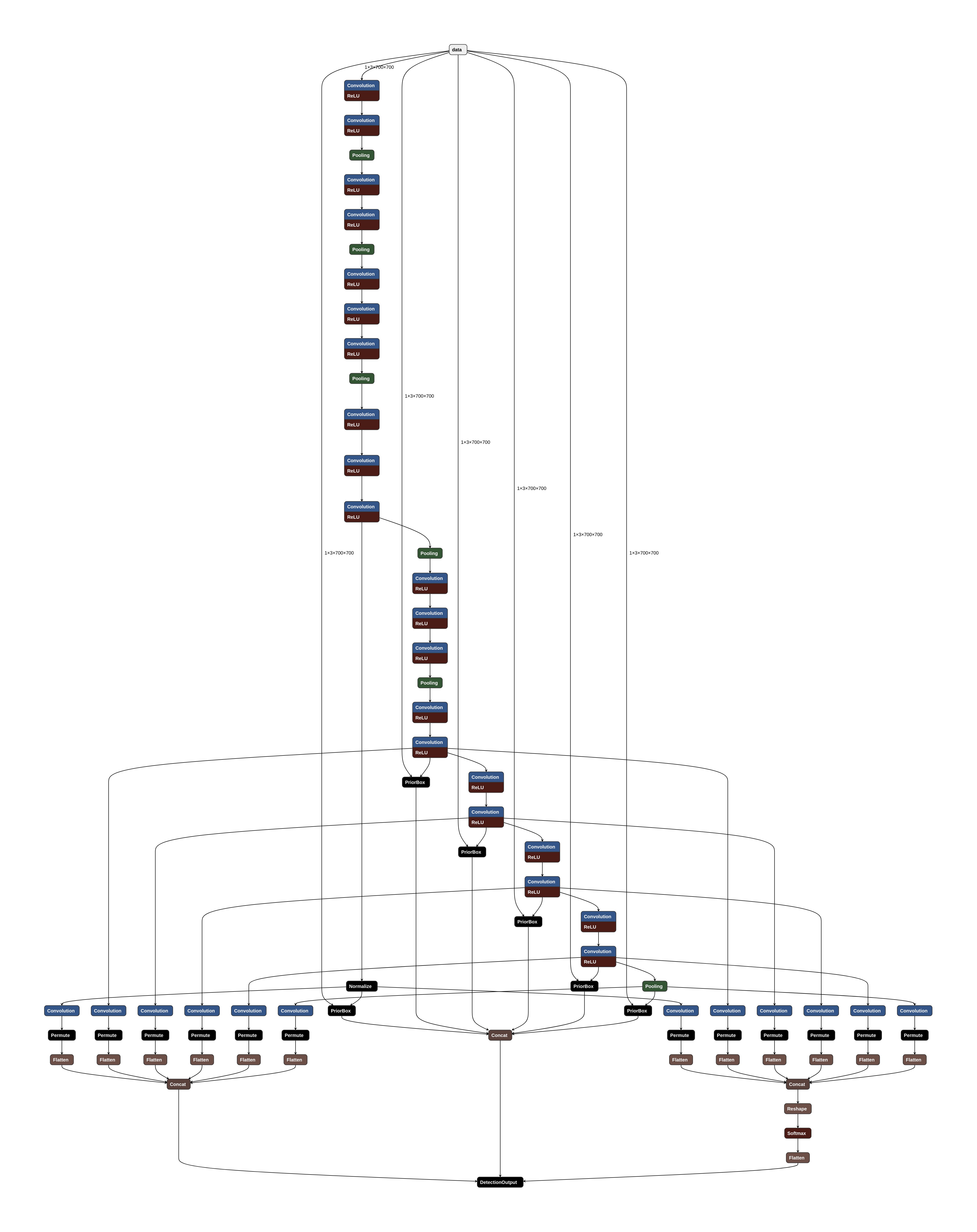

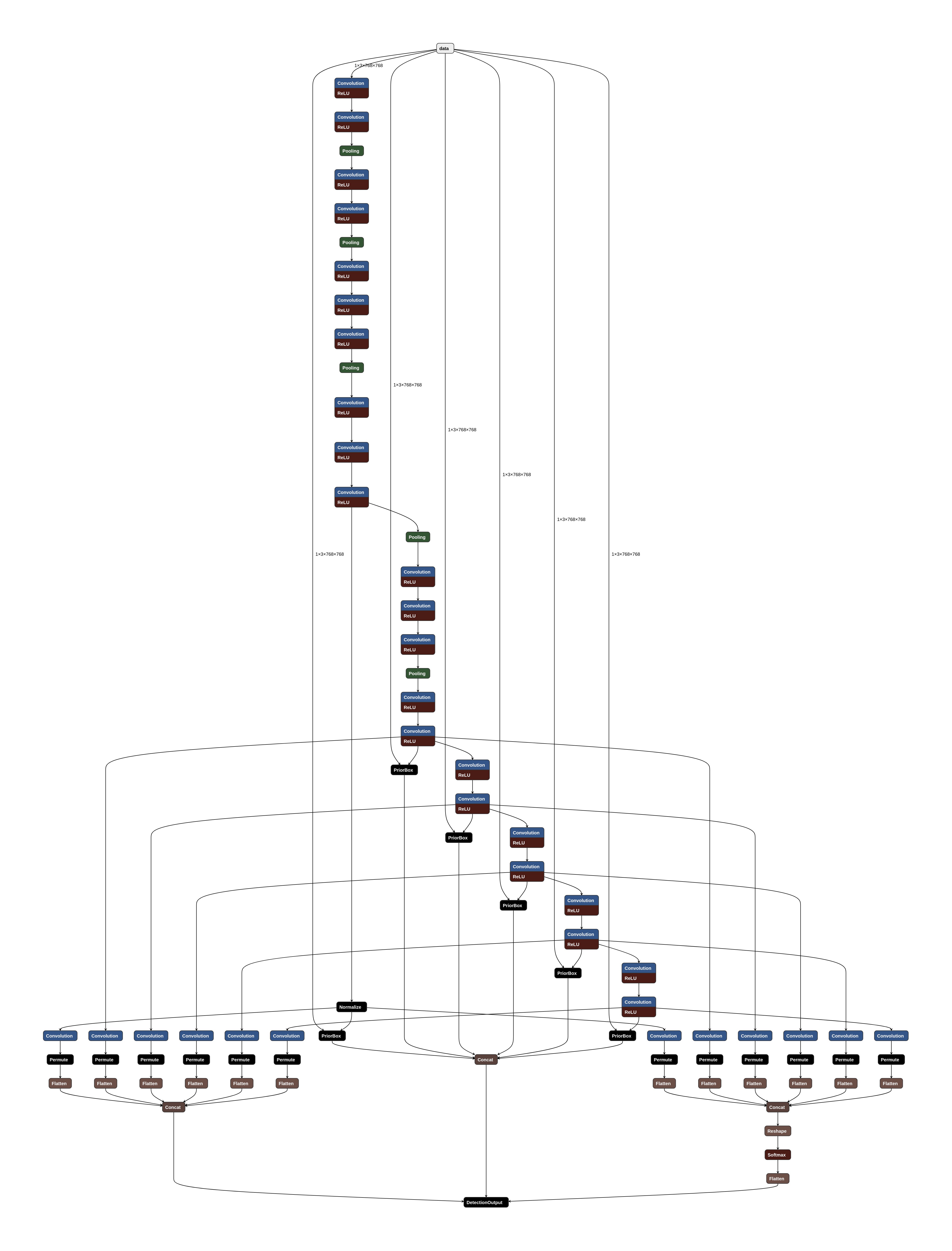

return nms_flag3. 网络 deploy 结构可视化

Use Netron to open deploy.prototxt and view the network structure.
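Netron shows the full graph. For a quick text listing of the same file, a rough sketch can walk `deploy.prototxt` line by line and collect the `name` and `type` of each top-level `layer { ... }` block. `list_layers` is hypothetical and uses a brace-counting heuristic, not a protobuf text-format parser. It assumes one field per line, as in typical Caffe prototxt files, and it will not read fields from a layer written entirely on one line.

```python
import re
from typing import List, Tuple

# Matches a direct field such as `name: "conv1_1"` or `type: CONVOLUTION`.
FIELD_RE = re.compile(r'(name|type)\s*:\s*"?([^"\s]+)"?')


def list_layers(prototxt_text: str) -> List[Tuple[str, str]]:
    """Return (name, type) for each top-level layer block, in file order."""
    layers = []
    depth = 0
    current = None
    for raw in prototxt_text.splitlines():
        line = raw.split('#', 1)[0].strip()        # drop comments
        if not line:
            continue
        if depth == 0 and re.match(r'layers?\s*\{', line):
            current = {}                           # entering a new layer block
        elif depth == 1 and current is not None:
            m = FIELD_RE.match(line)               # only direct fields of the layer
            if m:
                current.setdefault(m.group(1), m.group(2))
        depth += line.count('{') - line.count('}')
        if depth == 0 and current is not None:    # layer block just closed
            layers.append((current.get('name', '?'), current.get('type', '?')))
            current = None
    return layers
```

Usage, assuming the file sits in the working directory: `for name, t in list_layers(open('deploy.prototxt').read()): print(t, name)`.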

3.1. TextBoxes


3.2. TextBoxes++
