Paper: Feature Pyramid Networks for Object Detection - CVPR 2017

Authors: Tsung-Yi Lin, Piotr Dollar, Ross Girshick, Kaiming He, Bharath Hariharan, Serge Belongie

Affiliations: FAIR, Cornell University and Cornell Tech

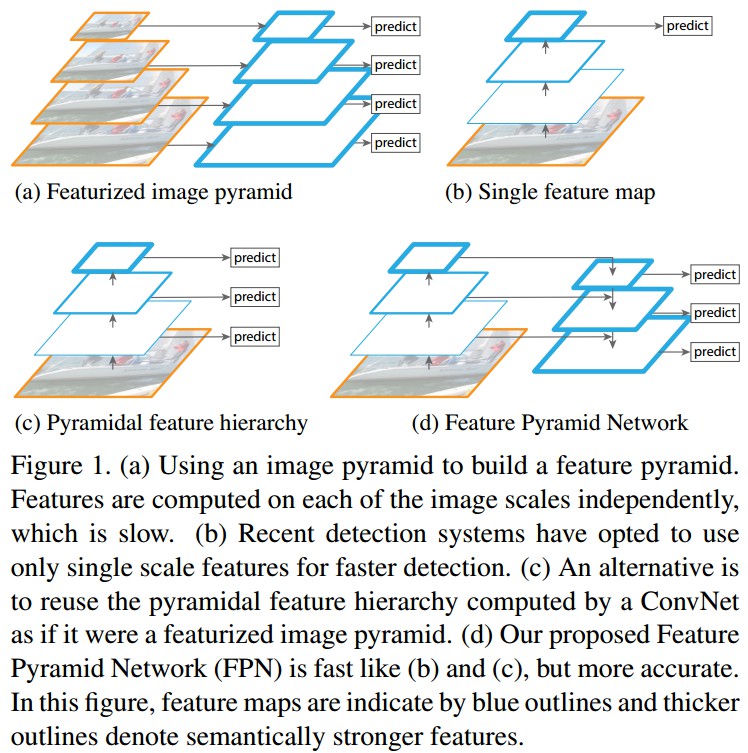

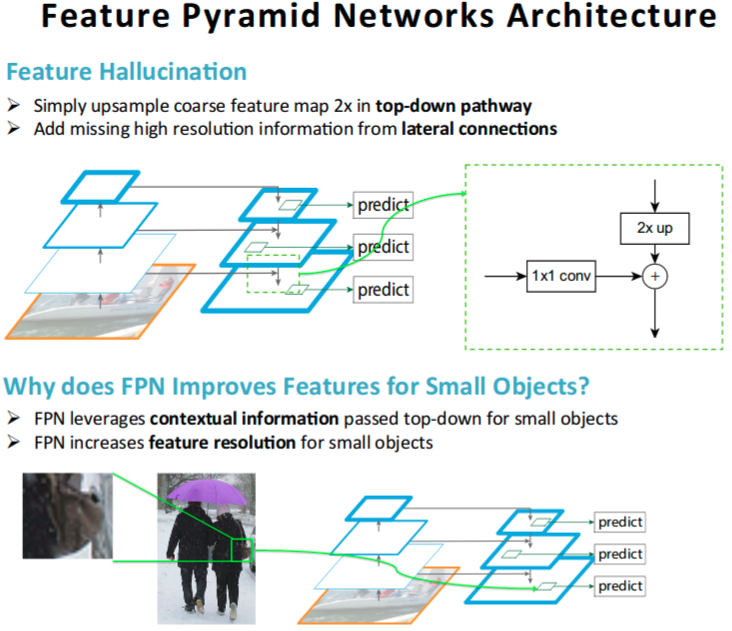

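The paper proposes a new architecture, the Feature Pyramid Network (FPN): a top-down architecture with lateral connections that builds high-level semantic feature maps at all scales. See Fig. 1(d).

Recognizing objects at very different sizes (scales) is a fundamental challenge in computer vision. Featurized image pyramids are a standard solution; see Fig. 1(a). An image pyramid is built from the original image, features are extracted separately at each pyramid level, and predictions are made on each level's features. This is expensive in both computation and memory, but it gives good detection accuracy.

In deep-learning-based recognition, features are usually computed by a deep convolutional network (ConvNet). These features represent high-level image semantics and are more robust to scale variation, so recognition can be done on features computed from a single input scale; see Fig. 1(b).

Although single-scale features are fairly robust, a pyramid is still needed for the best accuracy. The top entries in the ImageNet and COCO challenges all use multi-scale testing on featurized image pyramids. Because of memory limits, however, training a deep network end-to-end on an image pyramid is infeasible.

Image pyramids are not the only way to compute a multi-scale feature representation. A deep ConvNet computes a feature hierarchy layer by layer, and because of its subsampling layers this hierarchy has an inherent multi-scale, pyramidal shape. The hierarchy yields feature maps at different spatial resolutions, but layers at different depths differ widely in semantic strength. The high-resolution maps carry only low-level features, which limits their representational capacity for object detection.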

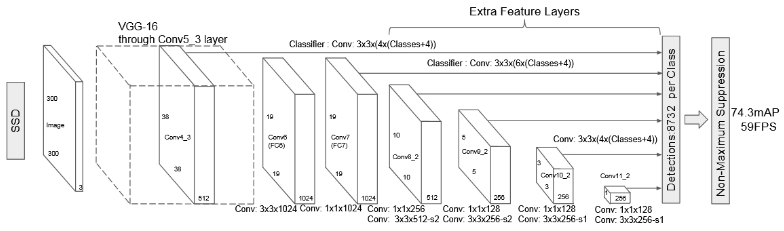

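SSD was among the first to use a ConvNet's pyramidal feature hierarchy; see the figure below and Fig. 1(c).

SSD's pyramid reuses the multi-scale feature maps that different layers compute in the forward pass, so it adds no computational cost. To avoid low-level features, SSD starts from the Conv4_3 layer of VGG nets and adds several new layers, extracting features from each of them. As a result, SSD does not use the high-resolution low-level feature maps, which hurts detection of small objects.

(1.a) - Builds the feature pyramid from an image pyramid. Features are computed separately at each image scale, which is slow.

(1.b) - Deep-network detectors use only single-scale features so that detection is faster.

(1.c) - Reuses the pyramidal feature hierarchy computed by the ConvNet, similar to (1.a).

(1.d) - The proposed Feature Pyramid Network (FPN) is as fast as (1.b) and (1.c) but more accurate.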

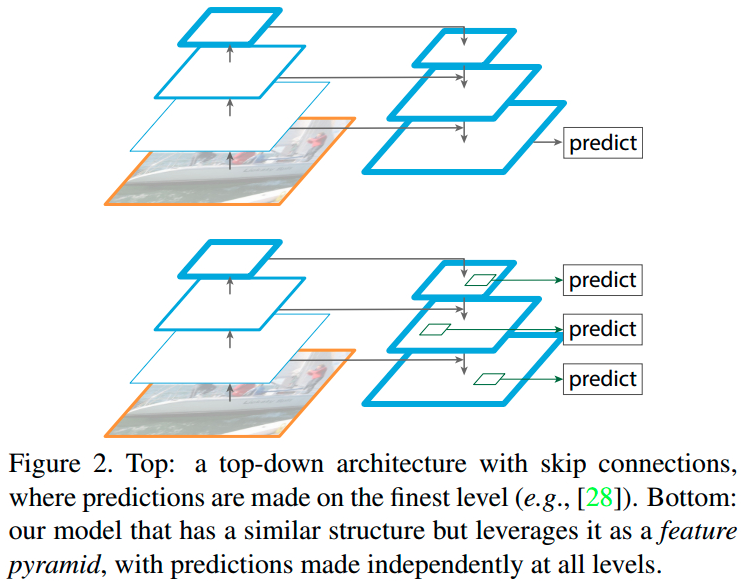

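Top-down architectures with skip connections are also common; see Fig. 2 (top). They typically produce a single high-level feature map at fine resolution, on which predictions are made.

FPN uses a similar structure as a feature pyramid and makes predictions independently at each level; see Fig. 2 (bottom).

1. FPN

The goal of FPN is to take a ConvNet's pyramidal feature hierarchy, whose semantics range from low level to high level, and build a feature pyramid with high-level semantics throughout.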

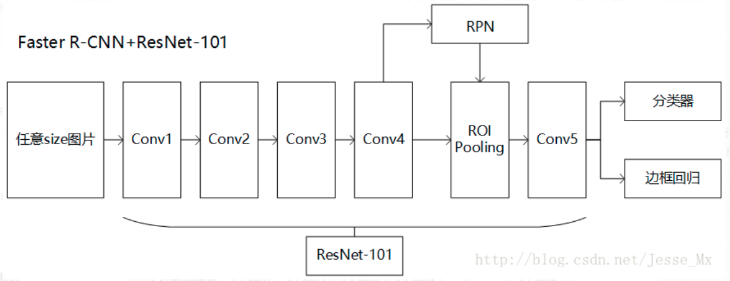

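FPN is general-purpose; the paper focuses on sliding-window proposers (RPN) and region-based detectors (Fast R-CNN).

FPN takes a single-scale image of arbitrary size as input and, in a fully convolutional fashion, outputs proportionally sized feature maps at multiple levels. The process is independent of the backbone architecture; ResNets are used here.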

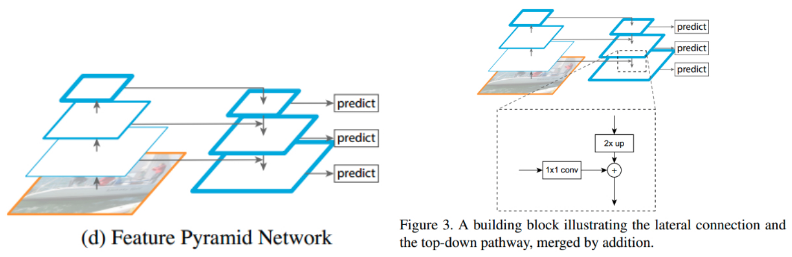

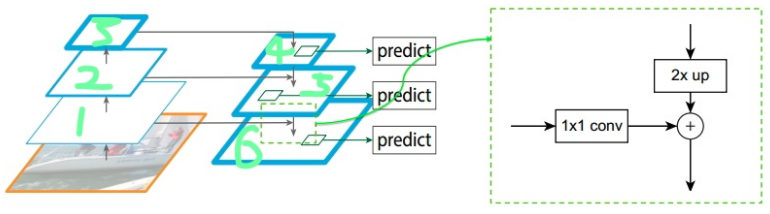

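Building the FPN pyramid involves a bottom-up pathway, a top-down pathway, and lateral connections; see Fig. 3.

[1] - Bottom-up pathway

The bottom-up pathway is the feed-forward computation of the backbone ConvNet. It computes a feature hierarchy of feature maps at several scales, with a scaling step of 2.

Many layers often produce output maps of the same size; these layers are said to belong to the same network stage. For the feature pyramid, one pyramid level is defined per stage. The output of the last layer of each stage is chosen as the reference set of feature maps, which is then enriched to create the pyramid. This choice is natural because the deepest layer of each stage should have the strongest features.

Specifically, for ResNets, the feature activations output by the last residual block of each stage are used. For conv2, conv3, conv4, and conv5, these outputs are denoted $\lbrace C_2, C_3, C_4, C_5 \rbrace$, and their strides with respect to the input image are $\lbrace 4, 8, 16, 32 \rbrace$. conv1 is not included in the pyramid because of its large memory footprint.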
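To make the strides concrete, the following minimal sketch computes the spatial size of each $C_k$ for a given input size. The helper name `feature_map_sizes` and the round-up behavior are assumptions for illustration; the exact size depends on the padding used by the backbone.

```python
import math

# Strides of C2..C5 with respect to the input image, as stated above.
STRIDES = {"C2": 4, "C3": 8, "C4": 16, "C5": 32}


def feature_map_sizes(height, width):
    """Return {level: (h, w)}, assuming sizes are rounded up at each stride."""
    return {
        name: (math.ceil(height / s), math.ceil(width / s))
        for name, s in STRIDES.items()
    }


sizes = feature_map_sizes(800, 1216)
for name, hw in sizes.items():
    print(name, hw)
```

Each level halves the resolution of the previous one, which is what lets the top-down pathway align levels with a plain 2x upsampling.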

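[2] - Top-down pathway and lateral connections

The top-down pathway produces higher-resolution features by upsampling the spatially coarser but semantically stronger feature maps from higher pyramid levels. These features are then enhanced through lateral connections. Each lateral connection merges feature maps of the same spatial size from the bottom-up pathway and the top-down pathway. The bottom-up feature map has lower-level semantics, but because it has been subsampled fewer times, its activations are more accurately localized.

Fig. 3 shows the building block that constructs the top-down feature maps. A coarser-resolution feature map is first upsampled by a factor of 2 in spatial resolution (nearest-neighbor upsampling, for simplicity). The upsampled map is then merged by element-wise addition with the corresponding bottom-up map, which first passes through a 1x1 convolution to reduce its channel dimension.

This process is iterated until the finest-resolution map is generated. To start the iteration, a 1x1 convolution is attached to $C_5$ to produce the coarsest-resolution map. Finally, a 3x3 convolution is applied to each merged map to generate the final feature map, which reduces the aliasing effect of upsampling. The final set of feature maps is $\lbrace P_2, P_3, P_4, P_5 \rbrace$, corresponding to $\lbrace C_2, C_3, C_4, C_5 \rbrace$, which have the same respective spatial sizes.

As in a featurized image pyramid, all levels of the pyramid share the classifier (and regressor), so the authors fix the feature dimension (the number of channels, $d$) of all feature maps, with $d=256$. All the extra convolutional layers (such as the one producing $P_2$) therefore have 256-channel outputs. These extra layers contain no non-linearities.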
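The merge step can be sketched in plain NumPy. This is only an illustration under stated assumptions: the helper names are hypothetical, the lateral weights are random rather than learned, the channel counts follow the ResNet dimensions used in the Detectron code later in this post, and the final 3x3 convolutions are left out.

```python
import numpy as np


def conv1x1(x, w):
    """1x1 convolution of a (C_in, H, W) map with weights of shape (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", w, x)


def upsample_nearest_2x(x):
    """Nearest-neighbor 2x upsampling of a (C, H, W) map."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def build_top_down(c_maps, lateral_ws):
    """c_maps and lateral_ws are ordered coarsest first: C5, C4, C3, C2."""
    # The 1x1 conv on C5 seeds the iteration.
    merged = [conv1x1(c_maps[0], lateral_ws[0])]
    for c, w in zip(c_maps[1:], lateral_ws[1:]):
        # Upsample the coarser merged map and add the lateral 1x1 conv output.
        merged.append(upsample_nearest_2x(merged[-1]) + conv1x1(c, w))
    return merged  # the per-level 3x3 convs are omitted in this sketch


rng = np.random.default_rng(0)
d = 256
dims = [2048, 1024, 512, 256]   # channels of C5, C4, C3, C2
sides = [2, 4, 8, 16]           # toy spatial sizes, doubling at each level
c_maps = [rng.standard_normal((c, s, s)) for c, s in zip(dims, sides)]
lateral_ws = [rng.standard_normal((d, c)) * 0.01 for c in dims]

merged = build_top_down(c_maps, lateral_ws)
print([m.shape for m in merged])
```

Every merged map ends up with the same channel count $d$ and the spatial size of its bottom-up counterpart, which is why a single shared head can run on all levels.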

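2. RPN with FPN

RPN is a sliding-window, class-agnostic object detector.

In the original RPN design, a small subnetwork is evaluated on dense 3x3 sliding windows over a single-scale convolutional feature map (13x13x256) and performs object/non-object binary classification and bounding-box regression. It is implemented as a 3x3 convolutional layer followed by two sibling 1x1 convolutions, one for classification and one for regression; this is referred to as the network head. The object/non-object criterion and the box regression targets are both defined with respect to a set of reference boxes called anchors. Anchors come in several predefined scales and aspect ratios so that they cover objects of different shapes.

RPN with FPN replaces the single-scale feature map with the FPN to handle multiple scales. The same network head (a 3x3 convolution plus two sibling 1x1 convolutions) is attached to every level of the feature pyramid. Because the head slides densely over all locations on all pyramid levels, multi-scale anchors are not needed on any single level. Instead, each level is assigned anchors of one scale: $\lbrace P_2, P_3, P_4, P_5, P_6 \rbrace$ have anchor areas of $\lbrace 32^2, 64^2, 128^2, 256^2, 512^2 \rbrace$ respectively, where $P_6$ is a stride-2 subsampling of $P_5$. Since objects are not all square, three aspect ratios $\lbrace 1:2, 1:1, 2:1 \rbrace$ are still used at each level. The pyramid therefore has 3x5=15 kinds of anchors in total.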
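The anchor layout above can be enumerated with a short sketch. The helper `anchor_shapes` is hypothetical and treats the ratio as height over width without rounding; Detectron's own `generate_anchors` may round and order the boxes differently.

```python
import math


def anchor_shapes(area, ratios=(0.5, 1.0, 2.0)):
    """Return (w, h) pairs with w * h == area and h / w == ratio."""
    shapes = []
    for r in ratios:
        w = math.sqrt(area / r)
        h = w * r
        shapes.append((w, h))
    return shapes


# One anchor scale per pyramid level, as listed above.
LEVEL_SCALES = {"P2": 32, "P3": 64, "P4": 128, "P5": 256, "P6": 512}

anchors = {lvl: anchor_shapes(s * s) for lvl, s in LEVEL_SCALES.items()}
total = sum(len(v) for v in anchors.values())
print(total)
```

Each level contributes three shapes of equal area, so the count is 5 levels times 3 ratios.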

From: http://www.cnblogs.com/hellcat/p/9811301.html

3. Faster R-CNN with FPN

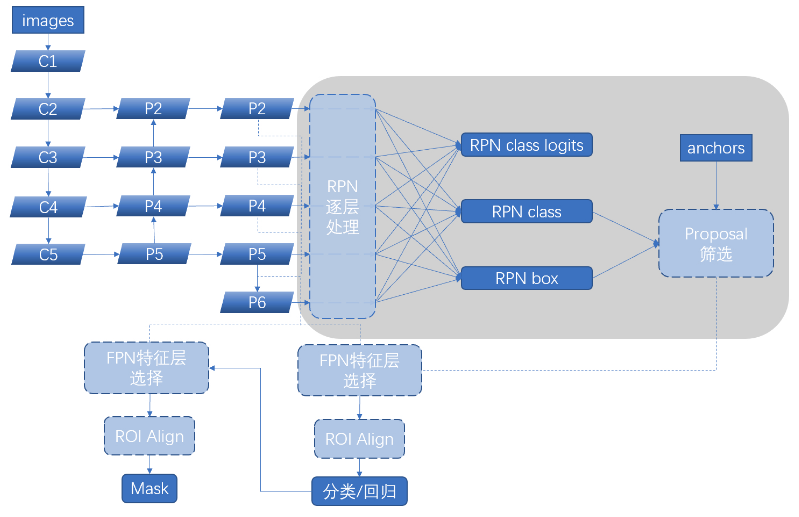

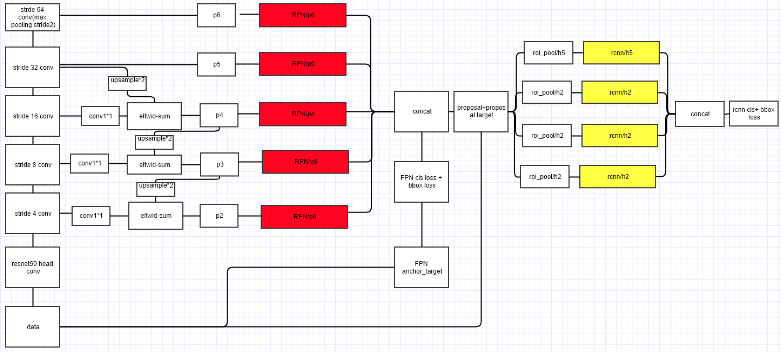

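Figure. Overall FPN architecture. Layers 1, 2, 3 form the bottom-up pathway, e.g. a ResNet; layers 4, 5, 6 form the top-down pathway, which is the core of FPN.

[1] - Given an input image, preprocess it;

[2] - Feed the preprocessed image to the backbone network (e.g. a ResNet) to build the bottom-up pathway;

[3] - Build the corresponding top-down pathway as in the figure above: upsample layer 4 (marked in cyan in the figure) by 2x, reduce the channel dimension of layer 2's output with a 1x1 convolution, add the two element-wise, and finally apply a 3x3 convolution.

[4] - Run RPN on each of layers 4, 5, 6 in the figure: the 3x3 convolution output from [3] is split into two branches, each followed by a 1x1 convolution, for classification and regression respectively.

[5] - Feed the candidate RoIs from [4] to layers 4, 5, 6 and apply RoI pooling (to a fixed 7x7 feature).

[6] - After [5], attach two 1024-channel fully connected layers, followed by two branches: the classification layer and the regression layer.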

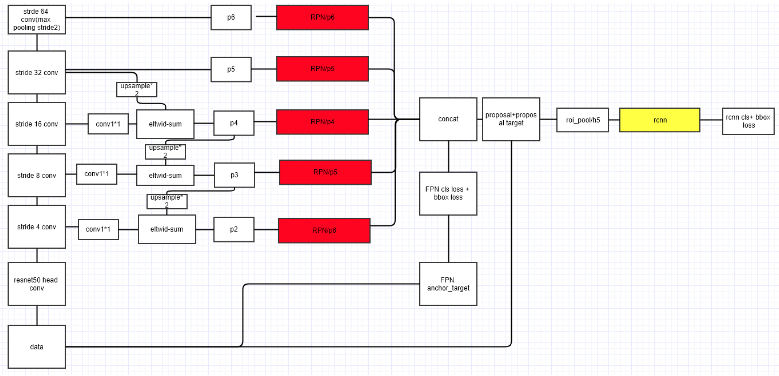

2.1. merged rcnn structure

From: https://github.com/unsky/FPN

2.2. shared rcnn structure

From: https://github.com/unsky/FPN

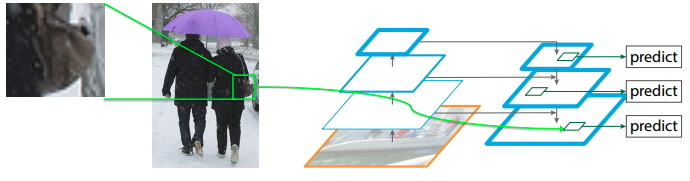

2.3. Why FPN handles small objects well

From https://blog.csdn.net/WZZ18191171661/article/details/79494534

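As the figure above shows, FPN handles small objects well mainly because:

- FPN can use the contextual information (high-level semantics) carried down by the top-down pathway;

- For small objects, FPN increases the resolution of the feature map: operating on a larger feature map yields more useful information about small objects, as shown in the figure.

4. FPN implementation in Detectron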

```python
"""
Functions for using a Feature Pyramid Network (FPN).
"""

from __future__ import absolute_import
from __future__ import division
from __future__ import print_function
from __future__ import unicode_literals

import collections
import numpy as np

from detectron.core.config import cfg
from detectron.modeling.generate_anchors import generate_anchors
from detectron.utils.c2 import const_fill
from detectron.utils.c2 import gauss_fill
from detectron.utils.net import get_group_gn
import detectron.modeling.ResNet as ResNet
import detectron.utils.blob as blob_utils
import detectron.utils.boxes as box_utils

# Lowest and highest pyramid levels in the backbone network.
# For FPN, all networks are assumed to have 5 spatial reductions, each by 2x.
# Level 1 would correspond to the input image, so it makes no sense to use it.
LOWEST_BACKBONE_LVL = 2   # E.g., "conv2"-like level
HIGHEST_BACKBONE_LVL = 5  # E.g., "conv5"-like level
```

```python
# ------------------------------------------------------------------- #
# FPN with ResNet
# ------------------------------------------------------------------- #

def add_fpn_ResNet50_conv5_body(model):
    return add_fpn_onto_conv_body(
        model,
        ResNet.add_ResNet50_conv5_body,
        fpn_level_info_ResNet50_conv5
    )


def add_fpn_ResNet50_conv5_P2only_body(model):
    return add_fpn_onto_conv_body(
        model,
        ResNet.add_ResNet50_conv5_body,
        fpn_level_info_ResNet50_conv5,
        P2only=True
    )


def add_fpn_ResNet101_conv5_body(model):
    return add_fpn_onto_conv_body(
        model,
        ResNet.add_ResNet101_conv5_body,
        fpn_level_info_ResNet101_conv5
    )


def add_fpn_ResNet101_conv5_P2only_body(model):
    return add_fpn_onto_conv_body(
        model,
        ResNet.add_ResNet101_conv5_body,
        fpn_level_info_ResNet101_conv5,
        P2only=True
    )


def add_fpn_ResNet152_conv5_body(model):
    return add_fpn_onto_conv_body(
        model,
        ResNet.add_ResNet152_conv5_body,
        fpn_level_info_ResNet152_conv5
    )


def add_fpn_ResNet152_conv5_P2only_body(model):
    return add_fpn_onto_conv_body(
        model,
        ResNet.add_ResNet152_conv5_body,
        fpn_level_info_ResNet152_conv5,
        P2only=True
    )
```

```python
# ------------------------------------------------------------------- #
# Functions for bolting FPN onto a backbone architecture
# ------------------------------------------------------------------- #

def add_fpn_onto_conv_body(
    model, conv_body_func, fpn_level_info_func, P2only=False
):
    """
    Add the specified conv body to the model,
    then add FPN levels to the model.
    """
    # Note: blobs_conv is in reversed order: [fpn5, fpn4, fpn3, fpn2]
    # similarly for dims_conv: [2048, 1024, 512, 256]
    # similarly for spatial_scales_fpn: [1/32, 1/16, 1/8, 1/4]

    conv_body_func(model)  # backbone network
    blobs_fpn, dim_fpn, spatial_scales_fpn = add_fpn(
        model, fpn_level_info_func()
    )  # FPN levels

    if P2only:
        # use only the finest FPN level
        return blobs_fpn[-1], dim_fpn, spatial_scales_fpn[-1]
    else:
        # use all FPN levels
        return blobs_fpn, dim_fpn, spatial_scales_fpn
```

```python
def add_fpn(model, fpn_level_info):
    """
    Add FPN connections based on the model described in the FPN paper.
    """
    # FPN levels are built starting from the highest/coarsest level of the
    # backbone (usually "conv5"). First we build down, recursively constructing
    # lower/finer resolution FPN levels. Then we build up, constructing levels
    # that are even higher/coarser than the starting level.
    fpn_dim = cfg.FPN.DIM
    min_level, max_level = get_min_max_levels()  # lowest and highest FPN levels
    # Count the number of backbone stages that we will generate FPN levels for,
    # starting from the coarsest backbone stage (usually the "conv5"-like level).
    # E.g., if the backbone level info defines 4 stages: conv5, conv4, conv3,
    # conv2, and min_level=2, then we end up with 4 - (2 - 2) = 4 backbone
    # stages to add FPN to.
    num_backbone_stages = (
        len(fpn_level_info.blobs) - (min_level - LOWEST_BACKBONE_LVL)
    )

    lateral_input_blobs = fpn_level_info.blobs[:num_backbone_stages]
    output_blobs = [
        'fpn_inner_{}'.format(s)
        for s in fpn_level_info.blobs[:num_backbone_stages]
    ]
    fpn_dim_lateral = fpn_level_info.dims  # lateral input dimensions
    xavier_fill = ('XavierFill', {})

    # For the coarsest backbone level: 1x1 conv only seeds recursion
    if cfg.FPN.USE_GN:
        # use GroupNorm
        c = model.ConvGN(
            lateral_input_blobs[0],
            output_blobs[0],  # note: this is a prefix
            dim_in=fpn_dim_lateral[0],
            dim_out=fpn_dim,
            group_gn=get_group_gn(fpn_dim),
            kernel=1,
            pad=0,
            stride=1,
            weight_init=xavier_fill,
            bias_init=const_fill(0.0)
        )
        output_blobs[0] = c  # rename it
    else:
        model.Conv(
            lateral_input_blobs[0],
            output_blobs[0],
            dim_in=fpn_dim_lateral[0],
            dim_out=fpn_dim,
            kernel=1,
            pad=0,
            stride=1,
            weight_init=xavier_fill,
            bias_init=const_fill(0.0)
        )

    #
    # Step 1: recursively build down starting from the coarsest backbone level
    #

    # For other levels add top-down and lateral connections
    for i in range(num_backbone_stages - 1):
        add_topdown_lateral_module(
            model,
            output_blobs[i],             # top-down blob
            lateral_input_blobs[i + 1],  # lateral blob
            output_blobs[i + 1],         # next output blob
            fpn_dim,                     # output dimension
            fpn_dim_lateral[i + 1]       # lateral input dimension
        )

    # Post-hoc scale-specific 3x3 convs
    blobs_fpn = []
    spatial_scales = []
    for i in range(num_backbone_stages):
        if cfg.FPN.USE_GN:
            # use GroupNorm
            fpn_blob = model.ConvGN(
                output_blobs[i],
                'fpn_{}'.format(fpn_level_info.blobs[i]),
                dim_in=fpn_dim,
                dim_out=fpn_dim,
                group_gn=get_group_gn(fpn_dim),
                kernel=3,
                pad=1,
                stride=1,
                weight_init=xavier_fill,
                bias_init=const_fill(0.0)
            )
        else:
            fpn_blob = model.Conv(
                output_blobs[i],
                'fpn_{}'.format(fpn_level_info.blobs[i]),
                dim_in=fpn_dim,
                dim_out=fpn_dim,
                kernel=3,
                pad=1,
                stride=1,
                weight_init=xavier_fill,
                bias_init=const_fill(0.0)
            )
        blobs_fpn += [fpn_blob]
        spatial_scales += [fpn_level_info.spatial_scales[i]]

    #
    # Step 2: build up starting from the coarsest backbone level
    #

    # Check if we need the P6 feature map
    if not cfg.FPN.EXTRA_CONV_LEVELS and max_level == HIGHEST_BACKBONE_LVL + 1:
        # Original FPN P6 level implementation from our CVPR'17 FPN paper
        P6_blob_in = blobs_fpn[0]
        P6_name = P6_blob_in + '_subsampled_2x'
        # Use max pooling to simulate stride 2 subsampling
        P6_blob = model.MaxPool(P6_blob_in, P6_name, kernel=1, pad=0, stride=2)
        blobs_fpn.insert(0, P6_blob)
        spatial_scales.insert(0, spatial_scales[0] * 0.5)

    # Coarser FPN levels introduced for RetinaNet
    if cfg.FPN.EXTRA_CONV_LEVELS and max_level > HIGHEST_BACKBONE_LVL:
        fpn_blob = fpn_level_info.blobs[0]
        dim_in = fpn_level_info.dims[0]
        for i in range(HIGHEST_BACKBONE_LVL + 1, max_level + 1):
            fpn_blob_in = fpn_blob
            if i > HIGHEST_BACKBONE_LVL + 1:
                fpn_blob_in = model.Relu(fpn_blob, fpn_blob + '_relu')
            fpn_blob = model.Conv(
                fpn_blob_in,
                'fpn_' + str(i),
                dim_in=dim_in,
                dim_out=fpn_dim,
                kernel=3,
                pad=1,
                stride=2,
                weight_init=xavier_fill,
                bias_init=const_fill(0.0)
            )
            dim_in = fpn_dim
            blobs_fpn.insert(0, fpn_blob)
            spatial_scales.insert(0, spatial_scales[0] * 0.5)

    return blobs_fpn, fpn_dim, spatial_scales
```
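The P6 branch in `add_fpn` uses a max pool with `kernel=1` and `stride=2`, which simply keeps every second row and column. A minimal NumPy sketch of that subsampling (the helper name `subsample_2x` is hypothetical and is not part of Detectron):

```python
import numpy as np


def subsample_2x(x):
    """Keep every second row and column of a (C, H, W) feature map."""
    return x[:, ::2, ::2]


p5 = np.arange(2 * 4 * 6).reshape(2, 4, 6)  # toy stand-in for the P5 map
p6 = subsample_2x(p5)
print(p6.shape)
```

Since a 1x1 pooling window covers a single value, no maximum is actually taken; the op only halves the resolution, which matches the `spatial_scales[0] * 0.5` update in the listing.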

```python
def add_topdown_lateral_module(
    model, fpn_top, fpn_lateral, fpn_bottom, dim_top, dim_lateral
):
    """
    Add a top-down lateral module.
    """
    # Lateral 1x1 conv
    if cfg.FPN.USE_GN:
        # use GroupNorm
        lat = model.ConvGN(
            fpn_lateral,
            fpn_bottom + '_lateral',
            dim_in=dim_lateral,
            dim_out=dim_top,
            group_gn=get_group_gn(dim_top),
            kernel=1,
            pad=0,
            stride=1,
            weight_init=(
                const_fill(0.0) if cfg.FPN.ZERO_INIT_LATERAL
                else ('XavierFill', {})),
            bias_init=const_fill(0.0)
        )
    else:
        lat = model.Conv(
            fpn_lateral,
            fpn_bottom + '_lateral',
            dim_in=dim_lateral,
            dim_out=dim_top,
            kernel=1,
            pad=0,
            stride=1,
            weight_init=(
                const_fill(0.0)
                if cfg.FPN.ZERO_INIT_LATERAL else ('XavierFill', {})
            ),
            bias_init=const_fill(0.0)
        )
    # Top-down 2x upsampling
    td = model.net.UpsampleNearest(fpn_top, fpn_bottom + '_topdown', scale=2)
    # Sum lateral and top-down
    model.net.Sum([lat, td], fpn_bottom)
```

```python
def get_min_max_levels():
    """
    The min and max FPN levels required for supporting RPN and/or RoI
    transform operations on multiple FPN levels.
    """
    min_level = LOWEST_BACKBONE_LVL
    max_level = HIGHEST_BACKBONE_LVL
    if cfg.FPN.MULTILEVEL_RPN and not cfg.FPN.MULTILEVEL_ROIS:
        max_level = cfg.FPN.RPN_MAX_LEVEL
        min_level = cfg.FPN.RPN_MIN_LEVEL
    if not cfg.FPN.MULTILEVEL_RPN and cfg.FPN.MULTILEVEL_ROIS:
        max_level = cfg.FPN.ROI_MAX_LEVEL
        min_level = cfg.FPN.ROI_MIN_LEVEL
    if cfg.FPN.MULTILEVEL_RPN and cfg.FPN.MULTILEVEL_ROIS:
        max_level = max(cfg.FPN.RPN_MAX_LEVEL, cfg.FPN.ROI_MAX_LEVEL)
        min_level = min(cfg.FPN.RPN_MIN_LEVEL, cfg.FPN.ROI_MIN_LEVEL)
    return min_level, max_level
```

```python
# ------------------------------------------------------------------- #
# RPN with an FPN backbone
# ------------------------------------------------------------------- #

def add_fpn_rpn_outputs(model, blobs_in, dim_in, spatial_scales):
    """
    Add RPN on FPN specific outputs.
    """
    num_anchors = len(cfg.FPN.RPN_ASPECT_RATIOS)
    dim_out = dim_in

    k_max = cfg.FPN.RPN_MAX_LEVEL  # coarsest level of pyramid
    k_min = cfg.FPN.RPN_MIN_LEVEL  # finest level of pyramid
    assert len(blobs_in) == k_max - k_min + 1
    for lvl in range(k_min, k_max + 1):
        bl_in = blobs_in[k_max - lvl]  # blobs_in is in reversed order
        sc = spatial_scales[k_max - lvl]  # in reversed order
        slvl = str(lvl)

        if lvl == k_min:
            # Create conv ops with randomly initialized weights and
            # zeroed biases for the first FPN level; these will be shared by
            # all other FPN levels
            # RPN hidden representation
            conv_rpn_fpn = model.Conv(
                bl_in,
                'conv_rpn_fpn' + slvl,
                dim_in,
                dim_out,
                kernel=3,
                pad=1,
                stride=1,
                weight_init=gauss_fill(0.01),
                bias_init=const_fill(0.0)
            )
            model.Relu(conv_rpn_fpn, conv_rpn_fpn)
            # Proposal classification scores
            rpn_cls_logits_fpn = model.Conv(
                conv_rpn_fpn,
                'rpn_cls_logits_fpn' + slvl,
                dim_in,
                num_anchors,
                kernel=1,
                pad=0,
                stride=1,
                weight_init=gauss_fill(0.01),
                bias_init=const_fill(0.0)
            )
            # Proposal bbox regression deltas
            rpn_bbox_pred_fpn = model.Conv(
                conv_rpn_fpn,
                'rpn_bbox_pred_fpn' + slvl,
                dim_in,
                4 * num_anchors,
                kernel=1,
                pad=0,
                stride=1,
                weight_init=gauss_fill(0.01),
                bias_init=const_fill(0.0)
            )
        else:
            # Share weights and biases
            sk_min = str(k_min)
            # RPN hidden representation
            conv_rpn_fpn = model.ConvShared(
                bl_in,
                'conv_rpn_fpn' + slvl,
                dim_in,
                dim_out,
                kernel=3,
                pad=1,
                stride=1,
                weight='conv_rpn_fpn' + sk_min + '_w',
                bias='conv_rpn_fpn' + sk_min + '_b'
            )
            model.Relu(conv_rpn_fpn, conv_rpn_fpn)
            # Proposal classification scores
            rpn_cls_logits_fpn = model.ConvShared(
                conv_rpn_fpn,
                'rpn_cls_logits_fpn' + slvl,
                dim_in,
                num_anchors,
                kernel=1,
                pad=0,
                stride=1,
                weight='rpn_cls_logits_fpn' + sk_min + '_w',
                bias='rpn_cls_logits_fpn' + sk_min + '_b'
            )
            # Proposal bbox regression deltas
            rpn_bbox_pred_fpn = model.ConvShared(
                conv_rpn_fpn,
                'rpn_bbox_pred_fpn' + slvl,
                dim_in,
                4 * num_anchors,
                kernel=1,
                pad=0,
                stride=1,
                weight='rpn_bbox_pred_fpn' + sk_min + '_w',
                bias='rpn_bbox_pred_fpn' + sk_min + '_b'
            )

        if not model.train or cfg.MODEL.FASTER_RCNN:
            # Proposals are needed during:
            #  1) inference (== not model.train) for RPN only and Faster R-CNN
            #  OR
            #  2) training for Faster R-CNN
            # Otherwise (== training for RPN only), proposals are not needed
            lvl_anchors = generate_anchors(
                stride=2.**lvl,
                sizes=(cfg.FPN.RPN_ANCHOR_START_SIZE * 2.**(lvl - k_min), ),
                aspect_ratios=cfg.FPN.RPN_ASPECT_RATIOS
            )
            rpn_cls_probs_fpn = model.net.Sigmoid(
                rpn_cls_logits_fpn, 'rpn_cls_probs_fpn' + slvl
            )
            model.GenerateProposals(
                [rpn_cls_probs_fpn, rpn_bbox_pred_fpn, 'im_info'],
                ['rpn_rois_fpn' + slvl, 'rpn_roi_probs_fpn' + slvl],
                anchors=lvl_anchors,
                spatial_scale=sc
            )
```

```python
def add_fpn_rpn_losses(model):
    """
    Add RPN on FPN specific losses.
    """
    loss_gradients = {}
    for lvl in range(cfg.FPN.RPN_MIN_LEVEL, cfg.FPN.RPN_MAX_LEVEL + 1):
        slvl = str(lvl)
        # Spatially narrow the full-sized RPN label arrays to match the
        # feature map shape
        model.net.SpatialNarrowAs(
            ['rpn_labels_int32_wide_fpn' + slvl, 'rpn_cls_logits_fpn' + slvl],
            'rpn_labels_int32_fpn' + slvl
        )
        for key in ('targets', 'inside_weights', 'outside_weights'):
            model.net.SpatialNarrowAs(
                [
                    'rpn_bbox_' + key + '_wide_fpn' + slvl,
                    'rpn_bbox_pred_fpn' + slvl
                ],
                'rpn_bbox_' + key + '_fpn' + slvl
            )
        loss_rpn_cls_fpn = model.net.SigmoidCrossEntropyLoss(
            ['rpn_cls_logits_fpn' + slvl, 'rpn_labels_int32_fpn' + slvl],
            'loss_rpn_cls_fpn' + slvl,
            normalize=0,
            scale=(
                model.GetLossScale() / cfg.TRAIN.RPN_BATCH_SIZE_PER_IM /
                cfg.TRAIN.IMS_PER_BATCH
            )
        )
        # Normalization by (1) RPN_BATCH_SIZE_PER_IM and (2) IMS_PER_BATCH is
        # handled by (1) setting bbox outside weights and (2) SmoothL1Loss
        # normalizes by IMS_PER_BATCH
        loss_rpn_bbox_fpn = model.net.SmoothL1Loss(
            [
                'rpn_bbox_pred_fpn' + slvl,
                'rpn_bbox_targets_fpn' + slvl,
                'rpn_bbox_inside_weights_fpn' + slvl,
                'rpn_bbox_outside_weights_fpn' + slvl
            ],
            'loss_rpn_bbox_fpn' + slvl,
            beta=1. / 9.,
            scale=model.GetLossScale(),
        )
        loss_gradients.update(
            blob_utils.get_loss_gradients(
                model, [loss_rpn_cls_fpn, loss_rpn_bbox_fpn]
            )
        )
        model.AddLosses(['loss_rpn_cls_fpn' + slvl,
                         'loss_rpn_bbox_fpn' + slvl])
    return loss_gradients
```

```python
# ------------------------------------------------------------------- #
# Helper functions for working with multilevel FPN RoIs
# ------------------------------------------------------------------- #

def map_rois_to_fpn_levels(rois, k_min, k_max):
    """
    Determine which FPN level each RoI in a set of RoIs should map to based
    on the heuristic in the FPN paper.
    """
    # Compute level ids
    s = np.sqrt(box_utils.boxes_area(rois))
    s0 = cfg.FPN.ROI_CANONICAL_SCALE  # default: 224
    lvl0 = cfg.FPN.ROI_CANONICAL_LEVEL  # default: 4

    # Eqn.(1) in FPN paper
    target_lvls = np.floor(lvl0 + np.log2(s / s0 + 1e-6))
    target_lvls = np.clip(target_lvls, k_min, k_max)
    return target_lvls
```
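To see what this heuristic does to concrete boxes, here is a standalone sketch of the same formula without the Detectron config. The function name `roi_levels` is hypothetical, the defaults mirror the comments in the listing (canonical scale 224 at level 4), and the box area is taken as plain width times height, which may differ slightly from `box_utils.boxes_area`.

```python
import numpy as np


def roi_levels(boxes, k_min=2, k_max=5, s0=224.0, lvl0=4):
    """boxes: (N, 4) array of (x1, y1, x2, y2). Returns one FPN level per box."""
    w = boxes[:, 2] - boxes[:, 0]
    h = boxes[:, 3] - boxes[:, 1]
    s = np.sqrt(w * h)  # assumption: area = w * h, no +1 pixel convention
    lvls = np.floor(lvl0 + np.log2(s / s0 + 1e-6))
    return np.clip(lvls, k_min, k_max).astype(int)


boxes = np.array([
    [0, 0, 32, 32],
    [0, 0, 112, 112],
    [0, 0, 224, 224],
    [0, 0, 448, 448],
    [0, 0, 1000, 1000],
], dtype=np.float64)

print(roi_levels(boxes))  # → [2 3 4 5 5]
```

Halving the box side moves it one level finer, and the clip keeps very small or very large boxes on the finest or coarsest available level.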

```python
def add_multilevel_roi_blobs(
    blobs, blob_prefix, rois, target_lvls, lvl_min, lvl_max
):
    """
    Add RoI blobs for multiple FPN levels to the blobs dict.

    blobs: a dict mapping from blob name to numpy ndarray
    blob_prefix: name prefix to use for the FPN blobs
    rois: the source rois as a 2D numpy array of shape (N, 5) where each row is
      an roi and the columns encode (batch_idx, x1, y1, x2, y2)
    target_lvls: numpy array of shape (N, ) indicating which FPN level each roi
      in rois should be assigned to
    lvl_min: the finest (highest resolution) FPN level (e.g., 2)
    lvl_max: the coarsest (lowest resolution) FPN level (e.g., 6)
    """
    rois_idx_order = np.empty((0, ))
    rois_stacked = np.zeros((0, 5), dtype=np.float32)  # for assert
    for lvl in range(lvl_min, lvl_max + 1):
        idx_lvl = np.where(target_lvls == lvl)[0]
        blobs[blob_prefix + '_fpn' + str(lvl)] = rois[idx_lvl, :]
        rois_idx_order = np.concatenate((rois_idx_order, idx_lvl))
        rois_stacked = np.vstack(
            [rois_stacked, blobs[blob_prefix + '_fpn' + str(lvl)]]
        )
    rois_idx_restore = np.argsort(rois_idx_order).astype(np.int32, copy=False)
    blobs[blob_prefix + '_idx_restore_int32'] = rois_idx_restore
    # Sanity check that restore order is correct
    assert (rois_stacked[rois_idx_restore] == rois).all()
```
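The `argsort` at the end of `add_multilevel_roi_blobs` is what lets per-level results be put back in the original RoI order. A toy sketch of that trick, with made-up RoIs and level assignments that are not taken from any real run:

```python
import numpy as np

# Four toy RoIs (batch_idx, x1, y1, x2, y2) and the level each is assigned to.
rois = np.array([[0, 0, 0, 300, 300],
                 [0, 0, 0, 20, 20],
                 [0, 0, 0, 500, 500],
                 [0, 0, 0, 30, 30]], dtype=np.float32)
target_lvls = np.array([4, 2, 5, 2])

# Group RoI indices level by level (levels 2..5), as the loop above does.
order = np.concatenate(
    [np.where(target_lvls == lvl)[0] for lvl in range(2, 6)]
)
stacked = rois[order]        # RoIs as they would be processed, level by level
restore = np.argsort(order)  # permutation that undoes the grouping

print(order, restore)
```

Indexing the stacked array with `restore` returns the rows in their original order, which is exactly the property the final sanity check in the listing asserts.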

```python
# ------------------------------------------------------------------ #
# FPN level info for stages 5, 4, 3, 2 for select models (more can be added)
# ------------------------------------------------------------------ #

FpnLevelInfo = collections.namedtuple(
    'FpnLevelInfo',
    ['blobs', 'dims', 'spatial_scales']
)


def fpn_level_info_ResNet50_conv5():
    return FpnLevelInfo(
        blobs=('res5_2_sum', 'res4_5_sum', 'res3_3_sum', 'res2_2_sum'),
        dims=(2048, 1024, 512, 256),
        spatial_scales=(1. / 32., 1. / 16., 1. / 8., 1. / 4.)
    )


def fpn_level_info_ResNet101_conv5():
    return FpnLevelInfo(
        blobs=('res5_2_sum', 'res4_22_sum', 'res3_3_sum', 'res2_2_sum'),
        dims=(2048, 1024, 512, 256),
        spatial_scales=(1. / 32., 1. / 16., 1. / 8., 1. / 4.)
    )


def fpn_level_info_ResNet152_conv5():
    return FpnLevelInfo(
        blobs=('res5_2_sum', 'res4_35_sum', 'res3_7_sum', 'res2_2_sum'),
        dims=(2048, 1024, 512, 256),
        spatial_scales=(1. / 32., 1. / 16., 1. / 8., 1. / 4.)
    )
```

5. Related references

[1] - [Computer Vision] FPN: Feature Pyramid Networks for Object Detection

[2] - [Computer Vision] Mask-RCNN inference network, part 1: overview

[3] - FPN explained

6 comments

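Do these three prediction branches all get joined together after RoI pooling and the fully connected layers?

Hello, I don't quite understand why the top-down structure of FPN outputs three predictions. According to the article, wouldn't summing everything into the last layer work better? Why output three separate predictions?

Summing into the last layer does fuse the feature maps from coarse to fine. But I think one reason for the three predictions is that working on feature maps of different resolutions helps detect objects of different scales.

Right, an image contains both large and small objects. One more question: after RPN, RoI pooling, and then the fully connected layers, are these three prediction branches combined into a single branch before the final classification and box regression?

That is the difference between the merged rcnn and shared rcnn structures, and the code includes the part that adds the FPN losses. The forward pass is roughly as you describe. Looking at an FPN implementation should make it easier to understand.

Thanks. I don't know much about these two structures yet; I'll look into them later.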