1. Metric definitions

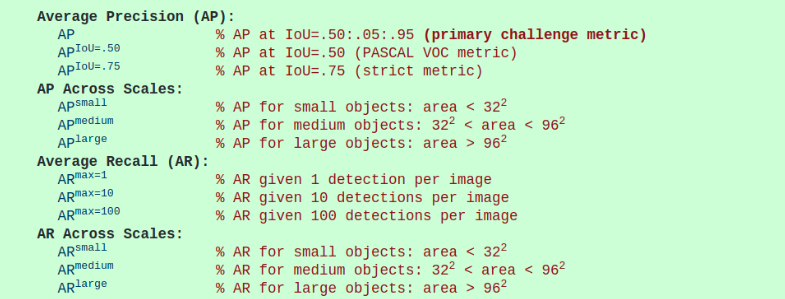

COCO provides 12 metrics for characterizing the performance of an object detector.

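[1] - Unless otherwise specified, $AP$ and $AR$ are averaged over multiple IoU (Intersection over Union) values. Specifically, 10 IoU thresholds are used: 0.50:0.05:0.95. This is a break from tradition, where AP is computed at a single IoU of 0.50 (which corresponds to the metric $AP^{IoU=0.50}$ here). Averaging over IoUs rewards detectors with better localization.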
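To make the thresholds concrete, here is a minimal pure-Python sketch of box IoU for boxes in COCO's `[x, y, width, height]` format, and of which of the 10 thresholds a given IoU passes. It is not the pycocotools code path (which computes IoU in `maskUtils.iou`); the names `box_iou`, `IOU_THRS` and `matched_at` are illustrative. As in `evaluateImg()`, a detection is assumed to count as a match at threshold $t$ when its IoU is at least $t$.

```python
def box_iou(a, b):
    """IoU of two boxes given as [x, y, width, height] (COCO bbox format)."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    # Width/height of the overlap rectangle, clipped to 0 when the boxes are disjoint
    iw = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    ih = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = iw * ih
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


# The 10 COCO thresholds 0.50:0.05:0.95, built from integer steps and rounded
IOU_THRS = [round(0.50 + 0.05 * i, 2) for i in range(10)]


def matched_at(iou):
    """For each threshold t, True if a detection with this IoU counts as a match."""
    return [iou >= t for t in IOU_THRS]


iou = box_iou([0, 0, 10, 10], [0, 0, 10, 8])  # overlap 80, union 100
print(iou, sum(matched_at(iou)))               # 0.8 7
```

A detection with IoU 0.8 is thus a true positive at 7 of the 10 thresholds and a miss at 0.85, 0.90 and 0.95, which is why averaging over thresholds favors tighter boxes.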

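[2] - $AP$ is averaged over all categories. Traditionally, this is called mean average precision (mAP). We make no distinction between $AP$ and $mAP$ (and likewise $AR$ and $mAR$) and assume the difference is clear from context; that is, $AP^{50} = mAP^{50}$, $AP^{75} = mAP^{75}$, and so on. Note that $AP^{50}$ is typically higher than $AP^{75}$, because the stricter IoU threshold turns some matches into false positives.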

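[3] - $AP$ (averaged over all 10 IoU thresholds and all 80 categories) determines the COCO challenge winner. It should be considered the single most important metric when judging a detector's performance on COCO.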
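Assuming a precision array laid out like the `precision` output of `accumulate()` (reduced here to nested lists indexed `[t][r][k]` for one fixed area range and `maxDets`), the headline AP is the mean of all entries that are not `-1`, which mirrors what `summarize()` does with `s[s>-1]`. Because every category with ground truth contributes the same number of recall samples, this equals averaging the per-category AP over IoUs and categories. `coco_ap` is a hypothetical helper name.

```python
def coco_ap(precision):
    """Mean of all valid entries of precision[t][r][k]; -1 marks a category with no GT."""
    vals = [p for per_t in precision for per_r in per_t for p in per_r if p > -1]
    return sum(vals) / len(vals) if vals else -1.0


# Toy array: T=2 IoU thresholds, R=2 recall thresholds, K=2 categories;
# category 1 has no ground truth in this subset, so its entries stay -1.
precision = [
    [[1.0, -1], [0.5, -1]],   # t = 0 (IoU 0.50)
    [[0.5, -1], [0.0, -1]],   # t = 1 (a stricter threshold)
]
print(coco_ap(precision))       # 0.5  -> analogue of AP
print(coco_ap([precision[0]]))  # 0.75 -> analogue of AP at IoU=0.50
```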

[4] - COCO contains more small objects than large ones. Specifically, about 41% of annotated objects are small (area < $32^2 = 1024$), about 34% are medium ($32^2 = 1024$ < area < $96^2 = 9216$), and about 24% are large (area > $96^2 = 9216$). Area is measured as the number of pixels in the segmentation mask.
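The following sketch reuses the area ranges from `Params.setDetParams()` and the membership test from `evaluateImg()` (an object is outside a range iff `area < lo or area > hi`). The helper names are illustrative. One consequence worth knowing: both bounds are inclusive, so an object whose area is exactly $32^2$ (or $96^2$) falls into two buckets.

```python
# Area ranges as in Params.setDetParams(); areas are in pixels
AREA_RNG = {
    "all": (0 ** 2, 1e5 ** 2),
    "small": (0 ** 2, 32 ** 2),
    "medium": (32 ** 2, 96 ** 2),
    "large": (96 ** 2, 1e5 ** 2),
}


def in_area_range(area, label):
    """Mirror of evaluateImg(): outside the range iff area < lo or area > hi."""
    lo, hi = AREA_RNG[label]
    return not (area < lo or area > hi)


def area_labels(area):
    return [lbl for lbl in ("small", "medium", "large") if in_area_range(area, lbl)]


print(area_labels(500))    # ['small']
print(area_labels(1024))   # ['small', 'medium']: both bounds are inclusive
print(area_labels(20000))  # ['large']
```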

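[5] - $AR$ is the maximum recall given a fixed number of detections per image, averaged over categories and IoUs. $AR$ is related to the metric of the same name used in proposal evaluation, but here it is computed per category.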
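A minimal sketch of recall under a per-image detection cap, for one category at one IoU threshold. It assumes the true-positive flags were already produced by greedy matching in descending score order (as `evaluateImg()` does); since higher-scored detections are matched first, dropping lower-ranked ones does not change the matches of those kept. AR would then average this value over the 10 IoU thresholds and over categories. `recall_at_max_dets` is a hypothetical name.

```python
def recall_at_max_dets(images, max_det):
    """images: list of (num_gt, dets) for one category at one IoU threshold, where
    dets is a list of (score, is_true_positive). Keeps the max_det top-scoring
    detections per image, then returns matched GT / total GT."""
    tp, num_gt = 0, 0
    for n_gt, dets in images:
        num_gt += n_gt
        top = sorted(dets, key=lambda d: d[0], reverse=True)[:max_det]
        tp += sum(1 for _, is_tp in top if is_tp)
    return tp / num_gt if num_gt else -1.0


images = [
    (2, [(0.9, True), (0.8, True), (0.3, False)]),
    (1, [(0.7, False), (0.6, True)]),
]
for m in (1, 10, 100):  # the default maxDets values
    print(m, recall_at_max_dets(images, m))
# 1 -> 0.333..., 10 -> 1.0, 100 -> 1.0
```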

[6] - All metrics are computed allowing for at most 100 top-scoring detections per image (across all categories).

[7] - The metrics for bounding-box detection and for segmentation masks are identical in every respect except the IoU computation: for bounding boxes IoU is computed over boxes, and for segmentation over masks.
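To see why the two IoU variants can differ sharply, here is a sketch on dense binary masks stored as lists of 0/1 rows. pycocotools itself works on RLE-encoded masks via `maskUtils.iou` and treats crowd regions specially; both are omitted here, and the helper names are illustrative. Two L-shaped masks overlap in only 2 pixels, yet their tight bounding boxes coincide.

```python
def mask_iou(m1, m2):
    """IoU of two same-sized binary masks given as lists of rows of 0/1."""
    pairs = [(a, b) for r1, r2 in zip(m1, m2) for a, b in zip(r1, r2)]
    inter = sum(1 for a, b in pairs if a and b)
    union = sum(1 for a, b in pairs if a or b)
    return inter / union if union else 0.0


def box_as_mask(m):
    """Fill the tight bounding box of a binary mask (what a bbox 'sees')."""
    ys = [y for y, row in enumerate(m) if any(row)]
    xs = [x for x in range(len(m[0])) if any(row[x] for row in m)]
    return [[1 if ys[0] <= y <= ys[-1] and xs[0] <= x <= xs[-1] else 0
             for x in range(len(m[0]))] for y in range(len(m))]


m1 = [[1, 0, 0],
      [1, 0, 0],
      [1, 1, 1]]
m2 = [[1, 1, 1],
      [0, 0, 1],
      [0, 0, 1]]
print(mask_iou(m1, m2))                            # 0.25 (2 / 8 pixels)
print(mask_iou(box_as_mask(m1), box_as_mask(m2)))  # 1.0 (both boxes are 3x3)
```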

2. Metric implementation - cocoeval

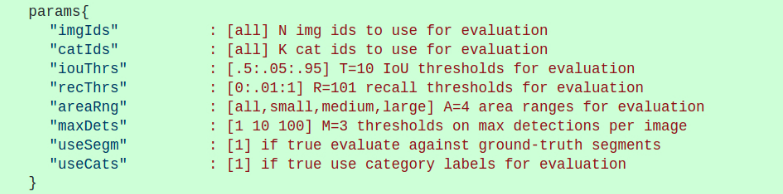

The evaluation parameters are shown below (defaults in brackets; they usually do not need to be changed):

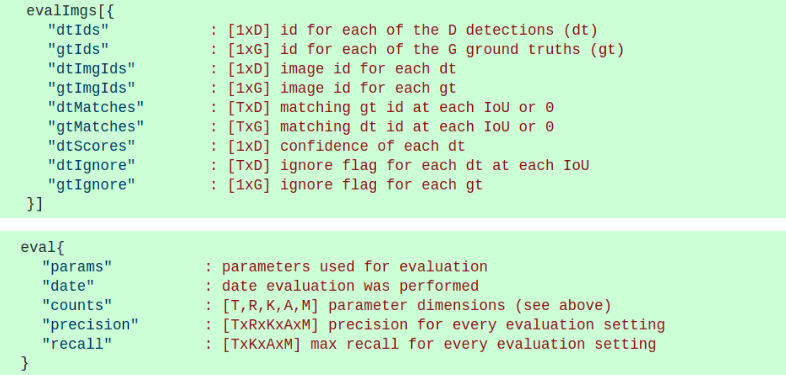

Evaluation is run by calling evaluate() followed by accumulate(), which compute two data structures that measure detection quality.

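These two data structures are evalImgs and eval, which capture per-image detection quality and detection quality aggregated over the whole dataset, respectively.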
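evalImgs is a flat Python list built by the list comprehension in `evaluate()`, with categories outermost, then area ranges, then images. `accumulate()` recovers one entry with the offset `Nk + Na + i`, where `Nk = k0*A0*I0` and `Na = a0*I0`. The sketch below reproduces that layout with placeholder tuples instead of result dicts; `eval_img_index` and the toy ids are illustrative.

```python
def eval_img_index(k, a, i, num_area_rngs, num_imgs):
    """Position of the (category k, area range a, image i) entry in evalImgs,
    matching accumulate()'s Nk + Na + i."""
    return k * num_area_rngs * num_imgs + a * num_imgs + i


cat_ids = [1, 18]
area_lbls = ["all", "small", "medium", "large"]
img_ids = [42, 73, 139]

# Same loop order as evaluate(): categories, then area ranges, then images
eval_imgs = [(c, a, img) for c in cat_ids for a in area_lbls for img in img_ids]

print(len(eval_imgs))  # 24 = K * A * I
print(eval_imgs[eval_img_index(1, 2, 0, len(area_lbls), len(img_ids))])  # (18, 'medium', 42)
```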

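evalImgs is a flat list of K×A×I entries (categories × area ranges × images), each describing one evaluation setting on one image (or None when the image has neither ground truth nor detections for that category); eval combines this information into precision and recall arrays. The details are as follows: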
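The core of `accumulate()` turns the pooled, score-sorted detections of one setting into 101 precision samples. A pure-Python sketch of those steps, assuming ignored detections have already been dropped: cumulative TP/FP counts give recall and precision, a right-to-left running maximum makes precision non-increasing, and `bisect_left` plays the role of `np.searchsorted(..., side='left')`. Recall levels that are never reached keep precision 0, as in the original's `try`/`except` loop. `interpolated_precision` is a hypothetical name.

```python
import bisect


def interpolated_precision(tps, num_gt, num_rec_thrs=101):
    """tps: True/False per non-ignored detection, sorted by descending score.
    Returns precision sampled at recall thresholds 0, 0.01, ..., 1."""
    rec_thrs = [r / (num_rec_thrs - 1) for r in range(num_rec_thrs)]
    rc, pr = [], []
    tp = fp = 0
    for is_tp in tps:
        tp += is_tp
        fp += not is_tp
        rc.append(tp / num_gt)
        pr.append(tp / (tp + fp))
    # Right-to-left running max: precision becomes non-increasing in recall
    for i in range(len(pr) - 1, 0, -1):
        if pr[i] > pr[i - 1]:
            pr[i - 1] = pr[i]
    # First detection whose recall reaches each threshold; unreachable -> 0
    q = []
    for r in rec_thrs:
        idx = bisect.bisect_left(rc, r)
        q.append(pr[idx] if idx < len(pr) else 0.0)
    return q


q = interpolated_precision([True, False, True], num_gt=2)
print(q[0], q[50], round(q[51], 4), round(q[100], 4))  # 1.0 1.0 0.6667 0.6667
print(sum(q) / len(q))  # AP for this single setting
```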

The Python implementation is:

```python
__author__ = 'tsungyi'

import numpy as np
import datetime
import time
from collections import defaultdict
from . import mask as maskUtils
import copy


class COCOeval:
    # Interface for evaluating detection on the COCO dataset.
    #
    # The usage for COCOeval is as follows:
    #  cocoGt=..., cocoDt=...       # load dataset and results
    #  E = COCOeval(cocoGt,cocoDt); # initialize COCOeval object
    #  E.params.recThrs = ...;      # set parameters as desired
    #  E.evaluate();                # run per image evaluation
    #  E.accumulate();              # accumulate per image results
    #  E.summarize();               # display summary metrics of results
    # For example usage see evalDemo.m and http://mscoco.org/.
    #
    # The evaluation parameters are as follows (defaults in brackets):
    #  imgIds     - [all] N img ids to use for evaluation
    #  catIds     - [all] K cat ids to use for evaluation
    #  iouThrs    - [.5:.05:.95] T=10 IoU thresholds for evaluation
    #  recThrs    - [0:.01:1] R=101 recall thresholds for evaluation
    #  areaRng    - [...] A=4 object area ranges for evaluation
    #  maxDets    - [1 10 100] M=3 thresholds on max detections per image
    #  iouType    - ['segm'] set iouType to 'segm', 'bbox' or 'keypoints'
    #  iouType replaced the now DEPRECATED useSegm parameter.
    #  useCats    - [1] if true use category labels for evaluation
    # Note: if useCats=0 category labels are ignored as in proposal scoring.
    # Note: multiple areaRngs [Ax2] and maxDets [Mx1] can be specified.
    #
    # evaluate(): evaluates detections on every image and every category and
    # concats the results into the "evalImgs" with fields:
    #  dtIds      - [1xD] id for each of the D detections (dt)
    #  gtIds      - [1xG] id for each of the G ground truths (gt)
    #  dtMatches  - [TxD] matching gt id at each IoU or 0
    #  gtMatches  - [TxG] matching dt id at each IoU or 0
    #  dtScores   - [1xD] confidence of each dt
    #  gtIgnore   - [1xG] ignore flag for each gt
    #  dtIgnore   - [TxD] ignore flag for each dt at each IoU
    #
    # accumulate(): accumulates the per-image, per-category evaluation
    # results in "evalImgs" into the dictionary "eval" with fields:
    #  params     - parameters used for evaluation
    #  date       - date evaluation was performed
    #  counts     - [T,R,K,A,M] parameter dimensions (see above)
    #  precision  - [TxRxKxAxM] precision for every evaluation setting
    #  recall     - [TxKxAxM] max recall for every evaluation setting
    # Note: precision and recall==-1 for settings with no gt objects.
    #
    # See also coco, mask, pycocoDemo, pycocoEvalDemo
    def __init__(self, cocoGt=None, cocoDt=None, iouType='segm'):
        '''
        Initialize COCOeval using coco APIs for gt and dt
        :param cocoGt: coco object with ground truth annotations
        :param cocoDt: coco object with detection results
        :return: None
        '''
        if not iouType:
            print('iouType not specified. use default iouType segm')
            iouType = 'segm'                  # actually apply the default
        self.cocoGt   = cocoGt                # ground truth COCO API
        self.cocoDt   = cocoDt                # detections COCO API
        self.evalImgs = defaultdict(list)     # per-image per-category evaluation results [KxAxI] elements
        self.eval     = {}                    # accumulated evaluation results
        self._gts = defaultdict(list)         # gt for evaluation
        self._dts = defaultdict(list)         # dt for evaluation
        self.params = Params(iouType=iouType) # evaluation parameters
        self._paramsEval = {}                 # parameters for evaluation
        self.stats = []                       # result summarization
        self.ious = {}                        # ious between all gts and dts
        if cocoGt is not None:
            self.params.imgIds = sorted(cocoGt.getImgIds())
            self.params.catIds = sorted(cocoGt.getCatIds())

    def _prepare(self):
        '''
        Prepare ._gts and ._dts for evaluation based on params
        :return: None
        '''
        def _toMask(anns, coco):
            # modify ann['segmentation'] by reference
            for ann in anns:
                rle = coco.annToRLE(ann)
                ann['segmentation'] = rle
        p = self.params
        if p.useCats:
            gts = self.cocoGt.loadAnns(self.cocoGt.getAnnIds(imgIds=p.imgIds, catIds=p.catIds))
            dts = self.cocoDt.loadAnns(self.cocoDt.getAnnIds(imgIds=p.imgIds, catIds=p.catIds))
        else:
            gts = self.cocoGt.loadAnns(self.cocoGt.getAnnIds(imgIds=p.imgIds))
            dts = self.cocoDt.loadAnns(self.cocoDt.getAnnIds(imgIds=p.imgIds))

        # convert ground truth to mask if iouType == 'segm'
        if p.iouType == 'segm':
            _toMask(gts, self.cocoGt)
            _toMask(dts, self.cocoDt)
        # set ignore flag
        for gt in gts:
            gt['ignore'] = gt['ignore'] if 'ignore' in gt else 0
            gt['ignore'] = 'iscrowd' in gt and gt['iscrowd']
            if p.iouType == 'keypoints':
                gt['ignore'] = (gt['num_keypoints'] == 0) or gt['ignore']
        self._gts = defaultdict(list)       # gt for evaluation
        self._dts = defaultdict(list)       # dt for evaluation
        for gt in gts:
            self._gts[gt['image_id'], gt['category_id']].append(gt)
        for dt in dts:
            self._dts[dt['image_id'], dt['category_id']].append(dt)
        self.evalImgs = defaultdict(list)   # per-image per-category evaluation results
        self.eval     = {}                  # accumulated evaluation results

    def evaluate(self):
        '''
        Run per image evaluation on given images and store results (a list of dict) in self.evalImgs
        :return: None
        '''
        tic = time.time()
        print('Running per image evaluation...')
        p = self.params
        # add backward compatibility if useSegm is specified in params
        if p.useSegm is not None:
            p.iouType = 'segm' if p.useSegm == 1 else 'bbox'
            print('useSegm (deprecated) is not None. Running {} evaluation'.format(p.iouType))
        print('Evaluate annotation type *{}*'.format(p.iouType))
        p.imgIds = list(np.unique(p.imgIds))
        if p.useCats:
            p.catIds = list(np.unique(p.catIds))
        p.maxDets = sorted(p.maxDets)
        self.params = p

        self._prepare()
        # loop through images, area range, max detection number
        catIds = p.catIds if p.useCats else [-1]

        if p.iouType == 'segm' or p.iouType == 'bbox':
            computeIoU = self.computeIoU
        elif p.iouType == 'keypoints':
            computeIoU = self.computeOks
        self.ious = {(imgId, catId): computeIoU(imgId, catId)
                     for imgId in p.imgIds
                     for catId in catIds}

        evaluateImg = self.evaluateImg
        maxDet = p.maxDets[-1]
        self.evalImgs = [evaluateImg(imgId, catId, areaRng, maxDet)
                         for catId in catIds
                         for areaRng in p.areaRng
                         for imgId in p.imgIds]
        self._paramsEval = copy.deepcopy(self.params)
        toc = time.time()
        print('DONE (t={:0.2f}s).'.format(toc-tic))

    def computeIoU(self, imgId, catId):
        p = self.params
        if p.useCats:
            gt = self._gts[imgId, catId]
            dt = self._dts[imgId, catId]
        else:
            gt = [_ for cId in p.catIds for _ in self._gts[imgId, cId]]
            dt = [_ for cId in p.catIds for _ in self._dts[imgId, cId]]
        if len(gt) == 0 and len(dt) == 0:
            return []
        inds = np.argsort([-d['score'] for d in dt], kind='mergesort')
        dt = [dt[i] for i in inds]
        if len(dt) > p.maxDets[-1]:
            dt = dt[0:p.maxDets[-1]]

        if p.iouType == 'segm':
            g = [g['segmentation'] for g in gt]
            d = [d['segmentation'] for d in dt]
        elif p.iouType == 'bbox':
            g = [g['bbox'] for g in gt]
            d = [d['bbox'] for d in dt]
        else:
            raise Exception('unknown iouType for iou computation')

        # compute iou between each dt and gt region
        iscrowd = [int(o['iscrowd']) for o in gt]
        ious = maskUtils.iou(d, g, iscrowd)
        return ious

    def computeOks(self, imgId, catId):
        p = self.params
        # dimension here should be Nxm
        gts = self._gts[imgId, catId]
        dts = self._dts[imgId, catId]
        inds = np.argsort([-d['score'] for d in dts], kind='mergesort')
        dts = [dts[i] for i in inds]
        if len(dts) > p.maxDets[-1]:
            dts = dts[0:p.maxDets[-1]]
        # if len(gts) == 0 and len(dts) == 0:
        if len(gts) == 0 or len(dts) == 0:
            return []
        ious = np.zeros((len(dts), len(gts)))
        sigmas = np.array([.26, .25, .25, .35, .35, .79, .79, .72, .72, .62, .62, 1.07, 1.07, .87, .87, .89, .89])/10.0
        vars = (sigmas * 2)**2
        k = len(sigmas)
        # compute oks between each detection and ground truth object
        for j, gt in enumerate(gts):
            # create bounds for ignore regions(double the gt bbox)
            g = np.array(gt['keypoints'])
            xg = g[0::3]; yg = g[1::3]; vg = g[2::3]
            k1 = np.count_nonzero(vg > 0)
            bb = gt['bbox']
            x0 = bb[0] - bb[2]; x1 = bb[0] + bb[2] * 2
            y0 = bb[1] - bb[3]; y1 = bb[1] + bb[3] * 2
            for i, dt in enumerate(dts):
                d = np.array(dt['keypoints'])
                xd = d[0::3]; yd = d[1::3]
                if k1 > 0:
                    # measure the per-keypoint distance if keypoints visible
                    dx = xd - xg
                    dy = yd - yg
                else:
                    # measure minimum distance to keypoints in (x0,y0) & (x1,y1)
                    z = np.zeros((k))
                    dx = np.max((z, x0-xd), axis=0) + np.max((z, xd-x1), axis=0)
                    dy = np.max((z, y0-yd), axis=0) + np.max((z, yd-y1), axis=0)
                e = (dx**2 + dy**2) / vars / (gt['area']+np.spacing(1)) / 2
                if k1 > 0:
                    e = e[vg > 0]
                ious[i, j] = np.sum(np.exp(-e)) / e.shape[0]
        return ious

    def evaluateImg(self, imgId, catId, aRng, maxDet):
        '''
        perform evaluation for single category and image
        :return: dict (single image results)
        '''
        p = self.params
        if p.useCats:
            gt = self._gts[imgId, catId]
            dt = self._dts[imgId, catId]
        else:
            gt = [_ for cId in p.catIds for _ in self._gts[imgId, cId]]
            dt = [_ for cId in p.catIds for _ in self._dts[imgId, cId]]
        if len(gt) == 0 and len(dt) == 0:
            return None

        for g in gt:
            if g['ignore'] or (g['area'] < aRng[0] or g['area'] > aRng[1]):
                g['_ignore'] = 1
            else:
                g['_ignore'] = 0

        # sort dt highest score first, sort gt ignore last
        gtind = np.argsort([g['_ignore'] for g in gt], kind='mergesort')
        gt = [gt[i] for i in gtind]
        dtind = np.argsort([-d['score'] for d in dt], kind='mergesort')
        dt = [dt[i] for i in dtind[0:maxDet]]
        iscrowd = [int(o['iscrowd']) for o in gt]
        # load computed ious
        ious = self.ious[imgId, catId][:, gtind] if len(self.ious[imgId, catId]) > 0 else self.ious[imgId, catId]

        T = len(p.iouThrs)
        G = len(gt)
        D = len(dt)
        gtm  = np.zeros((T, G))
        dtm  = np.zeros((T, D))
        gtIg = np.array([g['_ignore'] for g in gt])
        dtIg = np.zeros((T, D))
        if not len(ious) == 0:
            for tind, t in enumerate(p.iouThrs):
                for dind, d in enumerate(dt):
                    # information about best match so far (m=-1 -> unmatched)
                    iou = min([t, 1-1e-10])
                    m = -1
                    for gind, g in enumerate(gt):
                        # if this gt already matched, and not a crowd, continue
                        if gtm[tind, gind] > 0 and not iscrowd[gind]:
                            continue
                        # if dt matched to reg gt, and on ignore gt, stop
                        if m > -1 and gtIg[m] == 0 and gtIg[gind] == 1:
                            break
                        # continue to next gt unless better match made
                        if ious[dind, gind] < iou:
                            continue
                        # if match successful and best so far, store appropriately
                        iou = ious[dind, gind]
                        m = gind
                    # if match made store id of match for both dt and gt
                    if m == -1:
                        continue
                    dtIg[tind, dind] = gtIg[m]
                    dtm[tind, dind]  = gt[m]['id']
                    gtm[tind, m]     = d['id']
        # set unmatched detections outside of area range to ignore
        a = np.array([d['area'] < aRng[0] or d['area'] > aRng[1] for d in dt]).reshape((1, len(dt)))
        dtIg = np.logical_or(dtIg, np.logical_and(dtm == 0, np.repeat(a, T, 0)))
        # store results for given image and category
        return {
            'image_id':  imgId,
            'category_id': catId,
            'aRng':      aRng,
            'maxDet':    maxDet,
            'dtIds':     [d['id'] for d in dt],
            'gtIds':     [g['id'] for g in gt],
            'dtMatches': dtm,
            'gtMatches': gtm,
            'dtScores':  [d['score'] for d in dt],
            'gtIgnore':  gtIg,
            'dtIgnore':  dtIg,
        }

    def accumulate(self, p=None):
        '''
        Accumulate per image evaluation results and store the result in self.eval
        :param p: input params for evaluation
        :return: None
        '''
        print('Accumulating evaluation results...')
        tic = time.time()
        if not self.evalImgs:
            print('Please run evaluate() first')
        # allows input customized parameters
        if p is None:
            p = self.params
        p.catIds = p.catIds if p.useCats == 1 else [-1]
        T = len(p.iouThrs)
        R = len(p.recThrs)
        K = len(p.catIds) if p.useCats else 1
        A = len(p.areaRng)
        M = len(p.maxDets)
        precision = -np.ones((T, R, K, A, M))  # -1 for the precision of absent categories
        recall    = -np.ones((T, K, A, M))
        scores    = -np.ones((T, R, K, A, M))

        # create dictionary for future indexing
        _pe = self._paramsEval
        catIds = _pe.catIds if _pe.useCats else [-1]
        setK = set(catIds)
        setA = set(map(tuple, _pe.areaRng))
        setM = set(_pe.maxDets)
        setI = set(_pe.imgIds)
        # get inds to evaluate
        k_list = [n for n, k in enumerate(p.catIds) if k in setK]
        m_list = [m for n, m in enumerate(p.maxDets) if m in setM]
        a_list = [n for n, a in enumerate(map(lambda x: tuple(x), p.areaRng)) if a in setA]
        i_list = [n for n, i in enumerate(p.imgIds) if i in setI]
        I0 = len(_pe.imgIds)
        A0 = len(_pe.areaRng)
        # retrieve E at each category, area range, and max number of detections
        for k, k0 in enumerate(k_list):
            Nk = k0*A0*I0
            for a, a0 in enumerate(a_list):
                Na = a0*I0
                for m, maxDet in enumerate(m_list):
                    E = [self.evalImgs[Nk + Na + i] for i in i_list]
                    E = [e for e in E if e is not None]
                    if len(E) == 0:
                        continue
                    dtScores = np.concatenate([e['dtScores'][0:maxDet] for e in E])

                    # different sorting method generates slightly different results.
                    # mergesort is used to be consistent as Matlab implementation.
                    inds = np.argsort(-dtScores, kind='mergesort')
                    dtScoresSorted = dtScores[inds]

                    dtm  = np.concatenate([e['dtMatches'][:, 0:maxDet] for e in E], axis=1)[:, inds]
                    dtIg = np.concatenate([e['dtIgnore'][:, 0:maxDet] for e in E], axis=1)[:, inds]
                    gtIg = np.concatenate([e['gtIgnore'] for e in E])
                    npig = np.count_nonzero(gtIg == 0)
                    if npig == 0:
                        continue
                    tps = np.logical_and(dtm, np.logical_not(dtIg))
                    fps = np.logical_and(np.logical_not(dtm), np.logical_not(dtIg))

                    # np.float was removed in NumPy 1.24; np.float64 is the same type
                    tp_sum = np.cumsum(tps, axis=1).astype(dtype=np.float64)
                    fp_sum = np.cumsum(fps, axis=1).astype(dtype=np.float64)
                    for t, (tp, fp) in enumerate(zip(tp_sum, fp_sum)):
                        tp = np.array(tp)
                        fp = np.array(fp)
                        nd = len(tp)
                        rc = tp / npig
                        pr = tp / (fp+tp+np.spacing(1))
                        q  = np.zeros((R,))
                        ss = np.zeros((R,))

                        if nd:
                            recall[t, k, a, m] = rc[-1]
                        else:
                            recall[t, k, a, m] = 0

                        # numpy is slow without cython optimization for accessing elements
                        # use python array gets significant speed improvement
                        pr = pr.tolist(); q = q.tolist()

                        for i in range(nd-1, 0, -1):
                            if pr[i] > pr[i-1]:
                                pr[i-1] = pr[i]

                        inds = np.searchsorted(rc, p.recThrs, side='left')
                        try:
                            for ri, pi in enumerate(inds):
                                q[ri] = pr[pi]
                                ss[ri] = dtScoresSorted[pi]
                        except IndexError:
                            # recall thresholds beyond the reached recall keep precision 0
                            pass
                        precision[t, :, k, a, m] = np.array(q)
                        scores[t, :, k, a, m] = np.array(ss)
        self.eval = {
            'params': p,
            'counts': [T, R, K, A, M],
            'date': datetime.datetime.now().strftime('%Y-%m-%d %H:%M:%S'),
            'precision': precision,
            'recall': recall,
            'scores': scores,
        }
        toc = time.time()
        print('DONE (t={:0.2f}s).'.format(toc-tic))

    def summarize(self):
        '''
        Compute and display summary metrics for evaluation results.
        Note this function can *only* be applied on the default parameter setting
        '''
        def _summarize(ap=1, iouThr=None, areaRng='all', maxDets=100):
            p = self.params
            iStr = ' {:<18} {} @[ IoU={:<9} | area={:>6s} | maxDets={:>3d} ] = {:0.3f}'
            titleStr = 'Average Precision' if ap == 1 else 'Average Recall'
            typeStr = '(AP)' if ap == 1 else '(AR)'
            iouStr = '{:0.2f}:{:0.2f}'.format(p.iouThrs[0], p.iouThrs[-1]) \
                if iouThr is None else '{:0.2f}'.format(iouThr)

            aind = [i for i, aRng in enumerate(p.areaRngLbl) if aRng == areaRng]
            mind = [i for i, mDet in enumerate(p.maxDets) if mDet == maxDets]
            if ap == 1:
                # dimension of precision: [TxRxKxAxM]
                s = self.eval['precision']
                # IoU
                if iouThr is not None:
                    t = np.where(iouThr == p.iouThrs)[0]
                    s = s[t]
                s = s[:, :, :, aind, mind]
            else:
                # dimension of recall: [TxKxAxM]
                s = self.eval['recall']
                if iouThr is not None:
                    t = np.where(iouThr == p.iouThrs)[0]
                    s = s[t]
                s = s[:, :, aind, mind]
            if len(s[s > -1]) == 0:
                mean_s = -1
            else:
                mean_s = np.mean(s[s > -1])
            print(iStr.format(titleStr, typeStr, iouStr, areaRng, maxDets, mean_s))
            return mean_s

        def _summarizeDets():
            stats = np.zeros((12,))
            stats[0] = _summarize(1)
            stats[1] = _summarize(1, iouThr=.5, maxDets=self.params.maxDets[2])
            stats[2] = _summarize(1, iouThr=.75, maxDets=self.params.maxDets[2])
            stats[3] = _summarize(1, areaRng='small', maxDets=self.params.maxDets[2])
            stats[4] = _summarize(1, areaRng='medium', maxDets=self.params.maxDets[2])
            stats[5] = _summarize(1, areaRng='large', maxDets=self.params.maxDets[2])
            stats[6] = _summarize(0, maxDets=self.params.maxDets[0])
            stats[7] = _summarize(0, maxDets=self.params.maxDets[1])
            stats[8] = _summarize(0, maxDets=self.params.maxDets[2])
            stats[9] = _summarize(0, areaRng='small', maxDets=self.params.maxDets[2])
            stats[10] = _summarize(0, areaRng='medium', maxDets=self.params.maxDets[2])
            stats[11] = _summarize(0, areaRng='large', maxDets=self.params.maxDets[2])
            return stats

        def _summarizeKps():
            stats = np.zeros((10,))
            stats[0] = _summarize(1, maxDets=20)
            stats[1] = _summarize(1, maxDets=20, iouThr=.5)
            stats[2] = _summarize(1, maxDets=20, iouThr=.75)
            stats[3] = _summarize(1, maxDets=20, areaRng='medium')
            stats[4] = _summarize(1, maxDets=20, areaRng='large')
            stats[5] = _summarize(0, maxDets=20)
            stats[6] = _summarize(0, maxDets=20, iouThr=.5)
            stats[7] = _summarize(0, maxDets=20, iouThr=.75)
            stats[8] = _summarize(0, maxDets=20, areaRng='medium')
            stats[9] = _summarize(0, maxDets=20, areaRng='large')
            return stats

        if not self.eval:
            raise Exception('Please run accumulate() first')
        iouType = self.params.iouType
        if iouType == 'segm' or iouType == 'bbox':
            summarize = _summarizeDets
        elif iouType == 'keypoints':
            summarize = _summarizeKps
        self.stats = summarize()

    def __str__(self):
        self.summarize()


class Params:
    '''
    Params for coco evaluation api
    '''
    def setDetParams(self):
        self.imgIds = []
        self.catIds = []
        # np.arange causes trouble. the data point on arange is slightly larger than the true value
        # (np.linspace requires an integer sample count, hence int(...))
        self.iouThrs = np.linspace(.5, 0.95, int(np.round((0.95 - .5) / .05)) + 1, endpoint=True)
        self.recThrs = np.linspace(.0, 1.00, int(np.round((1.00 - .0) / .01)) + 1, endpoint=True)
        self.maxDets = [1, 10, 100]
        self.areaRng = [[0 ** 2, 1e5 ** 2], [0 ** 2, 32 ** 2], [32 ** 2, 96 ** 2], [96 ** 2, 1e5 ** 2]]
        self.areaRngLbl = ['all', 'small', 'medium', 'large']
        self.useCats = 1

    def setKpParams(self):
        self.imgIds = []
        self.catIds = []
        # np.arange causes trouble. the data point on arange is slightly larger than the true value
        self.iouThrs = np.linspace(.5, 0.95, int(np.round((0.95 - .5) / .05)) + 1, endpoint=True)
        self.recThrs = np.linspace(.0, 1.00, int(np.round((1.00 - .0) / .01)) + 1, endpoint=True)
        self.maxDets = [20]
        self.areaRng = [[0 ** 2, 1e5 ** 2], [32 ** 2, 96 ** 2], [96 ** 2, 1e5 ** 2]]
        self.areaRngLbl = ['all', 'medium', 'large']
        self.useCats = 1

    def __init__(self, iouType='segm'):
        if iouType == 'segm' or iouType == 'bbox':
            self.setDetParams()
        elif iouType == 'keypoints':
            self.setKpParams()
        else:
            raise Exception('iouType not supported')
        self.iouType = iouType
        # useSegm is deprecated
        self.useSegm = None
```

3. Metric example - pycocoEvalDemo

```python
import matplotlib.pyplot as plt
from pycocotools.coco import COCO
from pycocotools.cocoeval import COCOeval
import numpy as np
import skimage.io as io
import pylab
pylab.rcParams['figure.figsize'] = (10.0, 8.0)

annType = ['segm', 'bbox', 'keypoints']
annType = annType[1]  # specify type here (bbox)
prefix = 'person_keypoints' if annType == 'keypoints' else 'instances'
print('Running demo for *%s* results.' % (annType))

# initialize COCO ground truth api
dataDir = '../'
dataType = 'val2014'
annFile = '%s/annotations/%s_%s.json' % (dataDir, prefix, dataType)
cocoGt = COCO(annFile)

# initialize COCO detections api
resFile = '%s/results/%s_%s_fake%s100_results.json'
resFile = resFile % (dataDir, prefix, dataType, annType)
cocoDt = cocoGt.loadRes(resFile)

imgIds = sorted(cocoGt.getImgIds())
imgIds = imgIds[0:100]
imgId = imgIds[np.random.randint(100)]

# running evaluation
cocoEval = COCOeval(cocoGt, cocoDt, annType)
cocoEval.params.imgIds = imgIds
cocoEval.evaluate()
cocoEval.accumulate()
cocoEval.summarize()
```

The output looks like:

```
Running per image evaluation...
DONE (t=0.46s).
Accumulating evaluation results...
DONE (t=0.38s).
 Average Precision  (AP) @[ IoU=0.50:0.95 | area=   all | maxDets=100 ] = 0.505
 Average Precision  (AP) @[ IoU=0.50      | area=   all | maxDets=100 ] = 0.697
 Average Precision  (AP) @[ IoU=0.75      | area=   all | maxDets=100 ] = 0.573
 Average Precision  (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.586
 Average Precision  (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.519
 Average Precision  (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.501
 Average Recall     (AR) @[ IoU=0.50:0.95 | area=   all | maxDets=  1 ] = 0.387
 Average Recall     (AR) @[ IoU=0.50:0.95 | area=   all | maxDets= 10 ] = 0.594
 Average Recall     (AR) @[ IoU=0.50:0.95 | area=   all | maxDets=100 ] = 0.595
 Average Recall     (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.640
 Average Recall     (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.566
 Average Recall     (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.564
```

4. The COCO class

The class for loading and handling COCO-format datasets:

```python
__author__ = 'tylin'
__version__ = '2.0'
# This API loads COCO annotation files directly into Python dictionaries
# and also provides helper functions.
# The API supports both *instance* and *caption* annotations.
# For captions, however, not all functions are defined (e.g. categories are undefined).

# The following API functions are defined
# (throughout, "ann"=annotation, "cat"=category, and "img"=image):
#  COCO       - COCO api class that loads COCO annotation file and prepare data structures.
#  decodeMask - Decode binary mask M encoded via run-length encoding.
#  encodeMask - Encode binary mask M using run-length encoding.
#  getAnnIds  - Get ann ids that satisfy given filter conditions.
#  getCatIds  - Get cat ids that satisfy given filter conditions.
#  getImgIds  - Get img ids that satisfy given filter conditions.
#  loadAnns   - Load anns with the specified ids.
#  loadCats   - Load cats with the specified ids.
#  loadImgs   - Load imgs with the specified ids.
#  annToMask  - Convert segmentation in an annotation to binary mask.
#  showAnns   - Display the specified annotations.
#  loadRes    - Load algorithm results and create API for accessing them.
#  download   - Download COCO images from mscoco.org server.

import json
import time
import matplotlib.pyplot as plt
from matplotlib.collections import PatchCollection
from matplotlib.patches import Polygon
import numpy as np
import copy
import itertools
from . import mask as maskUtils
import os
from collections import defaultdict
import sys
PYTHON_VERSION = sys.version_info[0]
if PYTHON_VERSION == 2:
    from urllib import urlretrieve
elif PYTHON_VERSION == 3:
    from urllib.request import urlretrieve


def _isArrayLike(obj):
    return hasattr(obj, '__iter__') and hasattr(obj, '__len__')


class COCO:
    def __init__(self, annotation_file=None):
        """
        Constructor of Microsoft COCO helper class for reading and visualizing annotations.
        :param annotation_file (str): location of annotation file
        :param image_folder (str): location to the folder that hosts images.
        :return:
        """
        # load dataset
        self.dataset, self.anns, self.cats, self.imgs = dict(), dict(), dict(), dict()
        self.imgToAnns, self.catToImgs = defaultdict(list), defaultdict(list)
        if annotation_file is not None:
            print('loading annotations into memory...')
            tic = time.time()
            dataset = json.load(open(annotation_file, 'r'))
            assert type(dataset) == dict, 'annotation file format {} not supported'.format(type(dataset))
            print('Done (t={:0.2f}s)'.format(time.time() - tic))
            self.dataset = dataset
            self.createIndex()

    def createIndex(self):
        # create index
        print('creating index...')
        anns, cats, imgs = {}, {}, {}
        imgToAnns, catToImgs = defaultdict(list), defaultdict(list)
        if 'annotations' in self.dataset:
            for ann in self.dataset['annotations']:
                imgToAnns[ann['image_id']].append(ann)
                anns[ann['id']] = ann

        if 'images' in self.dataset:
            for img in self.dataset['images']:
                imgs[img['id']] = img

        if 'categories' in self.dataset:
            for cat in self.dataset['categories']:
                cats[cat['id']] = cat

        if 'annotations' in self.dataset and 'categories' in self.dataset:
            for ann in self.dataset['annotations']:
                catToImgs[ann['category_id']].append(ann['image_id'])

        print('index created!')

        # create class members
        self.anns = anns
        self.imgToAnns = imgToAnns
        self.catToImgs = catToImgs
        self.imgs = imgs
        self.cats = cats

    def info(self):
        """
        Print information about the annotation file.
        :return:
        """
        for key, value in self.dataset['info'].items():
            print('{}: {}'.format(key, value))

    def getAnnIds(self, imgIds=[], catIds=[], areaRng=[], iscrowd=None):
        """
        Get ann ids that satisfy given filter conditions. default skips that filter
        :param imgIds  (int array)   : get anns for given imgs
               catIds  (int array)   : get anns for given cats
               areaRng (float array) : get anns for given area range (e.g. [0 inf])
               iscrowd (boolean)     : get anns for given crowd label (False or True)
        :return: ids (int array)     : integer array of ann ids
        """
        imgIds = imgIds if _isArrayLike(imgIds) else [imgIds]
        catIds = catIds if _isArrayLike(catIds) else [catIds]

        if len(imgIds) == len(catIds) == len(areaRng) == 0:
            anns = self.dataset['annotations']
        else:
            if not len(imgIds) == 0:
                lists = [self.imgToAnns[imgId] for imgId in imgIds if imgId in self.imgToAnns]
                anns = list(itertools.chain.from_iterable(lists))
            else:
                anns = self.dataset['annotations']
            anns = anns if len(catIds) == 0 else [ann for ann in anns if ann['category_id'] in catIds]
            anns = anns if len(areaRng) == 0 else [ann for ann in anns if ann['area'] > areaRng[0] and ann['area'] < areaRng[1]]
        if iscrowd is not None:
            ids = [ann['id'] for ann in anns if ann['iscrowd'] == iscrowd]
        else:
            ids = [ann['id'] for ann in anns]
        return ids

    def getCatIds(self, catNms=[], supNms=[], catIds=[]):
        """
        filtering parameters. default skips that filter.
        :param catNms (str array)  : get cats for given cat names
        :param supNms (str array)  : get cats for given supercategory names
        :param catIds (int array)  : get cats for given cat ids
        :return: ids (int array)   : integer array of cat ids
        """
        catNms = catNms if _isArrayLike(catNms) else [catNms]
        supNms = supNms if _isArrayLike(supNms) else [supNms]
        catIds = catIds if _isArrayLike(catIds) else [catIds]

        if len(catNms) == len(supNms) == len(catIds) == 0:
            cats = self.dataset['categories']
        else:
            cats = self.dataset['categories']
            cats = cats if len(catNms) == 0 else [cat for cat in cats if cat['name'] in catNms]
            cats = cats if len(supNms) == 0 else [cat for cat in cats if cat['supercategory'] in supNms]
            cats = cats if len(catIds) == 0 else [cat for cat in cats if cat['id'] in catIds]
        ids = [cat['id'] for cat in cats]
        return ids

    def getImgIds(self, imgIds=[], catIds=[]):
        '''
        Get img ids that satisfy given filter conditions.
        :param imgIds (int array) : get imgs for given ids
        :param catIds (int array) : get imgs with all given cats
        :return: ids (int array)  : integer array of img ids
        '''
        imgIds = imgIds if _isArrayLike(imgIds) else [imgIds]
        catIds = catIds if _isArrayLike(catIds) else [catIds]

        if len(imgIds) == len(catIds) == 0:
            ids = self.imgs.keys()
        else:
            ids = set(imgIds)
            for i, catId in enumerate(catIds):
                if i == 0 and len(ids) == 0:
                    ids = set(self.catToImgs[catId])
                else:
                    ids &= set(self.catToImgs[catId])
        return list(ids)

    def loadAnns(self, ids=[]):
        """
        Load anns with the specified ids.
        :param ids (int array)       : integer ids specifying anns
        :return: anns (object array) : loaded ann objects
        """
        if _isArrayLike(ids):
            return [self.anns[id] for id in ids]
        elif type(ids) == int:
            return [self.anns[ids]]

    def loadCats(self, ids=[]):
        """
        Load cats with the specified ids.
        :param ids (int array)       : integer ids specifying cats
        :return: cats (object array) : loaded cat objects
        """
        if _isArrayLike(ids):
            return [self.cats[id] for id in ids]
        elif type(ids) == int:
            return [self.cats[ids]]

    def loadImgs(self, ids=[]):
        """
        Load imgs with the specified ids.
        :param ids (int array)       : integer ids specifying img
        :return: imgs (object array) : loaded img objects
        """
        if _isArrayLike(ids):
            return [self.imgs[id] for id in ids]
        elif type(ids) == int:
            return [self.imgs[ids]]

    def showAnns(self, anns):
        """
        Display the specified annotations.
        :param anns (array of object): annotations to display
        :return: None
        """
        if len(anns) == 0:
            return 0
        if 'segmentation' in anns[0] or 'keypoints' in anns[0]:
            datasetType = 'instances'
        elif 'caption' in anns[0]:
            datasetType = 'captions'
        else:
            raise Exception('datasetType not supported')
        if datasetType == 'instances':
            ax = plt.gca()
            ax.set_autoscale_on(False)
            polygons = []
            color = []
            for ann in anns:
                c = (np.random.random((1, 3))*0.6+0.4).tolist()[0]
                if 'segmentation' in ann:
                    if type(ann['segmentation']) == list:
                        # polygon
                        for seg in ann['segmentation']:
                            poly = np.array(seg).reshape((int(len(seg)/2), 2))
                            polygons.append(Polygon(poly))
                            color.append(c)
                    else:
                        # mask
                        t = self.imgs[ann['image_id']]
                        if type(ann['segmentation']['counts']) == list:
                            rle = maskUtils.frPyObjects([ann['segmentation']], t['height'], t['width'])
                        else:
                            rle = [ann['segmentation']]
                        m = maskUtils.decode(rle)
                        img = np.ones((m.shape[0], m.shape[1], 3))
                        if ann['iscrowd'] == 1:
                            color_mask = np.array([2.0, 166.0, 101.0])/255
                        if ann['iscrowd'] == 0:
                            color_mask = np.random.random((1, 3)).tolist()[0]
                        for i in range(3):
                            img[:, :, i] = color_mask[i]
                        ax.imshow(np.dstack((img, m*0.5)))
                if 'keypoints' in ann and type(ann['keypoints']) == list:
                    # turn skeleton into zero-based index
                    sks = np.array(self.loadCats(ann['category_id'])[0]['skeleton'])-1
                    kp = np.array(ann['keypoints'])
                    x = kp[0::3]
                    y = kp[1::3]
                    v = kp[2::3]
                    for sk in sks:
                        if np.all(v[sk] > 0):
                            plt.plot(x[sk], y[sk], linewidth=3, color=c)
                    plt.plot(x[v > 0], y[v > 0], 'o', markersize=8, markerfacecolor=c, markeredgecolor='k', markeredgewidth=2)
                    plt.plot(x[v > 1], y[v > 1], 'o', markersize=8, markerfacecolor=c, markeredgecolor=c, markeredgewidth=2)
            p = PatchCollection(polygons, facecolor=color, linewidths=0, alpha=0.4)
            ax.add_collection(p)
            p = PatchCollection(polygons, facecolor='none', edgecolors=color, linewidths=2)
            ax.add_collection(p)
        elif datasetType == 'captions':
            for ann in anns:
                print(ann['caption'])

    def loadRes(self, resFile):
        """
        Load result file and return a result api object.
        :param resFile (str)     : file name of result file
        :return: res (obj)       : result api object
        """
        res = COCO()
        res.dataset['images'] = [img for img in self.dataset['images']]

        print('Loading and preparing results...')
        tic = time.time()
        # `unicode` only exists in Python 2, so only reference it there
        if type(resFile) == str or (PYTHON_VERSION == 2 and type(resFile) == unicode):
            anns = json.load(open(resFile))
        elif type(resFile) == np.ndarray:
            anns = self.loadNumpyAnnotations(resFile)
        else:
            anns = resFile
        assert type(anns) == list, 'results in not an array of objects'
        annsImgIds = [ann['image_id'] for ann in anns]
        assert set(annsImgIds) == (set(annsImgIds) & set(self.getImgIds())), \
            'Results do not correspond to current coco set'
        if 'caption' in anns[0]:
            imgIds = set([img['id'] for img in res.dataset['images']]) & set([ann['image_id'] for ann in anns])
            res.dataset['images'] = [img for img in res.dataset['images'] if img['id'] in imgIds]
            for id, ann in enumerate(anns):
                ann['id'] = id+1
        elif 'bbox' in anns[0] and not anns[0]['bbox'] == []:
            res.dataset['categories'] = copy.deepcopy(self.dataset['categories'])
            for id, ann in enumerate(anns):
                bb = ann['bbox']
                x1, x2, y1, y2 = [bb[0], bb[0]+bb[2], bb[1], bb[1]+bb[3]]
                if 'segmentation' not in ann:
                    ann['segmentation'] = [[x1, y1, x1, y2, x2, y2, x2, y1]]
                ann['area'] = bb[2]*bb[3]
                ann['id'] = id+1
                ann['iscrowd'] = 0
        elif 'segmentation' in anns[0]:
            res.dataset['categories'] = copy.deepcopy(self.dataset['categories'])
            for id, ann in enumerate(anns):
                # now only support compressed RLE format as segmentation results
                ann['area'] = maskUtils.area(ann['segmentation'])
                if 'bbox' not in ann:
                    ann['bbox'] = maskUtils.toBbox(ann['segmentation'])
                ann['id'] = id+1
                ann['iscrowd'] = 0
        elif 'keypoints' in anns[0]:
            res.dataset['categories'] = copy.deepcopy(self.dataset['categories'])
            for id, ann in enumerate(anns):
                s = ann['keypoints']
                x = s[0::3]
                y = s[1::3]
                x0, x1, y0, y1 = np.min(x), np.max(x), np.min(y), np.max(y)
                ann['area'] = (x1-x0)*(y1-y0)
                ann['id'] = id + 1
                ann['bbox'] = [x0, y0, x1-x0, y1-y0]
        print('DONE (t={:0.2f}s)'.format(time.time() - tic))

        res.dataset['annotations'] = anns
        res.createIndex()
        return res

    def download(self, tarDir=None, imgIds=[]):
        '''
        Download COCO images from mscoco.org server.
        :param tarDir (str): COCO results directory name
               imgIds (list): images to be downloaded
        :return:
        '''
        if tarDir is None:
            print('Please specify target directory')
            return -1
        if len(imgIds) == 0:
            imgs = self.imgs.values()
        else:
            imgs = self.loadImgs(imgIds)
        N = len(imgs)
        if not os.path.exists(tarDir):
            os.makedirs(tarDir)
        for i, img in enumerate(imgs):
            tic = time.time()
            fname = os.path.join(tarDir, img['file_name'])
            if not os.path.exists(fname):
                urlretrieve(img['coco_url'], fname)
            print('downloaded {}/{} images (t={:0.1f}s)'.format(i, N, time.time() - tic))

    def loadNumpyAnnotations(self, data):
        """
        Convert result data from a numpy array [Nx7] where each row contains {imageID,x1,y1,w,h,score,class}
        :param  data (numpy.ndarray)
        :return: annotations (python nested list)
        """
        print('Converting ndarray to lists...')
        assert(type(data) == np.ndarray)
        print(data.shape)
        assert(data.shape[1] == 7)
        N = data.shape[0]
        ann = []
        for i in range(N):
            if i % 1000000 == 0:
                print('{}/{}'.format(i, N))
            ann += [{
                'image_id': int(data[i, 0]),
                'bbox': [data[i, 1], data[i, 2], data[i, 3], data[i, 4]],
                'score': data[i, 5],
                'category_id': int(data[i, 6]),
            }]
        return ann

    def annToRLE(self, ann):
        """
        Convert annotation which can be polygons, uncompressed RLE to RLE.
        :return: RLE (run-length encoded mask)
        """
        t = self.imgs[ann['image_id']]
        h, w = t['height'], t['width']
        segm = ann['segmentation']
        if type(segm) == list:
            # polygon -- a single object might consist of multiple parts
            # we merge all parts into one mask rle code
            rles = maskUtils.frPyObjects(segm, h, w)
            rle = maskUtils.merge(rles)
        elif type(segm['counts']) == list:
            # uncompressed RLE
            rle = maskUtils.frPyObjects(segm, h, w)
        else:
            # rle
            rle = ann['segmentation']
        return rle

    def annToMask(self, ann):
        """
        Convert annotation which can be polygons, uncompressed RLE, or RLE to binary mask.
        :return: binary mask (numpy 2D array)
        """
        rle = self.annToRLE(ann)
        m = maskUtils.decode(rle)
        return m
```
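The indexes built by `createIndex()` are what make the `get*Ids` filters cheap. The sketch below is a simplified, dependency-free re-implementation on a plain dict (unlike the original, it always fills the category-to-image map), together with the set intersection that `getImgIds(catIds=...)` performs. `build_index`, `img_ids_with_all_cats` and the toy dataset are illustrative; the sketch returns an empty list for empty `cat_ids`, whereas the real `getImgIds()` returns all images when no filter is given.

```python
from collections import defaultdict


def build_index(dataset):
    """Simplified re-implementation of COCO.createIndex() on a plain dict."""
    anns, imgs, cats = {}, {}, {}
    img_to_anns, cat_to_imgs = defaultdict(list), defaultdict(list)
    for ann in dataset.get("annotations", []):
        img_to_anns[ann["image_id"]].append(ann)
        anns[ann["id"]] = ann
        cat_to_imgs[ann["category_id"]].append(ann["image_id"])
    for img in dataset.get("images", []):
        imgs[img["id"]] = img
    for cat in dataset.get("categories", []):
        cats[cat["id"]] = cat
    return anns, imgs, cats, img_to_anns, cat_to_imgs


def img_ids_with_all_cats(cat_to_imgs, cat_ids):
    """Images containing every category in cat_ids (the intersection in getImgIds)."""
    ids = None
    for cat_id in cat_ids:
        s = set(cat_to_imgs[cat_id])
        ids = s if ids is None else ids & s
    return sorted(ids) if ids else []


dataset = {
    "images": [{"id": 1}, {"id": 2}, {"id": 3}],
    "categories": [{"id": 1, "name": "person"}, {"id": 18, "name": "dog"}],
    "annotations": [
        {"id": 10, "image_id": 1, "category_id": 1},
        {"id": 11, "image_id": 1, "category_id": 18},
        {"id": 12, "image_id": 2, "category_id": 1},
        {"id": 13, "image_id": 3, "category_id": 18},
    ],
}
anns, imgs, cats, img_to_anns, cat_to_imgs = build_index(dataset)
print(len(img_to_anns[1]))                          # 2
print(img_ids_with_all_cats(cat_to_imgs, [1]))      # [1, 2]
print(img_ids_with_all_cats(cat_to_imgs, [1, 18]))  # [1]
```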