GitHub project - rafaelpadilla/Object-Detection-Metrics

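This project gathers, in one place, the evaluation metrics used in many object detection problems. Most object detection competitions define their own accuracy metric. Even so, the code that computes the accuracy of detected objects has parts that can be shared.

The project provides simple, easy-to-use implementations of the accuracy metrics used by popular object detection competitions. You do not need to make complex changes to your detector's output format, and you do not need to convert results to XML or JSON files. The input data (ground-truth boxes and detected boxes) is kept simple, and everything is collected in a single project. The results were also carefully compared against the official implementations to make sure they match exactly.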

1. Competitions and metrics

[1] - PASCAL VOC Challenge

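PASCAL VOC provides a Matlab script for evaluating detection quality. Participants can run it offline to check the accuracy of their detections before they submit results.

Official description of the object detection metrics: Detection Task

PASCAL VOC currently uses two metrics: the Precision x Recall curve and Average Precision.

The PASCAL VOC Matlab evaluation code reads ground-truth boxes from XML files. Using it on another dataset therefore requires changes to the code. Some projects, such as Faster-RCNN, reimplement the PASCAL VOC evaluation code, but the detected boxes must still be converted to the format those projects expect. TensorFlow also provides its own reimplementation.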

[2] - COCO Detection Challenge

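The COCO detection challenge uses several different metrics to measure the object detection accuracy of different algorithms.

COCO provides 12 metrics for characterizing the performance of an object detector.

COCO provides Python and Matlab code so that participants can quickly check the accuracy of their algorithm before submitting results. However, the detections still have to be converted to the required format.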

[3] - Google Open Images Dataset V4 Competition

The Open Images competition also measures detection accuracy with mean Average Precision (mAP) over 500 object classes.
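mAP is the unweighted mean of the per-class AP values, as shown in the minimal sketch below. `mean_average_precision` is a hypothetical helper and does not come from the Open Images tooling. The sketch also does not model any dataset-specific rules the competition may apply.

```python
def mean_average_precision(ap_per_class):
    """Unweighted mean of per-class AP values (hypothetical helper)."""
    if not ap_per_class:
        raise ValueError("need at least one class")
    return sum(ap_per_class.values()) / len(ap_per_class)


# Hypothetical per-class AP values, for illustration only
aps = {"dog": 0.50, "person": 0.75, "train": 0.25}
print(mean_average_precision(aps))  # 0.5
```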

[4] - ImageNet Object Localization Challenge

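ImageNet defines an error for each image. The error takes into account both the object class and the overlap between ground-truth boxes and detected boxes. The total error is the average of the per-image minimum errors over all images in the test set.

Specifically:

The error for each image is defined as:

$$ e = \min_{i} \min_{j} \max(d_{ij}, f_{ij}) $$

where $i$ indexes the predicted object classes and boxes, and $j$ indexes the ground-truth object classes and boxes.

$d_{ij} = 0$ if the two boxes have the same class label; otherwise $d_{ij} = 1$.

$f_{ij} = 0$ if the two boxes overlap by at least 50%; otherwise $f_{ij} = 1$.

For example, suppose an image contains two ground-truth boxes ($g_0$ and $g_1$) and the model predicts three boxes ($p_0$, $p_1$ and $p_2$). We iterate over the predicted boxes and check whether each one matches any ground-truth box. If at least one prediction matches, the minimum error for the image is 0; otherwise it is 1.

A prediction matches a ground-truth box when both of these hold:

(1) the predicted box has the same class label as the ground-truth box; and

(2) the predicted box overlaps the ground-truth box by more than 50%.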

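The procedure in Python. This is a runnable version of the original pseudocode; `label` and `overlap` are passed in as functions:

```python
def image_min_error(predictions, ground_truths, label, overlap):
    """Minimum error of one image; predictions and ground_truths are lists of boxes."""
    min_error_list = []
    for prediction_box in predictions:  # e.g. [p_0, p_1, p_2]
        max_error_list = []
        for ground_truth_box in ground_truths:  # e.g. [g_0, g_1]
            # d = 0 if the class labels agree, else 1
            d = 0 if label(prediction_box) == label(ground_truth_box) else 1
            # f = 0 if the boxes overlap by more than 50%, else 1
            f = 0 if overlap(prediction_box, ground_truth_box) > 0.5 else 1
            max_error_list.append(max(d, f))  # the first max
        min_error_list.append(min(max_error_list))  # the first min
    return min(min_error_list)  # the second min
```

Note: for each image, the minimum error is either 0 or 1.

The total error is the mean of the minimum errors over all images in the test set.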
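The formula $e = \min_i \min_j \max(d_{ij}, f_{ij})$ can also be evaluated in matrix form with NumPy. The sketch below is an illustration only, not ImageNet's official evaluation code. `image_error` and `total_error` are hypothetical names, and the box overlaps are assumed to be precomputed. Following the definition above, $f_{ij} = 0$ is taken to mean overlap >= 0.5.

```python
import numpy as np


def image_error(pred_labels, gt_labels, overlaps, threshold=0.5):
    """Per-image error e = min_i min_j max(d_ij, f_ij).

    pred_labels: P predicted class labels; gt_labels: G ground-truth labels;
    overlaps: P x G array of precomputed overlaps (e.g. IoU) between boxes.
    """
    pred = np.asarray(pred_labels)[:, None]  # shape (P, 1)
    gt = np.asarray(gt_labels)[None, :]      # shape (1, G)
    d = (pred != gt).astype(int)             # d_ij = 0 when the labels agree
    # f_ij = 0 when the overlap reaches the threshold
    f = (np.asarray(overlaps, dtype=float) < threshold).astype(int)
    return int(np.maximum(d, f).min())       # elementwise max, then min over i and j


def total_error(images):
    """Mean per-image error; each item is (pred_labels, gt_labels, overlaps)."""
    return sum(image_error(*img) for img in images) / len(images)


# Hypothetical test set with two images
images = [
    (["dog", "cat"], ["dog"], [[0.7], [0.9]]),  # "dog" matches -> error 0
    (["cat"], ["dog"], [[0.8]]),                # label mismatch -> error 1
]
print(total_error(images))  # 0.5
```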

2. Important definitions

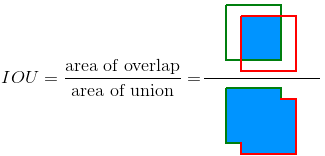

2.1. IoU

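IoU (Intersection over Union) is based on the Jaccard index and measures how much two boxes overlap. It takes two inputs: a ground-truth box $B_{gt}$ and a predicted box $B_{p}$.

IoU tells us whether a detection is valid (True Positive) or not (False Positive).

IoU is the area of overlap between the predicted box and the ground-truth box, divided by the area of their union: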

$$ IoU = \frac{area(B_{p} \bigcap B_{gt})}{area(B_{p} \bigcup B_{gt})} $$

This is illustrated in the figure above.
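The IoU formula can be sketched directly in code, assuming boxes are given as `(x1, y1, x2, y2)` in continuous coordinates, so that area is `(x2 - x1) * (y2 - y1)`. The project's `Evaluator.iou` adds 1 to widths and heights (a pixel-inclusive convention), so its values differ slightly from this sketch, mostly for small boxes.

```python
def iou(box_a, box_b):
    """IoU of two (x1, y1, x2, y2) boxes in continuous coordinates."""
    # Corners of the intersection rectangle
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    # Clamp at 0 so that disjoint boxes give an empty intersection
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


print(iou((0, 0, 2, 2), (1, 1, 3, 3)))  # 1/7, about 0.142857
```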

2.2. TP/FP/FN/TN

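[1] - TP (True Positive): a correct detection, i.e. $IoU \geq threshold$.

[2] - FP (False Positive): a wrong detection, i.e. $IoU < threshold$.

[3] - FN (False Negative): a ground truth that was not detected.

[4] - TN (True Negative): not used. It would mean a correct rejection. In object detection, an image contains a huge number of possible boxes that should not be detected. TN would therefore count every possible box that was correctly not detected, which is why this quantity is not used.

Note: $threshold$ depends on the metric and is usually set to 50%, 75% or 95%.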
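Applying these definitions to a set of detections requires a matching rule. The sketch below uses greedy matching for one image and one class. Detections are processed in descending confidence, and each one is compared with its best-overlapping ground truth. A detection whose best ground truth has already been matched counts as FP, which is the duplicate rule used by `GetPascalVOCMetrics` in the project's `Evaluator.py`. `label_detections` and `_iou` are hypothetical names, and boxes are assumed to be `(x1, y1, x2, y2)` in continuous coordinates.

```python
def _iou(a, b):
    """IoU of two (x1, y1, x2, y2) boxes in continuous coordinates."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def label_detections(detections, ground_truths, threshold=0.5):
    """Label detections TP/FP and count FN for one image and one class.

    detections: list of (confidence, box); ground_truths: list of boxes.
    Returns (labels, fn); labels follow descending-confidence order.
    """
    matched = [False] * len(ground_truths)
    labels = []
    for _, box in sorted(detections, key=lambda det: det[0], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, gt in enumerate(ground_truths):
            value = _iou(box, gt)
            if value > best_iou:
                best_iou, best_j = value, j
        if best_j >= 0 and best_iou >= threshold and not matched[best_j]:
            matched[best_j] = True
            labels.append("TP")
        else:
            # IoU too low, or the best ground truth is already taken
            labels.append("FP")
    fn = matched.count(False)  # ground truths that no detection matched
    return labels, fn


dets = [(0.9, (0, 0, 10, 10)), (0.8, (1, 1, 10, 10)), (0.3, (20, 20, 30, 30))]
print(label_detections(dets, [(0, 0, 10, 10)]))  # (['TP', 'FP', 'FP'], 0)
```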

2.3. Precision

Precision is the ability of a model to identify only the relevant objects. It is the fraction of all detections that are correct positive predictions:

$$ Precision = \frac{TP}{TP + FP} = \frac{TP}{all \ detections} $$

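2.4. Recall

Recall is the ability of a model to find all the relevant cases, i.e. all ground-truth boxes. It is the fraction of all ground-truth boxes that are found by true positive detections: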

$$ Recall = \frac{TP}{TP + FN} = \frac{TP}{all \ groundtruths} $$
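The two formulas translate directly into code. In this minimal sketch, `precision_recall` is a hypothetical helper that returns 0 when a denominator is empty. The counts used are illustrative: 24 detections and 15 ground truths, of which 7 detections are TPs.

```python
def precision_recall(tp, fp, fn):
    """Precision = TP / (TP + FP); Recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# 7 TPs among 24 detections (17 FPs) and 15 ground truths (8 FNs)
p, r = precision_recall(7, 17, 8)
print(round(p, 4), round(r, 4))  # 0.2917 0.4667
```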

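3. Metrics

The following metrics are commonly used in object detection.

3.1. Precision x Recall curve

The Precision x Recall (PR) curve is a way to evaluate the performance of an object detector. A curve is plotted for each object class by varying the confidence threshold.

A detector for a given class is considered good if its precision stays high as recall increases. In other words, precision and recall both remain high even as the confidence threshold changes.

A poor detector has to increase the number of detected objects to retrieve all ground-truth objects (high recall). Doing so adds False Positives, which lowers precision. This is why a PR curve usually starts with high precision values that decrease as recall increases.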

3.2. Average Precision

Another way to evaluate an object detector is to compute the area under the curve (AUC) of the Precision x Recall curve. PR curves are often zigzag-shaped and frequently cross each other, so comparing several curves in one plot is not easy. Average Precision (AP) helps here because it summarizes each curve as a single number that can be compared across detectors. In practice, AP is the precision averaged over all recall values between 0 and 1.

Since 2010, PASCAL VOC has computed AP differently. Its interpolation now uses all data points, rather than only the 11 equally spaced points described in the PASCAL VOC paper. To reproduce the default PASCAL VOC implementation, the code here uses the newer method and interpolates through all data points. An implementation of the 11-point interpolation is also provided.

3.2.1. 11-point interpolation

The 11-point interpolation summarizes the shape of the PR curve by averaging the precision at 11 equally spaced recall levels (0, 0.1, 0.2, ..., 1):

$$ AP = \frac{1}{11} \sum_{r \in \lbrace 0, 0.1, 0.2, ..., 1\rbrace } \rho_{interp}(r) $$

where

$$ \rho_{interp}(r) = \max_{\hat{r}:\hat{r} \geq r} \rho(\hat{r}) $$

and $\rho(\hat{r})$ is the measured precision at recall $\hat{r}$.

AP does not use the precision observed at every point. Instead, it interpolates the precision at only the 11 recall levels $r$, taking at each level the maximum precision among all points whose recall is greater than or equal to $r$.
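The 11-point definition can be sketched in NumPy as follows. `eleven_point_ap` is a hypothetical name, and the inputs are assumed to be the cumulative recall and precision arrays of one class. The recall levels come from `np.linspace(0, 1, 11)`, as in the project's `ElevenPointInterpolatedAP`. As a result, a recall of exactly 0.3 falls just below the floating-point level `0.30000000000000004`.

```python
import numpy as np


def eleven_point_ap(recall, precision):
    """11-point interpolated AP (PASCAL VOC paper definition)."""
    recall = np.asarray(recall, dtype=float)
    precision = np.asarray(precision, dtype=float)
    total = 0.0
    for r in np.linspace(0.0, 1.0, 11):
        mask = recall >= r
        # rho_interp(r): best precision among points with recall >= r (0 if none)
        total += precision[mask].max() if mask.any() else 0.0
    return total / 11


ap = eleven_point_ap([0.1, 0.2, 0.4], [1.0, 0.5, 0.4])
print(round(ap, 4))  # 0.3
```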

3.2.2. All-point interpolation

Instead of interpolating at only 11 equally spaced points, you can interpolate through all data points:

$$ AP = \sum_{n} (r_{n+1} - r_{n}) \rho_{interp}(r_{n+1}) $$

where

$$ \rho_{interp}(r_{n+1}) = \max_{\hat{r}:\hat{r} \geq r_{n+1}} \rho(\hat{r}) $$

and $\rho(\hat{r})$ is the measured precision at recall $\hat{r}$.

Here AP is not computed from the precision at only a few points. Instead, the precision is interpolated at every recall level $r_{n+1}$, taking the maximum precision among all points whose recall is greater than or equal to $r_{n+1}$.
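The all-point formula can be written as a short NumPy sketch. `all_point_ap` is a hypothetical name. It follows the same steps as `CalculateAveragePrecision` in the project's `Evaluator.py`, but in vectorized form:

1. Pad recall with 0 and 1, and precision with 0 and 0.
2. Make precision non-increasing from right to left, so that each entry equals $\rho_{interp}$.
3. Sum the rectangles wherever recall changes.

```python
import numpy as np


def all_point_ap(recall, precision):
    """All-point interpolated AP from cumulative recall/precision arrays."""
    mrec = np.concatenate(([0.0], np.asarray(recall, dtype=float), [1.0]))
    mpre = np.concatenate(([0.0], np.asarray(precision, dtype=float), [0.0]))
    # mpre[i] = max(mpre[i:]), i.e. rho_interp at each recall level
    mpre = np.maximum.accumulate(mpre[::-1])[::-1]
    # Indices where recall changes; each one closes a rectangle
    idx = np.flatnonzero(mrec[1:] != mrec[:-1]) + 1
    return float(np.sum((mrec[idx] - mrec[idx - 1]) * mpre[idx]))


print(round(all_point_ap([0.1, 0.2, 0.4], [1.0, 0.5, 0.4]), 4))  # 0.23
```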

3.2.3. An illustrated example

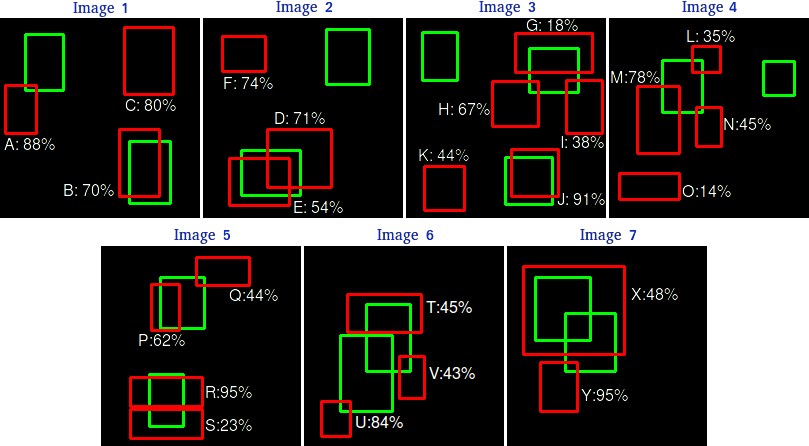

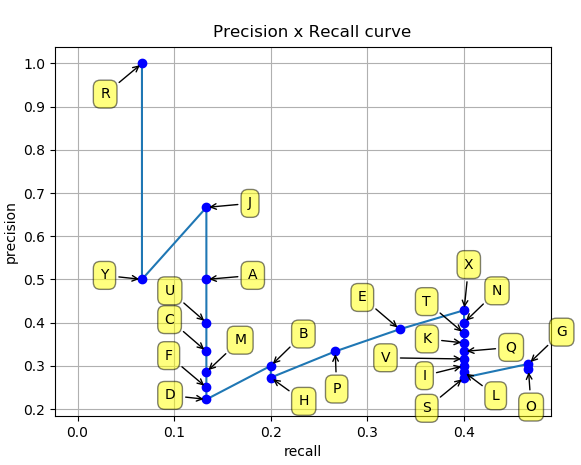

Suppose there are 7 images with 15 ground-truth boxes (green) and 24 detected boxes (red). Each detected object has a confidence level and is identified by a letter (A, B, ..., Y).

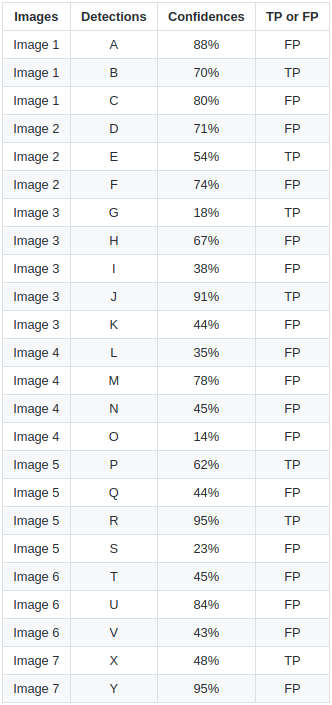

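The table lists each detected box with its confidence. The last column states whether the detection is a TP or an FP. In this example, a detection is a TP if IoU >= 30%; otherwise it is an FP.

The PR curve is drawn from the computed precision and recall values. First, sort the detections by confidence. Then compute precision and recall for each accumulated detection.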
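The accumulation step can be sketched as follows. Sort by confidence, take running sums of TPs and FPs, then divide by the number of detections so far (precision) and by the number of ground truths (recall). `cumulative_pr` is a hypothetical name, and the four detections in the usage line are made up for illustration. They are not the 24 detections of this example.

```python
import numpy as np


def cumulative_pr(confidences, is_tp, num_gt):
    """Precision/recall after each detection, in descending-confidence order."""
    order = np.argsort(-np.asarray(confidences, dtype=float), kind="stable")
    tp = np.asarray(is_tp, dtype=float)[order]
    fp = 1.0 - tp
    acc_tp = np.cumsum(tp)                    # TPs among the top-k detections
    acc_fp = np.cumsum(fp)                    # FPs among the top-k detections
    precision = acc_tp / (acc_tp + acc_fp)    # TP / all detections so far
    recall = acc_tp / num_gt                  # TP / all ground truths
    return precision, recall


prec, rec = cumulative_pr([0.9, 0.3, 0.7, 0.5], [True, False, True, False], num_gt=4)
print(prec, rec)
```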

The resulting PR curve:

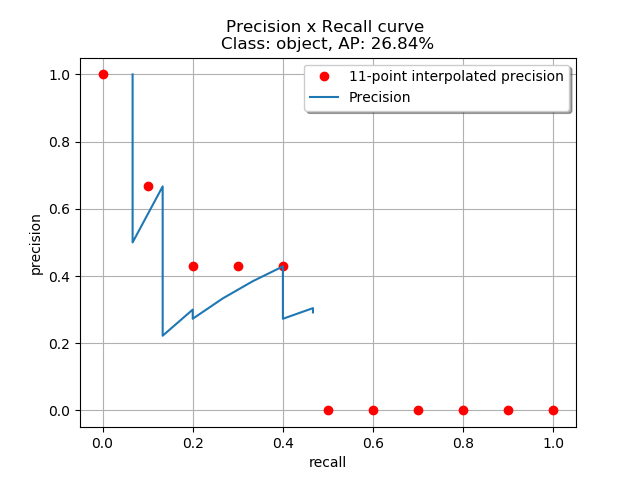

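Below, the 11-point interpolation and the all-point interpolation are compared.

[1] - Computing the 11-point interpolation

The 11-point interpolation averages the precision at 11 recall levels (0, 0.1, ..., 1). At each level, the interpolated precision is the maximum precision among all points whose recall is greater than or equal to that level.

Applying the 11-point interpolation: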

$AP = \frac{1}{11} \sum_{r \in \lbrace 0, 0.1,..., 1 \rbrace} \rho _{interp}(r)$

$AP = \frac{1}{11}(1 + 0.6666 + 0.4285 + 0.4285 + 0.4285 + 0 + 0 + 0 + 0 + 0 + 0)$

$AP = 0.2684$
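The arithmetic above can be checked in a few lines of Python. The 11 interpolated precision values are copied from the sum above.

```python
# Interpolated precision at recall levels 0, 0.1, ..., 1 (values from the sum above)
rho_interp = [1, 0.6666, 0.4285, 0.4285, 0.4285, 0, 0, 0, 0, 0, 0]
ap = sum(rho_interp) / 11
print(round(ap, 4))  # 0.2684
```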

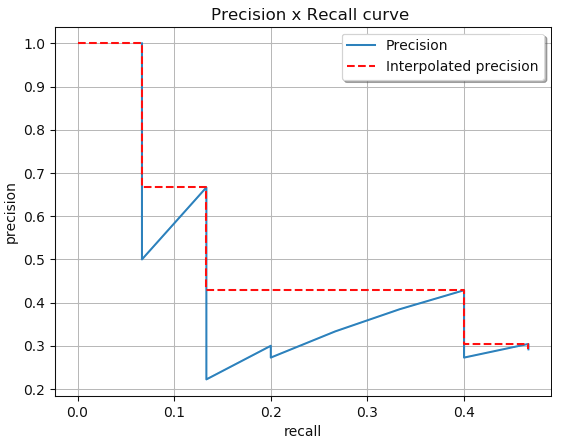

[2] - 计算 all-point 插值

通过对所有数据点进行插值,AP 可以理解为 PR 曲线的 AUC 的一种逼近. 主要是减少曲线中波动的影响.

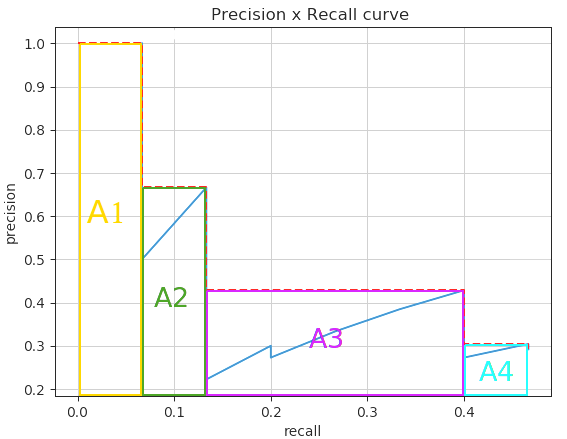

可以将 AUC 划分为 4 个区域(A1, A2, A3, A4):

计算总面积,得到 AP:

$AP = A1 + A2 + A3 + A4$

$A1 = (0.0666 - 0) \times 1 = 0.0666$

$A2 = (0.1333 - 0.0666) \times 0.6666 = 0.04446222$

$A3 = (0.4 - 0.1333) \times 0.4285 = 0.11428095$

$A4 = (0.4666 - 0.4) \times 0.3043 = 0.02026638$

$AP = 0.0666 + 0.04446222 + 0.11428095 + 0.02026638 = 0.24560955$

$AP = 0.2456$

The two interpolation methods give slightly different results: 0.2456 (all-point) and 0.2684 (11-point).
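The region sum can be checked the same way. Each tuple holds the recall interval and the interpolated precision of one region, copied from A1 to A4 above.

```python
# (recall start, recall end, interpolated precision) for regions A1..A4
regions = [
    (0.0, 0.0666, 1.0),
    (0.0666, 0.1333, 0.6666),
    (0.1333, 0.4, 0.4285),
    (0.4, 0.4666, 0.3043),
]
areas = [(r1 - r0) * p for r0, r1, p in regions]  # width x height of each rectangle
ap = sum(areas)
print(round(ap, 4))  # 0.2456
```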

[1] - By default, this project interpolates at every data point, as PASCAL VOC does. To use the 11-point interpolation instead, change the function argument `method=MethodAveragePrecision.EveryPointInterpolation` to `method=MethodAveragePrecision.ElevenPointInterpolation`.

[2] - Example 2

4. Usage examples

Helper functions - utils.py:

```python
from enum import Enum

import cv2


class MethodAveragePrecision(Enum):
    """
    Class representing the method used to compute the average precision
    (every-point interpolation or 11-point interpolation).

    Developed by: Rafael Padilla
    Last modification: Apr 28 2018
    """
    EveryPointInterpolation = 1
    ElevenPointInterpolation = 2


class CoordinatesType(Enum):
    """
    Class representing if the coordinates are relative to the
    image size or are absolute values.

    Developed by: Rafael Padilla
    Last modification: Apr 28 2018
    """
    Relative = 1
    Absolute = 2


class BBType(Enum):
    """
    Class representing if the bounding box is groundtruth or not.

    Developed by: Rafael Padilla
    Last modification: May 24 2018
    """
    GroundTruth = 1
    Detected = 2


class BBFormat(Enum):
    """
    Class representing the format of a bounding box.
    It can be (X,Y,width,height) => XYWH
    or (X1,Y1,X2,Y2) => XYX2Y2

    Developed by: Rafael Padilla
    Last modification: May 24 2018
    """
    XYWH = 1
    XYX2Y2 = 2


# size => (width, height) of the image
# box => (X1, X2, Y1, Y2) of the bounding box
def convertToRelativeValues(size, box):
    dw = 1. / (size[0])
    dh = 1. / (size[1])
    cx = (box[1] + box[0]) / 2.0
    cy = (box[3] + box[2]) / 2.0
    w = box[1] - box[0]
    h = box[3] - box[2]
    x = cx * dw
    y = cy * dh
    w = w * dw
    h = h * dh
    # x => bounding_box_center_x / width_of_the_image
    # y => bounding_box_center_y / height_of_the_image
    # w => bounding_box_width / width_of_the_image
    # h => bounding_box_height / height_of_the_image
    return (x, y, w, h)


# size => (width, height) of the image
# box => (centerX, centerY, w, h) of the bounding box relative to the image
def convertToAbsoluteValues(size, box):
    # w_box = round(size[0] * box[2])
    # h_box = round(size[1] * box[3])
    xIn = round(((2 * float(box[0]) - float(box[2])) * size[0] / 2))
    yIn = round(((2 * float(box[1]) - float(box[3])) * size[1] / 2))
    xEnd = xIn + round(float(box[2]) * size[0])
    yEnd = yIn + round(float(box[3]) * size[1])
    # Clip the box to the image borders
    if xIn < 0:
        xIn = 0
    if yIn < 0:
        yIn = 0
    if xEnd >= size[0]:
        xEnd = size[0] - 1
    if yEnd >= size[1]:
        yEnd = size[1] - 1
    return (xIn, yIn, xEnd, yEnd)


def add_bb_into_image(image, bb, color=(255, 0, 0), thickness=2, label=None):
    r = int(color[0])
    g = int(color[1])
    b = int(color[2])

    font = cv2.FONT_HERSHEY_SIMPLEX
    fontScale = 0.5
    fontThickness = 1

    x1, y1, x2, y2 = bb.getAbsoluteBoundingBox(BBFormat.XYX2Y2)
    x1 = int(x1)
    y1 = int(y1)
    x2 = int(x2)
    y2 = int(y2)
    # OpenCV expects colors in BGR order
    cv2.rectangle(image, (x1, y1), (x2, y2), (b, g, r), thickness)
    # Add label
    if label is not None:
        # Get size of the text box
        (tw, th) = cv2.getTextSize(label, font, fontScale, fontThickness)[0]
        # Top-left coord of the textbox
        (xin_bb, yin_bb) = (x1 + thickness, y1 - th + int(12.5 * fontScale))
        # Checking position of the text top-left (outside or inside the bb)
        if yin_bb - th <= 0:  # if outside the image
            yin_bb = y1 + th  # put it inside the bb
        r_Xin = x1 - int(thickness / 2)
        r_Yin = y1 - th - int(thickness / 2)
        # Draw filled rectangle to put the text in it
        cv2.rectangle(image,
                      (r_Xin, r_Yin - thickness),
                      (r_Xin + tw + thickness * 3, r_Yin + th + int(12.5 * fontScale)),
                      (b, g, r),
                      -1)
        cv2.putText(image, label, (xin_bb, yin_bb), font, fontScale, (0, 0, 0), fontThickness,
                    cv2.LINE_AA)
    return image
```

4.1. BoundingBox.py

```python
from utils import *


class BoundingBox:
    def __init__(self,
                 imageName,
                 classId,
                 x,
                 y,
                 w,
                 h,
                 typeCoordinates=CoordinatesType.Absolute,
                 imgSize=None,
                 bbType=BBType.GroundTruth,
                 classConfidence=None,
                 format=BBFormat.XYWH):
        """Constructor.
        Args:
            imageName: String representing the image name.
            classId: String value representing class id.
            x: Float value representing the X upper-left coordinate of the bounding box.
            y: Float value representing the Y upper-left coordinate of the bounding box.
            w: Float value representing the width of the bounding box.
            h: Float value representing the height of the bounding box.
            typeCoordinates: (optional) Enum (Relative or Absolute) represents if the bounding box
            coordinates (x,y,w,h) are absolute or relative to size of the image. Default:'Absolute'.
            imgSize: (optional) 2D vector (width, height)=>(int, int) represents the size of the
            image of the bounding box. If typeCoordinates is 'Relative', imgSize is required.
            bbType: (optional) Enum (Groundtruth or Detection) identifies if the bounding box
            represents a ground truth or a detection. If it is a detection, the classConfidence has
            to be informed.
            classConfidence: (optional) Float value representing the confidence of the detected
            class. If detectionType is Detection, classConfidence needs to be informed.
            format: (optional) Enum (BBFormat.XYWH or BBFormat.XYX2Y2) indicating the format of the
            coordinates of the bounding boxes. BBFormat.XYWH: <left> <top> <width> <height>
            BBFormat.XYX2Y2: <left> <top> <right> <bottom>.
        """
        self._imageName = imageName
        self._typeCoordinates = typeCoordinates
        if typeCoordinates == CoordinatesType.Relative and imgSize is None:
            raise IOError(
                'Parameter \'imgSize\' is required. It is necessary to inform the image size.')
        if bbType == BBType.Detected and classConfidence is None:
            raise IOError(
                'For bbType=\'Detection\', it is necessary to inform the classConfidence value.')
        # if classConfidence != None and (classConfidence < 0 or classConfidence > 1):
        #     raise IOError('classConfidence value must be a real value between 0 and 1. Value: %f' %
        #                   classConfidence)

        self._classConfidence = classConfidence
        self._bbType = bbType
        self._classId = classId
        self._format = format

        # If relative coordinates, convert to absolute values
        # For relative coords: (x,y,w,h)=(X_center/img_width , Y_center/img_height)
        if (typeCoordinates == CoordinatesType.Relative):
            (self._x, self._y, self._w, self._h) = convertToAbsoluteValues(imgSize, (x, y, w, h))
            self._width_img = imgSize[0]
            self._height_img = imgSize[1]
            if format == BBFormat.XYWH:
                # convertToAbsoluteValues returned (x1, y1, x2, y2)
                self._x2 = self._w
                self._y2 = self._h
                self._w = self._x2 - self._x
                self._h = self._y2 - self._y
            else:
                raise IOError(
                    'For relative coordinates, the format must be XYWH (x,y,width,height)')
        # For absolute coords: (x,y,w,h)=real bb coords
        else:
            self._x = x
            self._y = y
            if format == BBFormat.XYWH:
                self._w = w
                self._h = h
                self._x2 = self._x + self._w
                self._y2 = self._y + self._h
            else:  # format == BBFormat.XYX2Y2: <left> <top> <right> <bottom>.
                self._x2 = w
                self._y2 = h
                self._w = self._x2 - self._x
                self._h = self._y2 - self._y
        if imgSize is None:
            self._width_img = None
            self._height_img = None
        else:
            self._width_img = imgSize[0]
            self._height_img = imgSize[1]

    def getAbsoluteBoundingBox(self, format=BBFormat.XYWH):
        if format == BBFormat.XYWH:
            return (self._x, self._y, self._w, self._h)
        elif format == BBFormat.XYX2Y2:
            return (self._x, self._y, self._x2, self._y2)

    def getRelativeBoundingBox(self, imgSize=None):
        if imgSize is None and self._width_img is None and self._height_img is None:
            raise IOError(
                'Parameter \'imgSize\' is required. It is necessary to inform the image size.')
        # convertToRelativeValues expects the box as (X1, X2, Y1, Y2)
        if imgSize is not None:
            return convertToRelativeValues((imgSize[0], imgSize[1]),
                                           (self._x, self._x2, self._y, self._y2))
        else:
            return convertToRelativeValues((self._width_img, self._height_img),
                                           (self._x, self._x2, self._y, self._y2))

    def getImageName(self):
        return self._imageName

    def getConfidence(self):
        return self._classConfidence

    def getFormat(self):
        return self._format

    def getClassId(self):
        return self._classId

    def getImageSize(self):
        return (self._width_img, self._height_img)

    def getCoordinatesType(self):
        return self._typeCoordinates

    def getBBType(self):
        return self._bbType

    @staticmethod
    def compare(det1, det2):
        det1BB = det1.getAbsoluteBoundingBox()
        det1ImgSize = det1.getImageSize()
        det2BB = det2.getAbsoluteBoundingBox()
        det2ImgSize = det2.getImageSize()

        if det1.getClassId() == det2.getClassId() and \
           det1.getConfidence() == det2.getConfidence() and \
           det1BB[0] == det2BB[0] and \
           det1BB[1] == det2BB[1] and \
           det1BB[2] == det2BB[2] and \
           det1BB[3] == det2BB[3] and \
           det1ImgSize[0] == det2ImgSize[0] and \
           det1ImgSize[1] == det2ImgSize[1]:
            return True
        return False

    @staticmethod
    def clone(boundingBox):
        absBB = boundingBox.getAbsoluteBoundingBox(format=BBFormat.XYWH)
        # return (self._x,self._y,self._x2,self._y2)
        # absBB holds absolute values, so the clone is created with absolute coordinates
        newBoundingBox = BoundingBox(
            boundingBox.getImageName(),
            boundingBox.getClassId(),
            absBB[0],
            absBB[1],
            absBB[2],
            absBB[3],
            typeCoordinates=CoordinatesType.Absolute,
            imgSize=boundingBox.getImageSize(),
            bbType=boundingBox.getBBType(),
            classConfidence=boundingBox.getConfidence(),
            format=BBFormat.XYWH)
        return newBoundingBox
```

4.2. BoundingBoxes.py

```python
from BoundingBox import *
from utils import *


class BoundingBoxes:
    def __init__(self):
        self._boundingBoxes = []

    def addBoundingBox(self, bb):
        self._boundingBoxes.append(bb)

    def removeBoundingBox(self, _boundingBox):
        for d in self._boundingBoxes:
            if BoundingBox.compare(d, _boundingBox):
                # remove by value; we return immediately, so mutating the list is safe
                self._boundingBoxes.remove(d)
                return

    def removeAllBoundingBoxes(self):
        self._boundingBoxes = []

    def getBoundingBoxes(self):
        return self._boundingBoxes

    def getBoundingBoxByClass(self, classId):
        boundingBoxes = []
        for d in self._boundingBoxes:
            if d.getClassId() == classId:  # get only specified bounding box type
                boundingBoxes.append(d)
        return boundingBoxes

    def getClasses(self):
        classes = []
        for d in self._boundingBoxes:
            c = d.getClassId()
            if c not in classes:
                classes.append(c)
        return classes

    def getBoundingBoxesByType(self, bbType):
        # get only specified bb type
        return [d for d in self._boundingBoxes if d.getBBType() == bbType]

    def getBoundingBoxesByImageName(self, imageName):
        # get only the bounding boxes of the specified image
        return [d for d in self._boundingBoxes if d.getImageName() == imageName]

    def count(self, bbType=None):
        if bbType is None:  # Return all bounding boxes
            return len(self._boundingBoxes)
        count = 0
        for d in self._boundingBoxes:
            if d.getBBType() == bbType:  # get only specified bb type
                count += 1
        return count

    def clone(self):
        newBoundingBoxes = BoundingBoxes()
        for d in self._boundingBoxes:
            det = BoundingBox.clone(d)
            newBoundingBoxes.addBoundingBox(det)
        return newBoundingBoxes

    def drawAllBoundingBoxes(self, image, imageName):
        bbxes = self.getBoundingBoxesByImageName(imageName)
        for bb in bbxes:
            if bb.getBBType() == BBType.GroundTruth:  # if ground truth
                image = add_bb_into_image(image, bb, color=(0, 255, 0))  # green
            else:  # if detection
                image = add_bb_into_image(image, bb, color=(255, 0, 0))  # red
        return image

    # def drawAllBoundingBoxes(self, image):
    #     for gt in self.getBoundingBoxesByType(BBType.GroundTruth):
    #         image = add_bb_into_image(image, gt ,color=(0,255,0))
    #     for det in self.getBoundingBoxesByType(BBType.Detected):
    #         image = add_bb_into_image(image, det ,color=(255,0,0))
    #     return image
```

4.3. Evaluator.py

```python
import os
import sys
from collections import Counter

import matplotlib.pyplot as plt
import numpy as np

from BoundingBox import *
from BoundingBoxes import *
from utils import *


class Evaluator:
    def GetPascalVOCMetrics(self,
                            boundingboxes,
                            IOUThreshold=0.5,
                            method=MethodAveragePrecision.EveryPointInterpolation):
        """Get the metrics used by the VOC Pascal 2012 challenge.
        Args:
            boundingboxes: Object of the class BoundingBoxes representing ground truth and detected
            bounding boxes;
            IOUThreshold: IOU threshold indicating which detections will be considered TP or FP
            (default value = 0.5);
            method (default = EveryPointInterpolation): It can be calculated as the implementation
            in the official PASCAL VOC toolkit (EveryPointInterpolation), or applying the 11-point
            interpolation as described in the paper "The PASCAL Visual Object Classes (VOC)
            Challenge" (ElevenPointInterpolation);
        Returns:
            A list of dictionaries. Each dictionary contains information and metrics of each class.
            The keys of each dictionary are:
            dict['class']: class representing the current dictionary;
            dict['precision']: array with the precision values;
            dict['recall']: array with the recall values;
            dict['AP']: average precision;
            dict['interpolated precision']: interpolated precision values;
            dict['interpolated recall']: interpolated recall values;
            dict['total positives']: total number of ground truth positives;
            dict['total TP']: total number of True Positive detections;
            dict['total FP']: total number of False Positive detections;
        """
        ret = []  # list containing metrics (precision, recall, average precision) of each class
        # List with all ground truths (Ex: [imageName,class,confidence=1, (bb coordinates XYX2Y2)])
        groundTruths = []
        # List with all detections (Ex: [imageName,class,confidence,(bb coordinates XYX2Y2)])
        detections = []
        # Get all classes
        classes = []
        # Loop through all bounding boxes and separate them into GTs and detections
        for bb in boundingboxes.getBoundingBoxes():
            # [imageName, class, confidence, (bb coordinates XYX2Y2)]
            if bb.getBBType() == BBType.GroundTruth:
                groundTruths.append([
                    bb.getImageName(),
                    bb.getClassId(), 1,
                    bb.getAbsoluteBoundingBox(BBFormat.XYX2Y2)
                ])
            else:
                detections.append([
                    bb.getImageName(),
                    bb.getClassId(),
                    bb.getConfidence(),
                    bb.getAbsoluteBoundingBox(BBFormat.XYX2Y2)
                ])
            # get class
            if bb.getClassId() not in classes:
                classes.append(bb.getClassId())
        classes = sorted(classes)
        # Precision x Recall is obtained individually by each class
        # Loop through by classes
        for c in classes:
            # Get only detection of class c
            dects = [d for d in detections if d[1] == c]
            # Get only ground truths of class c
            gts = [g for g in groundTruths if g[1] == c]
            npos = len(gts)
            # sort detections by decreasing confidence
            dects = sorted(dects, key=lambda conf: conf[2], reverse=True)
            TP = np.zeros(len(dects))
            FP = np.zeros(len(dects))
            # create dictionary with amount of gts for each image
            det = Counter([cc[0] for cc in gts])
            for key, val in det.items():
                det[key] = np.zeros(val)
            # print("Evaluating class: %s (%d detections)" % (str(c), len(dects)))
            # Loop through detections
            for d in range(len(dects)):
                # print('dect %s => %s' % (dects[d][0], dects[d][3],))
                # Find ground truth image
                gt = [gt for gt in gts if gt[0] == dects[d][0]]
                iouMax = sys.float_info.min
                for j in range(len(gt)):
                    # print('Ground truth gt => %s' % (gt[j][3],))
                    iou = Evaluator.iou(dects[d][3], gt[j][3])
                    if iou > iouMax:
                        iouMax = iou
                        jmax = j
                # Assign detection as true positive/don't care/false positive
                if iouMax >= IOUThreshold:
                    if det[dects[d][0]][jmax] == 0:
                        TP[d] = 1  # count as true positive
                        det[dects[d][0]][jmax] = 1  # flag as already 'seen'
                        # print("TP")
                    else:
                        FP[d] = 1  # count as false positive (GT already matched)
                        # print("FP")
                # - A detected "cat" is overlapped with a GT "cat" with IOU >= IOUThreshold.
                else:
                    FP[d] = 1  # count as false positive
                    # print("FP")
            # compute precision, recall and average precision
            acc_FP = np.cumsum(FP)
            acc_TP = np.cumsum(TP)
            rec = acc_TP / npos
            prec = np.divide(acc_TP, (acc_FP + acc_TP))
            # Depending on the method, call the right implementation
            if method == MethodAveragePrecision.EveryPointInterpolation:
                [ap, mpre, mrec, ii] = Evaluator.CalculateAveragePrecision(rec, prec)
            else:
                [ap, mpre, mrec, _] = Evaluator.ElevenPointInterpolatedAP(rec, prec)
            # add class result in the dictionary to be returned
            r = {
                'class': c,
                'precision': prec,
                'recall': rec,
                'AP': ap,
                'interpolated precision': mpre,
                'interpolated recall': mrec,
                'total positives': npos,
                'total TP': np.sum(TP),
                'total FP': np.sum(FP)
            }
            ret.append(r)
        return ret

    def PlotPrecisionRecallCurve(self,
                                 boundingBoxes,
                                 IOUThreshold=0.5,
                                 method=MethodAveragePrecision.EveryPointInterpolation,
                                 showAP=False,
                                 showInterpolatedPrecision=False,
                                 savePath=None,
                                 showGraphic=True):
        """PlotPrecisionRecallCurve
        Plot the Precision x Recall curve for each class.
        Args:
            boundingBoxes: Object of the class BoundingBoxes representing ground truth and detected
            bounding boxes;
            IOUThreshold (optional): IOU threshold indicating which detections will be considered
            TP or FP (default value = 0.5);
            method (default = EveryPointInterpolation): It can be calculated as the implementation
            in the official PASCAL VOC toolkit (EveryPointInterpolation), or applying the 11-point
            interpolation as described in the paper "The PASCAL Visual Object Classes (VOC)
            Challenge" (ElevenPointInterpolation).
            showAP (optional): if True, the average precision value will be shown in the title of
            the graph (default = False);
            showInterpolatedPrecision (optional): if True, it will show in the plot the interpolated
            precision (default = False);
            savePath (optional): if informed, the plot will be saved as an image in this path
            (ex: /home/mywork/ap.png) (default = None);
            showGraphic (optional): if True, the plot will be shown (default = True)
        Returns:
            A list of dictionaries. Each dictionary contains information and metrics of each class.
            The keys of each dictionary are:
            dict['class']: class representing the current dictionary;
            dict['precision']: array with the precision values;
            dict['recall']: array with the recall values;
            dict['AP']: average precision;
            dict['interpolated precision']: interpolated precision values;
            dict['interpolated recall']: interpolated recall values;
            dict['total positives']: total number of ground truth positives;
            dict['total TP']: total number of True Positive detections;
            dict['total FP']: total number of False Positive detections;
        """
        results = self.GetPascalVOCMetrics(boundingBoxes, IOUThreshold, method)
        result = None
        # Each result represents a class
        for result in results:
            if result is None:
                raise IOError('Error: a class could not be found.')

            classId = result['class']
            precision = result['precision']
            recall = result['recall']
            average_precision = result['AP']
            mpre = result['interpolated precision']
            mrec = result['interpolated recall']
            npos = result['total positives']
            total_tp = result['total TP']
            total_fp = result['total FP']

            plt.close()
            if showInterpolatedPrecision:
                if method == MethodAveragePrecision.EveryPointInterpolation:
                    plt.plot(mrec, mpre, '--r', label='Interpolated precision (every point)')
                elif method == MethodAveragePrecision.ElevenPointInterpolation:
                    # Uncomment the line below if you want to plot the area
                    # plt.plot(mrec, mpre, 'or', label='11-point interpolated precision')
                    # Remove duplicates, getting only the highest precision of each recall value
                    nrec = []
                    nprec = []
                    mrecArr = np.asarray(mrec, dtype=float)
                    for idx in range(len(mrec)):
                        r = mrec[idx]
                        if r not in nrec:
                            idxEq = np.flatnonzero(mrecArr == r)
                            nrec.append(r)
                            nprec.append(max([mpre[int(i)] for i in idxEq]))
                    plt.plot(nrec, nprec, 'or', label='11-point interpolated precision')
            plt.plot(recall, precision, label='Precision')
            plt.xlabel('recall')
            plt.ylabel('precision')
            if showAP:
                ap_str = "{0:.2f}%".format(average_precision * 100)
                # ap_str = "{0:.4f}%".format(average_precision * 100)
                plt.title('Precision x Recall curve \nClass: %s, AP: %s' % (str(classId), ap_str))
            else:
                plt.title('Precision x Recall curve \nClass: %s' % str(classId))
            plt.legend(shadow=True)
            plt.grid()
            ############################################################
            # Uncomment the following block to create plot with points #
            ############################################################
            # plt.plot(recall, precision, 'bo')
            # labels = ['R', 'Y', 'J', 'A', 'U', 'C', 'M', 'F', 'D', 'B', 'H', 'P', 'E', 'X', 'N', 'T',
            # 'K', 'Q', 'V', 'I', 'L', 'S', 'G', 'O']
            # dicPosition = {}
            # dicPosition['left_zero'] = (-30,0)
            # dicPosition['left_zero_slight'] = (-30,-10)
            # dicPosition['right_zero'] = (30,0)
            # dicPosition['left_up'] = (-30,20)
            # dicPosition['left_down'] = (-30,-25)
            # dicPosition['right_up'] = (20,20)
            # dicPosition['right_down'] = (20,-20)
            # dicPosition['up_zero'] = (0,30)
            # dicPosition['up_right'] = (0,30)
            # dicPosition['left_zero_long'] = (-60,-2)
            # dicPosition['down_zero'] = (-2,-30)
            # vecPositions = [
            #     dicPosition['left_down'],
            #     dicPosition['left_zero'],
            #     dicPosition['right_zero'],
            #     dicPosition['right_zero'],  #'R', 'Y', 'J', 'A',
            #     dicPosition['left_up'],
            #     dicPosition['left_up'],
            #     dicPosition['right_up'],
            #     dicPosition['left_up'],  # 'U', 'C', 'M', 'F',
            #     dicPosition['left_zero'],
            #     dicPosition['right_up'],
            #     dicPosition['right_down'],
            #     dicPosition['down_zero'],  #'D', 'B', 'H', 'P'
            #     dicPosition['left_up'],
            #     dicPosition['up_zero'],
            #     dicPosition['right_up'],
            #     dicPosition['left_up'],  # 'E', 'X', 'N', 'T',
            #     dicPosition['left_zero'],
            #     dicPosition['right_zero'],
            #     dicPosition['left_zero_long'],
            #     dicPosition['left_zero_slight'],  # 'K', 'Q', 'V', 'I',
            #     dicPosition['right_down'],
            #     dicPosition['left_down'],
            #     dicPosition['right_up'],
            #     dicPosition['down_zero']
            # ]  # 'L', 'S', 'G', 'O'
            # for idx in range(len(labels)):
            #     box = dict(boxstyle='round,pad=.5',facecolor='yellow',alpha=0.5)
            #     plt.annotate(labels[idx],
            #                  xy=(recall[idx],precision[idx]), xycoords='data',
            #                  xytext=vecPositions[idx], textcoords='offset points',
            #                  arrowprops=dict(arrowstyle="->", connectionstyle="arc3"),
            #                  bbox=box)
            if savePath is not None:
                plt.savefig(os.path.join(savePath, str(classId) + '.png'))
            if showGraphic is True:
                plt.show()
                # plt.waitforbuttonpress()
                plt.pause(0.05)
        return results

    @staticmethod
    def CalculateAveragePrecision(rec, prec):
        # Pad recall with 0 and 1, precision with 0 and 0
        mrec = [0] + [e for e in rec] + [1]
        mpre = [0] + [e for e in prec] + [0]
        # Make precision non-increasing from right to left (rho_interp)
        for i in range(len(mpre) - 1, 0, -1):
            mpre[i - 1] = max(mpre[i - 1], mpre[i])
        # Indices where recall changes
        ii = []
        for i in range(len(mrec) - 1):
            if mrec[i + 1] != mrec[i]:
                ii.append(i + 1)
        # Sum the rectangle areas (r_{n+1} - r_n) * rho_interp(r_{n+1})
        ap = 0
        for i in ii:
            ap = ap + np.sum((mrec[i] - mrec[i - 1]) * mpre[i])
        # return [ap, mpre[1:len(mpre)-1], mrec[1:len(mpre)-1], ii]
        return [ap, mpre[0:len(mpre) - 1], mrec[0:len(mpre) - 1], ii]

    @staticmethod
    # 11-point interpolated average precision
    def ElevenPointInterpolatedAP(rec, prec):
        # Use NumPy arrays so that "mrec >= r" is an elementwise comparison
        mrec = np.asarray(rec, dtype=float)
        mpre = np.asarray(prec, dtype=float)
        recallValues = np.linspace(0, 1, 11)
        recallValues = list(recallValues[::-1])
        rhoInterp = []
        recallValid = []
        # For each recallValues (0, 0.1, 0.2, ... , 1)
        for r in recallValues:
            # Obtain all recall values higher or equal than r
            argGreaterRecalls = np.argwhere(mrec >= r)
            pmax = 0
            # If there are recalls above r
            if argGreaterRecalls.size != 0:
                pmax = max(mpre[argGreaterRecalls.min():])
            recallValid.append(r)
            rhoInterp.append(pmax)
        # By definition AP = sum(max(precision whose recall is above r))/11
        ap = sum(rhoInterp) / 11
        # Generating values for the plot
        rvals = [recallValid[0]] + list(recallValid) + [0]
        pvals = [0] + list(rhoInterp) + [0]
        # rhoInterp = rhoInterp[::-1]
        cc = []
        for i in range(len(rvals)):
            p = (rvals[i], pvals[i - 1])
            if p not in cc:
                cc.append(p)
            p = (rvals[i], pvals[i])
            if p not in cc:
                cc.append(p)
        recallValues = [i[0] for i in cc]
        rhoInterp = [i[1] for i in cc]
        return [ap, rhoInterp, recallValues, None]

    # For each detections, calculate IOU with reference
    @staticmethod
    def _getAllIOUs(reference, detections):
        ret = []
        bbReference = reference.getAbsoluteBoundingBox(BBFormat.XYX2Y2)
        # img = np.zeros((200,200,3), np.uint8)
        for d in detections:
            bb = d.getAbsoluteBoundingBox(BBFormat.XYX2Y2)
            iou = Evaluator.iou(bbReference, bb)
            # Show blank image with the bounding boxes
            # img = add_bb_into_image(img, d, color=(255,0,0), thickness=2, label=None)
            # img = add_bb_into_image(img, reference, color=(0,255,0), thickness=2, label=None)
            ret.append((iou, reference, d))  # iou, reference, detection
        # cv2.imshow("comparing",img)
        # cv2.waitKey(0)
        # cv2.destroyWindow("comparing")
        return sorted(ret, key=lambda i: i[0], reverse=True)  # sort by iou (from highest to lowest)

    @staticmethod
    def iou(boxA, boxB):
        # if boxes dont intersect
        if Evaluator._boxesIntersect(boxA, boxB) is False:
            return 0
        interArea = Evaluator._getIntersectionArea(boxA, boxB)
        union = Evaluator._getUnionAreas(boxA, boxB, interArea=interArea)
        # intersection over union
        iou = interArea / union
        assert iou >= 0
        return iou

    # boxA = (Ax1,Ay1,Ax2,Ay2)
    # boxB = (Bx1,By1,Bx2,By2)
    @staticmethod
    def _boxesIntersect(boxA, boxB):
        if boxA[0] > boxB[2]:
            return False  # boxA is right of boxB
        if boxB[0] > boxA[2]:
            return False  # boxA is left of boxB
        if boxA[3] < boxB[1]:
            return False  # boxA is above boxB
        if boxA[1] > boxB[3]:
            return False  # boxA is below boxB
        return True

    @staticmethod
    def _getIntersectionArea(boxA, boxB):
        xA = max(boxA[0], boxB[0])
        yA = max(boxA[1], boxB[1])
        xB = min(boxA[2], boxB[2])
        yB = min(boxA[3], boxB[3])
        # intersection area (pixel-inclusive: +1 on each side)
        return (xB - xA + 1) * (yB - yA + 1)

    @staticmethod
    def _getUnionAreas(boxA, boxB, interArea=None):
        area_A = Evaluator._getArea(boxA)
        area_B = Evaluator._getArea(boxB)
        if interArea is None:
            interArea = Evaluator._getIntersectionArea(boxA, boxB)
        return float(area_A + area_B - interArea)

    @staticmethod
    def _getArea(box):
        return (box[2] - box[0] + 1) * (box[3] - box[1] + 1)
```

4.4. Example 1

```python
import os

import _init_paths
import cv2
from BoundingBox import BoundingBox
from BoundingBoxes import BoundingBoxes
from utils import *

###########################
# Defining bounding boxes #
###########################
# Ground truth bounding boxes of 000001.jpg
# Relative XYWH coordinates: x, y, w, h are fractions of the image size,
# and imgSize is (width, height) in pixels
gt_boundingBox_1 = BoundingBox(
    imageName='000001',
    classId='dog',
    x=0.34419263456090654,
    y=0.611,
    w=0.4164305949008499,
    h=0.262,
    typeCoordinates=CoordinatesType.Relative,
    bbType=BBType.GroundTruth,
    format=BBFormat.XYWH,
    imgSize=(353, 500))
gt_boundingBox_2 = BoundingBox(
    imageName='000001',
    classId='person',
    x=0.509915014164306,
    y=0.51,
    w=0.9745042492917847,
    h=0.972,
    typeCoordinates=CoordinatesType.Relative,
    bbType=BBType.GroundTruth,
    format=BBFormat.XYWH,
    imgSize=(353, 500))
# Ground truth bounding boxes of 000002.jpg
gt_boundingBox_3 = BoundingBox(
    imageName='000002',
    classId='train',
    x=0.5164179104477612,
    y=0.501,
    w=0.20298507462686569,
    h=0.202,
    typeCoordinates=CoordinatesType.Relative,
    bbType=BBType.GroundTruth,
    format=BBFormat.XYWH,
    imgSize=(335, 500))
# Ground truth bounding boxes of 000003.jpg
gt_boundingBox_4 = BoundingBox(
    imageName='000003',
    classId='bench',
    x=0.338,
    y=0.4666666666666667,
    w=0.184,
    h=0.10666666666666666,
    typeCoordinates=CoordinatesType.Relative,
    bbType=BBType.GroundTruth,
    format=BBFormat.XYWH,
    imgSize=(500, 375))
gt_boundingBox_5 = BoundingBox(
    imageName='000003',
    classId='bench',
    x=0.546,
    y=0.48133333333333334,
    w=0.136,
    h=0.13066666666666665,
    typeCoordinates=CoordinatesType.Relative,
    bbType=BBType.GroundTruth,
    format=BBFormat.XYWH,
    imgSize=(500, 375))
# Detected bounding boxes of 000001.jpg
# Absolute XYX2Y2 coordinates: in this format the w and h arguments carry
# the bottom-right corner (x2, y2), not a width and height
detected_boundingBox_1 = BoundingBox(
    imageName='000001',
    classId='person',
    classConfidence=0.893202,
    x=52,
    y=4,
    w=352,
    h=442,
    typeCoordinates=CoordinatesType.Absolute,
    bbType=BBType.Detected,
    format=BBFormat.XYX2Y2,
    imgSize=(353, 500))
# Detected bounding boxes of 000002.jpg
detected_boundingBox_2 = BoundingBox(
    imageName='000002',
    classId='train',
    classConfidence=0.863700,
    x=140,
    y=195,
    w=209,
    h=293,
    typeCoordinates=CoordinatesType.Absolute,
    bbType=BBType.Detected,
    format=BBFormat.XYX2Y2,
    imgSize=(335, 500))
# Detected bounding boxes of 000003.jpg
detected_boundingBox_3 = BoundingBox(
    imageName='000003',
    classId='bench',
    classConfidence=0.278000,
    x=388,
    y=288,
    w=493,
    h=331,
    typeCoordinates=CoordinatesType.Absolute,
    bbType=BBType.Detected,
    format=BBFormat.XYX2Y2,
    imgSize=(500, 375))
# Creating the object of the class BoundingBoxes
myBoundingBoxes = BoundingBoxes()
# Add all bounding boxes to the BoundingBoxes object:
myBoundingBoxes.addBoundingBox(gt_boundingBox_1)
myBoundingBoxes.addBoundingBox(gt_boundingBox_2)
myBoundingBoxes.addBoundingBox(gt_boundingBox_3)
myBoundingBoxes.addBoundingBox(gt_boundingBox_4)
myBoundingBoxes.addBoundingBox(gt_boundingBox_5)
myBoundingBoxes.addBoundingBox(detected_boundingBox_1)
myBoundingBoxes.addBoundingBox(detected_boundingBox_2)
myBoundingBoxes.addBoundingBox(detected_boundingBox_3)

###################
# Creating images #
###################
currentPath = os.path.dirname(os.path.realpath(__file__))
gtImages = ['000001', '000002', '000003']
for imageName in gtImages:
    im = cv2.imread(os.path.join(currentPath, 'images', 'groundtruths', imageName) + '.jpg')
    # Add bounding boxes
    im = myBoundingBoxes.drawAllBoundingBoxes(im, imageName)
    # cv2.imshow(imageName+'.jpg', im)
    # cv2.waitKey(0)
    cv2.imwrite(os.path.join(currentPath, 'images', imageName + '.jpg'), im)
    print('Image %s created successfully!' % imageName)
```

As shown in the figure:

[Figure: images 000001, 000002 and 000003 with their ground-truth and detected boxes drawn]
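Example 1 mixes two conventions. The ground truths are in relative XYWH and the detections are in absolute XYX2Y2, so both must end up as absolute corner coordinates before they can be compared. Below is a minimal sketch of that conversion; it is not the project's own code. It assumes relative boxes follow the YOLO convention: normalized center x, center y, width and height, which is what the -gtcoords description in 5.2 hints at. It also assumes the image size is given as (width, height). The helper names `rel_xywh_to_abs_xyxy` and `abs_xywh_to_abs_xyxy` are hypothetical.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]


def rel_xywh_to_abs_xyxy(box: Box, img_size: Tuple[int, int]) -> Box:
    """Convert a relative box to absolute corners (x1, y1, x2, y2).

    Assumption (YOLO-style): box = (cx, cy, w, h), all normalized by the
    image width/height; img_size = (width, height) in pixels.
    """
    cx, cy, w, h = box
    img_w, img_h = img_size
    x1 = (cx - w / 2) * img_w
    y1 = (cy - h / 2) * img_h
    x2 = (cx + w / 2) * img_w
    y2 = (cy + h / 2) * img_h
    return (x1, y1, x2, y2)


def abs_xywh_to_abs_xyxy(box: Box) -> Box:
    """Convert an absolute (left, top, width, height) box to corners."""
    x, y, w, h = box
    return (x, y, x + w, y + h)


if __name__ == '__main__':
    # A box centred in a 200x100 image, spanning half its width and height
    print(rel_xywh_to_abs_xyxy((0.5, 0.5, 0.5, 0.5), (200, 100)))
    # An absolute XYWH box
    print(abs_xywh_to_abs_xyxy((10, 20, 30, 40)))
```

Detections given in XYX2Y2 already carry their corners and need no conversion. The project may also round converted values to whole pixels, whereas this sketch keeps floats.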

4.5. Example 2

```python
import glob
import os

import _init_paths
from BoundingBox import BoundingBox
from BoundingBoxes import BoundingBoxes
from Evaluator import *
from utils import *


def getBoundingBoxes():
    """
    Read the boxes (GT and detections) from txt files.
    """
    # Object holding all bounding boxes (ground truths and detections)
    allBoundingBoxes = BoundingBoxes()
    # Read GT data
    currentPath = os.path.dirname(os.path.abspath(__file__))
    folderGT = os.path.join(currentPath, 'groundtruths')
    os.chdir(folderGT)
    files = glob.glob("*.txt")
    files.sort()
    # Read the GT boxes from the txt files.
    # Each line is one GT box: class_id x y width height (space-separated)
    # class_id is the object class label of the box
    # x, y are the coordinates of the top-left corner of the box
    # width, height are the size of the box (BBFormat.XYWH)
    for f in files:
        nameOfImage = f.replace(".txt", "")
        fh1 = open(f, "r")
        for line in fh1:
            line = line.replace("\n", "")
            if line.replace(' ', '') == '':
                continue
            splitLine = line.split(" ")
            idClass = splitLine[0]  # class
            x = float(splitLine[1])
            y = float(splitLine[2])
            w = float(splitLine[3])
            h = float(splitLine[4])
            bb = BoundingBox(
                nameOfImage,
                idClass,
                x,
                y,
                w,
                h,
                CoordinatesType.Absolute, (200, 200),
                BBType.GroundTruth,
                format=BBFormat.XYWH)
            allBoundingBoxes.addBoundingBox(bb)
        fh1.close()
    # Read detection data
    folderDet = os.path.join(currentPath, 'detections')
    os.chdir(folderDet)
    files = glob.glob("*.txt")
    files.sort()
    # Read the detections from the txt files.
    # Each line is one detection: class_id confidence x y width height (space-separated)
    # class_id is the class label of the detected box
    # confidence is the detector's confidence that the box belongs to class_id
    # x, y are the coordinates of the top-left corner of the box
    # width, height are the size of the box (BBFormat.XYWH)
    for f in files:
        # nameOfImage = f.replace("_det.txt","")
        nameOfImage = f.replace(".txt", "")
        # Read the detections of this image
        fh1 = open(f, "r")
        for line in fh1:
            line = line.replace("\n", "")
            if line.replace(' ', '') == '':
                continue
            splitLine = line.split(" ")
            idClass = splitLine[0]  # class
            confidence = float(splitLine[1])  # confidence
            x = float(splitLine[2])
            y = float(splitLine[3])
            w = float(splitLine[4])
            h = float(splitLine[5])
            bb = BoundingBox(
                nameOfImage,
                idClass,
                x,
                y,
                w,
                h,
                CoordinatesType.Absolute, (200, 200),
                BBType.Detected,
                confidence,
                format=BBFormat.XYWH)
            allBoundingBoxes.addBoundingBox(bb)
        fh1.close()
    return allBoundingBoxes


def createImages(dictGroundTruth, dictDetected):
    """
    Create representative images with bounding boxes.
    """
    import numpy as np
    import cv2
    # Define image size
    width = 200
    height = 200
    # Loop through the dictionary with ground truth detections
    for key in dictGroundTruth:
        image = np.zeros((height, width, 3), np.uint8)
        gt_boundingboxes = dictGroundTruth[key]
        image = gt_boundingboxes.drawAllBoundingBoxes(image)
        detection_boundingboxes = dictDetected[key]
        image = detection_boundingboxes.drawAllBoundingBoxes(image)
        # Show detection and its GT
        cv2.imshow(key, image)
        cv2.waitKey()


# Read the txt files containing the boxes
boundingboxes = getBoundingBoxes()
# Uncomment the line below to generate images from the boxes.
# Note: dictGroundTruth and dictDetected are not defined in this example
# and would have to be built first.
# createImages(dictGroundTruth, dictDetected)
# Create an evaluator object in order to obtain the metrics
evaluator = Evaluator()
##############################################################
# VOC PASCAL Metrics
##############################################################
# Plot Precision x Recall curve
evaluator.PlotPrecisionRecallCurve(
    boundingboxes,  # Object containing all boxes (GT and detections)
    IOUThreshold=0.3,  # IOU threshold
    method=MethodAveragePrecision.EveryPointInterpolation,  # As the official matlab code
    showAP=True,  # Show Average Precision in the title of the plot
    showInterpolatedPrecision=True)  # Plot the interpolated precision curve
# Get metrics with PASCAL VOC metrics
metricsPerClass = evaluator.GetPascalVOCMetrics(
    boundingboxes,  # Object containing all boxes (GT and detections)
    IOUThreshold=0.3,  # IOU threshold
    method=MethodAveragePrecision.EveryPointInterpolation)  # As the official matlab code
print("Average precision values per class:\n")
# Loop through classes to obtain their metrics
for mc in metricsPerClass:
    # Get metric values per each class
    c = mc['class']
    precision = mc['precision']
    recall = mc['recall']
    average_precision = mc['AP']
    ipre = mc['interpolated precision']
    irec = mc['interpolated recall']
    # Print AP per class
    print('%s: %f' % (c, average_precision))
```

4.6. Creating GT files

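[1] - Create one GT file per image and place it in the groundtruths/ folder;

[2] - Each line of a GT file describes one box in the format <class_name> <left> <top> <right> <bottom>. Because pascalvoc.py reads GT coordinates as xywh by default, files in this layout must be evaluated with -gtformat xyrb;

[3] - For example, the GT boxes of image 2008_000034.jpg are stored in groundtruths/2008_000034.txt:

```
bottle 6 234 45 362
person 1 156 103 336
person 36 111 198 416
person 91 42 338 500
```

4.7. Creating detection files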

[1] - Create one detection file per image and place it in the detections/ folder;

[2] - Each line of a detection file describes one detected box in the format <class_name> <confidence> <left> <top> <right> <bottom>. As with the GT files, this layout requires -detformat xyrb;

[3] - For example, the detected boxes of image 2008_000034.jpg are stored in detections/2008_000034.txt:

```
bottle 0.14981 80 1 295 500
bus 0.12601 36 13 404 316
horse 0.12526 430 117 500 307
pottedplant 0.14585 212 78 292 118
tvmonitor 0.070565 388 89 500 196
```

5. Implementation
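The file layouts in 4.6 and 4.7 put one space-separated box on each line, and detection files add a confidence column. Below is a minimal sketch of a single-line parser that normalizes either coordinate format to corners (x1, y1, x2, y2). It is independent of the project's BoundingBox class. `ParsedBox` and `parse_line` are hypothetical names. The format strings mirror the xywh/xyrb values accepted by -gtformat and -detformat.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ParsedBox:
    class_name: str
    confidence: Optional[float]  # None for ground-truth lines
    x1: float
    y1: float
    x2: float
    y2: float


def parse_line(line: str, is_gt: bool, fmt: str = 'xywh') -> Optional[ParsedBox]:
    """Parse one line of a GT or detection file (hypothetical helper).

    GT line:        <class_name> <a> <b> <c> <d>
    Detection line: <class_name> <confidence> <a> <b> <c> <d>
    fmt='xywh': a, b, c, d = left, top, width, height
    fmt='xyrb': a, b, c, d = left, top, right, bottom
    Blank lines return None.
    """
    parts = line.split()
    if not parts:
        return None
    class_name = parts[0]
    if is_gt:
        confidence = None
        coords = [float(v) for v in parts[1:5]]
    else:
        confidence = float(parts[1])
        coords = [float(v) for v in parts[2:6]]
    if len(coords) != 4:
        raise ValueError('expected 4 coordinates in line: %r' % line)
    left, top, c, d = coords
    if fmt == 'xywh':
        right, bottom = left + c, top + d
    elif fmt == 'xyrb':
        right, bottom = c, d
    else:
        raise ValueError("fmt must be 'xywh' or 'xyrb'")
    return ParsedBox(class_name, confidence, left, top, right, bottom)


if __name__ == '__main__':
    # Lines from the 2008_000034 examples in 4.6 and 4.7 (xyrb layout)
    print(parse_line('bottle 6 234 45 362', is_gt=True, fmt='xyrb'))
    print(parse_line('bottle 0.14981 80 1 295 500', is_gt=False, fmt='xyrb'))
```

Passing the wrong format raises no error: a right/bottom pair is silently read as a width/height. This is why the format flags must match the files.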

5.1. Full implementation

```python
import argparse
import glob
import os
import shutil
import sys

import _init_paths
from BoundingBox import BoundingBox
from BoundingBoxes import BoundingBoxes
from Evaluator import *
from utils import BBFormat


# Validate formats
def ValidateFormats(argFormat, argName, errors):
    if argFormat == 'xywh':
        return BBFormat.XYWH
    elif argFormat == 'xyrb':
        return BBFormat.XYX2Y2
    elif argFormat is None:
        return BBFormat.XYWH  # default when nothing is passed
    else:
        errors.append(
            'argument %s: invalid value. It must be either \'xywh\' or \'xyrb\'' % argName)


# Validate mandatory args
def ValidateMandatoryArgs(arg, argName, errors):
    if arg is None:
        errors.append('argument %s: required argument' % argName)
    else:
        return True


def ValidateImageSize(arg, argName, argInformed, errors):
    errorMsg = 'argument %s: required argument if %s is relative' % (argName, argInformed)
    ret = None
    if arg is None:
        errors.append(errorMsg)
    else:
        arg = arg.replace('(', '').replace(')', '')
        args = arg.split(',')
        if len(args) != 2:
            errors.append(
                '%s. It must be in the format \'width,height\' (e.g. \'600,400\')' % errorMsg)
        else:
            if not args[0].isdigit() or not args[1].isdigit():
                errors.append(
                    '%s. It must be in the INTEGER format \'width,height\' (e.g. \'600,400\')' %
                    errorMsg)
            else:
                ret = (int(args[0]), int(args[1]))
    return ret


# Validate coordinate types
def ValidateCoordinatesTypes(arg, argName, errors):
    if arg == 'abs':
        return CoordinatesType.Absolute
    elif arg == 'rel':
        return CoordinatesType.Relative
    elif arg is None:
        return CoordinatesType.Absolute  # default when nothing is passed
    errors.append('argument %s: invalid value. It must be either \'rel\' or \'abs\'' % argName)


def ValidatePaths(arg, nameArg, errors):
    if arg is None:
        errors.append('argument %s: invalid directory' % nameArg)
    elif os.path.isdir(arg) is False and os.path.isdir(os.path.join(currentPath, arg)) is False:
        errors.append('argument %s: directory does not exist \'%s\'' % (nameArg, arg))
    # elif os.path.isdir(os.path.join(currentPath, arg)) is True:
    #     arg = os.path.join(currentPath, arg)
    else:
        arg = os.path.join(currentPath, arg)
    return arg


def getBoundingBoxes(directory,
                     isGT,
                     bbFormat,
                     coordType,
                     allBoundingBoxes=None,
                     allClasses=None,
                     imgSize=(0, 0)):
    """
    Read txt files containing bounding boxes (ground truth and detections).
    """
    if allBoundingBoxes is None:
        allBoundingBoxes = BoundingBoxes()
    if allClasses is None:
        allClasses = []
    # Read ground truths
    os.chdir(directory)
    files = glob.glob("*.txt")
    files.sort()
    # Read GT detections from txt file
    for f in files:
        nameOfImage = f.replace(".txt", "")
        fh1 = open(f, "r")
        for line in fh1:
            line = line.replace("\n", "")
            if line.replace(' ', '') == '':
                continue
            splitLine = line.split(" ")
            if isGT:
                # idClass = int(splitLine[0]) #class
                idClass = (splitLine[0])  # class
                x = float(splitLine[1])
                y = float(splitLine[2])
                w = float(splitLine[3])
                h = float(splitLine[4])
                bb = BoundingBox(
                    nameOfImage,
                    idClass,
                    x,
                    y,
                    w,
                    h,
                    coordType,
                    imgSize,
                    BBType.GroundTruth,
                    format=bbFormat)
            else:
                # idClass = int(splitLine[0]) #class
                idClass = (splitLine[0])  # class
                confidence = float(splitLine[1])
                x = float(splitLine[2])
                y = float(splitLine[3])
                w = float(splitLine[4])
                h = float(splitLine[5])
                bb = BoundingBox(
                    nameOfImage,
                    idClass,
                    x,
                    y,
                    w,
                    h,
                    coordType,
                    imgSize,
                    BBType.Detected,
                    confidence,
                    format=bbFormat)
            allBoundingBoxes.addBoundingBox(bb)
            if idClass not in allClasses:
                allClasses.append(idClass)
        fh1.close()
    return allBoundingBoxes, allClasses


# Get current path to set default folders
currentPath = os.path.dirname(os.path.abspath(__file__))

VERSION = '0.1 (beta)'

parser = argparse.ArgumentParser(
    prog='Object Detection Metrics - Pascal VOC',
    description='This project applies the most popular metrics used to evaluate object detection '
    'algorithms.\nThe current implementation runs the Pascal VOC metrics.\nFor further references, '
    'please check:\nhttps://github.com/rafaelpadilla/Object-Detection-Metrics',
    epilog="Developed by: Rafael Padilla (rafael.padilla@smt.ufrj.br)")
# formatter_class=RawTextHelpFormatter)
parser.add_argument('-v', '--version', action='version', version='%(prog)s ' + VERSION)
# Input folders (default to groundtruths/ and detections/ next to this script)
parser.add_argument(
    '-gt',
    '--gtfolder',
    dest='gtFolder',
    default=os.path.join(currentPath, 'groundtruths'),
    metavar='',
    help='folder containing your ground truth bounding boxes')
parser.add_argument(
    '-det',
    '--detfolder',
    dest='detFolder',
    default=os.path.join(currentPath, 'detections'),
    metavar='',
    help='folder containing your detected bounding boxes')
# Optional
parser.add_argument(
    '-t',
    '--threshold',
    dest='iouThreshold',
    type=float,
    default=0.5,
    metavar='',
    help='IOU threshold. Default 0.5')
parser.add_argument(
    '-gtformat',
    dest='gtFormat',
    metavar='',
    default='xywh',
    help='format of the coordinates of the ground truth bounding boxes: '
    '(\'xywh\': <left> <top> <width> <height>)'
    ' or (\'xyrb\': <left> <top> <right> <bottom>)')
parser.add_argument(
    '-detformat',
    dest='detFormat',
    metavar='',
    default='xywh',
    help='format of the coordinates of the detected bounding boxes '
    '(\'xywh\': <left> <top> <width> <height>) '
    'or (\'xyrb\': <left> <top> <right> <bottom>)')
parser.add_argument(
    '-gtcoords',
    dest='gtCoordinates',
    default='abs',
    metavar='',
    help='reference of the ground truth bounding box coordinates: absolute '
    'values (\'abs\') or relative to its image size (\'rel\')')
parser.add_argument(
    '-detcoords',
    default='abs',
    dest='detCoordinates',
    metavar='',
    help='reference of the detected bounding box coordinates: '
    'absolute values (\'abs\') or relative to its image size (\'rel\')')
parser.add_argument(
    '-imgsize',
    dest='imgSize',
    metavar='',
    help='image size. Required if -gtcoords or -detcoords are \'rel\'')
parser.add_argument(
    '-sp', '--savepath', dest='savePath', metavar='', help='folder where the plots are saved')
parser.add_argument(
    '-np',
    '--noplot',
    dest='showPlot',
    action='store_false',
    help='no plot is shown during execution')
args = parser.parse_args()

iouThreshold = args.iouThreshold

# Arguments validation
errors = []
# Validate formats
gtFormat = ValidateFormats(args.gtFormat, '-gtformat', errors)
detFormat = ValidateFormats(args.detFormat, '-detformat', errors)
# Groundtruth folder
if ValidateMandatoryArgs(args.gtFolder, '-gt/--gtfolder', errors):
    gtFolder = ValidatePaths(args.gtFolder, '-gt/--gtfolder', errors)
else:
    # errors.pop()
    gtFolder = os.path.join(currentPath, 'groundtruths')
    if os.path.isdir(gtFolder) is False:
        errors.append('folder %s not found' % gtFolder)
# Coordinates types
gtCoordType = ValidateCoordinatesTypes(args.gtCoordinates, '-gtCoordinates', errors)
detCoordType = ValidateCoordinatesTypes(args.detCoordinates, '-detCoordinates', errors)
imgSize = (0, 0)
if gtCoordType == CoordinatesType.Relative:  # Image size is required
    imgSize = ValidateImageSize(args.imgSize, '-imgsize', '-gtCoordinates', errors)
if detCoordType == CoordinatesType.Relative:  # Image size is required
    imgSize = ValidateImageSize(args.imgSize, '-imgsize', '-detCoordinates', errors)
# Detection folder
if ValidateMandatoryArgs(args.detFolder, '-det/--detfolder', errors):
    detFolder = ValidatePaths(args.detFolder, '-det/--detfolder', errors)
else:
    # errors.pop()
    detFolder = os.path.join(currentPath, 'detections')
    if os.path.isdir(detFolder) is False:
        errors.append('folder %s not found' % detFolder)
if args.savePath is not None:
    savePath = ValidatePaths(args.savePath, '-sp/--savepath', errors)
else:
    savePath = os.path.join(currentPath, 'results')
# Validate savePath
# If error, show error messages
if len(errors) != 0:
    print("""usage: Object Detection Metrics [-h] [-v] [-gt] [-det] [-t] [-gtformat]
                                [-detformat] [-save]""")
    print('Object Detection Metrics: error(s): ')
    for e in errors:
        print(e)
    sys.exit()

# Create directory to save results
shutil.rmtree(savePath, ignore_errors=True)  # Clear folder
os.makedirs(savePath)
# Show plot during execution
showPlot = args.showPlot

# print('iouThreshold= %f' % iouThreshold)
# print('savePath = %s' % savePath)
# print('gtFormat = %s' % gtFormat)
# print('detFormat = %s' % detFormat)
# print('gtFolder = %s' % gtFolder)
# print('detFolder = %s' % detFolder)
# print('gtCoordType = %s' % gtCoordType)
# print('detCoordType = %s' % detCoordType)
# print('showPlot %s' % showPlot)

# Get groundtruth boxes
allBoundingBoxes, allClasses = getBoundingBoxes(
    gtFolder, True, gtFormat, gtCoordType, imgSize=imgSize)
# Get detected boxes
allBoundingBoxes, allClasses = getBoundingBoxes(
    detFolder, False, detFormat, detCoordType, allBoundingBoxes, allClasses, imgSize=imgSize)
allClasses.sort()

evaluator = Evaluator()
acc_AP = 0
validClasses = 0

# Plot Precision x Recall curve
detections = evaluator.PlotPrecisionRecallCurve(
    allBoundingBoxes,  # Object containing all bounding boxes (ground truths and detections)
    IOUThreshold=iouThreshold,  # IOU threshold
    method=MethodAveragePrecision.EveryPointInterpolation,
    showAP=True,  # Show Average Precision in the title of the plot
    showInterpolatedPrecision=False,  # Don't plot the interpolated precision curve
    savePath=savePath,
    showGraphic=showPlot)

f = open(os.path.join(savePath, 'results.txt'), 'w')
f.write('Object Detection Metrics\n')
f.write('https://github.com/rafaelpadilla/Object-Detection-Metrics\n\n\n')
f.write('Average Precision (AP), Precision and Recall per class:')

# each element of `detections` holds the metrics of one class
for metricsPerClass in detections:
    # Get metric values per each class
    cl = metricsPerClass['class']
    ap = metricsPerClass['AP']
    precision = metricsPerClass['precision']
    recall = metricsPerClass['recall']
    totalPositives = metricsPerClass['total positives']
    total_TP = metricsPerClass['total TP']
    total_FP = metricsPerClass['total FP']

    # only classes with at least one GT box count towards mAP
    if totalPositives > 0:
        validClasses = validClasses + 1
        acc_AP = acc_AP + ap
        prec = ['%.2f' % p for p in precision]
        rec = ['%.2f' % r for r in recall]
        ap_str = "{0:.2f}%".format(ap * 100)
        # ap_str = "{0:.4f}%".format(ap * 100)
        print('AP: %s (%s)' % (ap_str, cl))
        f.write('\n\nClass: %s' % cl)
        f.write('\nAP: %s' % ap_str)
        f.write('\nPrecision: %s' % prec)
        f.write('\nRecall: %s' % rec)

mAP = acc_AP / validClasses
mAP_str = "{0:.2f}%".format(mAP * 100)
print('mAP: %s' % mAP_str)
f.write('\n\n\nmAP: %s' % mAP_str)
f.close()
```

5.2. Arguments

| Argument | Description | Example | Default |
|---|---|---|---|
| -h, --help | Show the help message | python pascalvoc.py -h | |
| -v, --version | Show the version | python pascalvoc.py -v | |
| -gt, --gtfolder | Folder containing the GT files | python pascalvoc.py -gt /path/to/groundtruths/ | /Object-Detection-Metrics/groundtruths |
| -det, --detfolder | Folder containing the detection files | python pascalvoc.py -det /path/to/detections/ | /Object-Detection-Metrics/detections/ |
| -t, --threshold | IoU threshold used to decide whether a detection is a TP or an FP | python pascalvoc.py -t 0.75 | 0.50 |
| -gtformat | Coordinate format of the GT boxes (xywh or xyrb) | python pascalvoc.py -gtformat xyrb | xywh |
| -detformat | Coordinate format of the detected boxes (xywh or xyrb) | python pascalvoc.py -detformat xyrb | xywh |
| -gtcoords | Reference of the GT box coordinates. Use rel if the coordinates are relative to the image size (as in YOLO), or abs if they are absolute values that do not depend on the image size. | python pascalvoc.py -gtcoords rel | abs |
| -detcoords | Reference of the detected box coordinates. Use rel if the coordinates are relative to the image size (as in YOLO), or abs if they are absolute values that do not depend on the image size. | python pascalvoc.py -detcoords rel | abs |
| -imgsize | Image size in the format width,height <int,int>. Required if -gtcoords or -detcoords is set to rel. | python pascalvoc.py -imgsize 600,400 | |
| -sp, --savepath | Folder where the plots and results.txt are saved. The folder is cleared at every run. | python pascalvoc.py -sp /path/to/my_results/ | Object-Detection-Metrics/results/ |
| -np, --noplot | Do not show the plots during execution. | python pascalvoc.py -np | Not set, so plots are shown |
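The -t threshold determines which detections count as TPs and which as FPs, and AP is computed from that split. The sketch below walks through one class under stated assumptions. Matching is Pascal VOC-style and greedy: detections are sorted by descending confidence, each is compared with the best-overlapping GT box of its image, and it becomes a TP only if IoU >= threshold and that GT box is still unmatched. AP then uses a standard formulation of every-point interpolation, the method the script selects through MethodAveragePrecision.EveryPointInterpolation. This is not necessarily line-for-line identical to the project's Evaluator. IoU follows the same +1 inclusive-pixel convention as the Evaluator helpers shown earlier. `iou_xyxy` and `average_precision` are hypothetical names.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), inclusive pixel coords


def iou_xyxy(a: Box, b: Box) -> float:
    """IoU using the +1 inclusive-pixel convention (assumed, as in the Evaluator)."""
    iw = min(a[2], b[2]) - max(a[0], b[0]) + 1
    ih = min(a[3], b[3]) - max(a[1], b[1]) + 1
    if iw <= 0 or ih <= 0:
        return 0.0
    inter = iw * ih
    area_a = (a[2] - a[0] + 1) * (a[3] - a[1] + 1)
    area_b = (b[2] - b[0] + 1) * (b[3] - b[1] + 1)
    return inter / float(area_a + area_b - inter)


def average_precision(detections: List[Tuple[str, float, Box]],
                      ground_truths: Dict[str, List[Box]],
                      iou_threshold: float = 0.5) -> float:
    """Every-point interpolated AP for a single class (sketch).

    detections: (image_id, confidence, box); ground_truths: image_id -> GT boxes.
    """
    n_gt = sum(len(v) for v in ground_truths.values())
    if n_gt == 0:
        return 0.0
    used = {img: [False] * len(boxes) for img, boxes in ground_truths.items()}
    tp, fp = [], []
    # Greedy matching, highest confidence first
    for img, _conf, box in sorted(detections, key=lambda d: d[1], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, gt in enumerate(ground_truths.get(img, [])):
            overlap = iou_xyxy(box, gt)
            if overlap > best_iou:
                best_iou, best_j = overlap, j
        if best_j >= 0 and best_iou >= iou_threshold and not used[img][best_j]:
            used[img][best_j] = True  # each GT box can be matched only once
            tp.append(1)
            fp.append(0)
        else:
            tp.append(0)
            fp.append(1)
    # Cumulative precision and recall
    recall, precision = [], []
    ctp = cfp = 0
    for t, f in zip(tp, fp):
        ctp += t
        cfp += f
        recall.append(ctp / n_gt)
        precision.append(ctp / (ctp + cfp))
    # Every-point interpolation: make precision non-increasing from right to left,
    # then sum the rectangles wherever recall changes
    mrec = [0.0] + recall + [1.0]
    mpre = [0.0] + precision + [0.0]
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    ap = 0.0
    for i in range(1, len(mrec)):
        if mrec[i] != mrec[i - 1]:
            ap += (mrec[i] - mrec[i - 1]) * mpre[i]
    return ap


if __name__ == '__main__':
    gts = {'img1': [(0, 0, 9, 9)], 'img2': [(0, 0, 9, 9)]}
    dets = [('img1', 0.9, (0, 0, 9, 9)),   # TP
            ('img1', 0.8, (0, 0, 9, 9)),   # FP: its GT box is already matched
            ('img2', 0.7, (0, 0, 9, 9))]   # TP
    print(average_precision(dets, gts, iou_threshold=0.5))  # about 0.833 (= 5/6)
```

In the script, mAP is then the mean of these per-class AP values over the classes with at least one GT box, which is what validClasses counts. Raising -t can only turn TPs into FPs, so AP never increases as the threshold grows.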