In Keras, calling model.summary() gives a clear overview of a model's structure, but PyTorch does not yet ship a built-in tool for visualizing network models.

pytorchviz, hosted on GitHub, does a good job of drawing the network structure of a PyTorch model.

Its purpose, in the project's own words: create visualizations of PyTorch execution graphs and traces.

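- Installation:

sudo pip install graphviz

# then install pytorchviz itself

sudo pip install git+https://github.com/szagoruyko/pytorchviz

Note that the graphviz Python package is only a wrapper: rendering a graph also requires the Graphviz system package, which provides the dot executable.

Model visualization function - make_dot()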

https://github.com/szagoruyko/pytorchviz/blob/master/torchviz/dot.py
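The function at this URL is essentially a depth-first walk over the autograd graph: starting from the output's grad_fn, it follows next_functions, records one Graphviz node per function and one edge per dependency, and keeps a seen set so that shared nodes are visited only once. A rough, dependency-free sketch of that walk is shown here. FakeNode and walk_graph are hypothetical names made up for illustration, and the node names in the toy graph are only illustrative; the one thing borrowed from autograd is that next_functions is a tuple of (node, index) pairs in which the node may be None.

```python
class FakeNode:
    """Hypothetical stand-in for an autograd function node (not a PyTorch class)."""

    def __init__(self, name, inputs=()):
        self.name = name
        # Mimic autograd: a tuple of (node, input_index) pairs; node may be None.
        self.next_functions = tuple((n, 0) for n in inputs)


def walk_graph(root):
    """Return DOT-style lines for every node and edge reachable from root."""
    seen = set()
    lines = []

    def add_nodes(node):
        if node in seen:
            return
        seen.add(node)
        lines.append('%d [label="%s"]' % (id(node), node.name))
        for child, _ in node.next_functions:
            if child is not None:
                # Edges point from an input towards the node that consumes it.
                lines.append('%d -> %d' % (id(child), id(node)))
                add_nodes(child)

    add_nodes(root)
    return lines


# Toy graph for y = tanh(W x + b), where W and b are leaves.
w = FakeNode("W")
b = FakeNode("b")
addmm = FakeNode("AddmmBackward", inputs=(b, None, w))
out = FakeNode("TanhBackward", inputs=(addmm,))

for line in walk_graph(out):
    print(line)
```

The real make_dot() does the same thing with graphviz's Digraph.node() and Digraph.edge() instead of collecting strings, and additionally colors nodes by their role.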

import torch
from torch.autograd import Variable
from graphviz import Digraph


def make_dot(var, params=None):
    """
    Produce a Graphviz representation of the PyTorch autograd graph.

    Blue nodes are the Variables that require grad;
    orange nodes are the Tensors saved for backward in torch.autograd.Function.

    Args:
        var: output Variable
        params: dict of (name, Variable) to add names to node that
            require grad (TODO: make optional)
    """
    if params is not None:
        assert all(isinstance(p, Variable) for p in params.values())
        param_map = {id(v): k for k, v in params.items()}

    node_attr = dict(style='filled', shape='box', align='left',
                     fontsize='12', ranksep='0.1', height='0.2')
    dot = Digraph(node_attr=node_attr, graph_attr=dict(size="12,12"))
    seen = set()

    def size_to_str(size):
        return '(' + (', ').join(['%d' % v for v in size]) + ')'

    output_nodes = (var.grad_fn,) if not isinstance(var, tuple) else tuple(v.grad_fn for v in var)

    def add_nodes(var):
        if var not in seen:
            if torch.is_tensor(var):
                # note: this used to show .saved_tensors in pytorch0.2, but stopped
                # working as it was moved to ATen and Variable-Tensor merged
                dot.node(str(id(var)), size_to_str(var.size()), fillcolor='orange')
            elif hasattr(var, 'variable'):
                # leaf node that wraps a parameter: label it with name and shape
                u = var.variable
                name = param_map[id(u)] if params is not None else ''
                node_name = '%s\n %s' % (name, size_to_str(u.size()))
                dot.node(str(id(var)), node_name, fillcolor='lightblue')
            elif var in output_nodes:
                dot.node(str(id(var)), str(type(var).__name__), fillcolor='darkolivegreen1')
            else:
                dot.node(str(id(var)), str(type(var).__name__))
            seen.add(var)
            if hasattr(var, 'next_functions'):
                for u in var.next_functions:
                    if u[0] is not None:
                        dot.edge(str(id(u[0])), str(id(var)))
                        add_nodes(u[0])
            if hasattr(var, 'saved_tensors'):
                for t in var.saved_tensors:
                    dot.edge(str(id(t)), str(id(var)))
                    add_nodes(t)

    # handle multiple outputs
    if isinstance(var, tuple):
        for v in var:
            add_nodes(v.grad_fn)
    else:
        add_nodes(var.grad_fn)

    # resize_graph() is a helper defined in the same module (torchviz/dot.py); it is not shown here
    resize_graph(dot)

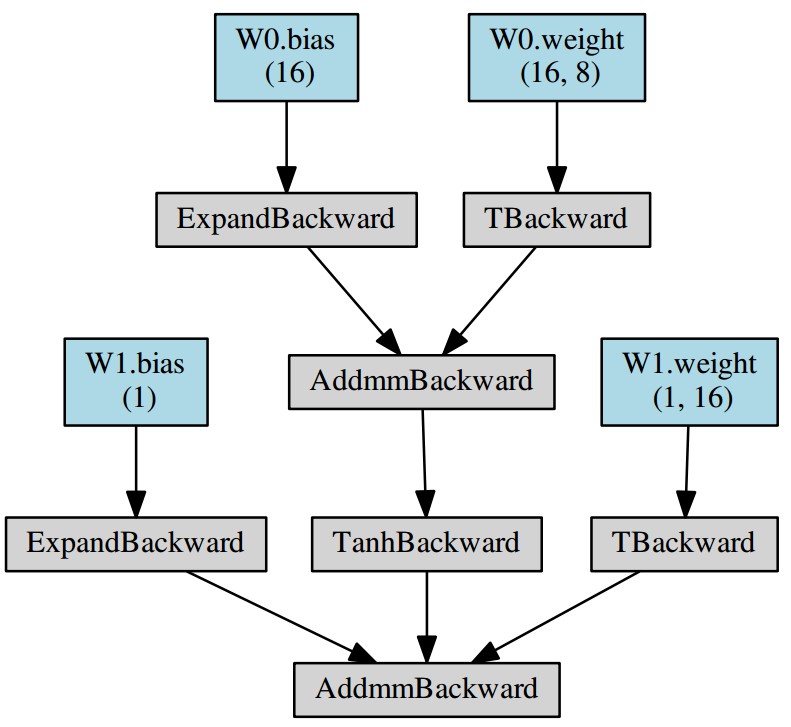

return dotDemo - MLP

https://github.com/szagoruyko/pytorchviz/blob/master/examples.ipynb

Environment: Python 2.7

import torch
from torch import nn
from torchviz import make_dot

model = nn.Sequential()
model.add_module('W0', nn.Linear(8, 16))
model.add_module('tanh', nn.Tanh())
model.add_module('W1', nn.Linear(16, 1))

x = torch.randn(1, 8)

# Build the graph of the forward pass and label parameter nodes by name
vis_graph = make_dot(model(x), params=dict(model.named_parameters()))
# Render the graph and open it in the default viewer
vis_graph.view()

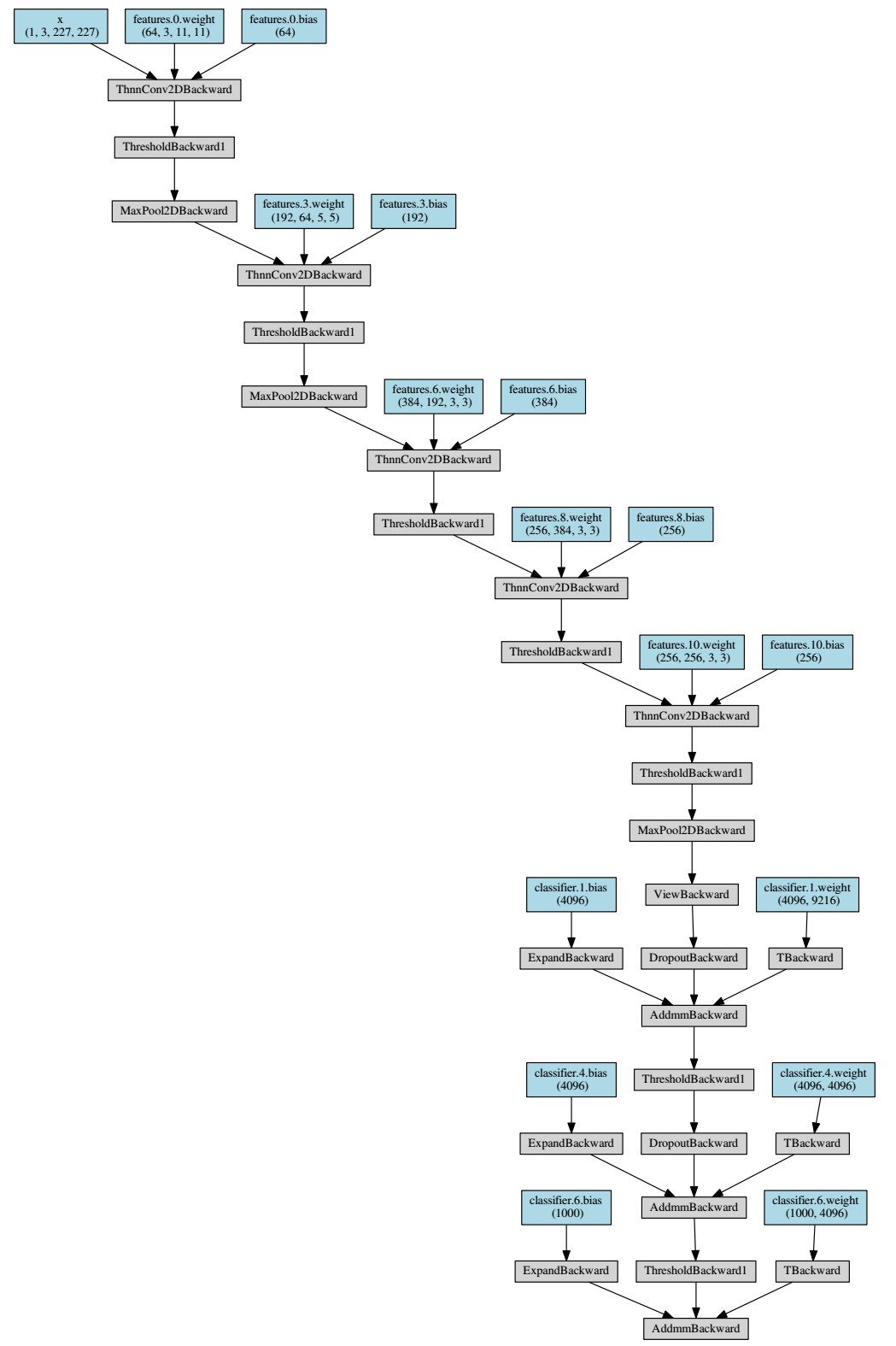
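To help read the rendered graph: each blue node carries a parameter name taken from model.named_parameters() plus the tensor's shape, formatted by size_to_str() in the make_dot() listing. The sketch below prints the labels one would expect for this MLP. The shapes are written out by hand, relying on nn.Linear(in, out) storing its weight as (out, in) and its bias as (out,); expected_shapes and node_label are illustrative names, not part of torchviz.

```python
def size_to_str(size):
    # Same formatting as in the make_dot() listing.
    return '(' + ', '.join('%d' % v for v in size) + ')'


def node_label(name, size):
    # Mirrors the '%s\n %s' label format used for parameter nodes.
    return '%s\n %s' % (name, size_to_str(size))


# Shapes written by hand for nn.Linear(8, 16) and nn.Linear(16, 1).
expected_shapes = {
    'W0.weight': (16, 8),
    'W0.bias': (16,),
    'W1.weight': (1, 16),
    'W1.bias': (1,),
}

for name, size in expected_shapes.items():
    print(repr(node_label(name, size)))
```

The tanh module has no parameters, so it shows up only as an uncolored function node between the two linear layers.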

Demo - AlexNet

import torch
from torch import nn
from torchviz import make_dot
from torchvision.models import AlexNet

model = AlexNet()

# Mark the input as requiring grad so that it appears as a named node in the graph
x = torch.randn(1, 3, 227, 227).requires_grad_(True)
y = model(x)

vis_graph = make_dot(y, params=dict(list(model.named_parameters()) + [('x', x)]))
vis_graph.view()
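The 227x227 input used in this demo can be traced through AlexNet's convolution and pooling layers with the usual output-size formula, out = floor((in + 2 * padding - kernel) / stride) + 1. The sketch below assumes the layer hyperparameters of torchvision's AlexNet as listed in the code (treat them as an assumption and check them against your installed version). It shows how the feature map shrinks to 6x6 with 256 channels, which gives the 9216 values that the first fully connected layer takes as input.

```python
def out_size(n, kernel, stride=1, padding=0):
    """Spatial output size of a convolution or max-pooling layer."""
    return (n + 2 * padding - kernel) // stride + 1


# (name, kernel, stride, padding); assumed to match torchvision's AlexNet features.
layers = [
    ("conv1", 11, 4, 2),
    ("pool1", 3, 2, 0),
    ("conv2", 5, 1, 2),
    ("pool2", 3, 2, 0),
    ("conv3", 3, 1, 1),
    ("conv4", 3, 1, 1),
    ("conv5", 3, 1, 1),
    ("pool5", 3, 2, 0),
]

size = 227
for name, kernel, stride, padding in layers:
    size = out_size(size, kernel, stride, padding)
    print("%s -> %dx%d" % (name, size, size))

flattened = 256 * size * size  # 256 channels come out of conv5
print("flattened features: %d" % flattened)
```

Recent torchvision versions also apply an adaptive average pool to 6x6 before the classifier, so other input sizes still produce 9216 features.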

Printing model parameters

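from torchvision.models import AlexNet

model = AlexNet()

params = list(model.parameters())
total = 0
for p in params:
    count = 1
    print("Layer shape: " + str(list(p.size())))
    # multiply the dimensions to get the number of elements in this tensor
    for dim in p.size():
        count *= dim
    print("Layer parameter count: " + str(count))
    # accumulate inside the loop so that every tensor is counted
    total = total + count
print("Total parameter count: " + str(total))

The output is as follows:

Layer shape: [64, 3, 11, 11]
Layer parameter count: 23232
Layer shape: [64]
Layer parameter count: 64
Layer shape: [192, 64, 5, 5]
Layer parameter count: 307200
Layer shape: [192]
Layer parameter count: 192
Layer shape: [384, 192, 3, 3]
Layer parameter count: 663552
Layer shape: [384]
Layer parameter count: 384
Layer shape: [256, 384, 3, 3]
Layer parameter count: 884736
Layer shape: [256]
Layer parameter count: 256
Layer shape: [256, 256, 3, 3]
Layer parameter count: 589824
Layer shape: [256]
Layer parameter count: 256
Layer shape: [4096, 9216]
Layer parameter count: 37748736
Layer shape: [4096]
Layer parameter count: 4096
Layer shape: [4096, 4096]
Layer parameter count: 16777216
Layer shape: [4096]
Layer parameter count: 4096
Layer shape: [1000, 4096]
Layer parameter count: 4096000
Layer shape: [1000]
Layer parameter count: 1000
Total parameter count: 61100840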
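As a quick cross-check that needs no PyTorch, the per-tensor counts and their sum can be recomputed from the shapes in the output above. This is a minimal sketch: the shapes are copied by hand from that output, the variable names are illustrative, and math.prod requires Python 3.8 or later.

```python
from math import prod

# Parameter shapes copied from the printed output above.
shapes = [
    [64, 3, 11, 11], [64],
    [192, 64, 5, 5], [192],
    [384, 192, 3, 3], [384],
    [256, 384, 3, 3], [256],
    [256, 256, 3, 3], [256],
    [4096, 9216], [4096],
    [4096, 4096], [4096],
    [1000, 4096], [1000],
]

counts = [prod(shape) for shape in shapes]
total = sum(counts)
print(total)  # 61100840
```

With PyTorch available, sum(p.numel() for p in model.parameters()) computes the same total directly from the model.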