Rethinking the Inception Architecture for Computer Vision

1. Design principles for convolutional network architectures

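- [1] - Avoid representational bottlenecks, especially in the early layers of the network.

A feed-forward network can be represented as an acyclic graph from the input layer to the classifier or regressor, which defines the direction of information flow.

A representational bottleneck occurs when an intermediate layer compresses the feature dimensions too aggressively (for example through pooling), so that the representation size drops sharply between input and output and information is lost.

In theory, information content cannot be judged from the dimensionality of the representation alone, because that ignores important factors such as correlation structure. The dimensionality only gives a rough estimate of the information content.

The network should therefore be designed to limit the information lost through pooling and similar operations.

- [2] - Higher-dimensional representations make the network easier to train.

Increasing the activations per tile in a convolutional network allows for more disentangled features, and the resulting network trains faster.

The input information is decomposed so that correlation between sub-features is low while correlation within each sub-feature is high. Aggregating strongly correlated features in this way makes the network easier to converge.

- [3] - Spatial aggregation over lower-dimensional features does not cause much loss of representational power.

For example, before a more spread-out convolution (e.g. 3x3), that is, before the spatial aggregation, a 1x1 convolution can be used to reduce the dimension of the input features.

The hypothesized reason: when the outputs are used in a spatial aggregation context, the strong correlation between adjacent units means that little information is lost during dimension reduction.

- [4] - Balance the width and depth of the network.

Optimal network performance is reached only when width and depth are balanced.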
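Principle [3] can be made concrete by counting multiply-accumulate operations. The sketch below is a rough cost model, not code from the paper: the helper `conv_macs` is hypothetical, the channel counts are borrowed from Branch_2 of Mixed_5b in the Slim definition (192 input channels, reduced to 64, then expanded to 96), and biases and batch normalization are ignored.

```python
def conv_macs(height, width, in_ch, out_ch, kh, kw):
    """Multiply-accumulates of a stride-1, SAME-padded convolution (hypothetical helper)."""
    return height * width * in_ch * out_ch * kh * kw

# Direct 3x3 convolution: 192 -> 96 channels on a 35x35 grid.
direct = conv_macs(35, 35, 192, 96, 3, 3)

# 1x1 reduction to 64 channels first, then the 3x3 convolution.
reduced = conv_macs(35, 35, 192, 64, 1, 1) + conv_macs(35, 35, 64, 96, 3, 3)

print(direct, reduced, round(reduced / direct, 3))
```

Under these assumptions the reduced variant needs well under half the operations of the direct 3x3 convolution, which is the practical motivation for the 1x1 reduction.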

2. Factorizing Convolutions with Large Filter Size

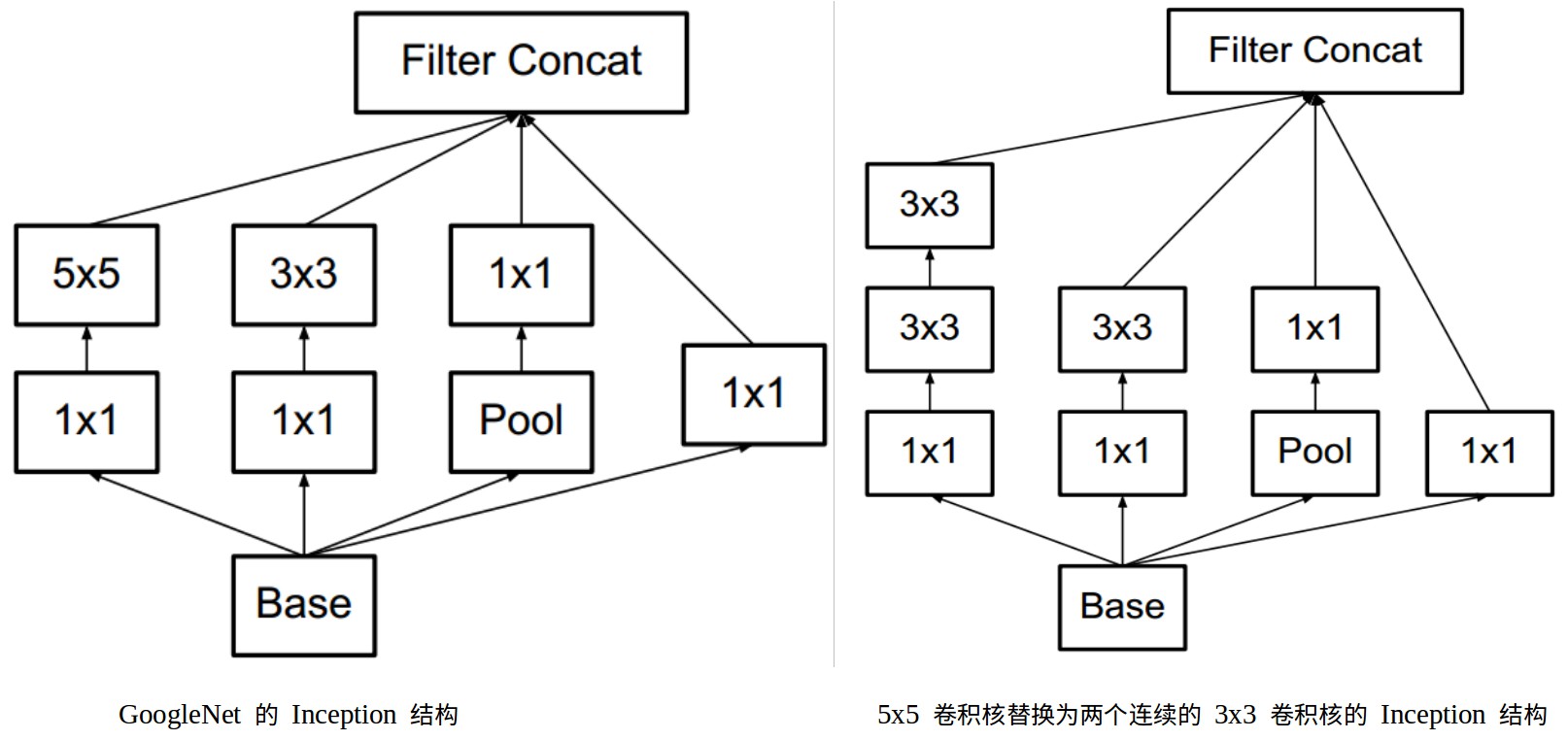

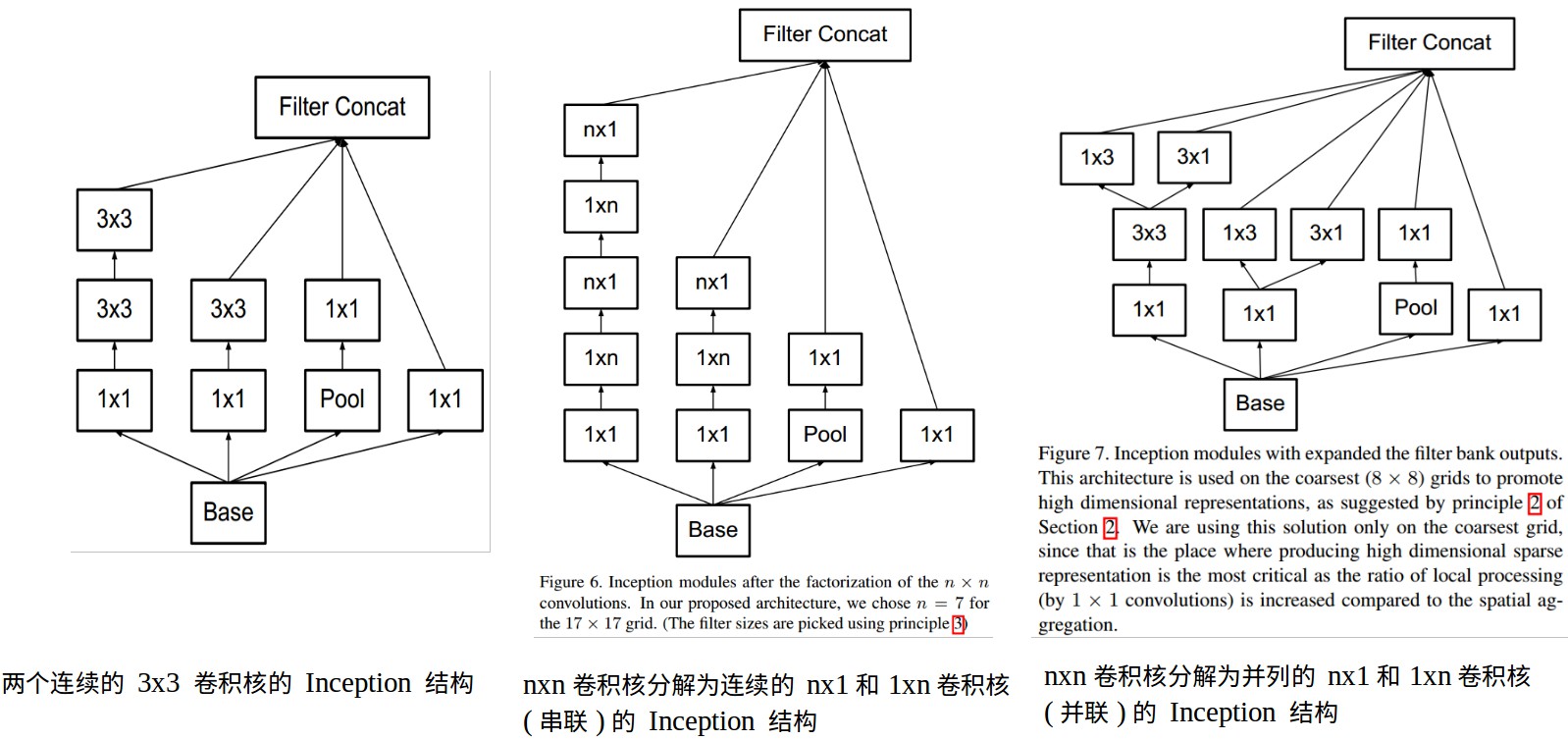

2.1 Factorization into Smaller Convolutions

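A 5x5 convolution can be replaced by two stacked 3x3 convolutions that cover the same 5x5 receptive field.

Assuming the 5x5 convolution and the two stacked 3x3 convolutions use the same number of input and output filters, the ratio of their computational costs is 5x5 / (3x3 + 3x3) = 25/18, so the factorized form needs about 28% less computation.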
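The receptive-field and cost argument can be checked with a few lines of arithmetic. This is a minimal sketch assuming stride-1 convolutions and equal channel counts in every layer; the two helper functions are illustrative names, not part of any library.

```python
def stacked_receptive_field(kernel_sizes):
    """Receptive field of stride-1 convolutions applied one after another."""
    field = 1
    for k in kernel_sizes:
        field += k - 1
    return field

def weights_per_channel_pair(kernel_shapes):
    """Weights per (input channel, output channel) pair, summed over the layers."""
    return sum(kh * kw for kh, kw in kernel_shapes)

single = weights_per_channel_pair([(5, 5)])           # 25
stacked = weights_per_channel_pair([(3, 3), (3, 3)])  # 18

print(stacked_receptive_field([3, 3]))  # 5
print(single, stacked, round(1 - stacked / single, 2))
```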

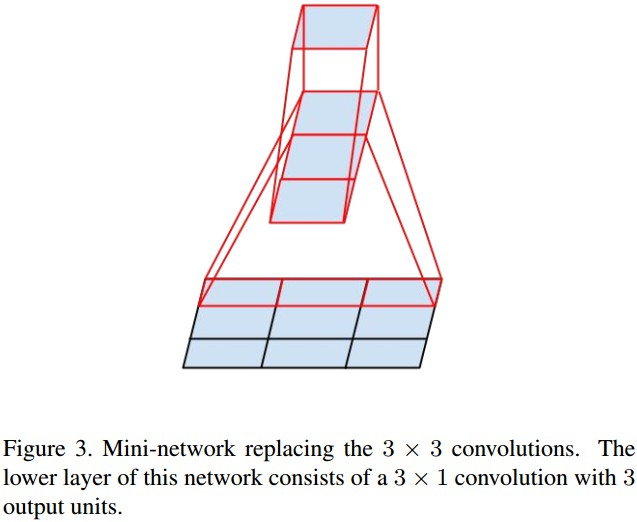

2.2 Spatial Factorization into Asymmetric Convolutions

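A 3x3 convolution can be replaced by a 3x1 convolution followed by a 1x3 convolution, which together cover the same 3x3 receptive field.

In the same way, a 7x7 convolution is factorized into two convolutions (1x7, 7x1), and a 3x3 convolution into (1x3, 3x1).

This speeds up computation, and because one convolutional layer is split into two, it also makes the network deeper and adds non-linearity.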
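The sketch below illustrates the idea on plain nested lists. It shows an exact identity only for a special case: when a 3x3 kernel is the outer product of a 3x1 and a 1x3 kernel (rank 1) and no non-linearity sits between the two steps, applying the two small kernels in sequence gives the same result as the full kernel. Learned asymmetric layers in the network are not restricted to this case. The helper `correlate2d_valid` and the test image are illustrative assumptions.

```python
def correlate2d_valid(image, kernel):
    """Naive 'VALID' 2-D cross-correlation on nested lists."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

column = [[1], [2], [1]]  # 3x1 kernel
row = [[1, 0, -1]]        # 1x3 kernel
# Their outer product is a rank-1 3x3 kernel.
full = [[c[0] * r for r in row[0]] for c in column]

# Small integer test image, so the comparison below is exact.
image = [[(3 * i + 5 * j) % 7 for j in range(6)] for i in range(6)]

two_step = correlate2d_valid(correlate2d_valid(image, column), row)
one_step = correlate2d_valid(image, full)
print(two_step == one_step)

# Weights per channel pair: n*n for the full kernel, 2*n for the factorized pair.
for n in (3, 7):
    print(n, n * n, 2 * n)
```

For n = 3 the factorized pair uses 6 weights instead of 9, and for n = 7 it uses 14 instead of 49, which is why the saving grows with the kernel size.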

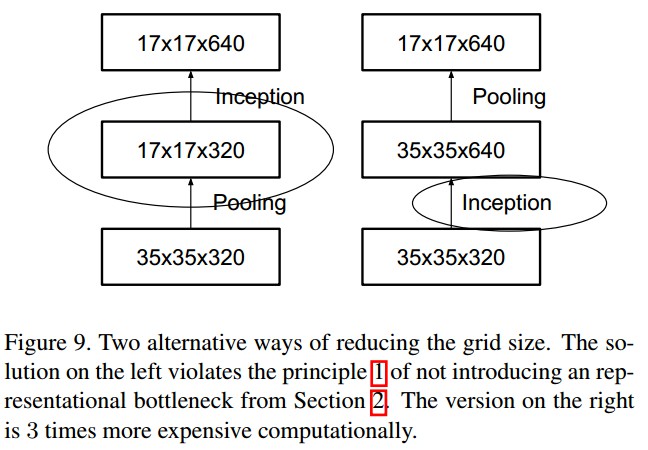

3. Efficient Grid Size Reduction

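CNNs usually apply a pooling operation to reduce the grid size of the feature maps.

To avoid a representational bottleneck, the activation dimension of the network filters is expanded before max or average pooling is applied.

For example, starting from a dxd grid with k filters, to obtain a (d/2)x(d/2) grid with 2k filters we first compute a stride-1 convolution with 2k filters and then apply pooling. The overall cost is dominated by the convolution on the larger grid, about 2 x d x d x k x k operations.

An alternative is to pool first and convolve afterwards, which costs 2 x (d/2) x (d/2) x k x k operations, a quarter of the original cost. However, this creates a representational bottleneck: the dimensionality of the representation drops to (d/2) x (d/2) x k, which makes the network less expressive (see Figure 9).

The approach used here, shown in Figure 10, removes the representational bottleneck while also reducing the computational cost. It uses two parallel stride-2 blocks, a pooling block and a convolution block, whose outputs are concatenated.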
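The cost comparison can be reproduced with a short calculation. This is a sketch under the same simplification as the text: cost is counted as grid area times input channels times output channels, ignoring the constant kernel-size factor. The values of `d` and `k` and the helper `conv_cost` are illustrative assumptions.

```python
def conv_cost(d, in_ch, out_ch):
    """Operation count d*d*in_ch*out_ch, ignoring the kernel-size constant."""
    return d * d * in_ch * out_ch

d, k = 36, 256  # illustrative grid size and filter count

# Option 1: convolve to 2k filters on the d x d grid, then pool.
conv_then_pool = conv_cost(d, k, 2 * k)        # 2 * d^2 * k^2
# Option 2: pool to (d/2) x (d/2) first, then convolve to 2k filters.
pool_then_conv = conv_cost(d // 2, k, 2 * k)   # 2 * (d/2)^2 * k^2

print(pool_then_conv / conv_then_pool)  # 0.25

# Figure 10 style reduction: a stride-2 convolution block and a stride-2
# pooling block run in parallel on the d x d input and are concatenated.
conv_branch_channels = k  # produced by the stride-2 convolution block
pool_branch_channels = k  # pooling keeps the input channel count
print(conv_branch_channels + pool_branch_channels)  # 2k channels on the (d/2) x (d/2) grid
```

The parallel design reaches 2k channels on the smaller grid without ever passing through the narrow (d/2) x (d/2) x k representation of option 2.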

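4. Inception V3 Network Architecture

The method of Figure 10 is used to reduce the grid size between the different Inception modules.

Convolutions with 0-padding are used to maintain the grid size.

Inside the Inception modules, 0-padded convolutions are likewise used to keep the grid size unchanged.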
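How padding affects the grid size can be traced with the usual TensorFlow output-size rules for `'VALID'` (no padding) and `'SAME'` (zero padding). The sketch below applies them to the layers that precede the first Inception module in the Slim definition of `inception_v3_base`; the helper `output_size` is a hypothetical name introduced for illustration.

```python
def output_size(size, kernel, stride, padding):
    """Spatial output size of a conv/pool op under TensorFlow's padding rules."""
    if padding == 'SAME':
        return -(-size // stride)  # ceil(size / stride); the kernel does not matter
    if padding == 'VALID':
        return (size - kernel) // stride + 1
    raise ValueError("padding must be 'SAME' or 'VALID'")

# (name, kernel, stride, padding) for the layers before the first Inception module.
stem = [
    ('Conv2d_1a_3x3', 3, 2, 'VALID'),
    ('Conv2d_2a_3x3', 3, 1, 'VALID'),
    ('Conv2d_2b_3x3', 3, 1, 'SAME'),
    ('MaxPool_3a_3x3', 3, 2, 'VALID'),
    ('Conv2d_3b_1x1', 1, 1, 'VALID'),
    ('Conv2d_4a_3x3', 3, 1, 'VALID'),
    ('MaxPool_5a_3x3', 3, 2, 'VALID'),
]

size = 299
sizes = []
for name, kernel, stride, padding in stem:
    size = output_size(size, kernel, stride, padding)
    sizes.append(size)
    print(name, size)
```

Starting from 299, the sequence ends at 35, the grid size of the first Inception modules. The two grid reductions Mixed_6a and Mixed_7a then use stride-2 `'VALID'` ops, giving 17 and 8.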

5. The Inception V3 definition in TensorFlow Slim

inception_v3

inception_v3 pretrained model - inception_v3_2016_08_28.tar.gz

Top-1 accuracy: 78.0

Top-5 accuracy: 93.9

"""

Inception V3 classification network definition.
"""
from __future__ import absolute_import
from __future__ import division
from __future__ import print_function

import tensorflow as tf

from nets import inception_utils

slim = tf.contrib.slim
trunc_normal = lambda stddev: tf.truncated_normal_initializer(0.0, stddev)

def inception_v3_base(inputs,
                      final_endpoint='Mixed_7c',
                      min_depth=16,
                      depth_multiplier=1.0,
                      scope=None):
  """Inception V3 base network definition.

  Builds the Inception V3 network from the given inputs up to the given
  final endpoint. It can construct the network of the table in the paper
  from the inputs up to the Inception module Mixed_7c.

  Note: the layer names do not match the names used in the paper, but the
  constructed network is the same.

  Mapping from old names to new names:

  Old name          | New name
  =======================================
  conv0             | Conv2d_1a_3x3
  conv1             | Conv2d_2a_3x3
  conv2             | Conv2d_2b_3x3
  pool1             | MaxPool_3a_3x3
  conv3             | Conv2d_3b_1x1
  conv4             | Conv2d_4a_3x3
  pool2             | MaxPool_5a_3x3
  mixed_35x35x256a  | Mixed_5b
  mixed_35x35x288a  | Mixed_5c
  mixed_35x35x288b  | Mixed_5d
  mixed_17x17x768a  | Mixed_6a
  mixed_17x17x768b  | Mixed_6b
  mixed_17x17x768c  | Mixed_6c
  mixed_17x17x768d  | Mixed_6d
  mixed_17x17x768e  | Mixed_6e
  mixed_8x8x1280a   | Mixed_7a
  mixed_8x8x2048a   | Mixed_7b
  mixed_8x8x2048b   | Mixed_7c

  Args:
    inputs: a Tensor of shape [batch_size, height, width, channels].
    final_endpoint: the endpoint at which network construction stops, which
      determines the depth of the network. Candidate values:
      ['Conv2d_1a_3x3', 'Conv2d_2a_3x3', 'Conv2d_2b_3x3',
      'MaxPool_3a_3x3', 'Conv2d_3b_1x1', 'Conv2d_4a_3x3',
      'MaxPool_5a_3x3', 'Mixed_5b', 'Mixed_5c', 'Mixed_5d',
      'Mixed_6a', 'Mixed_6b', 'Mixed_6c', 'Mixed_6d', 'Mixed_6e',
      'Mixed_7a', 'Mixed_7b', 'Mixed_7c'].
    min_depth: minimum depth value (number of channels) for all convolution
      ops. Enforced when depth_multiplier < 1; not an active constraint when
      depth_multiplier >= 1.
    depth_multiplier: float multiplier for the depth (number of channels) of
      all convolution ops. Must be greater than zero. It is typically set to
      a float in (0, 1) to reduce the number of parameters or the
      computational cost of the model.
    scope: optional variable_scope.

  Returns:
    tensor_out: the output Tensor corresponding to final_endpoint.
    end_points: a set of activations for external use, for example
      summaries or losses.

  Raises:
    ValueError: if final_endpoint is not set to one of the predefined values,
      or depth_multiplier <= 0
  """

  # end_points collects activations for external use, e.g. summaries or losses.
  end_points = {}

  if depth_multiplier <= 0:
    raise ValueError('depth_multiplier is not greater than zero.')
  depth = lambda d: max(int(d * depth_multiplier), min_depth)

  with tf.variable_scope(scope, 'InceptionV3', [inputs]):
    with slim.arg_scope([slim.conv2d, slim.max_pool2d, slim.avg_pool2d],
                        stride=1, padding='VALID'):
      # 299 x 299 x 3
      end_point = 'Conv2d_1a_3x3'
      net = slim.conv2d(inputs, depth(32), [3, 3], stride=2, scope=end_point)
      end_points[end_point] = net
      if end_point == final_endpoint: return net, end_points

      # 149 x 149 x 32
      end_point = 'Conv2d_2a_3x3'
      net = slim.conv2d(net, depth(32), [3, 3], scope=end_point)
      end_points[end_point] = net
      if end_point == final_endpoint: return net, end_points
      # 147 x 147 x 32
      end_point = 'Conv2d_2b_3x3'
      net = slim.conv2d(net, depth(64), [3, 3], padding='SAME', scope=end_point)
      end_points[end_point] = net
      if end_point == final_endpoint: return net, end_points
      # 147 x 147 x 64
      end_point = 'MaxPool_3a_3x3'
      net = slim.max_pool2d(net, [3, 3], stride=2, scope=end_point)
      end_points[end_point] = net
      if end_point == final_endpoint: return net, end_points
      # 73 x 73 x 64
      end_point = 'Conv2d_3b_1x1'
      net = slim.conv2d(net, depth(80), [1, 1], scope=end_point)
      end_points[end_point] = net
      if end_point == final_endpoint: return net, end_points
      # 73 x 73 x 80.
      end_point = 'Conv2d_4a_3x3'
      net = slim.conv2d(net, depth(192), [3, 3], scope=end_point)
      end_points[end_point] = net
      if end_point == final_endpoint: return net, end_points
      # 71 x 71 x 192.
      end_point = 'MaxPool_5a_3x3'
      net = slim.max_pool2d(net, [3, 3], stride=2, scope=end_point)
      end_points[end_point] = net
      if end_point == final_endpoint: return net, end_points
      # 35 x 35 x 192.

    # Inception blocks
    with slim.arg_scope([slim.conv2d, slim.max_pool2d, slim.avg_pool2d],
                        stride=1, padding='SAME'):
      # mixed: 35 x 35 x 256.
      end_point = 'Mixed_5b'
      with tf.variable_scope(end_point):
        with tf.variable_scope('Branch_0'):
          branch_0 = slim.conv2d(net, depth(64), [1, 1], scope='Conv2d_0a_1x1')
        with tf.variable_scope('Branch_1'):
          branch_1 = slim.conv2d(net, depth(48), [1, 1], scope='Conv2d_0a_1x1')
          branch_1 = slim.conv2d(branch_1, depth(64), [5, 5], scope='Conv2d_0b_5x5')
        with tf.variable_scope('Branch_2'):
          branch_2 = slim.conv2d(net, depth(64), [1, 1], scope='Conv2d_0a_1x1')
          branch_2 = slim.conv2d(branch_2, depth(96), [3, 3], scope='Conv2d_0b_3x3')
          branch_2 = slim.conv2d(branch_2, depth(96), [3, 3], scope='Conv2d_0c_3x3')
        with tf.variable_scope('Branch_3'):
          branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
          branch_3 = slim.conv2d(branch_3, depth(32), [1, 1], scope='Conv2d_0b_1x1')
        net = tf.concat(axis=3, values=[branch_0, branch_1, branch_2, branch_3])
      end_points[end_point] = net
      if end_point == final_endpoint: return net, end_points

      # mixed_1: 35 x 35 x 288.
      end_point = 'Mixed_5c'
      with tf.variable_scope(end_point):
        with tf.variable_scope('Branch_0'):
          branch_0 = slim.conv2d(net, depth(64), [1, 1], scope='Conv2d_0a_1x1')
        with tf.variable_scope('Branch_1'):
          branch_1 = slim.conv2d(net, depth(48), [1, 1], scope='Conv2d_0b_1x1')
          branch_1 = slim.conv2d(branch_1, depth(64), [5, 5], scope='Conv_1_0c_5x5')
        with tf.variable_scope('Branch_2'):
          branch_2 = slim.conv2d(net, depth(64), [1, 1], scope='Conv2d_0a_1x1')
          branch_2 = slim.conv2d(branch_2, depth(96), [3, 3], scope='Conv2d_0b_3x3')
          branch_2 = slim.conv2d(branch_2, depth(96), [3, 3], scope='Conv2d_0c_3x3')
        with tf.variable_scope('Branch_3'):
          branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
          branch_3 = slim.conv2d(branch_3, depth(64), [1, 1], scope='Conv2d_0b_1x1')
        net = tf.concat(axis=3, values=[branch_0, branch_1, branch_2, branch_3])
      end_points[end_point] = net
      if end_point == final_endpoint: return net, end_points

      # mixed_2: 35 x 35 x 288.
      end_point = 'Mixed_5d'
      with tf.variable_scope(end_point):
        with tf.variable_scope('Branch_0'):
          branch_0 = slim.conv2d(net, depth(64), [1, 1], scope='Conv2d_0a_1x1')
        with tf.variable_scope('Branch_1'):
          branch_1 = slim.conv2d(net, depth(48), [1, 1], scope='Conv2d_0a_1x1')
          branch_1 = slim.conv2d(branch_1, depth(64), [5, 5], scope='Conv2d_0b_5x5')
        with tf.variable_scope('Branch_2'):
          branch_2 = slim.conv2d(net, depth(64), [1, 1], scope='Conv2d_0a_1x1')
          branch_2 = slim.conv2d(branch_2, depth(96), [3, 3], scope='Conv2d_0b_3x3')
          branch_2 = slim.conv2d(branch_2, depth(96), [3, 3], scope='Conv2d_0c_3x3')
        with tf.variable_scope('Branch_3'):
          branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
          branch_3 = slim.conv2d(branch_3, depth(64), [1, 1], scope='Conv2d_0b_1x1')
        net = tf.concat(axis=3, values=[branch_0, branch_1, branch_2, branch_3])
      end_points[end_point] = net
      if end_point == final_endpoint: return net, end_points

      # mixed_3: 17 x 17 x 768.
      end_point = 'Mixed_6a'
      with tf.variable_scope(end_point):
        with tf.variable_scope('Branch_0'):
          # The scope name says 1x1 although the kernel is 3x3; it is kept unchanged.
          branch_0 = slim.conv2d(net, depth(384), [3, 3], stride=2,
                                 padding='VALID', scope='Conv2d_1a_1x1')
        with tf.variable_scope('Branch_1'):
          branch_1 = slim.conv2d(net, depth(64), [1, 1], scope='Conv2d_0a_1x1')
          branch_1 = slim.conv2d(branch_1, depth(96), [3, 3], scope='Conv2d_0b_3x3')
          branch_1 = slim.conv2d(branch_1, depth(96), [3, 3], stride=2,
                                 padding='VALID', scope='Conv2d_1a_1x1')
        with tf.variable_scope('Branch_2'):
          branch_2 = slim.max_pool2d(net, [3, 3], stride=2,
                                     padding='VALID', scope='MaxPool_1a_3x3')
        net = tf.concat(axis=3, values=[branch_0, branch_1, branch_2])
      end_points[end_point] = net
      if end_point == final_endpoint: return net, end_points

      # mixed4: 17 x 17 x 768.
      end_point = 'Mixed_6b'
      with tf.variable_scope(end_point):
        with tf.variable_scope('Branch_0'):
          branch_0 = slim.conv2d(net, depth(192), [1, 1], scope='Conv2d_0a_1x1')
        with tf.variable_scope('Branch_1'):
          branch_1 = slim.conv2d(net, depth(128), [1, 1], scope='Conv2d_0a_1x1')
          branch_1 = slim.conv2d(branch_1, depth(128), [1, 7], scope='Conv2d_0b_1x7')
          branch_1 = slim.conv2d(branch_1, depth(192), [7, 1], scope='Conv2d_0c_7x1')
        with tf.variable_scope('Branch_2'):
          branch_2 = slim.conv2d(net, depth(128), [1, 1], scope='Conv2d_0a_1x1')
          branch_2 = slim.conv2d(branch_2, depth(128), [7, 1], scope='Conv2d_0b_7x1')
          branch_2 = slim.conv2d(branch_2, depth(128), [1, 7], scope='Conv2d_0c_1x7')
          branch_2 = slim.conv2d(branch_2, depth(128), [7, 1], scope='Conv2d_0d_7x1')
          branch_2 = slim.conv2d(branch_2, depth(192), [1, 7], scope='Conv2d_0e_1x7')
        with tf.variable_scope('Branch_3'):
          branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
          branch_3 = slim.conv2d(branch_3, depth(192), [1, 1], scope='Conv2d_0b_1x1')
        net = tf.concat(axis=3, values=[branch_0, branch_1, branch_2, branch_3])
      end_points[end_point] = net
      if end_point == final_endpoint: return net, end_points

      # mixed_5: 17 x 17 x 768.
      end_point = 'Mixed_6c'
      with tf.variable_scope(end_point):
        with tf.variable_scope('Branch_0'):
          branch_0 = slim.conv2d(net, depth(192), [1, 1], scope='Conv2d_0a_1x1')
        with tf.variable_scope('Branch_1'):
          branch_1 = slim.conv2d(net, depth(160), [1, 1], scope='Conv2d_0a_1x1')
          branch_1 = slim.conv2d(branch_1, depth(160), [1, 7], scope='Conv2d_0b_1x7')
          branch_1 = slim.conv2d(branch_1, depth(192), [7, 1], scope='Conv2d_0c_7x1')
        with tf.variable_scope('Branch_2'):
          branch_2 = slim.conv2d(net, depth(160), [1, 1], scope='Conv2d_0a_1x1')
          branch_2 = slim.conv2d(branch_2, depth(160), [7, 1], scope='Conv2d_0b_7x1')
          branch_2 = slim.conv2d(branch_2, depth(160), [1, 7], scope='Conv2d_0c_1x7')
          branch_2 = slim.conv2d(branch_2, depth(160), [7, 1], scope='Conv2d_0d_7x1')
          branch_2 = slim.conv2d(branch_2, depth(192), [1, 7], scope='Conv2d_0e_1x7')
        with tf.variable_scope('Branch_3'):
          branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
          branch_3 = slim.conv2d(branch_3, depth(192), [1, 1], scope='Conv2d_0b_1x1')
        net = tf.concat(axis=3, values=[branch_0, branch_1, branch_2, branch_3])
      end_points[end_point] = net
      if end_point == final_endpoint: return net, end_points

      # mixed_6: 17 x 17 x 768.
      end_point = 'Mixed_6d'
      with tf.variable_scope(end_point):
        with tf.variable_scope('Branch_0'):
          branch_0 = slim.conv2d(net, depth(192), [1, 1], scope='Conv2d_0a_1x1')
        with tf.variable_scope('Branch_1'):
          branch_1 = slim.conv2d(net, depth(160), [1, 1], scope='Conv2d_0a_1x1')
          branch_1 = slim.conv2d(branch_1, depth(160), [1, 7], scope='Conv2d_0b_1x7')
          branch_1 = slim.conv2d(branch_1, depth(192), [7, 1], scope='Conv2d_0c_7x1')
        with tf.variable_scope('Branch_2'):
          branch_2 = slim.conv2d(net, depth(160), [1, 1], scope='Conv2d_0a_1x1')
          branch_2 = slim.conv2d(branch_2, depth(160), [7, 1], scope='Conv2d_0b_7x1')
          branch_2 = slim.conv2d(branch_2, depth(160), [1, 7], scope='Conv2d_0c_1x7')
          branch_2 = slim.conv2d(branch_2, depth(160), [7, 1], scope='Conv2d_0d_7x1')
          branch_2 = slim.conv2d(branch_2, depth(192), [1, 7], scope='Conv2d_0e_1x7')
        with tf.variable_scope('Branch_3'):
          branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
          branch_3 = slim.conv2d(branch_3, depth(192), [1, 1], scope='Conv2d_0b_1x1')
        net = tf.concat(axis=3, values=[branch_0, branch_1, branch_2, branch_3])
      end_points[end_point] = net
      if end_point == final_endpoint: return net, end_points

      # mixed_7: 17 x 17 x 768.
      end_point = 'Mixed_6e'
      with tf.variable_scope(end_point):
        with tf.variable_scope('Branch_0'):
          branch_0 = slim.conv2d(net, depth(192), [1, 1], scope='Conv2d_0a_1x1')
        with tf.variable_scope('Branch_1'):
          branch_1 = slim.conv2d(net, depth(192), [1, 1], scope='Conv2d_0a_1x1')
          branch_1 = slim.conv2d(branch_1, depth(192), [1, 7], scope='Conv2d_0b_1x7')
          branch_1 = slim.conv2d(branch_1, depth(192), [7, 1], scope='Conv2d_0c_7x1')
        with tf.variable_scope('Branch_2'):
          branch_2 = slim.conv2d(net, depth(192), [1, 1], scope='Conv2d_0a_1x1')
          branch_2 = slim.conv2d(branch_2, depth(192), [7, 1], scope='Conv2d_0b_7x1')
          branch_2 = slim.conv2d(branch_2, depth(192), [1, 7], scope='Conv2d_0c_1x7')
          branch_2 = slim.conv2d(branch_2, depth(192), [7, 1], scope='Conv2d_0d_7x1')
          branch_2 = slim.conv2d(branch_2, depth(192), [1, 7], scope='Conv2d_0e_1x7')
        with tf.variable_scope('Branch_3'):
          branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
          branch_3 = slim.conv2d(branch_3, depth(192), [1, 1], scope='Conv2d_0b_1x1')
        net = tf.concat(axis=3, values=[branch_0, branch_1, branch_2, branch_3])
      end_points[end_point] = net
      if end_point == final_endpoint: return net, end_points

      # mixed_8: 8 x 8 x 1280.
      end_point = 'Mixed_7a'
      with tf.variable_scope(end_point):
        with tf.variable_scope('Branch_0'):
          branch_0 = slim.conv2d(net, depth(192), [1, 1], scope='Conv2d_0a_1x1')
          branch_0 = slim.conv2d(branch_0, depth(320), [3, 3], stride=2,
                                 padding='VALID', scope='Conv2d_1a_3x3')
        with tf.variable_scope('Branch_1'):
          branch_1 = slim.conv2d(net, depth(192), [1, 1], scope='Conv2d_0a_1x1')
          branch_1 = slim.conv2d(branch_1, depth(192), [1, 7], scope='Conv2d_0b_1x7')
          branch_1 = slim.conv2d(branch_1, depth(192), [7, 1], scope='Conv2d_0c_7x1')
          branch_1 = slim.conv2d(branch_1, depth(192), [3, 3], stride=2,
                                 padding='VALID', scope='Conv2d_1a_3x3')
        with tf.variable_scope('Branch_2'):
          branch_2 = slim.max_pool2d(net, [3, 3], stride=2,
                                     padding='VALID', scope='MaxPool_1a_3x3')
        net = tf.concat(axis=3, values=[branch_0, branch_1, branch_2])
      end_points[end_point] = net
      if end_point == final_endpoint: return net, end_points

      # mixed_9: 8 x 8 x 2048.
      end_point = 'Mixed_7b'
      with tf.variable_scope(end_point):
        with tf.variable_scope('Branch_0'):
          branch_0 = slim.conv2d(net, depth(320), [1, 1], scope='Conv2d_0a_1x1')
        with tf.variable_scope('Branch_1'):
          branch_1 = slim.conv2d(net, depth(384), [1, 1], scope='Conv2d_0a_1x1')
          branch_1 = tf.concat(axis=3, values=[
              slim.conv2d(branch_1, depth(384), [1, 3], scope='Conv2d_0b_1x3'),
              slim.conv2d(branch_1, depth(384), [3, 1], scope='Conv2d_0b_3x1')])
        with tf.variable_scope('Branch_2'):
          branch_2 = slim.conv2d(net, depth(448), [1, 1], scope='Conv2d_0a_1x1')
          branch_2 = slim.conv2d(
              branch_2, depth(384), [3, 3], scope='Conv2d_0b_3x3')
          branch_2 = tf.concat(axis=3, values=[
              slim.conv2d(branch_2, depth(384), [1, 3], scope='Conv2d_0c_1x3'),
              slim.conv2d(branch_2, depth(384), [3, 1], scope='Conv2d_0d_3x1')])
        with tf.variable_scope('Branch_3'):
          branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
          branch_3 = slim.conv2d(
              branch_3, depth(192), [1, 1], scope='Conv2d_0b_1x1')
        net = tf.concat(axis=3, values=[branch_0, branch_1, branch_2, branch_3])
      end_points[end_point] = net
      if end_point == final_endpoint: return net, end_points

      # mixed_10: 8 x 8 x 2048.
      end_point = 'Mixed_7c'
      with tf.variable_scope(end_point):
        with tf.variable_scope('Branch_0'):
          branch_0 = slim.conv2d(net, depth(320), [1, 1], scope='Conv2d_0a_1x1')
        with tf.variable_scope('Branch_1'):
          branch_1 = slim.conv2d(net, depth(384), [1, 1], scope='Conv2d_0a_1x1')
          branch_1 = tf.concat(axis=3, values=[
              slim.conv2d(branch_1, depth(384), [1, 3], scope='Conv2d_0b_1x3'),
              slim.conv2d(branch_1, depth(384), [3, 1], scope='Conv2d_0c_3x1')])
        with tf.variable_scope('Branch_2'):
          branch_2 = slim.conv2d(net, depth(448), [1, 1], scope='Conv2d_0a_1x1')
          branch_2 = slim.conv2d(
              branch_2, depth(384), [3, 3], scope='Conv2d_0b_3x3')
          branch_2 = tf.concat(axis=3, values=[
              slim.conv2d(branch_2, depth(384), [1, 3], scope='Conv2d_0c_1x3'),
              slim.conv2d(branch_2, depth(384), [3, 1], scope='Conv2d_0d_3x1')])
        with tf.variable_scope('Branch_3'):
          branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
          branch_3 = slim.conv2d(
              branch_3, depth(192), [1, 1], scope='Conv2d_0b_1x1')
        net = tf.concat(axis=3, values=[branch_0, branch_1, branch_2, branch_3])
      end_points[end_point] = net
      if end_point == final_endpoint: return net, end_points
    raise ValueError('Unknown final endpoint %s' % final_endpoint)
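The effect of `depth_multiplier` and `min_depth` on the channel counts can be checked without TensorFlow. The sketch below reuses the `depth` expression from `inception_v3_base` and sums the branch widths of two modules as read off the definition above; the wrapper `make_depth` and the two lists are illustrative names, not part of the Slim code.

```python
def make_depth(depth_multiplier=1.0, min_depth=16):
    """Return the channel-scaling helper used in inception_v3_base."""
    if depth_multiplier <= 0:
        raise ValueError('depth_multiplier is not greater than zero.')
    return lambda d: max(int(d * depth_multiplier), min_depth)

# Widths of the convolutions whose outputs are concatenated in each module.
mixed_5b = [64, 64, 96, 32]
mixed_7b = [320, 384, 384, 384, 384, 192]

totals = {}
for multiplier in (1.0, 0.5, 0.1):
    depth = make_depth(multiplier)
    totals[multiplier] = (sum(depth(c) for c in mixed_5b),
                          sum(depth(c) for c in mixed_7b))
    print(multiplier, totals[multiplier])
```

With the default multiplier the sums reproduce the 256 and 2048 channels noted in the comments. With a small multiplier such as 0.1, the narrow branches of Mixed_5b are clamped to `min_depth`, so the module does not shrink in proportion.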

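def inception_v3(inputs,
                 num_classes=1000,
                 is_training=True,
                 dropout_keep_prob=0.8,
                 min_depth=16,
                 depth_multiplier=1.0,
                 prediction_fn=slim.softmax,
                 spatial_squeeze=True,
                 reuse=None,
                 create_aux_logits=True,
                 scope='InceptionV3',
                 global_pool=False):
  """Inception V3 classification model.

  The default input image size for training this network is 299x299.

  With the default arguments, the Inception V3 model is built as defined in
  the paper. Variants of Inception V3 can be defined by changing the
  arguments dropout_keep_prob, min_depth and depth_multiplier.

  Args:
    inputs: a Tensor of shape [batch_size, height, width, channels].
    num_classes: number of classes to predict. If 0 or None, the logits layer
      is omitted and the input features to the logits layer (before dropout)
      are returned instead.
    is_training: whether the network is in its training phase.
    dropout_keep_prob: the fraction of activations that is kept.
    min_depth: minimum depth value (number of channels) for all convolution
      ops. Enforced when depth_multiplier < 1; not an active constraint when
      depth_multiplier >= 1.
    depth_multiplier: float multiplier for the depth (number of channels) of
      all convolution ops. Must be greater than zero. It is typically set to
      a float in (0, 1) to reduce the number of parameters or the
      computational cost of the model.
    prediction_fn: function that computes predictions from the logits, e.g.
      softmax.
    spatial_squeeze: if True, the logits have shape [B, C]; if False, the
      logits have shape [B, 1, 1, C], where B is batch_size and C is the
      number of classes.
    reuse: whether the network and its variables should be reused. To be able
      to reuse them, 'scope' must be given.
    create_aux_logits: whether to create the auxiliary logits.
    scope: optional variable_scope.
    global_pool: optional boolean flag that controls the average pooling
      before the logits layer. If False (the default), pooling uses a fixed
      window that reduces default-sized inputs to 1x1, while larger inputs
      lead to larger outputs. If True, inputs of any size are pooled down
      to 1x1.

  Returns:
    net: a Tensor with the logits (pre-softmax activations) if num_classes
      is a non-zero integer, or the non-dropped-out input to the logits layer
      if num_classes is 0 or None.
    end_points: a dictionary with the activations of the network layers.

  Raises:
    ValueError: if 'depth_multiplier' is less than or equal to zero.
  """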

  if depth_multiplier <= 0:
    raise ValueError('depth_multiplier is not greater than zero.')
  depth = lambda d: max(int(d * depth_multiplier), min_depth)

  with tf.variable_scope(scope, 'InceptionV3', [inputs], reuse=reuse) as scope:
    with slim.arg_scope([slim.batch_norm, slim.dropout],
                        is_training=is_training):
      net, end_points = inception_v3_base(
          inputs, scope=scope, min_depth=min_depth,
          depth_multiplier=depth_multiplier)

      # Auxiliary Head logits
      if create_aux_logits and num_classes:
        with slim.arg_scope([slim.conv2d, slim.max_pool2d, slim.avg_pool2d],
                            stride=1, padding='SAME'):
          aux_logits = end_points['Mixed_6e']
          with tf.variable_scope('AuxLogits'):
            aux_logits = slim.avg_pool2d(
                aux_logits, [5, 5], stride=3, padding='VALID',
                scope='AvgPool_1a_5x5')
            aux_logits = slim.conv2d(aux_logits, depth(128), [1, 1],
                                     scope='Conv2d_1b_1x1')

            # Shape of feature map before the final layer.
            kernel_size = _reduced_kernel_size_for_small_input(
                aux_logits, [5, 5])
            aux_logits = slim.conv2d(
                aux_logits, depth(768), kernel_size,
                weights_initializer=trunc_normal(0.01),
                padding='VALID', scope='Conv2d_2a_{}x{}'.format(*kernel_size))
            aux_logits = slim.conv2d(
                aux_logits, num_classes, [1, 1], activation_fn=None,
                normalizer_fn=None, weights_initializer=trunc_normal(0.001),
                scope='Conv2d_2b_1x1')
            if spatial_squeeze:
              aux_logits = tf.squeeze(aux_logits, [1, 2], name='SpatialSqueeze')
            end_points['AuxLogits'] = aux_logits

      # Final pooling and prediction
      with tf.variable_scope('Logits'):
        if global_pool:
          # Global average pooling.
          net = tf.reduce_mean(net, [1, 2], keep_dims=True, name='GlobalPool')
          end_points['global_pool'] = net
        else:
          # Pooling with a fixed kernel size.
          kernel_size = _reduced_kernel_size_for_small_input(net, [8, 8])
          net = slim.avg_pool2d(net, kernel_size, padding='VALID',
                                scope='AvgPool_1a_{}x{}'.format(*kernel_size))
          end_points['AvgPool_1a'] = net
        if not num_classes:
          return net, end_points
        # 1 x 1 x 2048
        net = slim.dropout(net, keep_prob=dropout_keep_prob, scope='Dropout_1b')
        end_points['PreLogits'] = net
        # 2048
        logits = slim.conv2d(net, num_classes, [1, 1], activation_fn=None,
                             normalizer_fn=None, scope='Conv2d_1c_1x1')
        if spatial_squeeze:
          logits = tf.squeeze(logits, [1, 2], name='SpatialSqueeze')
        # 1000
      end_points['Logits'] = logits
      end_points['Predictions'] = prediction_fn(logits, scope='Predictions')
  return logits, end_points
inception_v3.default_image_size = 299

def _reduced_kernel_size_for_small_input(input_tensor, kernel_size):
  """Define kernel size which is automatically reduced for small input.

  If the shape of the input images is unknown at graph construction time,
  this function assumes that the input images are large enough.

  Args:
    input_tensor: input Tensor of shape [batch_size, height, width, channels].
    kernel_size: desired kernel size of length 2: [kernel_height, kernel_width]

  Returns:
    the kernel size, as a list [kernel_height, kernel_width].

  TODO(jrru): Make this function work with unknown shapes. Theoretically, this
  can be done with the code below. Problems are two-fold: (1) If the shape was
  known, it will be lost. (2) inception.slim.ops._two_element_tuple cannot
  handle tensors that define the kernel size.
      shape = tf.shape(input_tensor)
      return tf.stack([tf.minimum(shape[1], kernel_size[0]),
                       tf.minimum(shape[2], kernel_size[1])])
  """
  shape = input_tensor.get_shape().as_list()
  if shape[1] is None or shape[2] is None:
    kernel_size_out = kernel_size
  else:
    kernel_size_out = [min(shape[1], kernel_size[0]),
                       min(shape[2], kernel_size[1])]
  return kernel_size_out

inception_v3_arg_scope = inception_utils.inception_arg_scope
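The kernel-size clamping in `_reduced_kernel_size_for_small_input` can be tried out without TensorFlow. The sketch below is a pure-Python analogue that takes a static shape list instead of a tensor; the function name `reduced_kernel_size` is hypothetical, and unknown dimensions are represented by `None` as in the original.

```python
def reduced_kernel_size(shape, kernel_size):
    """Pure-Python analogue of _reduced_kernel_size_for_small_input.

    `shape` is a static [batch, height, width, channels] list in which
    unknown dimensions are None.
    """
    if shape[1] is None or shape[2] is None:
        return kernel_size
    return [min(shape[1], kernel_size[0]), min(shape[2], kernel_size[1])]

# Default 299x299 input: the final grid is 8x8, so the 8x8 window is kept.
print(reduced_kernel_size([None, 8, 8, 2048], [8, 8]))        # [8, 8]
# Smaller final grid (5x5): the pooling window shrinks to fit.
print(reduced_kernel_size([None, 5, 5, 2048], [8, 8]))        # [5, 5]
# Unknown spatial size: the requested window is returned unchanged.
print(reduced_kernel_size([None, None, None, 2048], [8, 8]))  # [8, 8]
```

Because the window only shrinks and never grows, inputs larger than the default produce a final feature map larger than 1x1 unless `global_pool=True` is used.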

PyTorch - InceptionV3

Keras - InceptionV3